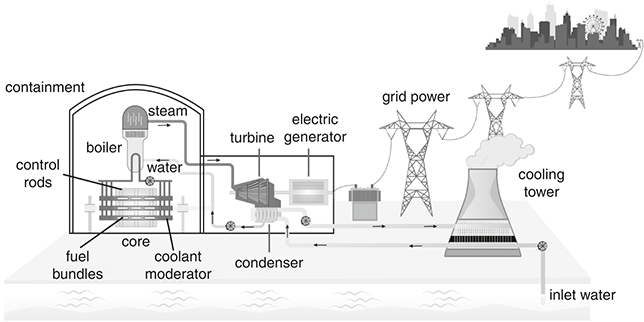

3.1 Nuclear Fission: Turning Mass into Energy

I did my first university work term at Atomic Energy of Canada Limited, calculating the radioactivity released to the atmosphere after a LOCA, a seemingly innocuous acronym for “loss of coolant accident.” I was a second-year physics student at the time, in awe of the process of splitting uranium in a collision with a neutron that in turn released more neutrons to split more uranium, resulting in an ongoing chain reaction that generated lots of energy. My work entailed inputting data into computer models to simulate possible LOCA scenarios. Although a LOCA was a highly unlikely event given the independent backup safety systems in a nuclear reactor – gravity-assisted cobalt shutoff rods, cadmium injection, moderator dump – all of which would immediately stop the reactor by keeping more neutrons from splitting more uranium, the government licensing agency needed to know, just in case.

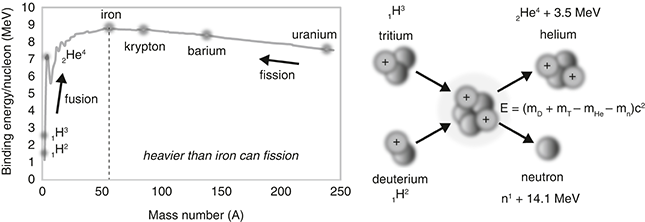

A nuclear reaction is a fairly straightforward process: a slowed-down neutron strikes a U-235 atom, which splits the uranium apart (fission) and releases energy because the resultant parts have less mass than the original atom. The energy of the mass difference is substantial as shown by Einstein’s equation, $E = mc^2$. Indeed, very little mass ($m$) can make a lot of energy ($E$), because $c^2$ is so large. For example, 1 gram is equivalent to $9 \times 10^{13}$ joules, that is, a 9 followed by 13 zeros or 90 trillion joules! By comparison, recall that a watt is 1 joule/second and thus a standard 60-watt incandescent light bulb consumes 60 joules in one second. The hard part is to keep the process going in a controlled way that turns heat into electricity via a piped-in, heat-transfer cooling system, followed by the same electrical generation system used in a conventional fossil-fuel power plant, where heat converts water to steam to run a turbine that creates electricity.
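The arithmetic behind the 1-gram example can be sketched in a few lines of Python. This is only an illustration: the rounded value for the speed of light and the 365.25-day year are assumptions supplied here, not figures from the text.

```python
# Mass-energy equivalence, E = m * c**2, applied to 1 gram of mass.
C = 2.998e8           # speed of light in m/s (rounded, assumed value)
mass_kg = 1.0e-3      # 1 gram expressed in kilograms

energy_j = mass_kg * C**2                  # energy equivalent in joules
bulb_watts = 60.0                          # a 60-W bulb uses 60 joules per second
seconds_per_year = 365.25 * 24 * 3600      # assumed length of a year
bulb_years = energy_j / bulb_watts / seconds_per_year

print(f"Energy in 1 g of mass: {energy_j:.2e} J")
print(f"Years of running a 60-W bulb: {bulb_years:,.0f}")
```

Under these assumptions, the energy equivalent of a single gram would keep a 60-watt bulb lit for tens of thousands of years.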

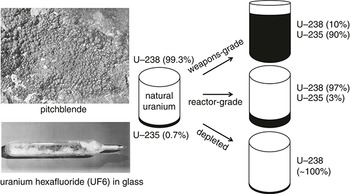

Not all uranium is “burnable.” The most common uranium isotope, U-238, absorbs neutrons instead of fissioning, so we have to use the much less plentiful isotope U-235 for our nuclear fuel, making the fission business that much harder. U-238 accounts for 99.3% of natural uranium as found in the ground, while the fissionable U-235 makes up only 0.7%, roughly one part in 140.¹ Separating U-235 atoms from U-238 atoms (a.k.a. enriching) isn’t easy either, so neutron-absorbing uranium (U-238) is always present with fissioning uranium (U-235) in the reactor fuel.

Fortunately, a few tricks are available, such as enriching U-235 to around 3–5% or slowing down the neutrons even more to use the less-plentiful U-235 as is. Note that U-238 and U-235 have the same number of protons (92), but a different number of neutrons (146 and 143), and are known as “isotopes.” The “mass number” (for example, 238 or 235) indicates the number of protons and neutrons, collectively known as nucleons.

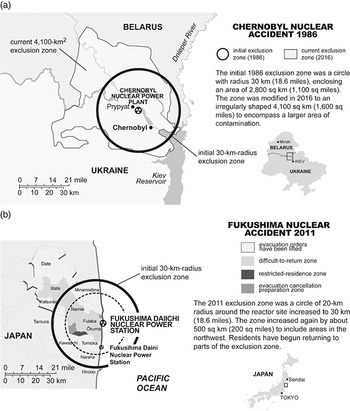

The general nuclear fission equation is $n + X \rightarrow X^* \rightarrow X_1 + X_2 + Nn + E$, where a neutron ($n$) is “captured” by a fissionable atom $X$ (for example, U-235), the $X$ atom becomes unstable $X^*$, breaks into two “daughter nuclei” fission products ($X_1$ and $X_2$), ejects a number of neutrons ($N$ = 1, 2, or 3 depending on the fission products), and releases energy ($E$). One possible reaction for U-235 is shown in Figure 3.1, producing the fission products barium (Ba) and krypton (Kr). Numerous other possible reactions produce different fission-product pairs, but this one has all the ingredients of a typical fission reaction.

Figure 3.1 Nuclear fission: (a) U-235 fission-product yield versus mass number A (source: England, T. R. and Rider, B. F., “LA-UR-94–3106, ENDF-349, Evaluation and Compilation of Fission Product Yields 1993” (table 7, Set A, Mass Chain Yields, u235t), Los Alamos National Laboratory, October 1994) and (b) fission process started by a captured neutron. One possible reaction yields the fission products krypton (A = 92) and barium (A = 141).

For an element ${}_Z\mathrm{X}^A$, the atomic number (subscript $Z$) and mass number (superscript $A$) are always conserved in a nuclear reaction. So, in this example, the atomic number (number of protons), Z = 92 = 56 + 36, and the mass number (number of nucleons), A = 1 + 235 = 236 = 141 + 92 + 3, are the same before and after the reaction. Conservation means we get out what we put in.
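The bookkeeping can be checked with a short Python sketch. The `totals` helper and the (Z, A) tuple representation are illustrative choices made here, not part of any standard library.

```python
# Each particle is a (Z, A) pair: atomic number and mass number.
NEUTRON = (0, 1)
U235 = (92, 235)
BA141 = (56, 141)
KR92 = (36, 92)

def totals(particles):
    """Return the summed atomic number and mass number of a list of (Z, A) pairs."""
    total_z = sum(z for z, _ in particles)
    total_a = sum(a for _, a in particles)
    return (total_z, total_a)

before = totals([NEUTRON, U235])                 # one neutron plus U-235
after = totals([BA141, KR92] + [NEUTRON] * 3)    # Ba-141, Kr-92, three neutrons

print("before:", before)
print("after: ", after)
print("conserved:", before == after)
```

Both sides sum to Z = 92 and A = 236, so the reaction balances.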

The energy is worked out by simple atomic accounting, adding up the difference in mass of the original atom and the resultant parts. Here, the U-235 fission reaction produces about 170 MeV, the mass–energy difference between a U-235 atom (plus one captured neutron) and the two fission products, in this case barium (Ba-141) and krypton (Kr-92) (plus three liberated neutrons). As a single atom is so small, the released energy is typically given in MeV (mega-electron-volts), where 1 MeV is equivalent to $1.6 \times 10^{-13}$ joules (0.00000000000016 J), although in a reactor there are gazillions of atoms to make the energy add up.
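As a rough sketch of this atomic accounting, the mass difference can be converted to energy in Python. The atomic masses below are approximate tabulated values supplied here for illustration (they do not appear in the text), so the result should be read as an estimate rather than a definitive figure.

```python
# Approximate atomic masses in unified atomic mass units (u).
# These are assumed reference values, not figures from the text.
M_U235 = 235.043930
M_BA141 = 140.914411
M_KR92 = 91.926156
M_NEUTRON = 1.008665

U_TO_MEV = 931.494      # energy equivalent of 1 u, in MeV
MEV_TO_J = 1.602e-13    # joules per MeV

mass_before = M_U235 + M_NEUTRON                   # U-235 plus captured neutron
mass_after = M_BA141 + M_KR92 + 3 * M_NEUTRON      # products plus three neutrons

mass_defect_u = mass_before - mass_after           # the "missing" mass
q_mev = mass_defect_u * U_TO_MEV                   # energy released, in MeV
q_joules = q_mev * MEV_TO_J                        # same energy, in joules

print(f"Mass defect: {mass_defect_u:.4f} u")
print(f"Energy released: {q_mev:.0f} MeV = {q_joules:.2e} J")
```

With these assumed masses the result lands in the neighborhood of the roughly 170 MeV quoted above, from a mass defect of less than 0.1% of the original atom.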

One might think uranium would split into two equal parts of atomic number Z = 46 (92/2) or mass number A = 118 (236/2), but in fact the split is uneven (roughly 3:2). Typically, we get one large fission product near barium (Z = 56, A = 141) and another smaller fission product near krypton (Z = 36, A = 92), although there are more than 100 different possible daughter nuclei, as shown in Figure 3.1a. Most are highly unstable (that is, radioactive) because each resultant fission product is neutron heavy and must realign itself to become more stable. For example, barium (${}_{56}\mathrm{Ba}^{141}$) turns into stable praseodymium (${}_{59}\mathrm{Pr}^{141}$) after about a month and krypton (${}_{36}\mathrm{Kr}^{92}$) turns into stable zirconium (${}_{40}\mathrm{Zr}^{92}$) in about 6 hours, both via a series of “beta” decays where a neutron turns into a proton and an electron, increasing the atomic number of the decaying atom by one. Highly energetic and potentially very dangerous, alpha particles (doubly charged helium nuclei, ${}_{2}\mathrm{He}^{4++}$) and beta particles (nuclear electrons created when a neutron turns into a proton, ${}_{-1}\beta^{0}$) are both ejected during the ongoing radioactive decay of fission products, as are highly penetrating gamma rays (high-energy photons).
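The two beta-decay chains mentioned above can be traced with a small Python sketch: each beta decay raises Z by one and leaves A unchanged. The `beta_chain` helper and the symbol table are illustrative constructions made here; the intermediate elements simply follow periodic-table order.

```python
# Element symbols for the atomic numbers that appear in the two chains.
SYMBOLS = {
    36: "Kr", 37: "Rb", 38: "Sr", 39: "Y", 40: "Zr",
    56: "Ba", 57: "La", 58: "Ce", 59: "Pr",
}

def beta_chain(z_start, mass_number, z_stable):
    """List the nuclides visited as beta decays raise Z one step at a time."""
    chain = [f"{SYMBOLS[z_start]}-{mass_number}"]
    z = z_start
    while z < z_stable:
        z += 1                                   # neutron -> proton + electron
        chain.append(f"{SYMBOLS[z]}-{mass_number}")
    return chain

barium_chain = beta_chain(56, 141, 59)
krypton_chain = beta_chain(36, 92, 40)

print(" -> ".join(barium_chain))
print(" -> ".join(krypton_chain))
```

Barium-141 needs three beta decays to reach praseodymium-141, while krypton-92 needs four to reach zirconium-92.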

Nuclear fission is a statistical process with many different possible reactions, producing different radioactive fission products, a range of energies, and from one to three neutrons. Importantly, the average number of neutrons is about 2.5, and thus capable of creating a chain reaction in the uranium, while the average energy is about 200 MeV. In each case, the stored energy in the U-235 fuel is converted to the kinetic energy of the fission-product pairs, which heats the coolant to create the steam, while delayed neutrons from the decaying fission products help keep the reaction going. Astonishingly, 1 g of U-235 fuel per day in a reactor can generate almost 1 MW of power from about $3 \times 10^{16}$ fissions per second.²
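The gram-per-day figure can be estimated with a back-of-the-envelope Python sketch. It assumes every atom in the gram fissions and that each fission releases the average 200 MeV; Avogadro's number and the rounded molar mass are standard values supplied here, not figures from the text.

```python
AVOGADRO = 6.022e23              # atoms per mole
MOLAR_MASS_U235 = 235.0          # grams per mole (rounded)
ENERGY_PER_FISSION_MEV = 200.0   # average energy per fission
MEV_TO_J = 1.602e-13             # joules per MeV
SECONDS_PER_DAY = 24 * 3600

atoms_per_gram = AVOGADRO / MOLAR_MASS_U235
# Spread the fissions of 1 g of U-235 evenly over one day.
fissions_per_second = atoms_per_gram / SECONDS_PER_DAY
power_watts = fissions_per_second * ENERGY_PER_FISSION_MEV * MEV_TO_J

print(f"Atoms in 1 g of U-235: {atoms_per_gram:.2e}")
print(f"Fissions per second:   {fissions_per_second:.2e}")
print(f"Thermal power:         {power_watts / 1e6:.2f} MW")
```

Under these assumptions the output is thermal power; the electrical output of a real plant would be lower after conversion losses.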

What’s not to like? Uranium ore (UO2) is fairly abundant in the Earth’s crust, mined primarily in Kazakhstan (~40%), Canada (~20%), and Australia (~10%), while very little fuel is needed to generate lots of energy, although the uranium must first be refined, for example, milled, enriched, and packaged as rods in a fuel assembly (or bundle) in a “light-water” reactor. Fortunately, as nuclear advocates and some environmentalists are keen to note, there are no nasty carbon-containing by-products or greenhouse gases as with fossil fuels, and thus running a nuclear plant reduces carbon emissions that would otherwise be emitted in an equivalent-rated coal-, oil-, or natural-gas-burning plant.

Indeed, in those green university days, I thought nuclear power was the answer to all our energy needs, buoyed by company literature citing the impressive performance and safety record of a CANDU (CANada Deuterium Uranium), Canada’s pressurized “heavy-water” reactor that uses natural uranium as fuel. As co-op students, we were sent to regular industry talks, the last slide in a presentation typically showing a picture of the nuclear power plant life cycle, the 40 years or so from green pasture to working 600-MW reactor back to green pasture again after being decommissioned, with accompanying family bike-riding pictures through the pretty, now-restored, green pasture. Nothing much was said about the waste material – what it was, how long it lasted, how much there was, where it would go – but none of us asked too many questions other than about the physics, little wondering if the talks were more PR than reality. No mention either about carbon-intensive and dangerous mining.

There are two main nuclear reactor types, depending on the concentration of U-235 and the material employed to slow down the neutrons (called a “moderator”). A typical light-water reactor uses “enriched” uranium and a light-water moderator (mostly regular H2O water), while a heavy-water reactor uses “natural” uranium and a heavy-water moderator (mostly “heavy” D2O water). The moderator also doubles as a coolant to take away the fission heat, the whole point of a reactor.

In the more common light-water reactor, found in the United States and most nuclear-power-producing countries, the U-235 content is increased roughly six times to between 3% and 5% to make up for the high neutron absorption of U-238 because the amount of U-235 in natural uranium is not enough to maintain a chain reaction. In a heavy-water reactor, fewer neutrons are absorbed by the U-238 atoms and instead bounce around inside the reactor, improving the likelihood of capture by U-235 atoms. The neutron “cross-section” (fissionability³) in heavy water is 30 times that of light water, making up for the lower percentage of U-235 in the fuel. In short, we enrich the U-235 content to use light water (in a more common, light-water reactor) or we thermalize more neutrons with heavy water to use the lower-percentage U-235 found in naturally occurring uranium (in the niche-market, heavy-water reactor).

Interestingly, water in a stream, lake, or household tap is not all regular H2O, as not all the hydrogen is “protium” (${}_{1}\mathrm{H}^{1}$), but includes two naturally occurring hydrogen isotopes: heavy hydrogen or deuterium (${}_{1}\mathrm{H}^{2}$ or D, ~0.015%) and tritium (${}_{1}\mathrm{H}^{3}$ or T, $< 10^{-18}$%). Still, it takes time and money to turn regular light water into heavy water, separating out the roughly 1-in-7,000 deuterium oxide molecules (D2O) from the hydrogen oxide molecules (H2O) by isotope separation.

There are pros and cons to both designs. The advantage of a heavy-water reactor is that we don’t need to enrich the fuel, and can use the uranium more or less as dug out from the ground, separated from its impure UO2 ore (uraninite, a.k.a. pitchblende⁴), saving money on expensive fuel-refining costs. The disadvantage is that we need to spend money making heavy water, which must be separated from regular light water in a costly isotope-separation process. Although heavy water is mostly used as a neutron moderator in CANDU reactors, other applications include tracer compounds to label hydrogen in organic reactions, nuclear magnetic resonance (NMR) spectroscopy, and neutrino detectors.

Conversely, we can use regular H2O water in a light-water reactor, but must pay to enrich the U-235, a difficult and expensive process involving gaseous UF6 diffusion or centrifuges, the same methods employed to enrich uranium to make a nuclear bomb. Note that weapons-grade uranium contains 90% U-235, while reactor-grade uranium contains either 3–5% U-235 for light-water reactors or 0.7% U-235 for heavy-water reactors. Depleted uranium (DU) has less than 0.3% U-235, that is, almost 100% U-238, still dangerous but no good as is for power. Depleted uranium is what’s left over after U-235 has been removed for enriching (Figure 3.2).
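To get a feel for how much natural uranium goes into each kilogram of reactor fuel, a simple mass balance can be sketched in Python. The `feed_per_product` helper is a hypothetical name, and the 4% product and 0.3% tails concentrations are illustrative picks from the ranges quoted above; the balance itself only assumes that total uranium and total U-235 are conserved between feed, product, and depleted tails.

```python
def feed_per_product(x_feed, x_product, x_tails):
    """Kilograms of feed needed per kilogram of enriched product.

    Derived from conserving total mass (F = P + W) and U-235 mass
    (F * x_feed = P * x_product + W * x_tails).
    """
    return (x_product - x_tails) / (x_feed - x_tails)

X_NATURAL = 0.007    # 0.7% U-235 in natural uranium
X_REACTOR = 0.04     # 4% U-235, within the 3-5% light-water range (assumed)
X_DEPLETED = 0.003   # 0.3% U-235 left in the depleted tails (assumed)

feed_kg = feed_per_product(X_NATURAL, X_REACTOR, X_DEPLETED)
tails_kg = feed_kg - 1.0   # everything that is not product ends up as tails

print(f"Natural uranium needed per kg of fuel: {feed_kg:.2f} kg")
print(f"Depleted uranium left over:            {tails_kg:.2f} kg")
```

With these assumed concentrations, each kilogram of reactor-grade fuel leaves behind several kilograms of depleted uranium.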

Enriching natural uranium into reactor-grade U-235 (from 0.7% to 3–5%) is done by isotope separation, based on the differences in the atomic weights of U-235/U-238 (with three more neutrons, U-238 is heavier). After milling to separate the uranium from its ore, the uranium is ground into a condensed powder known as yellowcake (mostly U3O8) – so-called because the separated uranium crystals are yellow – and turned into purified UO2 by smelting, which is then fabricated into pellets for use in heavy-water reactors (thus no enriching needed) or converted into UF6 (uranium hexafluoride or “hex”) for enriching in light-water reactors. Vaporous UF6 is better for enrichment because U-235 is more easily separated from U-238 by gaseous diffusion (hex has a low boiling point), where the UF6 molecules containing the lighter U-235 atoms move slightly faster across a barrier.⁵ Thousands of cascading units are needed because the U-235 yield at each stage is minimal.
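Why so many stages are needed can be illustrated with an idealized Python sketch based on Graham's law, in which the diffusion rate of a gas scales inversely with the square root of its molecular mass. This is a textbook simplification introduced here, not the method described in the text: real barriers perform below the ideal, and a working cascade also needs stripping stages, so the counts below should be read as lower bounds. The `ideal_stages` helper and the rounded masses are illustrative assumptions.

```python
import math

M_FLUORINE = 19.0                      # rounded atomic mass of fluorine
M_UF6_LIGHT = 235.0 + 6 * M_FLUORINE   # UF6 carrying U-235 (349)
M_UF6_HEAVY = 238.0 + 6 * M_FLUORINE   # UF6 carrying U-238 (352)

# Ideal single-stage separation factor from Graham's law.
alpha = math.sqrt(M_UF6_HEAVY / M_UF6_LIGHT)

def ideal_stages(x_start, x_end, separation_factor):
    """Minimum number of ideal stages to raise the U-235 fraction.

    Each stage multiplies the abundance ratio x / (1 - x) by the
    separation factor.
    """
    ratio_start = x_start / (1.0 - x_start)
    ratio_end = x_end / (1.0 - x_end)
    return math.log(ratio_end / ratio_start) / math.log(separation_factor)

reactor_stages = ideal_stages(0.007, 0.04, alpha)   # natural to 4% (assumed target)
weapons_stages = ideal_stages(0.007, 0.90, alpha)   # natural to 90%

print(f"Separation factor per stage: {alpha:.4f}")
print(f"Ideal stages to reach 4%:    {reactor_stages:.0f}")
print(f"Ideal stages to reach 90%:   {weapons_stages:.0f}")
```

Because each stage improves the ratio by well under 1%, even this idealized estimate runs to hundreds of stages for reactor fuel and more than a thousand for weapons-grade material.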

Centrifuges require much less electricity and are more common today than gaseous diffusion, separating out the U-235 by high-speed spinning until the desired percentage is reached (the heavier U-238 atoms move to the outside), the same process for making today’s weapons-grade uranium and hence an important indicator of the existence of an illegal weapons program. For use in a light-water reactor, the high-grade UF6 is then remade into solid UO2, before being sintered and shaped into 1-inch-long cylindrical pellets, packed into 12-foot-long, 1-inch-diameter zirconium tubes to make fuel rods (think of a Pez dispenser) and arranged in a multi-rod assembly to be lowered into a reactor for irradiation.

The fuel rods are left in the reactor core for up to 4 years as the fission heat is transferred to the circulating coolant. During burning, the U-235 fuel is replaced by neutron-absorbing, fission-product “waste” such as krypton and barium, before being removed and stored in onsite “spent fuel” pools. All fuel handling is done by machine, while the reactor is sealed in a metal pressure vessel and enclosed in a thick-walled concrete containment building. Some of the U-235 remains unburned, as much as 2% depending on the time spent in the reactor and the fueling scheme, becoming unusable along with the highly radioactive, reaction-killing, fission-product waste. The life of a CANDU fuel bundle is similar as it passes horizontally through the reactor core (the middle is the hottest), before being ejected out the other end. CANDU refueling is continuous without the need for a reactor shutdown.

Atom smashing is now big business, generating about 10% of global electrical power in 440 nuclear power plants worldwide. One hundred are in the United States, providing almost 20% of electrical power to the US national grid. At the beginning of the Atomic Age, after the physics and engineering of harnessing U-235 atoms had been worked out, some believed nuclear power would be the answer to all our energy needs and become “too cheap to meter.”

Note that “atomic” and “nuclear” physics seem interchangeable, the fundamental difference muddied by the history of nuclear power. An atom consists of a nucleus (protons and neutrons) and orbital electrons, but although “atomic” often means nuclear as in weapons and power, atomic physics technically refers to the electronic structure of an atom and the energy levels of the electrons that orbit the nucleus, while nuclear physics is what goes on inside the nucleus. Furthermore, chemical reactions involve only the orbital electrons, while nuclear reactions break apart the core of an atom and release much more energy (roughly 200 MeV per fission compared with a few eV per chemical reaction). Alas, we are stuck with the muddied distinction as defined in the 1954 US Atomic Energy Act, which states “The term ‘atomic energy’ means all forms of energy released in the course of nuclear fission or nuclear transformation.”

3.2 Nuclear Beginnings: Atoms for War

To mark a beginning to nuclear physics, one typically starts with Ernest Rutherford, a New Zealander who did pioneering work at the universities of Manchester, McGill, and Cambridge. He was the first to recognize during a series of experiments from 1908 to 1913 that an atom was made of a dense central “nucleus” (which he named from the Latin for “little nut”), when alpha particles fired at a gold-foil target bounced back toward the source rather than being deflected as assumed in an earlier theoretical model. In the first practical model of the material world, the hydrogen nucleus ($\mathrm{H}^+$), which Rutherford called a “proton,” became the building block of all elements. In the academic home of Isaac Newton, and where in 1897 J. J. Thomson discovered the first subatomic particle, the electron,⁶ Rutherford built a different kind of laboratory to explore the inner workings of matter, generating new atomic stones to fling at increasingly higher velocities. Smashing stuff had become acceptable science.

Building on Rutherford’s work at the Cavendish Lab in Cambridge, his research partner James Chadwick discovered the neutron in 1932, similar in mass to a proton but without an electric charge. Chadwick noted that atoms would have too much positive charge if only protons accounted for the atomic mass of an element (hence the atomic number is not equal to the atomic mass). Also in 1932, John Cockcroft and Ernest Walton would be the first to break apart an atom, smashing a lithium target with a stream of protons accelerated by a high voltage,⁷ the original particle accelerator and “first direct quantitative check of Einstein’s equation, $E = mc^2$.”⁸ The atomic zoo was expanding with every curious new discovery.

Elsewhere, the Joliot-Curies in Paris employed alpha particles to break apart other light elements, building on the work of Marie Curie – the other great pioneer of nuclear science who earlier isolated two highly radioactive unknown elements in uranium ore, which she named polonium and radium – before Enrico Fermi in Rome tried neutrons as his atomic bullets, differentially slowed by his marble and wooden benches (hydrogenous wood slowed more neutrons). Although they had lots of fun smashing elements with their high-velocity protons, alpha particles, and neutrons, the vast amount of energy stored in the nucleus wasn’t fully understood by any of the pioneering nuclear alchemists, either physicists or chemists.

A massive array of capacitors and rectifiers accelerated the atom-smashing particles at higher speeds, while oscilloscopes, scintillation screens, and Geiger counters measured what came out after the smashing.⁹ Nothing much was expected from the exploratory work other than a better understanding of the properties of matter and more insight into a previously unknown nuclear realm. As Rutherford noted, “Anyone who expects a source of power from the transformation of these atoms is talking moonshine.”¹⁰ Einstein himself was also doubtful at first, stating in 1934, “splitting the atom by bombardment is something akin to shooting birds in the dark in a place where there are only a few birds.”¹¹

What came next is caught up in the intricacies of World War II, ultimately pushing the United States to build the first nuclear bomb as part of the Manhattan Project, fearful that Nazi Germany was developing its own nuclear-bomb program after scientists in Berlin showed that the nuclear fission of uranium could in fact release an enormous amount of energy. Generating usable nuclear power was a by-product of the bomb.

Systematically working his way through the elements from hydrogen (Z = 1) on up, Fermi had created the first “transuranium” elements (Z > 92) in 1934 by bombarding uranium with slow neutrons, which spurred on Otto Hahn, a chemist, and Lise Meitner, a physicist, to do their own uranium smashing at the Kaiser Wilhelm Institute in Berlin. Although the German chemist Ida Noddack was the first to suggest the theory of fission – a cell biology term used by American biologist William Arnold while working with Niels Bohr in Copenhagen – no one was quite sure what was going on in the bombardment. Fermi believed he had either created neptunium (Z = 93) and plutonium (Z = 94),¹² a nuclear isomer, or various complex radioactive decay products, none of which made sense until Hahn and his young assistant Fritz Strassmann calculated that the mass number of the target uranium atoms was equal to the sum of the mass numbers of the measured reaction products.

Meitner, a Jewish-born Austrian exile who had fled Germany after the 1938 Anschluss to work in Stockholm, and her nephew Otto Frisch determined that the uranium atoms had been split apart, basing their thinking on the liquid-drop models of Bohr, George Gamow, and Carl Friedrich von Weizsäcker. As Frisch noted, “gradually the idea took shape that this was no chipping or cracking of the nucleus but rather a process to be explained by Bohr’s idea that the nucleus was like a liquid drop; such a liquid drop might elongate and divide itself.”¹³ Imagine a water-filled balloon squeezed somewhere near the middle to form a dumbbell that then snaps in two. Indeed, when a nucleus expands after capturing a neutron, the long-range, proton–proton, repulsive forces exceed the short-range, nucleon–nucleon, attractive forces that bind the atom together, causing “fission.” The resultant kinetic energy would be considerable, as much as 200 MeV, as Meitner and Frisch wrote in a Nature letter on January 16, 1939.

Meitner’s news spread fast, prompting Fermi to continue his neutron-induced radioactivity and uranium-smashing experiments at Columbia University in New York, where he had relocated with his Jewish wife Laura by way of Sweden after receiving the 1938 Nobel Prize “for his demonstrations of the existence of new radioactive elements produced by neutron irradiation, and for his related discovery of nuclear reactions brought about by slow neutrons.” Adding to the sense of urgency, the results of Hahn and Strassmann were verified by Frisch in Copenhagen, who recorded electric pulses of the fission products with an oscilloscope, and in a cloud-chamber photograph by two Berkeley physicists.¹⁴

The importance of the “secondary” neutrons produced in the reaction was immediately understood as a way to create a chain reaction, but whether any such reaction could be controlled was still unknown. In a now better-understood nuclear splitting, Fermi also proposed the existence of a new particle, which he called a neutrino for “little neutral one,” earlier hypothesized by the Austrian physicist Wolfgang Pauli as part of the process of beta decay. The neutrino satisfied the law of conservation of energy that some had thought to abandon to explain the mysterious hidden energy of the nucleus, becoming yet another part of the growing subatomic catalogue of particles, all of which had to be carefully accounted for in any reaction.

As the atomic scientists surmised from a now credibly established theory, an “uncontrolled” nuclear reaction via “secondary” neutrons would almost certainly be able to detonate a bomb.¹⁵ Adding to the worry of such raw power, Germany’s fission research was expanding, led by Nobel physicist Werner Heisenberg, who was building an experimental reactor (Uranmaschine) at the Kaiser Wilhelm Institute in Berlin, fueled with uranium from Europe’s only source, the Ore Mountains south of the Czech–German border.

The American quest to make the bomb would begin in earnest after the Hungarian émigré Leo Szilard – another European scientist caught in the crossfire of war, who had fled to the United States like so many others – enlisted Einstein to sign a letter to President Franklin Roosevelt, warning of the likely German progress on nuclear fission. Dated August 2, 1939, the letter called for concerted government action: “In the course of the last four months it has been made probable – through the work of Joliot in France and Fermi and Szilard in America – that it may become possible to set up a nuclear chain reaction in a large mass of uranium, by which vast amounts of power and large quantities of radium-like elements would be generated.” The letter goes on to list possible uranium sources in Canada, Czechoslovakia, and the Belgian Congo, and ends with an ominous declaration that Germany had stopped the sale of uranium ore from Czechoslovakian mines upon annexing the Sudetenland. One month later, the Germans would invade Poland.¹⁶

On September 1, 1939, prior to a ban on reporting nuclear results, Niels Bohr and his former student John Wheeler published their seminal paper “The Mechanism of Nuclear Fission” in the American journal Physical Review, calculating that the odd-numbered, least-abundant uranium isotope U-235 was more likely to fission than U-238, and highlighting for all the practicality of generating large amounts of energy with uranium. There was no return once the nuclear genie was loosed from its atomic bottle. That same day, the world was at war.

The $2 billion Manhattan Project was the largest research project ever, employing more than 125,000 scientists, technicians, office workers, laborers, and military personnel at its peak at various locations across the United States, including the main think tank at Los Alamos in New Mexico and two production centers, one in Oak Ridge, Tennessee, and another in Hanford, Washington (codenamed Project Y, K-25/Y-12, and Site W). Most of the heavy thinking took place in Los Alamos (a.k.a. “the Hill”), where the top physicists of the day had been corralled by the army, such as Hans Bethe, Felix Bloch, Emilio Segrè, Edward Teller, Eugene Wigner, and a young Richard Feynman, who wowed everyone with his talent, levity, and safecracking ability, and would soon head the Theoretical Computations Group in charge of the IBM calculating machines.

After a daring escape in an open boat from occupied Denmark to England, Niels Bohr, the Danish theoretician responsible for the concept of atomic shells, made several trips to Los Alamos, having calculated that 1 kg of purified U-235 would be sufficient to make a bomb,¹⁷ while others acted as visiting consultants from university laboratories across the USA, such as Enrico Fermi, Ernest Lawrence, and Isidor Isaac Rabi. The British national James Chadwick, who had first discovered the neutron only a decade earlier, fittingly became a part of the team after the American and British efforts were combined. Einstein, however, was considered a security risk, mostly because of his misunderstood pacifist views, and was not asked to join the project. Besides, he wasn’t a nuclear physicist, although he did do some isolated work from Princeton on isotope separation and ordnance capabilities.¹⁸

The thousands of dedicated workers at Los Alamos were all led by J. Robert Oppenheimer, known as Oppie, a hyper-intelligent, philosophically minded theoretical physicist from Berkeley and colleague of the pioneering American atom-smasher Ernest Lawrence, the 1939 Nobel Prize winner for the invention of the cyclotron. Having visited the Pecos Wilderness near Los Alamos as a child on family vacations, Oppie reckoned that the secluded New Mexico desert was perfect for a “secret complex of atomic-weapons laboratories.”¹⁹

Given the uncertain outcome of working with novel materials, two bomb designs were devised to improve the chances of a successful detonation. One employed enriched uranium (U-235) and the other plutonium (Pu-239), an element found only in trace amounts in nature. Both materials were produced with great difficulty: U-235 via electromagnetic separation coupled with gaseous diffusion at Oak Ridge and Pu-239 via uranium neutron capture in the B Reactor at Hanford, based on Fermi’s test pile at the University of Chicago, where he had moved from Columbia to support the war effort. Feynman’s as-yet-unfinished PhD thesis was on the separation of U-235 from U-238, although the method eventually employed to create weapons-grade U-235 was invented by Lawrence at Berkeley, while the Pu-239 was collected from inside the Hanford reactor after the transmutation of U-238.

In the “uranium-gun” design, two subcritical-mass pieces of enriched U-235 would be kept separate until triggering, while the “plutonium-implosion” bomb would be triggered by setting off plastic explosives around a subcritical spherical mass of Pu-239, condensing the core to twice its density via “shaped charges” that evenly focused the implosion like a lens. Plutonium was much easier to produce, but spontaneous fission in Pu-240 was too high for a gun design, solved by imploding the plutonium to a supercritical mass.²⁰ The “lens” was made of layered plastic explosives that had the consistency of taffy and could be shaped to produce a massive, evenly applied shockwave, symmetrically compressing the core. The implosion had to be perfectly uniform to avoid a dud, a.k.a. a “mangled grapefruit.” The fast-to-slow detonation layers were designed by another European émigré, John von Neumann.²¹

After almost 4 years of development, the first test was prepared at Alamogordo in the New Mexico desert, about 150 miles south of Albuquerque. The test was codenamed Trinity, and some were unsure whether the atmosphere would catch fire via a nitrogen fusion chain and ignite the world.²² Others took bets on the size of the explosion. Although the world survived, the blast heat turned the desert sand to green glass – now known as “trinitite” – in all directions for 800 yards.

The Manhattan Project (codenamed S-1) detonated three atomic bombs: the original Trinity “gadget” test on July 16, 1945, and two more on live targets less than 3 weeks later, even though Germany had already been defeated by then and was no longer involved in an atomic weapons program, negating the project’s purpose. The second (Little Boy) was dropped by the B-29 bomber Enola Gay over the city of Hiroshima on August 6, 1945, and the third (Fat Man) by another B-29, Bock’s Car, on Nagasaki three days later, effectively ending the war.

The Hiroshima bomb was the uranium-gun design with an explosive power of 12.5 kilotons TNT (~50 TJ), killing an estimated 105,000 people and destroying 54,000 buildings, while the Nagasaki bomb employed the plutonium-implosion design – as at the proof-firing Trinity test – with the explosive power of 22 kilotons TNT (~90 TJ), killing 65,000 people and destroying 14,000 buildings.²³ Almost 10,000 people per square kilometer were killed by the two roughly 1,800-feet-high “air-bursts,” the explosive power of the Hiroshima bomb equal to 16,000 of the largest conventional bombs dropped from a B-17. Twenty years later, Oppenheimer would capture the magnitude of worry most of the scientists felt upon witnessing the destructive power of the world’s deadliest creation that had lit up the sky like a second Sun at Alamogordo:

We knew the world would not be the same. A few people laughed, a few people cried, most people were silent. I remembered the line from the Hindu scripture, the Bhagavad-Gita; Vishnu is trying to persuade the Prince that he should do his duty and, to impress him, takes on his multi-armed form and says, “Now I am become Death, the destroyer of worlds.” I suppose we all thought that, one way or another.²⁴

The ethics of dropping a nuclear bomb on live targets has been debated ever since. Some said two bombs were detonated to demonstrate the ease of deployment and that further Japanese resistance would be futile, saving countless Allied lives in a prolonged invasion of Japan (both bombs were stored together at the assembly site on Tinian Island). Some believed the Americans had to beat the Soviets into Japan to control reconstruction and post-war markets, while sending a message against communist expansion into Western Europe and Asia.

Others thought more sinister goals were at play, two billion dollars too much to spend on an untested concept. The English physicist and novelist C. P. Snow wrote in The New Men, “It had to be dropped in a hurry because the war will be over and there won’t be another chance,” while Cornell physicist Freeman Dyson noted “the whole machinery was ready.” Still others thought the world should know the bomb’s full potential as the United Nations was being formed. Already, the scientists and government overlords were debating the future deployment policy of the most deadly weapon ever constructed.

Niels Bohr argued that hard-won nuclear secrets should be shared with the Soviets to avoid an unnecessary and costly arms race as well as future proliferation, which has indeed transpired, but the politics of the day triumphed over scientific logic. Bohr even met with Roosevelt to discuss the possibility of “atomic diplomacy” with the Soviets, nixed by British prime minister Winston Churchill, who refused to see beyond the existential challenges of the current war.25 Bohr’s “open world” policy was akin to his complementarity principle, where wave-particle duality was compared to stockpiling atomic bombs that made the world both safer (as a permanently unusable deterrent) yet more dangerous (in the event the unthinkable transpired).26 The usually apolitical Fermi imagined an honest agreement with effective control measures and that “perhaps the new dangers may lead to an understanding between nations.”27 The future of warfare and life had become both freed and enslaved by science.

Expressing concern about the lack of contact between scientists and government officials, Einstein also sent a second letter to Roosevelt. Dated March 25, 1945, the letter remained unopened on the president’s desk, Roosevelt dying before he could read it. Often credited as the Father of the Bomb because of his equation and first letter, despite having done nothing to build it, Einstein lamented, “Had I known the Germans would not succeed in producing an atomic bomb, I never would have lifted a finger.”28 In fact, Germany’s nuclear program had been primitive, unable even to build a working reactor.29 Einstein would spend the rest of his life campaigning for arms control, reduced militaries, and a “supernational” security authority. Later, he noted that signing the letter in 1939, ostensibly starting the world on the road to an unstoppable nuclear-armed future, was the greatest mistake of his life.

***

After the war was over and the physics of exploding nuclear bombs better understood, other countries built their own kiloton and then megaton weapons using the Earth as a giant test lab. The Soviet Union detonated its first atomic bomb in 1949 at the Polygon test site in northeast Kazakhstan, a plutonium fission bomb codenamed “First Lightning” (called “Joe-1” by the USA after Joseph Stalin). Soviet development was helped by German refugee and spy Klaus Fuchs, who worked with the British contingent at Los Alamos; by the little-known American physicist turned spy Ted Hall, who worked on explosion experiments and was unhappy that the USA hadn’t shared nuclear information with its Allies; and by 300 tons of uranium dioxide found after Germany’s surrender, including a small amount at the Kaiser Wilhelm Institute in Dahlem where Hahn and Strassmann had first split the uranium atom a decade earlier.

The Soviets were keen to catch up to the Americans after the fall of Berlin, igniting a race for nuclear supremacy and the Cold War, which would divide the world into rival spheres of influence and may have averted a possible American first strike on a war-weary USSR. In 1952, the British tested their first nuclear bomb in the Montebello Islands off the northwest coast of Australia. After the detonation of the first nuclear bomb, the power and pace of destruction increased more in a decade than ever before in human history.

First developed under the direction of the Hungarian émigré and Manhattan Project alumnus Edward Teller, nuclear weapons today are thermonuclear bombs, where a plutonium-fission “A-bomb” is detonated to generate the million-degree temperature needed to trigger a fusion “H-bomb,” fusing hydrogen nuclei to release energy rather than fissioning U-235 or Pu-239. Much more powerful than an A-bomb, the “Super” was opposed by some of the leading Los Alamos scientists because of its limitless destructive power, yet advocated by others to strengthen US military capability. The first Super, codenamed “Mike,” was successfully tested by the United States on November 1, 1952, on the remote Pacific Ocean coral atoll of Eniwetok in the Marshall Islands, halfway between Hawaii and the Philippines. Redesigned to be deployed by plane, the first H-bomb that could literally wipe out an entire country was detonated on March 1, 1954, on nearby Bikini Atoll. At 15 megatons TNT, “Bravo” was more than 1,000 times as powerful as the original Hiroshima blast, creating a 250-foot-deep, mile-wide crater on the ocean floor and “fallout across more than 7,000 square miles of the Pacific Ocean.”30 In the midst of a widening Cold War chasm, few questions were asked.

Between 1946 and 1958, more than 1,200 nuclear bombs were detonated, equivalent to over one Hiroshima per day, leaving a distinctive, still detectable radiation signature across the globe. More atomic muscle-flexing followed when the Soviet Union detonated a 58-megaton-TNT H-bomb in 1961 in the Russian Arctic. At almost 5,000 times the power of the original Hiroshima blast, RDS-220 or “Tsar Bomba” was the largest bomb ever exploded. The “destroyer of worlds” now contains enough destructive power to kill us all many times over.
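The yield comparisons quoted here come down to simple unit arithmetic. The following is a minimal Python sketch, assuming the usual convention that 1 kiloton of TNT equals 4.184 TJ; the constant and function names are illustrative and not taken from the text.

```python
# Assumed convention: 1 kiloton of TNT is defined as 4.184e12 joules (4.184 TJ).
TJ_PER_KILOTON = 4.184

# Yields quoted in the text, in kilotons of TNT.
YIELDS_KT = {
    "Hiroshima": 12.5,
    "Nagasaki": 22.0,
    "Bravo": 15_000.0,       # 15 megatons
    "Tsar Bomba": 58_000.0,  # 58 megatons
}


def yield_in_terajoules(kilotons: float) -> float:
    """Convert a TNT-equivalent yield in kilotons to terajoules."""
    return kilotons * TJ_PER_KILOTON


def hiroshima_equivalents(kilotons: float) -> float:
    """Express a yield as a multiple of the 12.5-kiloton Hiroshima bomb."""
    return kilotons / YIELDS_KT["Hiroshima"]


for name, kt in YIELDS_KT.items():
    print(f"{name}: {yield_in_terajoules(kt):,.0f} TJ, "
          f"{hiroshima_equivalents(kt):,.0f} x Hiroshima")
```

Under that convention the two wartime bombs come out near 52 TJ and 92 TJ, while Bravo and Tsar Bomba work out to 1,200 and 4,640 Hiroshimas respectively, consistent with the rounded figures in the text.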

For most of the scientists in the Manhattan Project, weapons development was morally permissible during war, but not in peacetime. The 1949 General Advisory Committee report, signed off by Oppenheimer before he washed his hands of any further development, noted that there was “no inherent limit in the destructive power” to the H-bomb and that such a “weapon of genocide … should never be produced.”31 But wiser heads did not prevail, the $2 billion wartime project spiraling out of control into half a century of Cold War gamesmanship. The USA brazenly tested bombs without any military need and the Soviets marched through eastern Europe as if daring their former allies to stop them. The US price tag for testing and stockpiling their new military toys was a staggering $5.5 trillion.32

Presently, nine countries possess verifiable nuclear weapons – the USA, Russia, the UK, France, China, India, Pakistan, Israel, and North Korea – with an estimated 13,500 nuclear warheads either deployed on base with operational forces in a state of “launch on warning” or in reserve with some assembly required (down from a peak of 85,000 at the height of the Cold War). More than 90% are held by the USA (5,800) and Russia (6,375), totaling 20 EJ of destructive energy or almost half a million Hiroshimas.33 Unofficially, there may be more, the wonder not that so many countries possess nuclear weapons, but that so few do or that none have been used again in anger since the end of World War II.
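The stockpile figures in this paragraph can be cross-checked with a few lines of arithmetic. This is only a sketch that reuses the estimates quoted in the text; the 4.184 TJ-per-kiloton conversion is an assumed convention, and the names are hypothetical.

```python
# Estimates quoted in the text (not independent data).
TOTAL_WARHEADS = 13_500
USA_WARHEADS = 5_800
RUSSIA_WARHEADS = 6_375
STOCKPILE_ENERGY_J = 20e18   # 20 EJ
HIROSHIMA_ENERGY_J = 50e12   # ~50 TJ

# Assumed convention: 1 kiloton of TNT = 4.184e12 J.
JOULES_PER_KILOTON = 4.184e12


def superpower_share() -> float:
    """Fraction of all warheads held by the USA and Russia combined."""
    return (USA_WARHEADS + RUSSIA_WARHEADS) / TOTAL_WARHEADS


def hiroshima_count() -> float:
    """Total stockpile energy expressed in Hiroshima-sized bombs."""
    return STOCKPILE_ENERGY_J / HIROSHIMA_ENERGY_J


def average_yield_kilotons() -> float:
    """Average yield per warhead implied by the quoted totals."""
    return STOCKPILE_ENERGY_J / TOTAL_WARHEADS / JOULES_PER_KILOTON


print(f"USA + Russia share: {superpower_share():.1%}")
print(f"Hiroshima equivalents: {hiroshima_count():,.0f}")
print(f"Implied average yield: {average_yield_kilotons():.0f} kt")
```

The quoted numbers hang together: the two largest arsenals hold just over 90% of the warheads, 20 EJ corresponds to 400,000 Hiroshimas, and the implied average warhead is a few hundred kilotons.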

Furthermore, despite reducing the possibility of direct conflict between the United States and the Soviet Union cum Russia in any number of hotspots around the world, the arms race has diverted – and continues to divert – a substantial amount of spending to the military along with “a sharp increase in the influence of the military upon foreign policy.”34 It is unfathomable that trillions of dollars have been and are still being spent on something that can never be used. Even if such weapons could be used, how many times can one obliterate existence?

Nonetheless, thousands of unusable doomsday machines stand poised, ready to destroy, despite their essential impotence, the risk of accident ever present – operational, machine-triggered, or software-assisted. When a nuclear-armed American B-52 collided in midair with a refueling plane during a Chrome Dome regular test run in 1966, seven of 11 crew members on the two planes died and four nuclear bombs inadvertently dropped, landing near the fishing village of Palomares in southern Spain. Although the 70-kiloton-plus H-bombs didn’t detonate, plutonium was released in the resultant, non-nuclear TNT explosions of two of the bombs, spreading radiation over an 800-km radius and contaminating a 2-km² area with highly radioactive debris. The accident was initially denied and then downplayed by the American and Spanish governments, and one of the errant bombs was retrieved 10 weeks later from the sea by a flotilla of ships sent to find the missing “broken arrow.”35 If the nuclear bombs had exploded, a large part of southern Spain would have been destroyed and rendered completely uninhabitable. More than 50 years on, the cleanup around Palomares continues, 1.6 million tons of soil removed to the United States at a cost of $2 billion, while an estimated 50,000 m³ of contaminated soil still remains.36

To be sure, atomic bookkeeping comes with its own unique concerns, too chilling to comprehend. At least two of the 32 broken arrows reported since 1957 have never been found, one accidentally jettisoned mid-flight off the coast of Georgia in 1958 after another midair collision involving a hydrogen-bomb-carrying B-47, while another rolled off the side of an aircraft carrier in 1965.37 Both are still unaccounted for, buried somewhere under miles of murky ocean waters. Others have also likely gone missing from the secret arsenals of nuclear-armed states.

By the start of the 1960s, the “strategic” deployment of a nuclear deterrent was the raison d’être of the Cold War, a.k.a. “Balance of Terror,” fueled by meaningless claims of a “missile gap.” But the question soon changed from how a bomb is built to whether more should be built. The expense is enormous, a 1998 source estimating that the US nuclear-bomb program had cost $5 trillion to develop and maintain since 1940, while at least 5% of all commercial energy consumed in the United States and Soviet Union from 1950 to 1990 was spent on developing, stockpiling, and creating launch systems for a growing nuclear arsenal.38 No new American bombs have been added since the 1990s, although a recently enacted US nuclear makeover is expected to cost over $1 trillion (including $56 billion for expected cost overruns!), while over $50 billion is spent each year on maintenance.

Mutually assured destruction (MAD) is the agreed outcome in a nuclear confrontation, with billions dead in minutes and billions more in the resultant radioactive fallout, followed by years of “nuclear winter,” the term coined by American astrophysicist Carl Sagan and others in a 1983 paper using models previously based on the effects of volcanic eruptions. Oppenheimer likened the arms race “to two scorpions in a bottle, each capable of killing the other, but only at the risk of his own life.”39 Regardless of the unthinkable outcome, there is little value in maintaining an oversized arsenal as a deterrent for future aggression, either militarily or financially. At least, for now, there are no nuclear weapons in space.

Although a horrific possible future has kept rival powers from initiating an unwinnable war, Vaclav Smil noted in Energy and Civilization that “the magnitude of the nuclear stockpiles amassed by the two adversaries, and hence their embedded energy cost, has gone far beyond any rationally defensible deterrent level.”40 In fact, stockpiling unusable nuclear weapons undermines spending on conventional weapons that may be needed in the event of a real war and for important industrial and social spending. And yet, undeterred by the cost and horror of total annihilation, a silent sentinel stands guard on a future no one can endure.

In the changing times that immediately followed World War II, however, the powers that be sought to implement a new policy to attempt to make amends for the devastation at Hiroshima and Nagasaki, turning to the prospect of peaceful nuclear power. Thousands of scientists and engineers retooled their abilities to tame the raw energy within the atom as power moved from the work benches of Los Alamos to the halls of government. Former bomb-making factories were converted to national research laboratories, while academic and civilian research facilities grew along with the ever-expanding military program, tasked with preventing another world war by maintaining an unthinkable, always-ready deterrent. Whether any of the modern alchemists could reorient their thinking was still unknown.

In From Faust to Strangelove, a book that explores the changing public perception and image of the scientist, Roslynn Haynes notes that after Hiroshima and Nagasaki physicists were no longer perceived as innocent boffins, while “it became progressively more difficult to believe in the moral superiority of scientists and even more difficult to believe in their ability to initiate a new, peaceful society.”41 Nonetheless, despite its clandestine origins, moral ambiguity (“technical arrogance” to use Freeman Dyson’s characterization), and the intellectual chasm of classifying the mysterious workings of a compact “little nut” at the core of an uncertain new Atomic Age, nuclear fission would become fully vested in locomotion and everyday electrical power. How we got there is as fascinating a tale as there is in the history of science and engineering, from which the promise of endless energy rolls on.

3.3 The Origins of Nuclear Power: Atoms for War and Peace

On a brisk, wintery morning, December 2, 1942, Enrico Fermi made his way to work at the University of Chicago, where he had been enlisted in the war effort to engineer a way to make plutonium, known only as element number 94 until earlier that March. Waiting for him at a campus doubles squash court underneath the west stands of the disused Stagg Field football stadium was a team of scientific workers from the “Metallurgical Lab” (Met Lab), who under Fermi’s supervision had assembled a nuclear reactor as part of the top-secret Manhattan Project. Called a “pile” for its numerous uranium-filled layers of graphite, the goal was to prove that a geometrically assembled array of lumped uranium could go “critical,” that is, produce a self-sustaining fission chain reaction, by which plutonium could be produced.42

Assembled around the clock in two 12-hour shifts over a period of a month, the Chicago Pile One (CP-1) consisted of 45,000 highly purified graphite blocks stacked in 57 layers in a 30-by-32-foot lattice, some of the blocks hollowed out to hold 40 tons of hockey-puck-shaped uranium-metal discs. Graphite (a carbon allotrope) was a readily available moderator, known from Fermi’s earlier work at Columbia, which according to his precise calculations should slow down enough fast fission neutrons to sustain further fissions, producing the secondary neutrons needed for the growing pile to go critical in an ongoing chain reaction. As each layer was added, Fermi measured the neutron count, confident of his numbers.43

Containing enough graphite to provide a pencil for “each inhabitant of the earth, man, woman, and child,”44 as his wife Laura would later write, Fermi called the 385 tons of graphite, 6 tons of pure uranium metal, and 34 tons of uranium oxide, all held together in a wooden cradle standing 22 feet high, “a crude pile of black bricks and wooden timber.” To keep the pile from going critical on its own, 14 extractable, neutron-absorbing, cadmium control rods were horizontally inserted into the middle of the pile, while three brave scientists – called the “liquid control” or “suicide” squad – stood overhead at the ready with buckets of cadmium salt solution in case the massive construction caught fire.

Inch by inch and one by one, the control rods were pulled out, Fermi noting the increasing neutron levels on a Geiger counter. The “neutron economy” was everything as more neutrons were released by more U-235 fissions within the pile, sufficiently slowed down by the graphite moderator to facilitate even more. After a break for lunch, the final rod was removed and at 2:20 pm the reaction went critical, the reproduction factor, k, greater than one (1.0006). The neutron count was literally off the chart. In a phone call to Washington to report on the success, the project leader Arthur Compton, who had recruited Fermi to Chicago, declared, “The Italian navigator has just landed in the new world.”45
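A reproduction factor k means that each generation of fissions yields k times as many neutrons as the one before, so the population scales as k raised to the number of generations. The sketch below is a simplified illustration of that growth using the k reported for CP-1; it deliberately ignores the real generation time and delayed-neutron effects, and the function names are hypothetical.

```python
import math

K_CP1 = 1.0006  # reproduction factor reported for CP-1 in the text


def relative_population(k: float, generations: int) -> float:
    """Neutron population after n generations, relative to the start (k**n)."""
    return k ** generations


def generations_to_double(k: float) -> float:
    """Generations needed for the population to double; requires k > 1."""
    if k <= 1.0:
        raise ValueError("the population only grows when k > 1")
    return math.log(2.0) / math.log(k)


print(f"Generations to double: {generations_to_double(K_CP1):.0f}")
for n in (100, 1_000, 10_000):
    print(f"after {n:>6} generations: x{relative_population(K_CP1, n):.2f}")
```

With k only 0.06% above one, doubling takes on the order of a thousand generations, which is part of why the reaction could be held steady with control rods. A k of exactly one keeps the population constant, and anything below one makes it die away.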

The CP-1 prototype was a great success, the ever-cautious Fermi happy not only for having built the world’s first nuclear reactor, but also for the ease with which he could control the ongoing reaction, exclaiming, “To operate a pile is as easy to keep a car running on a straight road by adjusting the steering wheel when the car tends to shift right or left.”46 Although the uranium was not enriched, hence the large size for the minimal power generated (under 1 watt47), criticality had been proven, essential for the Manhattan Project’s plutonium production and later to show that peaceful nuclear power was doable after the horrors of war.

“Atoms for Peace,” as President Eisenhower styled his proposed safer world in a 1953 UN speech, would absolve “atoms for war” that saw 170,000 people die instantly or in the immediate aftermath of the Hiroshima and Nagasaki blasts, followed by hundreds of thousands more from cellular degradation and radiation-induced cancers in the ensuing years. As Fermi noted in 1952, “It was our hope during the war years that with the end of the war, the emphasis would be shifted from weapons to the development of these peaceful aims. Unfortunately, it appears that the end of the war really has not brought peace. We all hope as time goes on that it may become possible to devote more and more activity to peaceful purposes and less and less to the production of weapons.”48

Throughout the war, nuclear scientists working on the Manhattan Project had been sidetracked in the rush to make the bomb before Germany. In the aftermath, the idea to produce peaceful power for the masses, “outside of the shadows of war,” renewed their hopes for a saner future, while aiding the rehabilitation of their reputations as creators of death. The basics entailed using water or some other coolant to remove heat continuously from inside the pile instead of exploding a highly enriched core. Military research money was earmarked to remake death and destruction into energy and light, starting at the newly reformed weapons facilities cum laboratories in the USA, USSR, and UK.

CP-1 had already moved during the war away from public scrutiny and potential urban catastrophe to Argonne Woods in southwest Chicago, reassembled as CP-2 and then CP-3, the site eventually renamed the Argonne National Laboratory before moving again to nearby Lemont. Fermi oversaw the research at Argonne, part atomic facility and part University of Chicago research lab, during and after the war, building on a growing nuclear expertise there and at Los Alamos, Oak Ridge, Livermore (Lawrence’s reformed Berkeley lab), and especially Hanford. The central tenets of reactor physics were worked out in the early post-war years, nuclear science transmuting from a source of curiosity about the nature of matter and the raw power within to the engineering challenge of turning mass into utility for all. No longer beyond comprehension, the atom could do what no alchemist had ever imagined:

The basic theory of a nuclear reactor was developed during the war by Fermi, Wigner, and others and tested in the military reactors. After 1945, a large amount of work was spent in completing the understanding of fission processes and developing a detailed theory of the nuclear reactor. By 1958, with the publication of Alvin Weinberg’s and Eugene Wigner’s authoritative The Physical Theory of Neutron Chain Reactors, the work of the physicists was over in this area.49

Capitalizing on a functionally superior, light-weight, cleaner fuel, the first operational nuclear submarine, the USS Nautilus, was launched in 1955, overseen by Admiral Hyman Rickover, while the USS Enterprise – the first nuclear-powered aircraft carrier and longest naval vessel ever built – was commissioned in 1961, launched by Eisenhower’s wife Mamie. Although keen to generate nuclear power for naval propulsion, the USA still had plenty of energy from its vast oil and coal reserves, and was less inclined to instigate a national energy makeover. The navy was also eager to get in on the atomic act and not be excluded from the nuclear club by the army or air force.

Called the “Father of the Nuclear Navy,” Rickover was a highly intelligent, hard-working electrical engineer who had worked his way up the ranks to admiral in 1953. During World War II, he was in charge of the electrical section of the Bureau of Ships, overseeing the change to infrared signal communication from visible light for ship-to-ship messaging “that made American ships targets for enemy bombs and torpedoes.”50 Assigned to Oak Ridge after the war, Rickover wanted to build “the ultimate fighting platform,” the world’s first nuclear-powered submarine that could remain undetected underwater for prolonged periods of time.51 Importantly, he chose water as the reactor moderator, subsequently employed in all future American commercial designs.

But despite their head start on nuclear-power technology, the Americans were more interested in making bigger bombs and designing advanced ship propulsion for a nuclear fleet unmatched in history. Built by the British near the coastal village of Seascale in northwest England, the first civilian nuclear reactor went critical on October 17, 1956. Calder Hall was a 92-MW, graphite-moderated, CO₂-gas-cooled reactor, based on wartime research at Chalk River on the Ottawa River and later at Harwell in Oxfordshire, both directed by the original atom-smasher John Cockcroft. The reactor was dual-purpose, designed to generate electrical power and make plutonium for the British bomb-making program, hence the oddest of nuclear acronyms, PIPPA (pressurized pile producing power and plutonium).

Adapted from the propulsion reactor in Rickover’s nuclear navy, the first US civilian reactor was a 60-MW pressurized light-water reactor, successfully tested – “cold-critical” without power hook-up – on December 2, 1957, the fifteenth anniversary of Fermi’s original CP-1, and “grid-tied” on December 18. Constructed on the shores of the Ohio River, 25 miles northwest of Pittsburgh, the Shippingport Atomic Power Station reactor was built by Westinghouse and the Duquesne Light Company under the direction of Rickover’s Naval Research Group, and proclaimed the “world’s first full-scale atomic electric plant devoted exclusively to peacetime uses.”52 Triumphantly fulfilling Eisenhower’s earlier Atoms for Peace declaration to the UN despite the simultaneous building up of nuclear arms, Shippingport was the first commercial American nuclear power plant, following 14 previous reactors built to manufacture weapons-grade plutonium cores.53 Clean air was also cited in contrast to a proposed coal plant on the Allegheny River. As historian Richard Rhodes noted, “no expensive precipitators for smoke control, no expensive scrubbers for sulfur-oxide control, 60 megawatts of peak-load power, and a leg up on nuclear-power technology.”54

Rickover also made a strategic design change, employing uranium dioxide ceramic fuel clad in zirconium instead of uranium metal fuel, making the uranium more difficult to repurpose in a bomb. The United States was ready to take on the world with an unrivaled nuclear arsenal, deterrent to any and all potential belligerents, as well as a space-age atomic-power program to keep the home fires burning brightest. Shippingport operated for 20 years before being re-engineered as a light-water “breeder” reactor, where a thorium core is surrounded by a natural uranium “blanket” to create U-233 fuel (another fissile isotope of uranium), before being decommissioned in 1982.55

***

The pace of construction increased thereafter with two scaled-up commercial reactors in operation in the United States by the end of the 1960s, the 570-MW Connecticut Yankee “pressurized-water” reactor built by Westinghouse south of Hartford on the shores of the Connecticut River (1968) and the 640-MW Oyster Creek “boiling-water” reactor built by General Electric on the New Jersey shore (1969). In the following decade, 100 reactors were built by the two pioneering electrical companies, Westinghouse (pressurized) and GE (boiling), competing again as if electric power had come full circle to its origins. In other countries, reactor designs evolved from their own engineering and technological practices. The dominant design in the USA employed enriched U-235 fuel with light water as a moderator and coolant (pressurized or boiling), known as a light-water reactor (LWR), while graphite moderators became prevalent in the UK (air- or gas-cooled) and in the Soviet Union (light-water cooled).

Essential experience was gained in the early years of nuclear power, such as calculating the optimal fuel scheme to get the most out of every gram of U-235 and the safe operating procedures of the world’s newest energy source. In the United Kingdom, nine more reactors were built in the decade after Calder Hall, supplying almost 25% of British electrical power, while in the United States 20 went online.56 More reactors were built in the secretive and closed-off Soviet Union, starting in the town of Obninsk near Moscow with a small, dual-purpose, high-power channel reactor (reaktor bolshoy moshchnosty kanalny or RBMK) that went critical in 1954. By 1985, there were 25 Soviet reactors.57

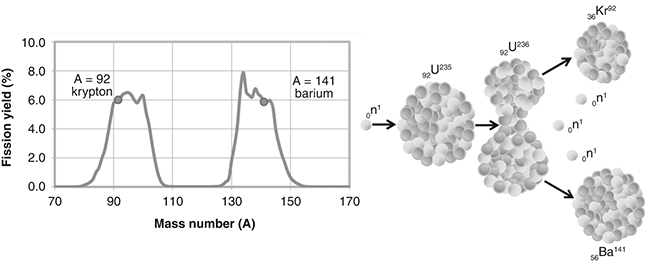

Today, the most common reactor employs a light-water moderator/coolant, either pressurized (PWR) or boiling (BWR). In a PWR, a light-water coolant is kept under high pressure to remain liquid (2000 psi and 300°C58) as it passes through the core before heating a secondary coolant in a heat-exchange system to generate steam to turn the turbine. In a BWR, the light-water coolant is boiled and the steam used to turn the turbine before being condensed and returned to the reactor. A PWR is safer because the irradiated primary coolant stays within the containment structure, while the coolant in a BWR also turns the turbines and thus radioactive matter can escape more easily to the atmosphere in the event of an accident (Figure 3.3).

Figure 3.3 The basics of a pressurized light-water nuclear reactor (PWR).

The other main reactor types employ either a pressurized, heavy-water moderator/coolant (PHWR) as in a CANDU, found mostly in Canada and India, a graphite moderator/gas coolant (Magnox and AGR), found exclusively in the UK, or a graphite moderator/light-water coolant (RBMK), made in Russia, while some experimental breeder reactors (a.k.a. catalytic nuclear burners) are still being tested. Before being discontinued, the Magnox reactor used natural uranium clad in a non-oxidizing magnesium alloy (hence the name), while an advanced gas-cooled reactor (AGR) uses 2%-enriched U-235 fuel. The RBMK-1000 is the same reactor as in Chernobyl, but now comes with a containment structure to reduce radioactive release in the event of a core breach as occurred in 1986 (which we’ll look at later).

In a “breeder” reactor, fissionable fuel is created inside the reactor core. Breeding is a two-stage process as the fuel can’t fission on its own, but turns into a fuel that can fission via neutron absorption while in operation. Typically, the uranium isotope U-233 is bred from thorium in what is thus called a thorium reactor ($^{1}_{0}\mathrm{n} + {}^{232}_{90}\mathrm{Th} \rightarrow {}^{233}_{90}\mathrm{Th} \rightarrow {}^{233}_{91}\mathrm{Pa} + {}^{0}_{-1}\beta \rightarrow {}^{233}_{92}\mathrm{U} + {}^{0}_{-1}\beta$). Thorium reactors are cooled with liquid sodium and need a boost to start. All reactors “breed” some nuclear fuel, providing a kick or “plutonium peak” in normal operation as U-238 transmutes into U-239 that decays into neptunium and then plutonium before fissioning, which provides about one-third the reactor power (also a way to make weapons-grade bomb material, believed to be how India made its first nuclear bomb in 1974).
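Both breeding routes described here follow the same bookkeeping: a neutron capture raises the mass number by one, and each of two beta-minus decays raises the atomic number by one. The following Python sketch simply tracks those numbers for the thorium and uranium chains; the helper names are hypothetical, and half-lives and reaction rates are left out.

```python
# Element symbols for the atomic numbers that appear in the two chains.
SYMBOLS = {90: "Th", 91: "Pa", 92: "U", 93: "Np", 94: "Pu"}

Nuclide = tuple[int, int]  # (atomic number Z, mass number A)


def capture_neutron(nuclide: Nuclide) -> Nuclide:
    """Neutron capture: Z is unchanged, A increases by one."""
    z, a = nuclide
    return (z, a + 1)


def beta_minus_decay(nuclide: Nuclide) -> Nuclide:
    """Beta-minus decay: Z increases by one, A is unchanged."""
    z, a = nuclide
    return (z + 1, a)


def label(nuclide: Nuclide) -> str:
    """Format a nuclide as, for example, 'U-233'."""
    z, a = nuclide
    return f"{SYMBOLS[z]}-{a}"


def breed(fertile: Nuclide) -> list[str]:
    """One neutron capture followed by two beta-minus decays."""
    captured = capture_neutron(fertile)
    first_decay = beta_minus_decay(captured)
    second_decay = beta_minus_decay(first_decay)
    return [label(n) for n in (fertile, captured, first_decay, second_decay)]


print(" -> ".join(breed((90, 232))))  # thorium route
print(" -> ".join(breed((92, 238))))  # uranium route
```

The first call reproduces the Th-232 to U-233 chain and the second the U-238 to Pu-239 chain via U-239 and neptunium.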

Breeder reactors convert “fertile” thorium-232 or natural uranium-238 into fissionable fuel that doesn’t need to be enriched, saving time, money, and the environmental stress of extensive refining, but have not become economically viable as originally supposed. In his 1956 address to the American Petroleum Institute, where he famously introduced the theory of Peak Oil, M. King Hubbert was overly optimistic, stating “it will be assumed that complete breeding will have become the standard practice within the comparatively near future.”59

Whatever the reactor design, the essential neutron economy is managed by raising and lowering neutron-absorbing “control rods” to keep the reaction steady, balancing both the “prompt” primary neutrons emitted during fission and the “delayed” secondary neutrons released by fission products on average about 14 seconds later, which help maintain a constant criticality. As plenty of water is needed to cool the core and produce steam, nuclear power plants are constructed near rivers, lakes, or oceans, while all nuclear reactors produce a cornucopia of radioactive waste stored in onsite spent fuel pools (a.k.a. bays).

Today, there are 437 commercial nuclear plants operating worldwide in 30 countries, providing roughly 400 GW of power, 92 of which are in the USA (see Table 3.1). The American reactors are all light-water (LWR), either PWR (~67%) or BWR (~33%), while almost 85% of global nuclear reactors are LWR. The others are either PHWR (such as the CANDU, ~10%), graphite-moderated/gas-cooled (~2.5%), graphite-moderated/water-cooled (~2.5%), or fast-breeder reactors (<0.5%).

| Reactor | Type | # | GW | Fuel | Moderator | Coolant |
|---|---|---|---|---|---|---|
| Pressurized water | PWR | 307 | 293 | Enriched UO₂ | Water | Water |
| Boiling water | BWR | 60 | 61 | Enriched UO₂ | Water | Water |
| Pressurized heavy water | PHWR | 47 | 24 | Natural UO₂ | Heavy water | Heavy water |
| Light water graphite | RBMK | 11 | 7 | Enriched UO₂ | Graphite | Water |
| Gas-cooled | Magnox, AGR | 8 | 5 | Natural U metal, enriched UO₂ | Graphite | CO₂ |
| Fast neutron | FBR | 2 | 1 | PuO₂ and UO₂ | None | Liquid sodium |
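The percentage shares quoted for each reactor type can be recomputed from the counts in Table 3.1. This is a rough Python sketch using only the table's rows; the dictionary layout and function name are illustrative.

```python
# (number of reactors, capacity in GW) per type, copied from Table 3.1.
REACTORS = {
    "PWR": (307, 293),
    "BWR": (60, 61),
    "PHWR": (47, 24),
    "RBMK": (11, 7),
    "Magnox/AGR": (8, 5),
    "FBR": (2, 1),
}

total_count = sum(count for count, _ in REACTORS.values())
total_gw = sum(gw for _, gw in REACTORS.values())


def count_share(*types: str) -> float:
    """Percentage of all listed reactors belonging to the given types."""
    selected = sum(REACTORS[t][0] for t in types)
    return 100.0 * selected / total_count


print(f"Listed reactors: {total_count}, capacity: {total_gw} GW")
print(f"Light-water (PWR + BWR): {count_share('PWR', 'BWR'):.1f}%")
for reactor_type in ("PHWR", "RBMK", "Magnox/AGR", "FBR"):
    print(f"{reactor_type}: {count_share(reactor_type):.1f}%")
```

The table rows sum to 435 reactors and 391 GW, slightly under the 437 plants and roughly 400 GW quoted in the text, so the computed shares are approximate. The light-water share comes out at about 84%, matching the "almost 85%" figure.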

The World Nuclear Association (WNA) maintains a global nuclear power database, citing 437 working nuclear power plants in 2022 rated at a total peak capacity of 390 GW that annually requires 68,000 tonnes of uranium fuel to keep running.60 Two countries, France (65%) and Ukraine (53%), generate more than half their electric power with nuclear (Table 3.2).

| Country | Number of plants | Power (GW) | Global percentage | National percentage |
|---|---|---|---|---|
| USA | 92 | 95 | 24 | 18 |
| France | 56 | 61 | 16 | 65 |
| China | 54 | 52 | 13 | 5 |
| Japan | 33 | 32 | 8 | 6 |
| Russia | 37 | 28 | 7 | 19 |
| South Korea | 25 | 24 | 6 | 26 |
| Canada | 19 | 14 | 3 | 14 |
| Ukraine | 15 | 13 | 3 | 53 |
| Spain | 7 | 7 | 2 | 21 |
| Sweden | 6 | 7 | 2 | 32 |
| Rest | 90 | 58 | 15 | |
| Total | 437 | 394 | 100 | |

The largest nuclear power station in the world is the 8-GW Kashiwazaki-Kariwa plant on the west coast of Japan (five BWRs, two ABWRs), while the largest in Europe is the 6-GW Zaporizhzhia plant on the Dnieper River in southeast Ukraine, comprising six water-cooled, water-moderated PWRs, a.k.a. water–water energetic reactors (VVERs). During the 2022 Russian invasion of Ukraine, the site was overrun by military forces as was the Chernobyl plant, raising alarms about possible radiation contamination in a worst-case scenario. Following an anxiety-filled visit to the plant by a team of nuclear inspectors from the International Atomic Energy Agency (IAEA), by which time only one of the six reactors remained online, two inspectors stayed onsite as the IAEA called for a demilitarized perimeter and nuclear safety protection zone in the embattled region, underscoring the challenge of keeping citizens safe from radiation leaks in wartime.

***

Despite many engineering firsts, dizzying technical achievements, and a seemingly simpler supply of fuel, today providing almost 5% of global electric power, atomic energy is under fire on numerous fronts, because of high construction costs, safety concerns, and the ongoing problem of radioactive waste, all a far cry from the early 1960s when everyone wanted to get into the nuclear biz. The army had their bigger and better bombs, the navy their submarines that can stay submerged for years, while the air force even wanted to build a nuclear plane, spending almost $5 billion on a prototype before admitting that flying something as bulky as a nuclear reactor was not practical nor would the damage be easy to contain in the event of a radioactive reactor falling from the sky.

Edward Teller wanted to use atomic bombs for small-scale engineering: to clear land for a deepwater Alaskan port (codenamed Project Chariot), build a Sacramento Valley canal to move water to San Francisco, and extract oil and gas from the heavy bituminous Albertan oil sands in an ill-devised 1958 nuclear fracking plan (codenamed Project Oilsands).61 One wonders how a trained physicist who intimately knew the secrets of the atom, the father of the H-bomb no less, could be so ignorant about the realities of radioactive contamination. Project Plowshare was similarly designed to use “nuclear excavation technology” for large-scale landscaping, including creating a new Panama Canal (dubbed the Pan-Atomic Canal), but was abandoned for obvious safety reasons. Project Gasbuggy, however, was approved to detonate an atomic bomb to extract natural gas about a mile underground in northern New Mexico in another fracking experiment to explore peaceful nuclear uses. The site is now a no-go area. After a similar test detonation in Colorado, the gas was “too heavily contaminated with radioactive elements to be marketable.”62

Even NASA wanted to go nuclear, hoping to power future satellites with plutonium. That is, until a plutonium-powered navigation satellite failed to achieve orbit in a 1964 launch and fell back to Earth, disintegrating upon re-entry (also why launching nuclear waste into the Sun or space is not such a smart idea). A subsequent soil-sampling program found, “embarrassingly, that radioactive debris from the satellite was present in ‘all continents and at all latitudes’.”63

NASA also had heady plans to power a spaceship with “nuclear bomblets” that would carry 10 times the payload of a Saturn V moon rocket, but Project Orion literally didn’t make it off the ground and was ditched in 1965 after a decade of fruitless work.64 Initially seeded with $1 million in funding to a private contractor, the idea was to focus the massive propulsive power of 100 nuclear bombs detonated at half-second intervals to reach space, although no one was quite sure how to keep the ship from blowing itself apart.65 Project A119 was another top-secret US plan, this one to detonate an atomic bomb on the Moon as a show of force after Sputnik, also discarded in favor of the PR-winning Apollo moon landing. The lunar detonation was the brainchild of another Manhattan Project luminary, Harold Urey, who thought the explosion would shower the Earth with moon rocks or that the resulting debris could be collected by a following missile and then analyzed.

There was even a plan to nuke the recently discovered Van Allen radiation belts in an unforgettable fireworks display to show the world, in particular the Soviets, the full power of the American atomic arsenal. Unfortunately, this demented scheme – dubbed the Rainbow Bomb – went ahead and on July 8, 1962, a 1.4-megaton H-bomb was exploded 250 miles above a Pacific Ocean launch site on Johnston Island. In his catalogue of bizarre experiments conducted in the name of science, Alex Boese noted that “Huge amounts of high-energy particles flew in all directions. On Hawaii, people first saw a brilliant white flash that burned through the clouds. There was no sound, just the light. Then as the particles descended into the atmosphere, glowing streaks of green and red appeared.”66

The man-made atomic light show lasted 7 minutes, but also knocked out seven of the 21 satellites in orbit at the time and temporarily damaged the Earth’s magnetic field. Launched the following day, AT&T’s Telstar I also became a casualty months later of the lingering radiation, which took 2 years to settle around the Van Allen belts. On the plus side, one of the most irresponsible displays of military hubris brought an end to atmospheric, underwater, and space testing of atomic bombs as the United States and the Soviet Union signed the Partial Test Ban Treaty the next year. Apparently, filling the skies with nuclear radiation was a step too bold even for science.

In the early heyday of reactor building, many thought nuclear power was as simple as igniting an atomic-powered Batmobile. Fortunately, calmer heads prevailed and we aren’t now cleaning up a permanent radioactive mess created by atomic planes, nuclear blasters, or plutonium-powered satellites and rockets. Nonetheless, we are still dealing with the radiation fallout from decades of weapons testing, a grim reminder of the madness of unchecked technological warfare. There are also restrictions on where a nuclear power plant can be built: not on seismically active ground, above water tables, or near large populations. Reactor containment buildings must also be able to withstand a jet plane crash or a large-scale earthquake. Alas, not all of the world’s 400 or so reactors fit the bill.

Decommissioning is an especially sticky issue: after 60 years of operation, radiation-degraded materials are no longer fit for service. The Nuclear Regulatory Commission (the US atomic licensing agency) grants a 40-year operating period that can be renewed for up to a further 20 years. The oldest operating commercial US nuclear power plant – Oyster Creek Nuclear Generating Station near the Jersey shore – was shut down in 2018 after almost 50 years, while the Connecticut Yankee Nuclear Generating Station built in 1968 was taken offline in 1996 and decommissioned 8 years later. In the absence of a permanent long-term nuclear waste-disposal plan, the spent fuel rods remain onsite, stored in a so-called Independent Spent Fuel Storage Installation (ISFSI).

The USS Enterprise was deactivated in 2012, although its reactors are still intact with no decision yet on full decommissioning, while the USS Nautilus was retired in 1980 after 25 years of service and is now a museum in Groton, Connecticut. The first vessel to reach the North Pole – in 1958 on its third attempt – Nautilus logged over 500,000 miles, more than seven times the 20,000 leagues of its Jules Verne-inspired namesake.
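The comparison with Verne’s title can be checked with a few lines of arithmetic. The sketch below assumes a league of 3 nautical miles and reads the 500,000 miles as statute miles; both conversions are assumptions rather than figures from the text.

```python
# Rough check of the Nautilus-versus-Verne comparison.
# Assumptions (not from the text): 1 league = 3 nautical miles,
# 1 nautical mile = 1.15078 statute miles, logged distance in statute miles.
NAUTICAL_MILES_PER_LEAGUE = 3
STATUTE_MILES_PER_NAUTICAL_MILE = 1.15078


def leagues_to_statute_miles(leagues: float) -> float:
    """Convert leagues to statute miles under the assumptions above."""
    return leagues * NAUTICAL_MILES_PER_LEAGUE * STATUTE_MILES_PER_NAUTICAL_MILE


verne_miles = leagues_to_statute_miles(20_000)   # distance in Verne's title
nautilus_miles = 500_000                         # distance quoted in the text
ratio = nautilus_miles / verne_miles

print(f"20,000 leagues is about {verne_miles:,.0f} statute miles")
print(f"Nautilus logged about {ratio:.1f} times that distance")
```

Under these assumptions the ratio comes out a little above seven, in line with the text. Reading the logged distance as nautical miles instead would push the ratio above eight.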

With the realities of nuclear aging long ignored and the decommissioning can continuously kicked down the road, today’s fleet of 40- to 60-year-old reactors is showing its age. The WNA estimates that at least 100 plants will be shut down by 2040,67 although in early 2021 the US Nuclear Regulatory Commission called for American reactor lifetimes to be extended to 100 years (“Life Beyond Eighty”), increasing the threat to public safety and the likelihood of an accident. Despite ongoing concerns about cost, safety, waste disposal, and what to do when a reactor is no longer fit for service, 60 new reactors are under construction in 16 countries (half in China and India), roughly 100 are on order or planned (with a total capacity over 100 GW), and more than 300 more have been proposed.68

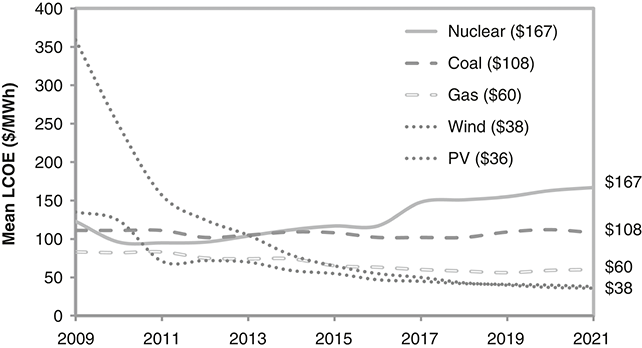

Large capital costs are also a deterrent for would-be operators, particularly when amortized over decades or inflated by overruns from delayed site licensing, which can take years depending on local opposition. Nuclear NIMBY (“not in my backyard”) is a powerful force against building a nuclear plant, especially after the headline-grabbing accidents of Three Mile Island (1979), Chernobyl (1986), and Fukushima (2011). No new nuclear power plants have been built in the USA since 1979, although two reactors under construction in Georgia (Vogtle 3 and Vogtle 4) are expected online soon according to the latest revised estimates.

To make up for the shortfall as old units go offline and no new replacement reactors are built, more energy is being squeezed out of existing reactors by running them at a rating higher than the original design (called “uprating”); 149 of 150 uprating requests in the USA have been approved.69 Uprating and less downtime have kept the overall percentage of nuclear power in the United States at around 20% over the last 40 years despite an ever-growing population and no new reactors being built on American soil in four decades.

***

Although the physics is basically the same, new reactor designs have been employed to improve safety. Gen-III or “advanced” nuclear power reactors are fitted with “passive” safety features (for example, natural water convection) designed to work without electricity in the event of a failure. Similar features have been retroactively added to the older Gen-I and Gen-II reactors to avoid a Fukushima-type loss of power and coolant. EDF’s EPR, Hitachi’s ABWR, Westinghouse’s AP1000, and KEPCO’s APR1400 are essentially Gen-III upgrades of older Gen-II reactors.

Experimental Gen-IV reactors are also in various stages of development, such as the very-high-temperature reactor (VHTR) and molten salt reactor (MSR) designs. A VHTR is a helium-gas-cooled, graphite-moderated reactor with an exceedingly high coolant temperature over 1,000°C that uses tri-isotropic (TRISO)-coated particle fuel, tiny particles with a uranium, carbon, and oxygen kernel that are packed into billiard-ball-sized pebbles, hence the name “pebble-bed reactor” (PBR). The reaction heat can also be used to generate the high temperatures required in the chemical, oil, and iron industries or to produce hydrogen. MSRs employ molten-salt cooling that circulates at a lower temperature and pressure to run in a simpler, less-expensive reactor design. Trials are starting up in China and the USA. Advanced fuel types such as thorium, plutonium, and mixed oxide (MOx) have also been proposed.

Small-scale nuclear reactors are also being designed to limit construction costs that can run to $10 billion per gigawatt or more, not including overruns. The so-called SMRs – small modular reactors – have lower power ratings of up to 350 MW, need less upfront financing, are built in sections in a factory and shipped to the site for easy assembly (nuclear IKEA!), and require fewer operators and less maintenance. Those cooled with sodium don’t need water and thus aren’t restricted to coastal or riverside sites. To expand, just add more, although the average cost is still high without any obvious economy of scale.

One proposed “micro-reactor” was the Toshiba 4S (Super-Safe, Small, and Simple), a 10-MW cigar-shaped, liquid-sodium-cooled design that runs on more highly enriched fuel (19.9% U-235) and uses a fast-neutron graphite reflector to control the reaction (fission stops upon opening the reflector). The coolant circulates via electromagnetic pumps, while more fuel is burnt than in a conventional nuclear reactor, producing less waste and less plutonium. The underground setup also increases safety in the event of an accident, and the reactor is meant to run for 30 years with minimal supervision, although weapons-grade uranium can more easily be made from the higher-enriched uranium. Especially suited to remote areas, a 4S mini-reactor was planned for Galena, Alaska, a 500-strong town on the Yukon River with only 4 hours of daylight in the depths of winter, where customers pay well above the going price for diesel. Alas, the Galena project was cancelled and no commercial mini-nukes have yet been built as the search continues to make nuclear power safe and affordable.

Funded by Microsoft co-founder Bill Gates, TerraPower is investing in small-scale nuclear, such as the traveling wave reactor (TWR), a prototype, liquid-sodium-cooled, fast-breeder “Natrium” reactor that uses fertile depleted uranium for fuel. Fertile material is not fissile on its own, but can be converted into fissile fuel by absorbing neutrons within the reactor; for example, U-238 first absorbs a neutron to become U-239, which beta-decays to Np-239 and then to fissile Pu-239. A small amount of concentrated fissile U-235 “initiator” fuel is needed to start a breeder reactor, as in a traditional nuclear reactor, before the fertile U-238 atoms absorb enough neutrons to begin producing Pu-239. Other fertile/fissile fuel combinations are possible besides the U-238/Pu-239 cycle, such as thorium-232 and uranium-233 in a Th-232/U-233 cycle. A steady “breed-burn” wave is maintained by moving the fuel within the reactor to ensure a constant neutron flux.
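Written out as nuclear reactions, the two breeding cycles named above look as follows. The half-lives under the arrows are standard approximate values that are not given in the text, and the block assumes the `amsmath` package for `align*` and `\xrightarrow`.

```latex
\begin{align*}
{}^{238}_{92}\mathrm{U} + n &\longrightarrow {}^{239}_{92}\mathrm{U}
  \xrightarrow[\text{23.5 min}]{\beta^-} {}^{239}_{93}\mathrm{Np}
  \xrightarrow[\text{2.4 days}]{\beta^-} {}^{239}_{94}\mathrm{Pu} \\
{}^{232}_{90}\mathrm{Th} + n &\longrightarrow {}^{233}_{90}\mathrm{Th}
  \xrightarrow[\text{22 min}]{\beta^-} {}^{233}_{91}\mathrm{Pa}
  \xrightarrow[\text{27 days}]{\beta^-} {}^{233}_{92}\mathrm{U}
\end{align*}
```

In both chains the neutron capture raises the mass number by one, and each beta decay then raises the atomic number by one while leaving the mass number unchanged, which is how a fertile nucleus ends up two elements higher as fissile fuel.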

Such low-pressure, non-light-water reactors (NLWRs) have never been proven, however, and were wryly dismissed by one economist as “PowerPoint reactors – it looks nice on the slide but they’re far from an operating pilot plant. We are more than a decade away from anything on the ground.”70 Safety and maintenance are also concerns. Hyman Rickover avoided sodium-cooled reactors for the US navy because of high volatility, leaks, radiation exposure, and excess repair time, reporting to Congress in 1957 that “Sodium becomes 30,000 times as radioactive as water. Furthermore, sodium has a half-life of 14.7 hours, while water has a half-life of about 8 seconds.”71
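Rickover’s half-life comparison can be made concrete with the standard exponential decay law, in which the remaining fraction after time t is 2^(−t/T) for a half-life T. The sketch below uses the two half-lives from his quote; the 1/1000 target and the function names are illustrative choices, not anything from the source.

```python
import math


def fraction_remaining(elapsed: float, half_life: float) -> float:
    """Fraction of the starting activity left after `elapsed` (same units as half_life)."""
    return 0.5 ** (elapsed / half_life)


def time_to_decay(target_fraction: float, half_life: float) -> float:
    """Time needed for activity to fall to `target_fraction` of its starting value."""
    return half_life * math.log2(1.0 / target_fraction)


SODIUM_HALF_LIFE_H = 14.7  # hours, as quoted by Rickover
WATER_HALF_LIFE_S = 8.0    # seconds, as quoted by Rickover

# Illustrative target: wait until activity has dropped a thousandfold.
sodium_wait_h = time_to_decay(1 / 1000, SODIUM_HALF_LIFE_H)
water_wait_s = time_to_decay(1 / 1000, WATER_HALF_LIFE_S)

print(f"Sodium coolant: about {sodium_wait_h:.0f} hours ({sodium_wait_h / 24:.1f} days)")
print(f"Water coolant:  about {water_wait_s:.0f} seconds")
```

A thousandfold drop takes roughly ten half-lives, so the activated sodium needs about six days where water needs little more than a minute, which helps explain the excess repair time Rickover complained about.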

Nonetheless, in 2021, a Chinese research group in Wuwei, Gansu, completed construction of the first molten-salt reactor since Oak Ridge National Laboratory’s 7-MW, U-233-fueled, molten-salt test device that was shut down in 1969 after 5 years.72 Expected to produce only 2 MW and fueled for the first time with thorium, the Chinese MSR could take a decade to scale up to a working 300-MW commercial reactor. TerraPower is also hoping to build a $4 billion, 345-MW, depleted-uranium demonstration reactor in the coal-mining town of Kemmerer, Wyoming (population 2,656), which could be up and running by 2030 subject to the testing process.73 Half of the seed money is coming from the US Department of Energy, angering critics of the unproven technology, while no long-term, waste-disposal solutions were included in the plan.

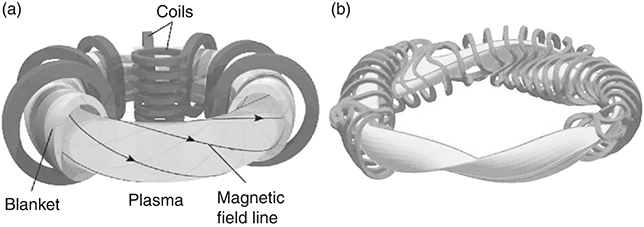

Today, the only operational SMR is a floating reactor that brings nuclear power to the consumer. In 2018, Russia’s state nuclear power company, Rosatom, started up the Akademik Lomonosov, a $480 million, 70-MW, barge-mounted, pressurized LWR, held in place by tether to a wharf behind a storm- and tsunami-safe breakwater in Kola Bay near the northern city of Murmansk. Because the unit was factory-assembled and mass-produced in a shipyard, costs were reduced by about one-third and construction time by more than half. The plant provides district heating to the town of Pevek via steam-outlet heat transfer; when asked about a possible radiation leak or accident, one resident replied, “We try not think about it, honestly.”74
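To put the price tags quoted in this section on a common footing, the sketch below converts each to billions of dollars per gigawatt. It uses only figures from the text ($10 billion per gigawatt for conventional construction, $4 billion for the 345-MW Kemmerer demonstration reactor, $480 million for the 70-MW Akademik Lomonosov). The labels are illustrative, and the comparison is rough because the figures mix planned and built projects from different years and countries.

```python
# Normalize quoted project costs to billions of US dollars per gigawatt.
# Each entry is (cost in $ billions, capacity in MW), taken from the text.
projects = {
    "Conventional benchmark": (10.0, 1000.0),
    "TerraPower Kemmerer demo": (4.0, 345.0),
    "Akademik Lomonosov": (0.48, 70.0),
}


def cost_per_gw(cost_billion_usd: float, capacity_mw: float) -> float:
    """Cost in $ billions per gigawatt of capacity."""
    return cost_billion_usd / (capacity_mw / 1000.0)


normalized = {name: cost_per_gw(cost, mw) for name, (cost, mw) in projects.items()}

for name, value in normalized.items():
    print(f"{name:26s} ${value:5.1f} billion per GW")
```

On these numbers the planned Kemmerer reactor comes out somewhat above the conventional benchmark per gigawatt and the floating plant somewhat below it, consistent with the earlier point that small reactors lower the upfront bill without any obvious economy of scale.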