The chapters in this book have explored how privacy commons, understood as the sharing and use of personal information, are governed, as well as how information subjects are sometimes excluded from governance. Our previous two books, Governing Medical Knowledge Commons (2017) and Governing Knowledge Commons (2014), collected case studies of commons governance aimed at promoting and sustaining innovation and creativity by sharing and pooling knowledge. While personal information is often shared and pooled for similar purposes, it is distinctive in several important respects. First and foremost, personal information is inherently about someone, who arguably has a particularized stake in the way that information is shared, pooled and used. This relationship means that privacy commons governance may be ineffective, illegitimate or both if it does not appropriately account for the interests of information subjects. Second, personal information is often shared unintentionally or involuntarily as a side effect of activities aimed at other goals, possibly creating a schism between those seeking to pool and use personal information and the individuals most intimately tied to it. Third, in our current technological era, personal information often flows via commercial communication infrastructure. This infrastructure is owned and designed by actors whose interests may be misaligned or in conflict with the interests of information subjects or of communities seeking to pool, use and manage personal information for common ends. Finally, governing the flow of personal information can be instrumental and often essential to building trust among members of a community, and this can be especially important for communities interested in producing and sharing knowledge.

As the chapters in this volume illustrate, the distinctive characteristics of personal information have important implications for the observed features of commons governance and, ultimately, for legitimacy. Taken together, the studies in this volume thus deepen our understanding of privacy commons governance, identify newly salient issues related to the distinctive characteristics of personal information, and confirm many recurring themes identified in previous GKC studies.

Voice-shaped, Exit-shaped and Imposed Patterns in Commons Governance of Personal Information

To organize some of the lessons that emerge from the GKC analysis of privacy, we harken back to patterns of governance that we identified in our privacy-focused meta-analysis of earlier knowledge commons studies (Sanfilippo, Frischmann and Strandburg, 2018). Though those earlier case studies were neither selected nor conducted using a privacy lens, the meta-analysis identified three patterns of commons governance: member-driven, public-driven and imposed. We observe similar patterns in the privacy-focused case studies gathered here. Reflecting on these new cases allows us to refine our understanding of these governance patterns in three respects. These refinements inform the analyses in sub-sections 1.1, 1.2 and 1.3, which illustrate and systematize some of the important patterns that we observe.

First, we hone our understanding of these patterns by drawing on A. O. Hirschman’s useful conceptions of ‘voice’ and ‘exit’ as distinctive governance mechanisms. What we previously termed ‘member-driven’ commons governance is characterized by the meaningful exercise of participant ‘voice’ in governing the rules-in-use (Gorham et al., 2020). Even when participants do not have a direct voice in governance, however, they may exert indirect influence by ‘voting with their feet’ as long as they have meaningful options to ‘exit’ if they are dissatisfied. The governance pattern that we previously characterized as ‘public-driven’ is associated with just such opt-out capacity, which drives those with direct authority to take participants’ governance preferences into account – it is in this sense ‘exit-shaped’. Commons governance is ‘imposed’ when participants have neither a direct ‘voice’ in shaping rules-in-use nor a meaningful opportunity to ‘exit’ when those rules are not to their liking.

Second, as discussed in the Introduction to this volume, personal information can play two different sorts of roles in knowledge commons governance. Most obviously, as reflected in the cases studied in Chapters 2 through 5, personal information is one type of knowledge resource that can be pooled and shared. For example, personal health information from patients may be an important knowledge resource for a medical research consortium. In these cases, privacy is often an important objective to information subjects, as actors who may or may not be adequately represented in commons governance. But even when personal information is not pooled as a knowledge resource, the rules-in-use governing how personal information flows within and outside of the relevant community can have important implications for sustaining participation in a knowledge commons and for the legitimacy of its governance. Chapters 5 through 7 analyse this sort of situation. Either sort of privacy commons can be governed according to any of the three patterns we previously identified. Moreover, and independently, privacy commons governance can also be distinguished according to the role played by information subjects because personal information about one individual can be contributed, disclosed or collected by someone else. Thus, members who have a voice in commons governance might use personal information about unrepresented non-members to create a knowledge resource. Similarly, participants who opt to contribute to a knowledge commons might contribute information about non-participants who have neither a voice in the governance of their personal information nor any ability to opt out of contributing it. And, of course, imposed commons governance might be designed to force participants to contribute personal information ‘without representation’.

Third, we note that even the more nuanced taxonomy presented here papers over many grey areas and complexities that are important in real-world cases. Governance patterns reside on a continuum in, for example, the extent to which governance institutions empower particular individuals and groups. Moreover, most shared knowledge resources are governed by overlapping and nested institutions that may follow different patterns. The often polycentric nature of resource governance, involving overlapping centres of decision-making associated with different actors, often with different objectives and values, is well-recognized in studies of natural resource commons (e.g. McGinnis, 1999; Ostrom, 1990). Polycentricity is equally important in knowledge commons governance. Thus, the rules-in-use that emerge in any given case may have complex origins involving interactions and contestation between different groups of commons participants and between commons governance and exogenous background institutions. Different aspects of a case may exhibit different governance patterns. Moreover, some participants may have a voice in shaping certain rules-in-use, while others encounter those same rules on a take-it-or-leave-it basis. This polycentricity means that some cases appear in multiple categories in the analysis that follows.

We also emphasize that our categorization of voice-shaped, exit-shaped and imposed commons governance is descriptive. The normative valence of any commons activity depends on its overall social impact. Thus, different governance patterns may be normatively preferable for different knowledge commons or even for different aspects of the same knowledge commons. In particular, as we explain below, any of the three governance patterns can be implemented in a way that accounts adequately or inadequately for the interests and concerns of personal information subjects. For example, while imposed commons governance associated with commercial infrastructure is notoriously unresponsive to information subject concerns, government-imposed commons governance often aims to bring the interests of information subjects into the picture.

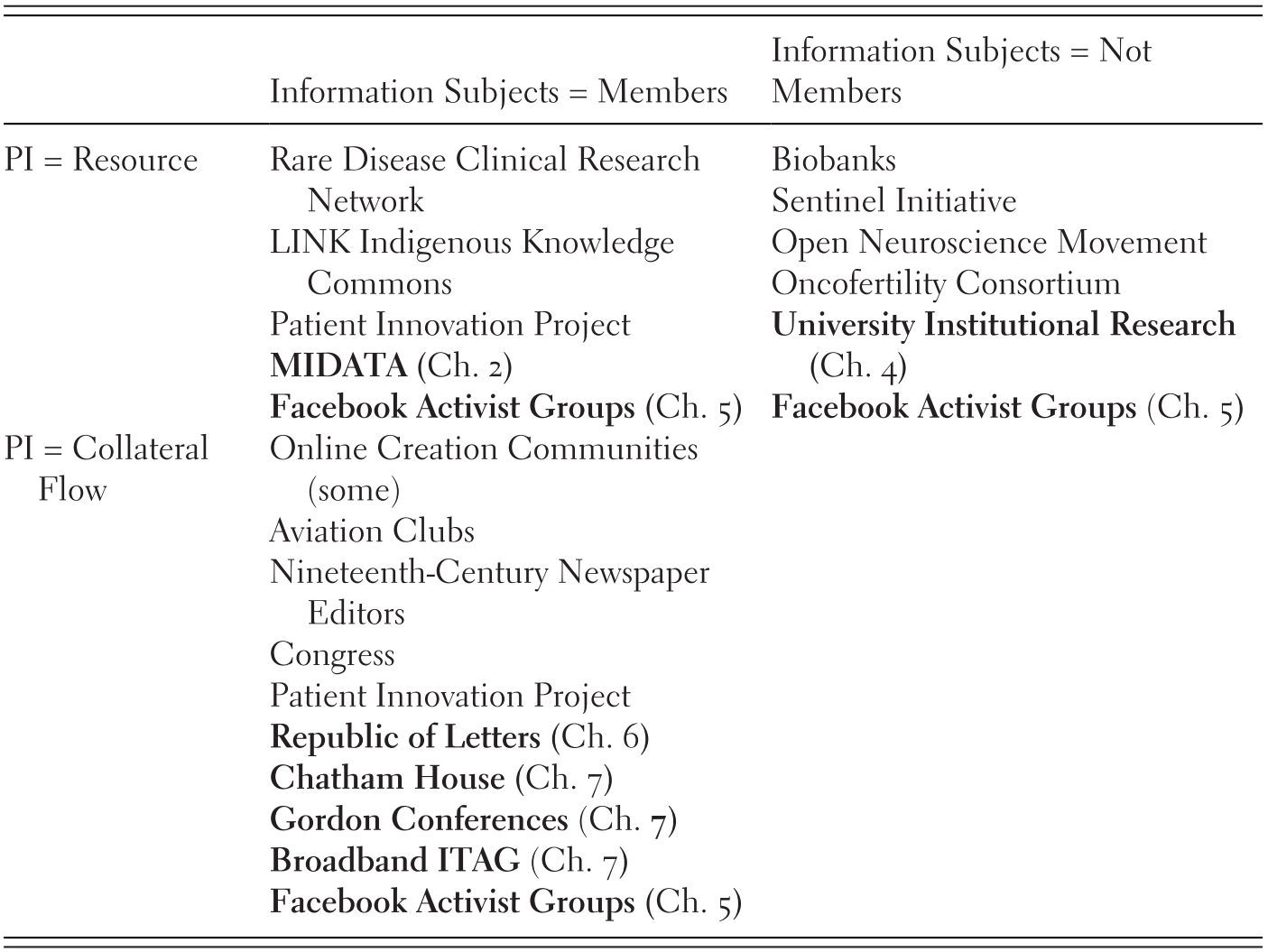

Voice-shaped Commons Governance

In the voice-shaped governance pattern, those creating and using knowledge resources are also responsible for their governance. The success of voice-shaped commons arrangements depends on governance that encourages reciprocal contribution for a mutually beneficial outcome. Chapters 2, 4, 5, 6 and 7 in this book describe cases characterized at least in significant part by voice-shaped governance of personal information. In Chapters 4, 5 and 6 this voice-shaped governance is mostly informal, while Chapters 2 and 7 describe more formal governance structures. Cases exhibiting voice-shaped commons can be further characterized as illustrated in Table 11.1, which employs the distinctions based on source and use of personal information described above to categorize cases from this volume and from our earlier meta-analysis.

Table 11.1 Voice-shaped commons breakdown

(Case studies in this volume are in bold)

| | Information Subjects = Members | Information Subjects = Not Members |
|---|---|---|
| PI = Resource | | |
| PI = Collateral Flow | | |

As illustrated in the top row of Table 11.1, voice-shaped commons governance is sometimes applied to create and manage a pool of personal information as a resource. In the cases listed in the upper left quadrant, members participate in governance of knowledge resources created by pooling their own personal information. That quadrant includes medical commons in which patients or their representatives have a direct voice in commons governance, including the MIDATA case explored in Chapter 2 and earlier-studied RDCRN cases; the previously studied LINK Indigenous Knowledge Commons, in which representatives of indigenous groups participate in governing information resources that they view as intimately related to their communities; and some aspects of the Facebook activist groups explored in Chapter 5.

In the cases listed in the upper right quadrant, members govern knowledge resources they create by contributing other people’s personal information. In the previously studied medical cases in that quadrant, for example, patient information is governed by consortia of physicians and medical researchers without direct patient involvement. Similarly, in Chapter 4 of this volume, Jones and McCoy describe institutional research by university administrators using a pool of student personal information. Governance of the sharing and use of student information is largely voice-shaped, in that many of the rules-in-use are determined by university personnel who contribute and use the information. Crucially, however, the student information subjects are not members of this governing community.

The distinction is normatively significant. While members may have altruistic concerns for non-member information subjects or be bound, as in the medical and education contexts, by background legal or professional obligations to them, voice-shaped governance is no guarantee that the concerns of non-members will be adequately addressed. Indeed, the NIH included patient representatives as governing members in the Rare Disease Clinical Research Network as a condition of government funding following complaints that patient interests had not been sufficiently represented in earlier consortia made up entirely of physicians and researchers.

That said, governance without the direct participation of information subjects does not necessarily give members free rein to share and use other people’s personal information however they please. Personal health and education information, for example, is governed by applicable background privacy legislation, ethical rules and professional norms. Moreover, in some contexts commons members may be required to obtain the consent of information subjects before contributing their personal information to the pool. Consent, however, is not the same as participation in governance, a point we explore further below and in related work (Gorham et al.).

As illustrated in the bottom left quadrant of Table 11.1, voice-shaped commons governance may also be applied to collateral flows of members’ personal information that occur in conjunction with or as a by-product of creating some other sort of shared knowledge resource. Appropriate governance of such collateral flows of personal information can be important for encouraging participation, improving the quality of other sorts of knowledge resources the group creates and otherwise furthering the goals and objectives of voice-shaped commons governance. The cases in Chapter 7 by Frischmann et al. illustrate how constraints on the flow of members’ personal information to outsiders can incentivize diverse and open participation in creating other sorts of knowledge resources and improve their quality. Whether it is the Chatham House Rule’s incredibly simple prohibition on revealing the identity or affiliation of speakers or the more elaborate confidentiality rules adopted by the Broadband Internet Technical Advisory Group (BITAG), privacy governance fosters knowledge production and sharing by members. Madison’s Chapter 6 illustrates how informal norms against disclosing personal information in exchanges with other members created a venue for building a knowledge base through rational, scientific argument. The previously studied Patient Innovation Project similarly aims to create a pool of generalizable knowledge about medical innovations made by patients and caregivers, but personal information flows are an inevitable by-product of the sharing of innovations so intimately bound up with patients’ medical conditions. Though the Patient Innovation Project governs these collateral flows of personal information in part by platform design, as discussed in the next sub-section, sub-communities have also developed more tailored, voice-shaped information sharing norms.

The bottom right quadrant of Table 11.1 is empty, perhaps because collateral flow of non-member personal information that is not being pooled into a shared resource is rare.

The Facebook activist groups studied in Chapter 5 are included in three of the four quadrants in Table 11.1 because of the variety of personal information-based resources involved and the various ways in which intentional and collateral personal information flows affected participation in these groups. We can describe the governance of these pooled personal information resources and collateral flows as voice-shaped to the extent that contributors either participated actively in creating the mostly informal rules-in-use that emerged or viewed themselves as adequately represented by the groups’ more actively involved leaders and organizers. Voice-shaped governance was only part of the story for these Facebook activist groups, however, as discussed in the later sections on exit-shaped and imposed commons governance.

In these cases, personal information was contributed directly to shared knowledge resources by those who posted personal narratives to the public Facebook pages, contributed photos, joined Facebook groups or signed up for events or email lists. These pooled knowledge resources were used to further the group’s objectives by informing and persuading the public, facilitating communication of information to members and so forth. While much of this personal information pertained to the contributors, these cases are included in both left and right quadrants of the top row because it was also possible to contribute personal information pertaining to someone else. Indeed, this sort of behaviour occurred often enough that groups developed mechanisms for protecting potentially vulnerable non-participants from such disclosures through rules-in-use. These cases thus illustrate not only the potential for information subjects to be left out of voice-shaped governance, but also the fact that voice-shaped governance may nonetheless incorporate protections for non-members.

The Facebook activist groups of Chapter 5 are also represented in the bottom left quadrant of Table 11.1 because they adopted rules-in-use governing collateral personal information flow arising, for example, from the metadata identifying those who posted to the Facebook pages and the interactions between organizers behind the scenes. In some ways, the various interactions between personal information and participation parallel patterns observed within the Patient Innovation Project, a previous case study. With respect to Patient Innovation, however, personal information as a resource or as collateral flows always pertained to members, rather than non-member information subjects.

Exit-shaped Commons Governance

Exit-shaped commons governance, as we identified in Chapter 1, occurs when an individual or group creates an infrastructure for voluntary public participation in creating a shared knowledge resource. It thus differs from voice-shaped governance in that contributors to the knowledge resource do not participate directly in its governance. The key characteristic that distinguishes exit-shaped commons governance from imposed governance is that contributions are meaningfully voluntary. As a result, whoever governs the shared knowledge resource must do so in a way that will attract participants.

The characteristics of personal information surface distinctions among cases of exit-shaped commons governance similar to those we observed for voice-shaped governance, as illustrated in Table 11.2.

Table 11.2 Exit-shaped commons breakdown

(Case studies in this volume are in bold)

| | Information Subjects = Public participants | Information Subjects = Others |
|---|---|---|
| PI = Resource | **Facebook Activist Groups (Ch. 5)**; **IoT (Ch. 9)** | |
| PI = Collateral Flow | Online creation communities; Galaxy Zoo; Patient Innovation Project; **Facebook Activist Groups (Ch. 5)** | |

Before delving into the distinctions between cases in the different quadrants in Table 11.2, we focus on common features of exit-shaped commons governance. Most importantly, given that participation is meaningfully voluntary, designers of exit-shaped commons governance must ensure that potential participants will find it worth their while to contribute. As a result, even though contributors do not participate directly in governance, designers of exit-shaped commons cannot stray too far out of alignment with their interests. Trust is important. So, setting aside personal information for the moment, the need to attract participants means that the mental health chatbot must offer mental health assistance that, all things considered, is at least as attractive as alternatives. Galaxy Zoo and many online creation communities have adopted rules favouring non-commercial use of their (non-personal) knowledge resources, presumably because potential contributors find those policies attractive. More limited forms of democratic participation adopted by some online communities may have served similar purposes.

Turning more specifically to the exit-shaped commons governance of personal information, Table 11.2, like Table 11.1, lists cases aiming to create a pool of personal information in the top row and cases involving only personal information flow collateral to other sorts of activities in the bottom row.

The Woebot mental health chatbot described by Mattioli in Chapter 3 appears in the top left quadrant because it creates a pool of personal information contributed by patients as they use the app. By using a therapy chatbot, patients receive mental health assistance, while simultaneously contributing their personal health information to a knowledge pool that can be used by the app’s creators to improve its performance. Based on the analysis in Chapter 3, we categorize the governance of the personal information collected by the Woebot chatbot as exit-shaped. Governance of these personal information resources is not voice-shaped because it is physicians, not patients, who control the design of the app and the use of the associated personal information. Use of these chatbots, and the associated information pooling, does, however, currently appear to be meaningfully voluntary. Patients seem to have many viable alternative treatment options. Moreover, the chatbot’s physician designers appear to have transparently committed to using the resulting knowledge pool only for research purposes and to improve the app’s operation. It thus seems plausible that patients using the chatbot understand the ramifications of the chatbot’s collection of their personal information, because interesting rules-in-use operationalize this intent in ways that align with patient expectations.

We categorize the Facebook activist groups discussed in Chapter 5 under exit-shaped governance, as well as voice-shaped governance. Informal governance by trusted leaders is a recurring theme in knowledge commons governance. Nonetheless, participation in these movements was so broad that it is virtually inevitable that some participants – especially those who joined at a later stage – experienced the rules-in-use and governance as essentially ‘take it or leave it’. Like the more involved members discussed earlier, such participants could have posted personal information pertaining to themselves or to others. These groups were extremely successful in attracting large numbers of participants who contributed various sorts of personal information. While this success presumably reflects some satisfaction with the rules-in-use for personal information, later joining participants may not have viewed their choice to participate in these particular groups as entirely voluntary. As these groups became foci for expressing certain political views, their value undoubtedly rose relative to alternative protest avenues. This rich-get-richer phenomenon thus may have tipped the balance toward imposed governance, as discussed in the next sub-section.

The rules-in-use for collecting and employing personal information about users of Internet of Things (IoT) devices are largely determined by the commercial suppliers of ‘smart’ devices. The survey study by Shvartzshnaider et al., reported in Chapter 9, suggests that some device users have a sufficient understanding of the way that their personal information is collected and used by IoT companies that their decision to opt in by purchasing and using a given device or to opt out by not doing so is meaningfully voluntary. For this subset of users, the governance of IoT personal information resources may be categorized as exit-shaped and entered into the top left quadrant of Table 11.2. Notably, however, those users’ choices to opt in may also result in the collection of personal information from bystanders, guests and others who have made no such choice. We thus also categorize the IoT in the top right quadrant of Table 11.2. Much as for mental health chatbots, diminishing opportunities for meaningful exit amid pervasive surveillance environments oriented around IoT may disempower users, tipping governance from exit-shaped to imposed, as we will discuss in the next sub-section. On the other hand, one very interesting observation of the Shvartzshnaider et al. study is that online IoT forums allow users to pool their experiences and expertise to create knowledge resources about personal information collection by smart devices and strategies to mitigate it (at least to some degree). Those forums may thus empower consumers and expand the extent to which the governance of personal information resources collected through the IoT is exit-shaped.

The cases in the bottom row of Table 11.2 involve exit-shaped governance of collateral flows of personal information associated with the creation of other sorts of knowledge resources. Galaxy Zoo and the online creation community cases identified in our earlier meta-analysis both fall into this category. We observed in our earlier meta-analysis that those systems governed the collateral flow of personal information, at least in part, by allowing anonymous or pseudonymous participation. Nonetheless, though anonymity was the norm, participants were not discouraged from strategically revealing personal information on occasion in order to establish credibility or expertise. This set of rules presumably encouraged public participation by protecting participants from potentially negative effects of exposing their personal information publicly online while still allowing them to deploy it strategically in ways that benefitted them and may have improved the quality of the knowledge resource. The Patient Innovation Project similarly involves collateral flows of personal information intertwined with information about medical innovations developed by patients and caregivers, though its rules-in-use are different. Though sub-community governance is partially voice-shaped, as discussed above, much of the governance of personal information flows depends on platform design and is thus categorized as exit-shaped.

As noted in the previous section, the Facebook activist groups discussed in Chapter 5 also developed rules-in-use to govern collateral flows of personal information associated with the creation of other sorts of knowledge resources. To the extent those rules-in-use applied to contributors who were not adequately represented in governance, they also constitute exit-shaped commons governance.

Notably, all of the previously studied cases in Table 11.2 appear in the bottom row and involved the creation of general knowledge resources not comprised of personal information. These previously studied knowledge commons were also designed to make the knowledge resources they created openly available. For these earlier cases, the designation ‘public-driven’ may have been ambiguous, conflating openness to all willing contributors with public accessibility of the pooled information or public-generated data sets. The studies categorized in the top row of Table 11.2 clarify that there is a distinction. When we speak of exit-shaped commons governance, we mean openness regarding contributors.

We thus emphasize again the importance of meaningful voluntariness as the key characteristic of exit-shaped commons governance. If participation is not meaningfully voluntary, commons governance becomes imposed, rather than exit-shaped – a very different situation, which we discuss in the next section. Meaningful voluntariness means that potential contributors have meaningful alternatives as well as a sufficient grasp of the ramifications of contributing to the knowledge pool. Exit-shaped commons governance must therefore be designed to attract contributors in order to succeed. The need to attract contributors forces governance to attend sufficiently to participants’ interests. We do not, therefore, expect rules-in-use of open accessibility to emerge from exit-shaped commons governance of personal information pools because open availability would be likely to deter, rather than attract, potential contributors. In exit-shaped commons governance, rules-in-use regarding access to pooled resources are tools that designers can shape to attract participation. We would thus expect access rules to vary depending on the sorts of personal information involved and the goals and objectives of both potential participants and designers.

Of course, while meaningful voluntariness is the key to categorizing governance as exit-shaped, it is no guarantee of success. For example, one could imagine a version of the mental health chatbot that was completely transparent in its intentions to sell mental health information to advertisers or post it on the dark web. That sort of governance would be sufficiently voluntary to be classified as exit-shaped, but highly unlikely to attract enough participants to succeed.

Finally, it is important to note that while exit-shaped commons governance gives contributors some indirect influence over the rules-in-use, it does nothing to empower individuals whose personal information is contributed by others. Thus, cases in the upper right quadrant of Table 11.2 raise the same sorts of privacy concerns as cases in the upper right quadrant of Table 11.1. Just as member-driven governance may fail to attend to the interests of non-member information subjects, designers of exit-shaped governance may fail to attend to the interests of individuals whose personal information can be obtained without their participation.

Imposed Commons Governance

Imposed commons governance is similar to exit-shaped commons governance in that knowledge contributors do not have a voice in the rules-in-use that emerge, but differs significantly because contributors also do not opt for imposed governance in any meaningfully voluntary way. In other words, to the extent commons governance is imposed, contributors and information subjects alike lack both voice and the option to exit. While there is no bright line between voluntarily accepted and mandatory governance, one practical result is that imposed commons governance, unlike exit-shaped governance, need not be designed to attract participation. Thus, though designers might choose to take the interests and preferences of contributors into account, they need not do so.

Those with decision-making power over rules and governance are not always or necessarily the information subjects. Communities can include different membership groups and subgroups, and can rely on different existing infrastructures and technologies for collecting, processing and managing data. Governance associated with these infrastructures and external platforms is determined by design, by commercial interests and through regulation, and will vary accordingly. Externally imposing commons governance requires power of some sort that effectively precludes contributors from opting out of participation. Such power may arise from various sources and can reside in either government or private hands.

One important source of power to impose commons governance over personal information is control and design of important infrastructure or other input resources needed to effectively create and manage the desired knowledge resources. This power is often associated with infrastructure because of network and similar effects that reduce the number of viable options. The Facebook activist groups study in Chapter 5 provides a good example of this source of privately imposed commons governance. Organizers repeatedly noted that they were displeased with certain aspects of Facebook’s platform design and treatment of contributors’ personal information. For these reasons, all three activist groups resorted to alternative means of communication for some purposes. Nonetheless, all concluded that they had no reasonable alternative to using Facebook as their central platform for communicating, aggregating and publicizing information. This example illustrates that complete market dominance is not required to empower a party to impose commons governance to some degree.

Another important source of imposed governance is the law, which is part of the background environment for every knowledge commons arrangement. (Of course, in a democracy, citizens ultimately create law, but on the time frame and scale of most knowledge commons goals and objectives, it is reasonable to treat legal requirements as mandatory and externally imposed.) Applicable law can be general or aimed more specifically at structuring and regulating the creation of particular knowledge resources. To create a useful categorization, we treat only the latter sorts of legal requirements as imposed commons governance. Thus, for example, while acknowledging the importance of HIPAA and other health privacy laws, we do not classify every medical knowledge commons as involving imposed commons governance. We do, however, classify the specific government mandates of the previously studied Sentinel program as imposed governance. The power to impose governance through law is, of course, limited to governments. However, there are parallels in corporate policies that operate as strictly enforced rules when imposed on employees and teams.

Commons governance may also be imposed through the power of the purse. For example, while medical researchers are not literally forced to accept government conditions on funding, such as those associated with the Rare Disease Clinical Research Network, their acceptance of those conditions is not meaningfully voluntary in the sense that matters for our categorization. While researchers could in principle rely entirely on other funding sources or choose a different occupation, the paucity of realistic alternatives empowers funding agencies to impose commons governance. Indeed, while there more often are viable funding alternatives in the private sector, large private funders may have similar power to impose governance in some arenas.

Collecting knowledge resources by surveillance is another way to elude voluntary exit and thus impose commons governance. Both governments and some sorts of private entities may be able to impose governance in this way. Many ‘smart city’ activities create knowledge resources through this sort of imposed governance. Private parties exercise this sort of power when they siphon off information that individuals contribute or generate while using their products or services for unrelated purposes. Internet giants such as Facebook and Google are notorious for pooling such information for purposes of targeting ads, but a universe of smaller ad-supported businesses also contribute to such pools. More recently, as discussed in Chapters 8 and 9, the IoT provides a similar source of private power to impose commons governance. Governments can accomplish essentially the same sort of thing by mandating disclosure. The earlier case study of Congress provides an interesting example of the way that open government laws create this sort of imposed commons governance.

Commons governance can also be imposed through control or constraint over contributor participation. This source of power can be illustrated by a thought experiment based on the mental health chatbots studied in Chapter 3. Mattioli’s study suggests that patients’ contributions of personal health information by using the current version of Woebot are meaningfully voluntary. If, however, a mental health chatbot’s use were to be mandated or highly rewarded by insurance companies, its governance pattern would shift from exit-shaped to imposed. A less obvious example of this type of power comes from the Facebook activist group study. While there might initially be several different groups vying to organize a national protest movement, as time goes on potential participants will naturally value being a part of the largest group. At some point, this rich-get-richer effect can implicitly empower the most popular group to impose its rules-in-use on later joiners.

Finally, and in a somewhat different vein, power to impose commons governance can stem from a party’s ability to undermine contributor voluntariness by misleading individuals about the implications of contributing to a knowledge pool or using particular products or services. This concern has long been central to privacy discourse, especially in the private realm. Empirical studies have convinced many, if not most, privacy experts that privacy policies and similar forms of ‘notice and consent’ in the commercial context ordinarily do not suffice to ensure that participants understand the uses to which their personal information will be put. Facebook is only one prominent example of a company that has been repeatedly criticized in this regard. As another illustration, consider how the voluntariness of patients’ use of the mental health chatbot would be eroded if its pool of personal information came under the control of private parties who wanted to use it to target advertising or for other reasons unrelated to improving mental health treatment. If the implications of such uses were inadequately understood by patients, the chatbot’s governance pattern might well shift from exit-shaped to imposed.

Table 11.3 lists cases that involve significant imposed governance. In most of these cases, imposed governance of some aspects of commons activity is layered with voice-shaped or exit-shaped governance of other aspects. The distinctions in Tables 11.1 and 11.2 based on information subjects’ role in governance and on whether pooling personal information is a knowledge commons objective are less salient for categorizing imposed governance in which both contributors and information subjects have neither voice nor the capacity to exit. Instead, the columns in Table 11.3 distinguish between cases in which governance is imposed by government and cases in which it is imposed by private actors, while the rows differentiate between rules-in-use associated with actors and knowledge resources, including contribution, access to, and use of personal information resources. Overall, though governments must balance many competing interests and are not immune to capture, one would expect government-imposed governance to be more responsive than privately imposed governance to the concerns of information subjects.

With respect to imposed governance, it is also important to note that some of these cases highlighted efforts to contest these constraints when they did not align with information subjects’ norms and values. Many of the efforts to create more representative rules-in-use or workarounds developed within existing knowledge commons, as with the activists on Facebook (Chapter 5). Yet, occasionally, communities of information subjects emerged for the sole purpose of pooling knowledge about exit or obfuscation. For example, sub-communities of IoT users who want to assert more control over the pools of user data generated through their use of smart devices have formed through online forums. These users, rather than pooling personal information, create a knowledge resource aimed at helping other users decide more effectively whether or how to exit, as well as how to obfuscate the collection of personal information. In this sense, these forums allow information subjects, as actors, to cope with exogenously imposed governance by manufacturers, as well as publicly driven governance.

Privacy as Knowledge Commons Governance: Newly Emerging Themes

These new studies of privacy’s role in commons governance highlight several emerging themes that have not been emphasized in earlier Governing Knowledge Commons (GKC) analyses. In the previous section we reflected on the role of personal information governance in boundary negotiation and socialization; the potential for conflicts between knowledge contributors and information subjects; the potential adversarial role of commercial infrastructure in imposing commons governance; and the role of privacy workaround strategies in responding to those conflicts. Additional newly emerging themes include the importance of trust, the contestability of commons governance legitimacy, and the co-emergence of contributor communities and knowledge resources.

The Importance of Trust

Focusing on privacy and personal information flows reveals the extent to which the success of voice-shaped or exit-shaped commons governance depends on trust. Perhaps this is most obvious in thinking of cases involving voluntary contributions of personal information to create a knowledge resource. Whether commons governance is voice-shaped or exit-shaped, voluntary contribution must depend on establishing a degree of trust in the governing institutions. Without such trust, information subjects will either opt out or attempt to employ strategies to avoid full participation. Voice-shaped commons governance can create such trust by including information subjects as members. This is the approach taken by the Gordon Research Conferences, the BITAG, the MIDATA case and RDCRN consortia, for example. When a group decides to adopt the Chatham House Rule to govern a meeting, it creates an environment of trust. Exit-shaped commons governance must rely on other mechanisms to create trust. In the medical and education contexts, professional ethics are a potentially meaningful basis for trust. Trust might also be based in shared agendas and circumstances, as was likely the case for the informal governance of the Facebook activist groups. The studies in Chapters 6 and 7 illustrate the perhaps less obvious extent to which trust based on rules-in-use about personal information can be essential to the success of knowledge commons resources that do not incorporate personal information. This effect suggests that mechanisms for creating and reinforcing trust may be of very broad relevance to knowledge commons governance far beyond the obvious purview of personal information-based resources.

The Contestability of Commons Governance Legitimacy

These privacy-focused studies draw attention to the role of privately imposed commons governance, especially through the design of commercial infrastructure. Previous GKC studies that have dealt with imposed commons governance have focused primarily on government mandates, while previous consideration of infrastructure has been mostly confined to the benign contributions of government actors or private commons entrepreneurs whose goals and objectives were mostly in line with those of contributors and affected parties. These cases also highlight the potentially contestable legitimacy of commons governance of all three sorts and call out for more study of where and when commons governance is socially problematic and how communities respond to illegitimate governance. The issue of legitimacy also demands further attention, of the sort reflected in Chapters 8 through 10 of this volume, to policy approaches for improving the situation.

While GKC theory has always acknowledged the possibility that commons governance will fail to serve the goals and values of the larger society, previous studies have focused primarily on the extent to which a given knowledge commons achieved the objectives of its members and participants. Concerns about social impact focused mainly on the extent to which the resources created by a knowledge commons community would be shared for the benefit of the larger society. These privacy commons studies help to clarify the ways in which knowledge commons governance can fail to be legitimate from a social perspective. They underscore the possibility that knowledge commons governance can be illegitimate and socially problematic even if a pooled knowledge resource is successfully created. This sort of governance failure demands solutions that go beyond overcoming barriers to cooperation. Various types of solutions can be explored, including the development of appropriate regime complexes discussed by Shackelford in Chapter 8, the participatory design approach discussed by Mir in Chapter 9, the collaborative development of self-help strategies illustrated by the IoT forums discussed in Chapter 10, the imposition of funding requirements giving information subjects a direct voice in governance illustrated by the RDCRN, the development of privacy-protective technologies and infrastructures, and the imposition of more effective government regulation.

Co-emergence of Communities and Knowledge Resources

One of the important differences between the IAD and GKC frameworks is the recognition that knowledge creation communities and knowledge resources may co-emerge, with each affecting the character of the other. The privacy commons studies provide valuable illustrations of this general feature of knowledge commons, particularly in voice-shaped and some exit-shaped situations. In some cases, this co-emergence occurs because at least some participants are subjects of personal information that is pooled to create a knowledge resource. This sort of relationship was identified in earlier RDCRN case studies and is a notable feature of cases discussed in Chapters 2, 3 and 5. The Gordon Research Conferences and BITAG examples from Chapter 5 are especially clear illustrations. Even when the knowledge resource ultimately created by the community does not contain personal information, however, participants’ personal perspectives or experiences may be essential inputs that shape the knowledge resources that are ultimately created, as illustrated by the case studies discussed in Chapter 7 and in the earlier study of the Patient Innovation Project.

Privacy as Knowledge Commons Governance: Deepening Recurring Themes

The contributions in this volume also confirm and deepen insights into recurring themes identified in previous GKC studies (Frischmann, Madison and Strandburg, 2014; Strandburg, Frischmann and Madison, 2017). These privacy-focused studies lend support to many of those themes, while the distinctive characteristics of personal information add nuance, uncover limitations and highlight new observations which suggest directions for further research. Rather than re-visiting all of those earlier observations, this section first summarizes some recurring themes that are distinctively affected by the characteristics of personal information and then identifies some new themes that emerge from privacy commons studies.

Knowledge Commons May Confront Diverse Obstacles or Social Dilemmas, Many of Which are Not Well Described or Reducible to the Simple Free Rider Dilemma

When we developed the GKC framework more than ten years ago, our focus was on challenging the simplistic view that the primary obstacle to knowledge creation was the free rider dilemma, which had to be solved by intellectual property or government subsidy. We were directly inspired by Ostrom’s demonstration that private property and government regulation are not the sole solutions to the so-called tragedy of the commons for natural resources. It became immediately clear from our early case studies, however, not only that there were collective solutions to the free rider problem for knowledge production, but that successful commons governance often confronted and overcame many other sorts of social dilemmas. Moreover, these other obstacles and dilemmas were often more important to successful knowledge creation and completely unaddressed by intellectual property regimes. Considering privacy and personal information confirms this observation and adds some new twists.

Among the dilemmas identified in the earlier GKC studies, the privacy-focused studies in this volume call particular attention to:

Dilemmas attributable to the nature of the knowledge or information production problem.

As we have already emphasized, personal information flow and collection creates unique dilemmas because of the special connection between the information and its subjects, who may or may not have an adequate role in commons governance.

Dilemmas arising from the interdependence among different constituencies of the knowledge commons.

When personal information is involved, these sorts of dilemmas reappear in familiar guises, but also with respect to particular concerns about the role of information subjects in governance.

Dilemmas arising from (or mitigated by) the broader systems within which a knowledge commons is nested or embedded.

On the one hand, accounting for personal information highlights the important (though often incomplete) role that background law and professional ethics play in mitigating problems that arise from the lack of representation of information subjects’ interests in commons governance. On the other hand, it draws attention to the ways in which infrastructure design, especially when driven by commercial interests, can create governance dilemmas related to that lack of representation.

Dilemmas associated with boundary management.

The studies in this volume identify the important role that privacy governance can play in governing participation and managing access boundaries for knowledge commons, often even when the relevant knowledge resources do not consist of personal information.

Close Relationships Often Exist between Knowledge Commons and Shared Infrastructure

Earlier GKC case studies noted the important role that the existence or creation of shared infrastructure often played in encouraging knowledge sharing by reducing transaction costs. In those earlier studies, when infrastructure was not created collaboratively, it was often essentially donated by governments or ‘commons entrepreneurs’ whose goals and objectives were closely aligned with those of the broader commons community. While some studies of privacy commons also identify this sort of ‘friendly’ infrastructure, their most important contribution is to identify problems that arise when infrastructure owners have interests that conflict with the interests of information subjects. This sort of ‘adversarial infrastructure’ is often created by commercial entities and closely associated with the emergence of imposed commons governance. Undoubtedly, there are times when society’s values are best served by embedding and imposing governance within infrastructure in order to constrain some knowledge commons from emerging, in competition with sub-communities’ preferences; in these cases infrastructure operationalizes rules to prevent certain resources from being pooled or disseminated, such as by white supremacists or for terrorism, or the emergence of rules-in-use to prevent social harms, such as pornography. There is a special danger, however, that society’s values will not be reflected in private infrastructure that takes on the role of imposing commons governance, as many of the privacy commons studies illustrate.

Knowledge Commons Governance Often Did Not Depend on One Strong Type or Source of Individual Motivations for Cooperation

Earlier GKC case studies generally presumed that contributing to a knowledge commons was largely, if not entirely, a voluntary activity and that commons governance had to concern itself with tapping into, or supplying, the varying motivations required to attract the cooperation of a sometimes diverse group of necessary participants. Privacy commons studies turn this theme on its head by emphasizing the role of involuntary – perhaps even coerced – contribution. Thus, a given individual’s personal information can sometimes be contributed by others, obtained by surveillance or gleaned from other behaviour that would be difficult or costly to avoid. This possibility raises important questions about the legitimacy of commons governance that were not a central focus of earlier GKC case studies.

The Path Ahead

The studies in this volume move us significantly forward in our understanding of knowledge commons, while opening up important new directions for future research and policy development. We mention just a few such directions in this closing section.

First, while the taxonomy of voice-shaped, exit-shaped and imposed commons governance emerged from studies of personal information governance, it is more broadly applicable. To date, GKC case studies have tended to focus on voice-shaped commons governance. More studies of exit-shaped commons governance would be useful, for knowledge commons aimed at pooling personal information and others. For example, it might be quite interesting to study some of the commercial DNA sequencing companies, such as 23andMe, which create pools of extremely personal genetic information, used at least partly for genetic research. There are currently a number of such companies, which seem to attract a fair amount of business. Without further study, it is unclear whether the behaviour of these entities is sufficiently clear to contributors to qualify them as exit-shaped commons governance. Moreover, these companies also collect a considerable amount of information that pertains to information subjects who are not contributors, making them a promising place to study those issues as well.

Second, we learned from these cases that the distinction between exit- and voice-shaped governance is strongly associated with the differences between meaningful exit and voice, respectively. While these mechanisms are important in providing legitimacy (Gorham et al.), individual rules-in-use to establish exit and voice functions vary significantly across contexts. It is not yet clear what makes exit or voice meaningful in a given context. Future case studies should address the institutional structure, differentiating between specific strategies, norms and rules and seeking to associate particular governance arrangements with social attributes and background characteristics in order to understand when exit or voice solutions might work and the contextual nature of successful governance arrangements.

Third, many of these privacy commons case studies emphasized the complexity of governance arrangements, identifying many competing layers of rules-in-use and rules-on-the-books, which reflected the interests of different actors, including information subjects, private sector firms and government actors. These conflicting layers illustrate the polycentric nature of knowledge commons governance, providing an opportunity to reconnect to insights from natural resource commons in future case studies. Further, there is room for considerably more study of how adversarial (or at least conflicting) infrastructure design affects commons governance. Additional inquiries into communities’ relationships with social media platforms would likely provide significant insight, as would case studies in smart city contexts.

While each of these directions should be explored in its own right, they are also reflected in supplementary questions added to the GKC framework, as represented in Table 11.4, and should be considered in future case studies.

Table 11.4 Updated GKC framework (with supplementary questions in bold)

| Knowledge Commons Framework and Representative Research Questions | |
|---|---|
| Background Environment | |
| | What is the background context (legal, cultural, etc.) of this particular commons? |
| | What normative values are relevant for this community? |
| | What is the ‘default’ status of the resources involved in the commons (patented, copyrighted, open, or other)? |
| | How does this community fit into a larger context? What relevant domains overlap in this context? |
| Attributes | |
| Resources | What resources are pooled and how are they created or obtained? |
| | What are the characteristics of the resources? Are they rival or nonrival, tangible or intangible? Is there shared infrastructure? |
| | What is personal information relative to resources in this action arena? |
| | What technologies and skills are needed to create, obtain, maintain, and use the resources? |
| | What are considered to be appropriate resource flows? How is appropriateness of resource use structured or protected? |
| Community Members | Who are the community members and what are their roles, including with respect to resource creation or use, and decision-making? |
| | Are community members also information subjects? |
| | What are the degree and nature of openness with respect to each type of community member and the general public? |
| | Which non-community members are impacted? |
| Goals and Objectives | What are the goals and objectives of the commons and its members, including obstacles or dilemmas to be overcome? |
| | Who determines goals and objectives? |
| | What values are reflected in goals and objectives? |
| | What are the history and narrative of the commons? |
| | What is the value of knowledge production in this context? |
| Governance | |
| Context | What are the relevant action arenas and how do they relate to the goals and objectives of the commons and the relationships among various types of participants and with the general public? |
| | Are action arenas perceived to be legitimate? |
| Institutions | What legal structures (e.g., intellectual property, subsidies, contract, licensing, tax, antitrust) apply? |
| | What other external institutional constraints are imposed? What government, agency, organization, or platform established those institutions and how? |
| | How is institutional compliance evaluated? |
| | What are the governance mechanisms (e.g., membership rules, resource contribution or extraction standards and requirements, conflict resolution mechanisms, sanctions for rule violation)? |
| | What are the institutions and technological infrastructures that structure and govern decision making? |
| | What informal norms govern the commons? |
| | What institutions are perceived to be legitimate? Illegitimate? How are institutional illegitimacies addressed? |
| Actors | What actors and communities: are members of the commons, participants in the commons, users of the commons and/or subjects of the commons? |
| | Who are the decision-makers and how are they selected? Are decision-makers perceived to be legitimate? Do decision-makers have an active stake in the commons? |
| | How do nonmembers interact with the commons? What institutions govern those interactions? |
| | Are there impacted groups that have no say in governance? If so, which groups? |
| Patterns and Outcomes | |
| | What benefits are delivered to members and to others (e.g., innovations and creative output, production, sharing and dissemination to a broader audience and social interactions that emerge from the commons)? |
| | What costs and risks are associated with the commons, including any negative externalities? |
| | Are outcomes perceived to be legitimate by members? By decision-makers? By impacted outsiders? |
| | Do governance patterns regarding participation provide exit and/or voice mechanisms for participants and/or community members? |
| | Which rules-in-use are associated with exit-shaped, voice-shaped or imposed governance? Are there governance patterns that indicate the relative impact of each within the commons overall? |