Cognitive bias modification (CBM) has been defined as the ‘direct manipulation of a target cognitive bias, by extended exposure to task contingencies that favor predetermined patterns of processing selectivity’. Reference MacLeod and Mathews1 A recent review remarks that there has been ‘exponential growth of research employing these cognitive bias modification (CBM) procedures’, Reference MacLeod and Mathews1 especially in recent years. Research has focused mainly on two types of interventions Reference MacLeod and Mathews1 : attention bias modification (ABM) and interpretative bias modification (CBM-I). Another narrative review Reference Macleod2 concludes that both ABM and CBM-I can reliably have an impact on clinically relevant symptoms, with greatest confidence for anxiety symptoms and depression. The principle of ABM involves teaching participants to avoid the negative, ‘threat’ stimuli (usually pictures or words) by directing their attention, without their knowledge, to neutral or positive stimuli (‘avoid threat’). The principle of CBM-I Reference MacLeod and Mathews1,Reference Mathews and MacLeod3 is similar, but it uses more complex stimuli, such as ambiguous paragraphs or sentences. Participants are given a task that consistently disambiguates the valence of the sentence or paragraph (for example a word completion task), towards a positive (sometimes neutral) or a negative interpretation. Other interventions are also included under CBM, such as concreteness training (CNT) Reference Watkins, Baeyens and Read4 or alcohol approach and avoidance training (A-AAT). Reference Wiers, Eberl, Rinck, Becker and Lindenmeyer5

Three previous meta-analyses examined the efficacy of various CBM approaches for psychological problems. The first Reference Hakamata, Lissek, Bar-Haim, Britton, Fox and Leibenluft6 looked at studies of ABM for anxiety and found an effect size of 0.61. The second Reference Hallion and Ruscio7 investigated both ABM and CBM-I for anxiety and depression and found a small but significant post-intervention effect for anxiety and depression taken together (g = 0.13). Finally, the third meta-analysis Reference Beard, Sawyer and Hofmann8 found non-significant effects for subjective experience following ABM. The present meta-analysis was prompted by a number of aspects that remain unclear regarding the efficacy of CBM interventions. First, it is not clear whether the previous meta-analyses were carried out on randomised controlled trials (RCTs). For instance, one of these Reference Hakamata, Lissek, Bar-Haim, Britton, Fox and Leibenluft6 mentioned randomisation as an inclusion criterion, but out of 11 studies, two were experiments described in a narrative review, for which no independent, peer-reviewed publication as separate RCTs existed. Reference Mathews and MacLeod3 Another Reference Hallion and Ruscio7 did not list randomisation as an inclusion criterion. Second, the quality of the studies included was not considered in any of the previous meta-analyses, even though there is ample evidence that the quality of the RCTs included in a meta-analysis can significantly bias outcomes, Reference Wood, Egger, Gluud, Schulz, Jüni and Altman9–Reference Cuijpers, Smit, Bohlmeijer, Hollon and Andersson13 especially for smaller trials. Reference Kjaergard, Villumsen and Gluud12 Some CBM researchers themselves Reference Beard14 have suggested that the field is overly reliant on small studies, the vast majority of which do not follow the Consolidated Standards of Reporting Trials (CONSORT) guidelines. Reference Schulz, Altman and Moher15 Third, moderators of treatment effects that were considered were generally restricted to the purported mechanisms of action of CBM interventions (change in targeted bias), and to specific procedural details of the interventions (such as stimulus type, stimulus duration, stimulus orientation, number of trials). Finally, publication bias was analysed in earlier meta-analyses, but with quite different results.

Our goal was to present an updated meta-analysis including all CBM interventions tested in RCTs for clinically relevant outcomes. A number of RCTs examining CBM interventions have been conducted since the three previous meta-analyses were published (we counted 18 new trials). We aimed to examine whether there is robust empirical evidence of strong methodological quality (i.e. reduced risk of bias) for the clinical efficacy of CBM interventions. CBM is ultimately advocated as a therapy, with the purpose of significantly reducing symptoms and distress, so its efficacy has to be clearly established in clinical samples. Finally, we wanted to analyse more general moderators of treatment response, relevant to most psychotherapies.

Method

Identification and selection of studies

We conducted a comprehensive literature search (see online supplement DS1 for the complete search string) in PubMed, PsycInfo, the Cochrane Library and EMBASE through May 2013 using the following key words: “cognitive bias modification”, “attention* bias modification”, “attention” bias “training”, “bias training”, “interpret* bias modification”. We checked the reference sections of the three previous meta-analyses. We also periodically checked for newly published trials during the preparation of the meta-analysis until the end of September 2013.

We included studies in which: (a) participants were randomised and (b) the effect of a CBM intervention, alone or in combination with another treatment, was (c) compared to the effects of a control group, another active treatment or a combination of treatments, (d) in adults, (e) for clinically relevant outcomes, (f) assessed on established, standardised symptom or distress measures and (g) published in peer-reviewed scientific journals in English. We included studies that combined a CBM intervention with another active treatment (psychological or pharmacological), provided that there was a control condition for the CBM intervention (i.e. a group that received no CBM intervention, a no-contingency intervention, an intervention supposed to increase bias), whether alone or combined with another active treatment.

We defined active CBM interventions as those designed to decrease bias, regardless of its type, and consequently to improve symptoms and mood. We excluded outcomes not related to clinical symptoms or distress, as well as outcomes that were not measured on established, standardised instruments (for example, reaction times, biological data, data measured on Likert or visual analogue scales devised ad hoc). Dissertations and conference abstracts were not included.

Quality assessment and data extraction

The methodological quality of the included studies was assessed with five criteria of the risk of bias assessment tool, developed by the Cochrane Collaboration Reference Higgins, Altman, Gotzsche, Juni, Moher and Oxman16 to assess sources of bias in RCTs.

(a) Criterion 1: adequate generation of allocation sequence.

(b) Criterion 2: concealment of allocation to conditions.

(c) Criterion 3: prevention of knowledge of the allocated intervention to assessors of outcome.

(d) Criterion 4: prevention of knowledge of the allocated intervention to participants.

(e) Criterion 5: dealing with incomplete data.

Criterion 4 (masking of participants) was included because unlike other psychotherapy studies, in which it is impossible for participants to remain unaware of the allocated intervention, most CBM interventions are carried out without making participants aware of the contingency they are exposed to, the purpose of the intervention or, in some cases, even the fact they are being subjected to an intervention. In fact, lack of participant awareness of the training has been regarded as evidence of the implicit mechanism of action of these interventions, through modifying low-level biases, which are inaccessible to awareness. Reference MacLeod and Mathews1,Reference Beard14 Criterion 5 (dealing with incomplete data) was rated as positive if there were no missing data or if data were analysed in an intent-to-treat approach (meaning all randomised participants were included in the analysis). Risk of bias was rated by two independent researchers (I.C. and R.K.). Disagreements were discussed and if they remained unresolved, the senior author was consulted (P.C.).

We also coded several aspects of the included studies, as potential moderators.

(a) Type of sample: clinical – diagnosed using a structured clinical interview (such as Structured Clinical Interview for DSM Disorders (SCID)); subclinical/analogue – selected for high values on a scale for clinical symptoms or distress; unselected participants.

(b) Recruitment type: patient samples; community volunteers; university students.

(c) Delivery type: exclusively laboratory-based; including a home-based component.

(d) Participant compensation: yes (money, course credit, both); no (no type of compensation).

(e) Number of sessions.

(f) Publication year.

(g) Type of bias intervention: attentional (ABM); interpretational (CBM-I); other (CNT, A-AAT, combinations).

(h) Impact factor (from Web of Science) of the journal in which the study was published (at the time of publication).

This last moderator was chosen for exploratory analysis, since we noticed both CBM trials and CBM reviews have been published in top-tier journals in the field of clinical psychology. Other researchers have also noted the tremendous surge of interest in CBM interventions. Reference Hallion and Ruscio7,17 Top journals have dedicated special issues (for example Journal of Abnormal Psychology, February 2009; Cognitive Therapy & Research, April 2014) to CBM interventions. Although we acknowledge there are intrinsic problems with journal metrics such as the impact factor, it still can be seen as an indicator for the best articles in a given field. We wanted to examine whether there might be a trend of positive CBM results getting published in higher impact factor journals.

Meta-analysis

For each comparison between a CBM intervention (alone or in combination) and a comparison group, the effect size indicating the difference between the two groups at post-test was calculated (Cohen’s d or standardised mean difference). The effect sizes were calculated by subtracting, at post-test, the mean score of the CBM group from the mean score of the comparison group, and dividing the result by the pooled standard deviation of the two groups. According to Cohen, Reference Cohen18 effect sizes of 0.2 are considered small, whereas effect sizes of 0.5 are moderate and effect sizes of 0.8 are deemed large. Because a substantial proportion of studies had small sample sizes, we corrected the effect size for small sample bias, as recommended by Hedges & Olkin, Reference Hedges and Olkin19 and reported the corrected indicator Hedges’ g.
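The effect size calculation described above can be illustrated with a minimal Python sketch. It is not the code used in the analysis, which was run in Comprehensive Meta-analysis. The function names and the example scores are hypothetical, and the correction factor shown is the commonly used approximation J = 1 − 3/(4df − 1), which is assumed here to correspond to the Hedges & Olkin correction.

```python
import math

def cohens_d(mean_comparison, mean_cbm, sd_comparison, sd_cbm, n_comparison, n_cbm):
    """Post-test standardised mean difference: (comparison - CBM) / pooled SD."""
    pooled_var = (
        (n_comparison - 1) * sd_comparison ** 2 + (n_cbm - 1) * sd_cbm ** 2
    ) / (n_comparison + n_cbm - 2)
    return (mean_comparison - mean_cbm) / math.sqrt(pooled_var)

def hedges_g(d, n_comparison, n_cbm):
    """Small-sample correction: multiply d by J = 1 - 3 / (4 * df - 1)."""
    df = n_comparison + n_cbm - 2
    return d * (1 - 3 / (4 * df - 1))

# Made-up symptom scores for illustration only (lower score = fewer symptoms).
d = cohens_d(50.0, 45.0, 10.0, 10.0, 20, 20)
g = hedges_g(d, 20, 20)
print(round(d, 3), round(g, 3))
```

With this sign convention a positive value favours the CBM group whenever lower scores indicate fewer symptoms, and the correction always shrinks d slightly towards zero, more so in small trials.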

We calculated and pooled the individual effect sizes with Comprehensive Meta-analysis (CMA; version 2.2.064 for Windows). As there was a lot of variability in the symptom outcomes considered and the instruments used to measure them, we grouped outcome measures into the following categories: anxiety – all (all anxiety outcomes, whether measured by disorder-specific or general anxiety instruments); general anxiety (all outcomes relating to general anxiety, such as the State-Trait Anxiety Inventory, excluding outcomes specific to various anxiety disorders); social anxiety; depression. If a study used more than one outcome from the same category or if the same outcome was measured by more than one instrument, an average effect size was computed. If means and standard deviations were not reported for the symptom outcomes in a study, we used the procedures recommended by CMA Reference Borenstein, Hedges, Higgins and Rothstein20 to calculate the standardised mean difference from dichotomous data or from other statistics such as t-values or exact P-values. If the effect size could not be calculated, the study was excluded. For the studies that had more than one control group (i.e. sham/no contingency training, attend to threat or waitlist), we used only one control group to calculate effect size. We chose the group most similar to a placebo group (i.e. sham training), as this was the most common control condition in CBM studies. Also, this decision was made to reduce effect size inflation attributable to non-specific effects of the intervention or to the fact that the control condition was supposed to achieve an opposite effect from the intervention.

We only reported effect sizes for post-test. Neither follow-up, nor post-challenge (i.e. after the confrontation with a stressor) data were considered because of considerable variability among studies. For follow-up, there was considerable variability regarding duration, and there was hardly any control as to whether participants underwent some other treatment in that period. For post-challenge data, the types of stressors used were extremely diverse (for example public speaking situation, contamination, a test), as were the outcome measures, which in most cases were not standardised (for example reaction times, ad hoc Likert scales).

We report results from two meta-analyses: one containing all participant samples, the other one including only studies in which participants were diagnosed with a clinical condition, by use of a standardised diagnostic interview (such as SCID, Anxiety Disorders Interview Schedule (ADIS)). To facilitate clinical interpretation, we also transformed the standardised mean difference into number needed to treat (NNT), using the formulae of Kraemer & Kupfer. Reference Kraemer and Kupfer21 The NNT represents the number of patients that would have to be treated to generate one additional positive outcome. Reference Laupacis, Sackett and Roberts22
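As a rough illustration of this conversion, the sketch below uses a widely cited form of the Kraemer & Kupfer approach: the standardised mean difference is converted to an area under the curve, AUC = Φ(d/√2), and then NNT = 1/(2·AUC − 1). The function name is hypothetical, and values computed this way may differ slightly from those reported in the tables (for example through rounding or the use of conversion tables).

```python
import math
from statistics import NormalDist

def nnt_from_smd(d):
    """Convert a standardised mean difference to a number needed to treat.

    Assumes normally distributed outcomes with equal variances in both groups.
    """
    if d == 0:
        return math.inf  # no difference between groups: NNT is unbounded
    auc = NormalDist().cdf(d / math.sqrt(2))  # P(treated outcome beats control)
    return 1 / (2 * auc - 1)                  # reciprocal of the success rate difference

for d in (0.2, 0.5, 0.8):
    print(d, round(nnt_from_smd(d), 2))
```

Larger effect sizes give smaller NNTs; a negative effect size yields a negative value, which would be read as a number needed to harm.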

We expected considerable heterogeneity among studies and consequently decided to calculate mean effect sizes using a random-effects model. To test for the homogeneity of effect sizes, we calculated the I² statistic, which indicates heterogeneity in percentages. A value of 0% indicates no observed heterogeneity, whereas values over 0% refer to increasing heterogeneity, with 25% low, 50% moderate and 75% or above indicative of high heterogeneity. Reference Higgins, Thompson, Deeks and Altman23 We calculated 95% confidence intervals around I², Reference Ioannidis, Patsopoulos and Evangelou24 using the non-central χ²-based approach with the heterogi module for Stata MP 13.1 for Mac. Reference Orsini, Bottai, Higgins and Buchan25 We also calculated the Q-statistic, but only report whether it is significant. Outliers were defined as studies in which the 95% confidence interval was outside the 95% confidence interval of the pooled studies (on both sides of the confidence interval). Subgroup and meta-regression analyses were conducted with outliers removed.
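To make the pooling and heterogeneity statistics concrete, here is a minimal sketch of a random-effects analysis. It assumes the DerSimonian–Laird estimator of between-study variance, which is a common default but is not confirmed by the text as the estimator used. The function name and the input values are hypothetical.

```python
import math

def random_effects_pool(effects, variances):
    """Pool effect sizes with a DerSimonian-Laird random-effects model."""
    if len(effects) < 2:
        raise ValueError("at least two studies are needed")
    w = [1 / v for v in variances]                      # inverse-variance weights
    sum_w = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sum_w
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))  # Cochran's Q
    df = len(effects) - 1
    c = sum_w - sum(wi ** 2 for wi in w) / sum_w
    tau2 = max(0.0, (q - df) / c)                       # between-study variance
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0 # I-squared, in percent
    w_re = [1 / (v + tau2) for v in variances]
    pooled = sum(wi * yi for wi, yi in zip(w_re, effects)) / sum(w_re)
    se = math.sqrt(1 / sum(w_re))
    return {
        "g": pooled,
        "ci": (pooled - 1.96 * se, pooled + 1.96 * se),
        "Q": q,
        "I2": i2,
        "tau2": tau2,
    }

# Made-up effect sizes and sampling variances, for illustration only.
result = random_effects_pool([0.10, 0.35, 0.80, 0.25], [0.04, 0.05, 0.09, 0.03])
print(result)
```

Under the outlier rule described above, a study would be flagged when its own 95% confidence interval lies entirely above or entirely below the pooled interval returned in `ci`.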

Subgroup analyses were conducted using a mixed-effects model, in which studies within subgroups are pooled using the random-effects model, but tests for significant differences between subgroups are carried out using a fixed-effects model. Subgroups with fewer than three studies were not reported. For continuous moderator variables, we used meta-regression analyses to test whether there was a significant relationship between each of these variables and the effect size, and reported a Z-value and an associated P. We also conducted multivariate meta-regression analysis using the Stata program, both by including all moderators simultaneously, and by using a back-step procedure, removing the predictor with the highest P-value at each step.
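The univariate meta-regression for a continuous moderator can be sketched as a weighted least-squares regression of effect size on the moderator, with a Z-test on the slope. This is a simplified illustration, not the CMA or Stata routine: the between-study variance `tau2` is supplied by the caller rather than estimated, and all names and data are hypothetical.

```python
import math
from statistics import NormalDist

def meta_regression(effects, variances, moderator, tau2=0.0):
    """Weighted regression of effect size on one moderator.

    Returns (slope, standard error, Z, two-sided P).
    """
    w = [1 / (v + tau2) for v in variances]
    sum_w = sum(w)
    x_bar = sum(wi * xi for wi, xi in zip(w, moderator)) / sum_w
    y_bar = sum(wi * yi for wi, yi in zip(w, effects)) / sum_w
    sxx = sum(wi * (xi - x_bar) ** 2 for wi, xi in zip(w, moderator))
    sxy = sum(wi * (xi - x_bar) * (yi - y_bar)
              for wi, xi, yi in zip(w, moderator, effects))
    slope = sxy / sxx
    se = math.sqrt(1 / sxx)          # weights are treated as known variances
    z = slope / se
    p = 2 * (1 - NormalDist().cdf(abs(z)))
    return slope, se, z, p

# Made-up data: effect sizes that shrink with later publication year.
years = [2002, 2005, 2009, 2012]
slope, se, z, p = meta_regression([0.9, 0.6, 0.3, 0.2], [0.05, 0.04, 0.03, 0.02], years)
print(round(slope, 3), round(z, 2), round(p, 4))
```

A negative slope for publication year, as in this invented example, would indicate smaller effects in more recent trials.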

Publication bias was assessed by visually inspecting the funnel plot for the main outcome categories and employing the trim and fill procedure of Duval & Tweedie Reference Duval and Tweedie26 (as implemented in CMA, version 2.2.064), to obtain an estimate of the effect size after the publication bias has been taken into account. We also conducted Egger’s test of the intercept to test the symmetry of the funnel plot.
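Egger’s test can be sketched as an ordinary least-squares regression of the standardised effect (effect size divided by its standard error) on precision (the reciprocal of the standard error). An intercept far from zero suggests funnel plot asymmetry. The function name and data below are hypothetical. The sketch returns the intercept and its standard error only, because the Python standard library has no t-distribution for the P-value.

```python
import math

def egger_regression(effects, standard_errors):
    """Return (intercept, slope, SE of intercept) for Egger's regression."""
    n = len(effects)
    if n < 3:
        raise ValueError("at least three studies are needed")
    x = [1 / se for se in standard_errors]                      # precision
    y = [g / se for g, se in zip(effects, standard_errors)]     # standardised effect
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    sse = sum((yi - intercept - slope * xi) ** 2 for xi, yi in zip(x, y))
    se_intercept = math.sqrt(sse / (n - 2) * (1 / n + x_bar ** 2 / sxx))
    return intercept, slope, se_intercept

# Made-up data in which smaller (less precise) studies report larger effects.
intercept, slope, se_int = egger_regression(
    [0.9, 0.55, 0.45, 0.15], [0.40, 0.30, 0.20, 0.10]
)
print(round(intercept, 2), round(slope, 2), round(se_int, 2))
```

The intercept divided by its standard error would be compared against a t-distribution with n − 2 degrees of freedom to obtain the P-value.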

Results

Selection and inclusion of studies

We examined a total of 738 records (356 after duplicates were removed) and excluded 264 based on inspection of the abstract. We retrieved the full text of the remaining 92 articles, totalling 97 trials. Figure 1 presents the flowchart of the inclusion process and details the reasons for the exclusion of trials, following the PRISMA statement. Reference Moher, Liberati, Tetzlaff and Altman27 This process resulted in 44 published articles, with a total of 49 RCTs that met our inclusion criteria and were included in the meta-analysis.

Characteristics of included studies

We conducted the analyses only for post-test data, which totalled 52 comparisons from 49 RCTs (see online supplement DS2 for the complete list). Two RCTs Reference Wiers, Eberl, Rinck, Becker and Lindenmeyer5,Reference Eberl, Wiers, Pawelczack, Rinck, Becker and Lindenmeyer28 only reported follow-up symptom data. These studies were rated for descriptive characteristics, but did not contribute to post-test outcomes.

The number of intervention sessions ranged from 1 to 15, but 21 RCTs included only one session. Fifteen RCTs used unselected participants, 16 subclinical or analogue samples, 16 clinical mental-problem samples and 2 samples with a physical problem (pain). In total, 24 studies recruited participants from student samples, 15 from community volunteers, 9 from patient samples and 1 from general medical patients. Thirty-six comparisons were based on interventions carried out exclusively in the laboratory, whereas 13 also included a home component. Participants received compensation for participation (money, course credit or both) in 27 RCTs, and did not receive any compensation in 22 studies. There were 22 comparisons based on ABM interventions, whereas 23 were based on CBM-I and 4 on other types of bias interventions (2 for CNT and 2 for A-AAT). Six RCTs were published in journals with an impact factor under 2, 24 in journals with an impact factor between 2 and 4, and 13 over 4 (impact factors for the other studies at the time of publication could not be retrieved). Online Table DS1 presents selected characteristics of the included RCTs.

Fig. 1 Flow chart of selection and inclusion process, following the PRISMA statement.

CBM, cognitive bias modification; RCT, randomised controlled trial.

Quality of the included studies

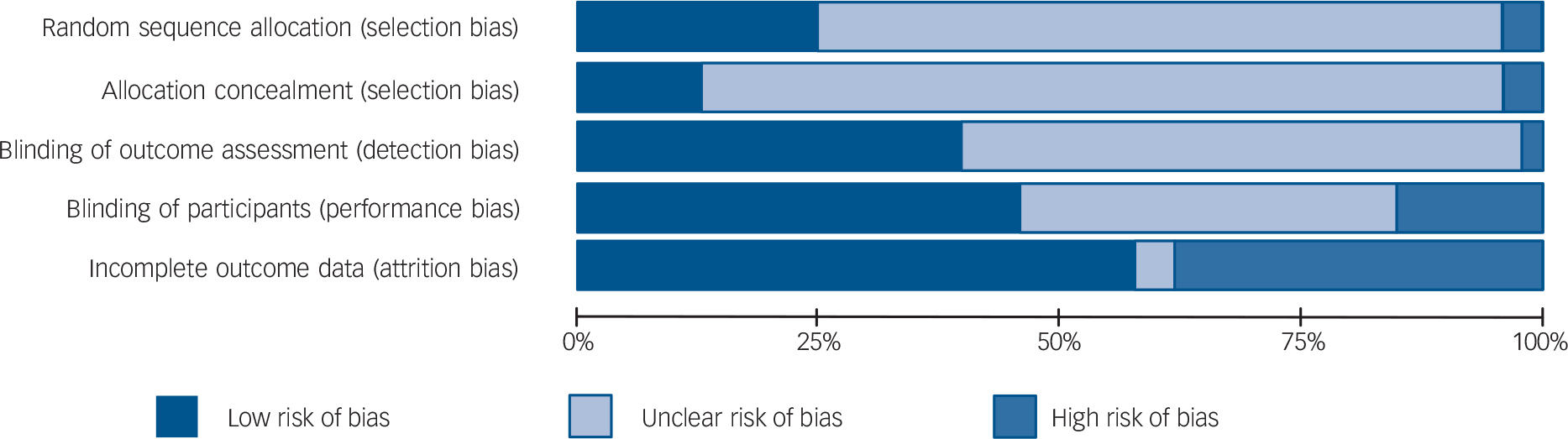

Overall, the quality of the included RCTs was not optimal. More than two-thirds of the studies (33/49) met fewer than three of the five quality criteria considered. One-fifth of the included RCTs (10/49) did not meet any quality criteria, and only 4% (5/49) met all criteria. Figure 2 presents the percentage of studies with a low, unclear (i.e. not enough information) and high risk of bias, for each of the quality criteria. It is worth mentioning that for all criteria except handling incomplete outcome data, a sizeable proportion of the RCTs (71% for sequence generation, 84% for allocation concealment, 57% for masking of assessors and 39% for masking of participants) did not provide the information necessary for assessing whether the criteria were met.

CBM compared with a control condition: all samples

Main effect sizes for all outcome categories

Anxiety (all measures). Figure 3 displays the forest plot of the standardised effect sizes of CBM interventions. The mean effect size was a g of 0.37 (95% CI 0.20–0.54) for 38 RCTs (41 comparisons). Heterogeneity was high (I² = 72.66%) and highly significant (Table 1 and see online Table DS2 for a more detailed version of this table). Three studies were included in which two CBM interventions were compared with the same control group, meaning that multiple comparisons from these studies were not independent from each other. Their use in the same analyses could have affected the pooled effect size by leading to an artificial reduction of heterogeneity. We conducted sensitivity analyses including only one effect size per study to examine these possible effects, by first including only the comparisons with the largest effect sizes from each study and then only the ones with the smallest effect sizes. The resulting effect size and heterogeneity were very close to the ones found in the overall analysis. Three studies (four comparisons) were identified as outliers. With their removal, the effect size decreased to a g of 0.23 (95% CI 0.14–0.32) and heterogeneity became non-significant.

Anxiety (general). The mean effect size was a g of 0.38 (95% CI 0.17–0.59) for 31 RCTs (34 comparisons). Heterogeneity was high (I² = 76.98%) and highly significant. Sensitivity analyses were conducted in the same way as for anxiety (all measures), with results remaining similar to the overall analysis. With the exclusion of four outliers, the effect size decreased to 0.18 (95% CI 0.08–0.28) and heterogeneity became non-significant.

Fig. 2 Risk of bias graph: authors’ judgements about each risk of bias item presented as percentages across all included studies.

Social anxiety. Ten RCTs with ten comparisons resulted in a g of 0.40 (95% CI 0.06–0.74). Heterogeneity was high (I² = 74.62%) and highly significant. Removal of one outlier reduced the effect size to a non-significant g of 0.23 (95% CI –0.001 to 0.46). Heterogeneity was no longer significant (P>0.05).

Depression. We identified 17 RCTs with 17 comparisons, leading to a g of 0.43 (95% CI 0.16–0.71). Heterogeneity was high (I² = 74.24%) and highly significant. With the removal of two outliers, the effect size decreased to a g of 0.33 (95% CI 0.16–0.50) and heterogeneity became non-significant.

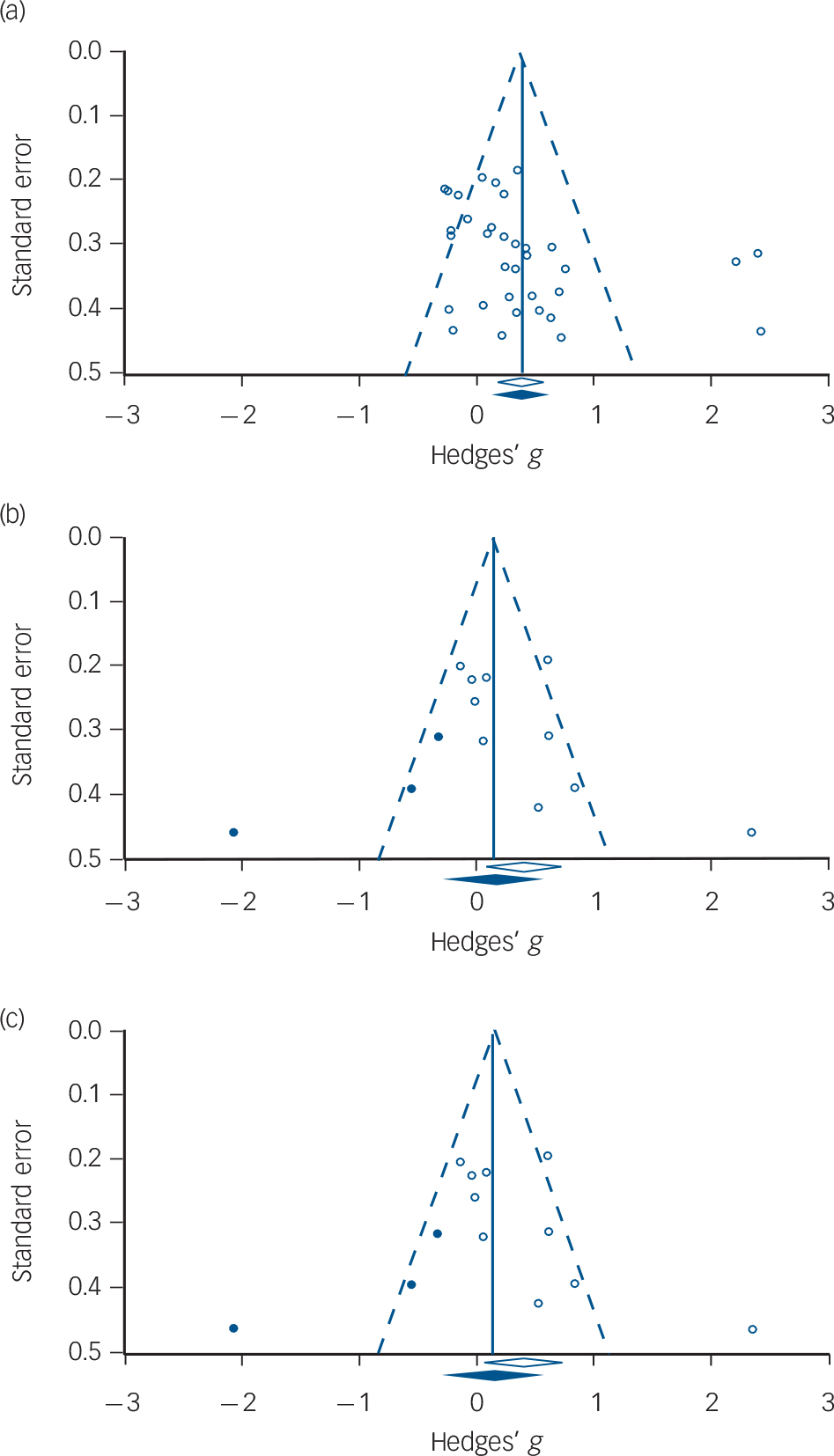

Publication bias

We found significant publication bias for general anxiety, social anxiety and depression. For general anxiety, visual inspection and Egger’s test indicated an asymmetric funnel plot (intercept 2.95, 95% CI 0.27–5.62, P = 0.031). The Duval & Tweedie trim and fill procedure did not impute any studies. For social anxiety inspection of the funnel plot (Fig. 4(b)) and the Duval & Tweedie trim and fill procedure indicated significant publication bias. After adjustment for missing studies (n = 3), the effect size decreased from a g of 0.40 to a non-significant g of 0.14 (95% CI –0.22 to 0.51). Egger’s test was not significant (intercept 4.04, 95% CI –0.39 to 8.48, P = 0.068). For depression inspection of the funnel plot (Fig. 4(c)) and the Duval & Tweedie trim and fill procedure indicated significant publication bias. After adjustment for missing studies (n = 7), the effect size decreased from a g of 0.43 to a non-significant g of 0.09 (95% CI –0.21 to 0.39). Egger’s test was not significant (intercept 3.25, 95% CI –0.61 to 7.12, P = 0.09).

Subgroup analyses

The results of subgroup analyses are shown in online Table DS2. For participant compensation, higher effect sizes were obtained if participants were compensated than if they were not, for anxiety – all measures (P = 0.028), general anxiety (P = 0.054) and social anxiety (P = 0.001). For sample type, significantly higher effect sizes were obtained for subclinical or analogue samples than for clinical ones for social anxiety (P = 0.025). For recruitment, no significant differences were found. For delivery type, we found significantly higher effect sizes if the interventions were delivered exclusively in the laboratory, as opposed to including a home-based component, for anxiety – all measures (P = 0.030), general anxiety (P = 0.006) and social anxiety (P = 0.001). For type of bias, we found significantly higher effect sizes for CBM-I than ABM for anxiety – all measures (P = 0.048), general anxiety (P = 0.034) and depression (P = 0.014).

Fig. 3 Standardised effect sizes of cognitive bias modification (CBM) interventions for all the samples for anxiety (all measures). S, study; C, comparison.

Table 1 Effects of cognitive bias modification interventions, compared with control, at post-test, for all samples and outcome categoriesᵃ

| Variable | n comp | g (95% CI) | Z | I² (95% CI)ᵇ | Number needed to treat |
|---|---|---|---|---|---|
| Anxiety (all measures) | 41 | 0.37 (0.20 to 0.54) | 4.28 | 73* (63–80) | 4.72 |
| One effect size per study (only highest) | 38 | 0.39 (0.21 to 0.57) | 4.26 | 74* (65–81) | 4.59 |
| One effect size per study (only lowest) | 38 | 0.37 (0.19 to 0.55) | 4.00 | 75* (65–81) | 4.72 |
| Outliers removedᶜ | 37 | 0.23 (0.14 to 0.32) | 4.96 | 2 (0–38) | 7.69 |
| General anxiety | 34 | 0.38 (0.17 to 0.59) | 3.58 | 77* (68–83) | 4.59 |
| One effect size per study (only highest) | 31 | 0.41 (0.18 to 0.63) | 3.56 | 78* (70–85) | 4.27 |
| One effect size per study (only lowest) | 31 | 0.38 (0.15 to 0.61) | 3.30 | 78* (71–85) | 4.59 |
| Outliers removedᶜ | 30 | 0.18 (0.08 to 0.28) | 3.46 | 0 (0–41) | 9.80 |
| Social anxiety | 10 | 0.40 (0.06 to 0.74) | 2.34 | 75* (53–86) | 4.39 |
| Outlier removedᵈ | 9 | 0.23 (–0.001 to 0.46) | 1.95 | 44 (0–75) | 7.69 |
| Generalised anxiety | 3 | 0.68 (0.31 to 1.05) | 3.64 | 0 (0–90) | 2.63 |
| Panic symptoms | 4 | 0.02 (–0.43 to 0.44) | 0.10 | 51 (0–84) | 83.33 |
| Depression | 17 | 0.43 (0.16 to 0.71) | 3.17 | 74* (59–84) | 4.1 |
| Outliers removedᵉ | 15 | 0.33 (0.16 to 0.50) | 3.82 | 27 (0–61) | 5.43 |

n comp, number of comparisons.

a. All results are reported with Hedges’ g, using a random-effects model.

b. The P levels in this column indicate whether the Q-statistic is significant (the I² statistic does not include a test of significance).

c. Outliers were defined as studies in which the 95% CI was outside the 95% CI of the pooled studies. Below the 95% CI: Steel et al. Reference Steel, Wykes, Ruddle, Smith, Shah and Holmes59 Above the 95% CI: Lester et al. Reference Lester, Mathews, Davison, Burgess and Yiend45 Study 1 and Study 2, Schmidt et al. Reference Schmidt, Richey, Buckner and Timpano56

d. Above the 95% CI: Schmidt et al. Reference Schmidt, Richey, Buckner and Timpano56

e. Below the 95% CI: Baert et al., Reference Baert, De Raedt, Schacht and Koster33 Study 1. Above the 95% CI: Lester et al. Reference Lester, Mathews, Davison, Burgess and Yiend45 Study 1 and Study 2.

* P<0.05; other results are not significant (P>0.05).

Fig. 4 Funnel plots.

(a) General anxiety (no imputed studies); (b) social anxiety (with imputed studies); (c) depression (with imputed studies).

White circles: observed studies; blue circles: studies imputed by the trim and fill procedure; white diamond: pooled mean effect size of observed studies only; blue diamond: pooled mean effect size of both observed and imputed studies.

For publication year, meta-regression indicated a significant, negative relationship between publication year and effect size for general anxiety (slope b = –0.06, 95% CI –0.10 to –0.02, P = 0.003), social anxiety (slope b = –0.17, 95% CI –0.29 to –0.05, P = 0.003) and depression (slope b = –0.10, 95% CI –0.19 to –0.02, P = 0.01). For general anxiety, a multivariate meta-regression using a back-step procedure found publication year to be a significant predictor (slope b = –0.06, 95% CI –0.10 to –0.01, P = 0.006). For number of sessions, meta-regression indicated a significant, negative relationship between number of sessions and effect sizes for general anxiety (slope b = –0.02, 95% CI –0.05 to –0.0006, P = 0.044) and depression (slope b = –0.05, 95% CI –0.09 to –0.01, P = 0.007). In terms of quality (low risk of bias), we found a significant negative relationship between the quality score (number of criteria met) and effect sizes for general anxiety (slope b = –0.07, 95% CI –0.13 to –0.02, P = 0.007) and depression (slope b = –0.10, 95% CI –0.20 to –0.006, P = 0.036). We found a borderline significant relationship between the journal impact factor and effect size (slope b = 0.08, 95% CI –0.001 to 0.16, P = 0.053) for anxiety (all measures).

CBM compared with a control condition: clinical samples only

Main effect sizes for all outcome categories

Anxiety (all measures). Thirteen RCTs with 13 comparisons aggregated to a g of 0.28 (95% CI 0.01–0.55). Heterogeneity was high (I² = 72.76%) and highly significant (Table 2). Removal of one outlier with an extremely high effect size (g = 2.36) reduced the effect size by approximately half, to a non-significant g of 0.16 (95% CI –0.03 to 0.35). Heterogeneity remained significant, but moderate (I² = 47.44%).

General anxiety. Eight RCTs with eight comparisons led to a non-significant g of 0.29 (95% CI –0.14 to 0.74). Heterogeneity was high (I² = 81.75%) and highly significant. Removal of one outlier led to a g of –0.01 (95% CI –0.22 to 0.20) and heterogeneity was no longer significant.

Social anxiety. Seven RCTs with seven comparisons aggregated into a non-significant g of 0.32 (95% CI –0.09 to 0.74), with high heterogeneity (I² = 80.84%, Table 2). Removal of one outlier led to a g of 0.11 (95% CI –0.13 to 0.35) and heterogeneity was no longer significant.

Depression. Nine RCTs with nine comparisons aggregated into a g of 0.24 (95% CI 0.02 to 0.46), with non-significant heterogeneity.

Publication bias

We found evidence of publication bias for general anxiety and depression. In both cases inspection of the funnel plot and the Duval & Tweedie trim and fill procedure indicated significant publication bias. For general anxiety, after adjustment for missing studies (n = 3), the effect size decreased from a g of 0.29 to a non-significant g of –0.09 (95% CI –0.58 to 0.40). Egger’s test was also significant (intercept 6.23, 95% CI 1.32–11.14, P = 0.020). For depression, after adjustment for missing studies (n = 4), the effect size decreased from a g of 0.24 to a non-significant g of 0.04 (95% CI –0.20 to 0.29). Egger’s test was also significant (intercept 3.50, 95% CI 0.69–6.31, P = 0.021). Moreover, for depression a meta-regression analysis indicated a significant relationship between publication year and effect size (slope b = –0.12, 95% CI –0.24 to –0.008, P = 0.036).

Subgroup and mediation analysis

As there were few studies in each outcome category, we did not conduct subgroup analysis. We inspected which of the studies included in our meta-analysis had also conducted formal mediation analysis (i.e. not just correlations) to test whether the effects of the intervention on emotional outcomes were mediated by changes in bias. Only 11 out of the 49 RCTs had conducted formal tests of mediation. Only four studies Reference Eberl, Wiers, Pawelczack, Rinck, Becker and Lindenmeyer28,Reference Amir, Bomyea and Beard31,Reference See, MacLeod and Bridle63,Reference Wells and Beevers64 reported clear evidence of successful mediation. Three other studies found evidence of mediation for only one outcome, but not for the others, Reference Amir, Beard, Burns and Bomyea30,Reference Heeren, Reese, McNally and Philippot40,Reference Najmi and Amir50 and in two of these, the outcome was physiological Reference Heeren, Reese, McNally and Philippot40 or behavioural Reference Najmi and Amir50 and not the emotional or symptom outcome our analysis focused on. Two studies Reference Beard and Amir34,Reference Bowler, Mackintosh, Dunn, Mathews, Dalgleish and Hoppitt36 found evidence of mediation for change in one type of bias, but not in another, and in one of these there was mediation for both the CBM and the computerised CBT control group. Reference Bowler, Mackintosh, Dunn, Mathews, Dalgleish and Hoppitt36 Finally, two studies found no evidence of mediation of changes in symptom or emotional outcomes by change in bias. Reference Wiers, Eberl, Rinck, Becker and Lindenmeyer5,Reference Peters, Constans and Mathews65

Table 2 Effects of cognitive bias modification interventions, compared with control, at post-test, for clinical samplesᵃ

| Variable | n comp | g (95% CI) | Z | I² (95% CI)ᵇ | Number needed to treat |
|---|---|---|---|---|---|
| Anxiety (all measures) | 13 | 0.28 (0.01 to 0.55) | 2.06 | 73* (53–84) | 6.41 |
| Outlier removedᶜ | 12 | 0.16 (–0.03 to 0.35) | 1.64 | 47* (0–73) | 11.11 |
| General anxiety | 8 | 0.29 (–0.14 to 0.74) | 1.32 | 82* (65–90) | 5.95 |
| Outlier removedᶜ | 7 | –0.01 (–0.22 to 0.20) | –0.09 | 23 (0–66) | - |
| Social anxiety | 7 | 0.32 (–0.09 to 0.74) | 1.51 | 81* (61–91) | 5.56 |
| Outlier removedᶜ | 6 | 0.11 (–0.13 to 0.35) | 0.89 | 43* (0–78) | 16.13 |
| Depression | 9 | 0.24 (0.02 to 0.46) | 2.19 | 39 (0–72) | 7.46 |

n comp, number of comparisons.

a. All results are reported with Hedges’ g, using a random-effects model.

b. The P levels in this column indicate whether the Q-statistic is significant (the I² statistic does not include a test of significance).

c. Outliers were defined as studies in which the 95% CI was outside the 95% CI of the pooled studies. Above the 95% CI: Schmidt et al. Reference Schmidt, Richey, Buckner and Timpano56

* P<0.05; other results are not significant (P>0.05).

Discussion

Summary of main findings

We conducted an updated meta-analysis including all CBM interventions tested in RCTs for clinically relevant outcomes. We also evaluated, for the first time, the quality of the CBM studies by assessing the presence of risk of bias. CBM interventions are ultimately advocated as therapeutic, so we also separately examined their effects for clinical patients, not just for the blend of healthy, subclinical and clinical participants that were the focus of the three previous meta-analyses. Finally, we wanted to analyse more general moderators of treatment response, relevant to most psychotherapeutic interventions, and to examine more closely publication bias and its possible ramifications (for example a possible effect of time-lag bias).

Overall, for the meta-analysis including all the samples, the effects of CBM interventions in all outcome categories were small and showed a high degree of heterogeneity. Exclusion of outliers significantly reduced effect sizes across all outcome categories, in some cases by almost half. We note that for anxiety outcomes, three of the four outliers identified were not only outside the confidence intervals of the effect size, but had effect sizes almost ten times higher than the pooled mean effect size. One of these, Schmidt et al, 2009, Reference Schmidt, Richey, Buckner and Timpano56 was identified as an outlier in two of the three previous meta-analyses. Reference Hakamata, Lissek, Bar-Haim, Britton, Fox and Leibenluft6,Reference Hallion and Ruscio7 Ironically, this was also one of the first articles that marked the ABM research and practice boom. Adjustment for publication bias also reduced effect sizes considerably, and for some outcomes rendered them non-significant.

The only other meta-analysis Reference Hallion and Ruscio7 that looked at both ABM and CBM-I interventions found even smaller, yet significant, effects for both anxiety and depression, but no evidence of heterogeneity. We note that many new studies have been published in the 3 years since the search conducted for that meta-analysis. Whereas its authors included any controlled clinical trial, we restricted our meta-analysis to RCTs examining any CBM intervention that used an established, standardised measure of symptom or distress-related outcomes.

For clinical samples, the effects of CBM interventions on anxiety and depression outcomes were small and in most cases non-significant; in the cases where they were significant, such as for depression, this seems to have been a result of the presence of outliers and/or publication bias. The three previous meta-analyses Reference Hakamata, Lissek, Bar-Haim, Britton, Fox and Leibenluft6–Reference Beard, Sawyer and Hofmann8 reported either non-significant differences or a trend towards higher effects for clinical or high symptomatology samples as compared with unselected ones. Including only RCTs and looking exclusively at participants with a clinical diagnosis, our data portray a different picture.

The effects of CBM interventions on types of bias were not specifically addressed in this meta-analysis, as they were analysed in all three previous meta-analyses. In the studies that measured bias, this was generally considered just another outcome measure, alongside emotional and symptom outcomes. Even if mediation analysis was conducted, it could not reliably indicate that changes in bias were causally related to changes in symptoms, because both were measured at the same time point. Measures of bias were heterogeneous, employing many different indices and procedures, not standardised and consequently difficult to interpret. Moreover, in many cases the task used to measure bias was the same one used in the intervention (for example the dot probe task), making it susceptible to the biasing effect of demand characteristics. Our examination of whether the included studies tested if the effects of the CBM interventions on emotional and symptom outcomes were mediated by changes in biases provided results that were at best mixed, reflecting the difficulties we highlighted above. Thus, we cannot even be sure what the mechanisms of change in CBM are.

Implications

Given that CBM interventions are primarily intended as cost-effective therapeutic alternatives, Reference Van Bockstaele, Verschuere, Tibboel, De Houwer, Crombez and Koster66 alleged to have an impact on clinically relevant symptoms, Reference MacLeod and Mathews1 we believe our results cast serious doubts on the majority of them having strong clinical utility. In contrast, effect sizes for other forms of psychotherapy have proven much more robust and of a greater magnitude, even when compared with a placebo condition. For instance, for adult depression a meta-analysis Reference Rosenthal67 found a g of 0.51 (corresponding to an NNT of 3.55) for cognitive–behavioural therapy as compared with placebo.
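The correspondence between g and NNT quoted above can be reproduced with a short sketch. It assumes the Kraemer and Kupfer conversion, NNT = 1 / (2Φ(g/√2) − 1), where Φ is the standard normal cumulative distribution function. This section does not state which conversion was used, so the formula is an assumption, although it returns the 3.55 reported for g = 0.51. The function name is hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def nnt_from_g(g):
    """Convert a standardised mean difference into a number needed to treat.

    Assumes the Kraemer and Kupfer conversion:
    NNT = 1 / (2 * Phi(g / sqrt(2)) - 1).
    Only meaningful for g > 0; for g <= 0 no benefit is implied.
    """
    if g <= 0:
        raise ValueError("NNT is undefined for non-positive effect sizes")
    success_rate_difference = 2 * NormalDist().cdf(g / sqrt(2)) - 1
    return 1.0 / success_rate_difference


nnt_cbt = nnt_from_g(0.51)  # cognitive-behavioural therapy v. placebo, from the text
nnt_cbm = nnt_from_g(0.16)  # anxiety (all measures), outlier removed, from Table 2
```

Under this assumed conversion, the outlier-adjusted anxiety effect of 0.16 corresponds to roughly three times as many patients needing treatment for one additional good outcome as the 0.51 effect. Small discrepancies from tabulated NNT values are to be expected if those were derived from rounded intermediate figures.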

Leading CBM researchers Reference MacLeod and Mathews1 have argued that demand characteristics are an implausible explanation for CBM findings. Yet the results of our meta-analysis point in a different direction. Across anxiety outcome categories, we found that effect sizes were higher if participants received compensation for participation than if they did not, and if the intervention was delivered exclusively in the laboratory as opposed to also including a home-based component. Moreover, contrary to previous meta-analyses, our results showed that the effect sizes for general anxiety and depression were negatively linearly related with the number of sessions. Although the slope for this relationship is quite small, albeit significant, and we noted that a considerable proportion of RCTs included only one session, we can at least conclude that the lack of reliable effects of CBM is not because of insufficient exposure to the intervention.

Put together, these results seem to indicate that it is not unlikely that many positive CBM findings may have been influenced by a variant of the ‘experimenter effect’ Reference Rosenthal67 or other experimental artefacts, unrelated to the scope and purported mechanisms of action of these interventions. In support of these conjectures comes the effect, present in many CBM studies, that the placebo control group (‘no contingency’) also shows improvement. Reference Baert, De Raedt, Schacht and Koster33–Reference Boettcher, Leek, Matson, Holmes, Browning and Macleod35,Reference Carlbring, Apelstrand, Sehlin, Amir, Rousseau and Hofmann37,Reference Lang, Blackwell, Harmer, Davison and Holmes44,Reference Neubauer, von Auer, Murray, Petermann, Helbig-Lang and Gerlach51,Reference Amir, Beard, Taylor, Klumpp, Elias and Burns68 Surprisingly, it has been recently argued that at least for ABM in the case of social anxiety, the opposite intervention, assumed to increase bias and used as a control condition in previous studies, would be more beneficial. Reference Boettcher, Leek, Matson, Holmes, Browning and Macleod35,Reference Boettcher, Hasselrot, Sund, Andersson and Carlbring69

Equally important, we found strong evidence of publication bias in both the all-samples and the clinical-samples meta-analyses. For the former, there was considerable publication bias for general anxiety, social anxiety and depression. In fact, for social anxiety and depression, adjustment for publication bias rendered effect sizes no longer significant. The same pattern emerged for the clinical-samples meta-analysis, where there was strong and consistent evidence of publication bias for general anxiety and depression.

We also found a strong and consistent negative linear relationship between publication year and effect size across most outcome categories: older studies showed significantly higher effect sizes, whereas more recent studies obtained effect sizes that were non-significant, very close to zero and, in some cases, even negative. This is a common phenomenon in intervention research, when a new intervention is proposed and tested, with the first studies showing large effects because of methodological considerations (i.e. the use of pilot, low-powered studies where only large effects can overcome the significance threshold) and a strong publication bias for positive findings. In fact, promising new interventions like CBM are very susceptible to a particular type of publication bias – time-lag bias – the phenomenon in which studies with positive results get to be published first and dominate the field, until the negative, but equally important, studies are published Reference Higgins and Green70,Reference Ioannidis71 – if they are published at all. Nonetheless, we suspect this phenomenon was aggravated for CBM by highly laudatory narrative reviews, comments and editorials, published before the efficacy of the new interventions had been established in well-powered, methodologically appropriate RCTs. In addition, if replication studies are based on the high effect sizes found in early studies, there is a distinct possibility that these subsequent replication studies are powered to detect only a large effect size and are thus severely underpowered for the smaller true effect. Reference Ioannidis72 Moreover, overtly positive pieces about CBM have been almost exclusively published in top-tier journals, thus indirectly reinforcing the notion that we were witnessing the development of a powerful new therapy – ‘a new clinical weapon’. Reference Macleod and Holmes73
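The point about underpowered replications can be made concrete with a rough calculation. The sketch below assumes a two-group comparison with equal group sizes and uses the normal approximation to the two-sample test; the effect sizes of 0.8 and 0.2 are illustrative values chosen here, not estimates from the included studies, and the function names are hypothetical.

```python
from math import ceil, sqrt
from statistics import NormalDist

_norm = NormalDist()


def n_per_group(d, alpha=0.05, power=0.80):
    """Participants per group needed to detect effect size d (normal approximation)."""
    z_alpha = _norm.inv_cdf(1 - alpha / 2)
    z_beta = _norm.inv_cdf(power)
    return ceil(2 * ((z_alpha + z_beta) / d) ** 2)


def approx_power(d, n, alpha=0.05):
    """Approximate power of a two-sided test with n per group and true effect d.

    Ignores the negligible probability of rejecting in the wrong direction.
    """
    z_alpha = _norm.inv_cdf(1 - alpha / 2)
    return _norm.cdf(d * sqrt(n / 2) - z_alpha)


# A replication sized for the large effect reported by an early study ...
n_planned = n_per_group(0.8)
power_if_large = approx_power(0.8, n_planned)
# ... has little chance of detecting a small true effect.
power_if_small = approx_power(0.2, n_planned)
```

Under these assumptions a trial planned for d = 0.8 needs about 25 participants per group, and the same trial has only around a one in ten chance of detecting a true effect of 0.2.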

Interestingly, CBM-I seems to have better results than ABM. Although this result seems to suggest differential potential, we need to be very cautious in its interpretation. Studies on CBM-I are a newer development and we run the risk of having caught them exactly in the phase where mostly positive results are getting published (i.e. time-lag bias). Also, by their nature, CBM-I interventions are more susceptible to demand characteristics because it is easier for participants to catch on to a particular interpretative pattern being favoured.

The view that the praise for CBM is much ahead of its corresponding empirical evidence is further sustained by results regarding the quality of the studies. Using a widely accepted and recommended instrument – the Cochrane Collaboration’s Risk of bias assessment tool – we showed that the quality of the studies investigating CBM is substandard. In fact, a significant proportion of the included studies satisfied no quality criteria at all and about two-thirds satisfied fewer than three quality criteria. Moreover, it is even more problematic that for three of the five risk of bias criteria analysed (sequence generation, allocation concealment, masking of assessors), a significant proportion of the studies (ranging from 58 to 83%) was unclear or simply did not contain information to permit assessment. Even for the masking of participants, a characteristic asserted as a strong point of these studies, Reference Beard14 40% were rated as unclear.

Risk of bias can also be associated with artificial inflation of effect sizes. Indeed, our results showed that for both general anxiety and depression, the quality of the included RCTs was negatively related to effect size. The effect size decreased by 0.07 with every quality criterion that was satisfied for general anxiety and by 0.10 for depression.
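A slope of this kind can be read as the expected change in g for each additional quality criterion met. The sketch below shows one simple way such a slope could be estimated, assuming an inverse-variance weighted least squares regression. The model actually used for the meta-regression (for example a mixed-effects model) is not described in this section, and the study-level data here are hypothetical, constructed to lie exactly on a line with slope –0.07.

```python
def weighted_meta_regression(moderator, effects, variances):
    """Inverse-variance weighted least squares of effect size on one moderator.

    Returns (intercept, slope). This is a fixed-effect simplification;
    it adds no between-study variance component.
    """
    weights = [1.0 / v for v in variances]
    total = sum(weights)
    mean_x = sum(w * x for w, x in zip(weights, moderator)) / total
    mean_y = sum(w * y for w, y in zip(weights, effects)) / total
    sxx = sum(w * (x - mean_x) ** 2 for w, x in zip(weights, moderator))
    sxy = sum(w * (x - mean_x) * (y - mean_y)
              for w, x, y in zip(weights, moderator, effects))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


# Hypothetical studies: number of quality criteria met (0 to 5) and effect size.
quality = [0, 1, 2, 3, 4, 5]
g_values = [0.40 - 0.07 * q for q in quality]
variances = [0.09, 0.06, 0.05, 0.04, 0.03, 0.02]

intercept, slope = weighted_meta_regression(quality, g_values, variances)
predicted_drop = slope * 3  # expected change in g when three more criteria are met
```

With these illustrative numbers, a study meeting three more criteria than another would be expected to show an effect size about 0.21 lower.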

Limitations

Given that CBM interventions include a number of different approaches, under different names and with many task variations, we might have missed some studies that would have been eligible. We tried to be inclusive with our search criteria and, given that many CBM studies present themselves as experimental studies and not RCTs, we conducted our search without restricting it to RCTs. Statistical power might also have been a problem, especially for some subgroup analyses, and it might have prevented us from finding significant differences. There was a very high degree of heterogeneity for all the outcome categories considered and one might even wonder if it makes sense to combine these studies at all. Reference Ioannidis, Patsopoulos and Evangelou24 However, we underscore that the exclusion of outliers reduced heterogeneity to non-significant values for all outcome categories considered. Nonetheless, confidence intervals around I² remained large, indicating heterogeneity was most likely still present. This is probably also because CBM studies used a wide range of outcome measurements, which we were only able to group loosely into categories. Few studies declared a primary outcome measure.

Future directions

In conclusion, our updated meta-analysis of all types of CBM interventions examined in RCTs showed small effects for anxiety and depression outcomes and significant heterogeneity, when all participant samples were considered. The effects were marked by significant outliers and publication bias and were largely reduced when these factors were adjusted for. For clinical patients, we showed small and mostly non-significant effects for anxiety and depression outcomes. Adjustment for publication bias rendered results for all outcome categories non-significant for clinical patients. The quality of the included RCTs was suboptimal and higher-quality studies obtained smaller, closer to zero, effect sizes. More sessions were associated with smaller effect sizes, as were the absence of participant compensation and delivery of the intervention that was not exclusively laboratory based. Along with strong evidence of publication bias, publication year was a robust predictor of effect size, across almost all outcome categories: the more recent the study, the smaller and closer to zero its corresponding effect size. Our results highlight considerable problems with CBM interventions, underscoring their lack of clinically relevant effects for patients, as well as the genuine possibility of substantial artificial effect size inflation because of aspects unrelated to the interventions themselves, such as demand characteristics and publication bias.

Even if, at least recently, CBM researchers have started acknowledging the ever more frequent negative results and their significance for CBM interventions, the search for answers continues to be confined by the strict boundaries of the paradigm, with researchers constantly ‘trying out’ new variations of CBM designs, tasks, instructions, doses (i.e. number of sessions) or moderating variables to attempt to validate a theoretical framework. We argue that this approach is detrimental, as it hinders the development of well-conducted, independently run RCTs. Unlike other forms of psychotherapy, not only is there no established protocol for CBM interventions, but it becomes difficult to choose from the wide variety of task variations and dosages proposed.

We believe that the only way of creating a more robust CBM is by replacing the myriad of experimental variations with proper clinical research (i.e. on clinical patients with a randomised trial design, a pre-specified, reproducible protocol, sufficient power) on the most promising CBM approaches (for instance AAT for alcohol problems). One CBM approach that has done that is CNT, Reference Watkins, Baeyens and Read74,Reference Watkins, Taylor, Byng, Baeyens, Read and Pearson75 which now requires independent validation from other investigator groups in order to be considered an evidence-based treatment for depression. Only if such studies are conducted and result in clinically relevant outcomes, is there a future for CBM.
