1. Introduction

Advancements in computational capabilities and flow measurement technologies are producing a rapidly increasing amount of high-fidelity flow data. The coherent spatio-temporal structures of the flow data enable data-driven reduced-order models (ROMs). In terms of kinematics, ROMs furnish simplified descriptions that enrich our understanding of fundamental flow processes (Holmes, Lumley & Berkooz Reference Holmes, Lumley and Berkooz1996), facilitated by increasingly powerful machine learning methods (Brunton, Noack & Koumoutsakos Reference Brunton, Noack and Koumoutsakos2020). The ROMs may also allow the prediction of future states with acceptable accuracy. In the context of flow control, ROMs are serving as efficient tools for designing and testing control strategies, replacing costly high-fidelity simulations with an acceptable trade-off in accuracy (Bergmann & Cordier Reference Bergmann and Cordier2008).

First-principle-based ROMs have historically been the foundation of the ROM community, as only a limited number of large data sets were available at that time. The Galerkin framework is one of the most classic methods in this category. By projecting the Navier–Stokes equations onto a low-dimensional subspace, the Galerkin model elegantly describes the original dynamics, exhibiting self-amplified amplitude-limited dynamics (Stuart Reference Stuart1971; Busse Reference Busse1991; Noack & Eckelmann Reference Noack and Eckelmann1994). Landau (Reference Landau1944) and Stuart (Reference Stuart1958) pioneered the mean-field model, a major progress in first-principle-based ROMs that provides insight into flow instabilities and bifurcation theory. For instance, in the case of a supercritical Hopf bifurcation, mean-field models have been applied to the vortex shedding behind a cylinder (Strykowski & Sreenivasan Reference Strykowski and Sreenivasan1990; Schumm, Berger & Monkewitz Reference Schumm, Berger and Monkewitz1994; Noack et al. Reference Noack, Afanasiev, Morzyński, Tadmor and Thiele2003) and high-Reynolds-number turbulent wake flow (Bourgeois, Noack & Martinuzzi Reference Bourgeois, Noack and Martinuzzi2013). For more complex flows undergoing successive bifurcations, including both Pitchfork and Hopf bifurcations, weakly nonlinear mean-field analysis is applied to the wake of axisymmetric bodies (Fabre, Auguste & Magnaudet Reference Fabre, Auguste and Magnaudet2008), the wake of a disk (Meliga, Chomaz & Sipp Reference Meliga, Chomaz and Sipp2009) and the fluidic pinball (Deng et al. Reference Deng, Noack, Morzyński and Pastur2020). Furthermore, in the field of resolvent analysis, the mean-field theory also contributes by decomposing the system into time-resolved linear dynamics and a feedback term involving quadratic nonlinearity (McKeon et al. Reference McKeon, Li, Jiang, Morrison and Smits2004; Gómez et al. Reference Gómez, Blackburn, Rudman, Sharma and McKeon2016; Rigas et al. Reference Rigas, Schmidt, Colonius and Brès2017).

In contrast to a first-principle ROM, a data-driven version is based on a low-dimensional representation of flow snapshots. Proper orthogonal decomposition (POD) is a commonly used example. Proper orthogonal decomposition begins with the eigenvalue or singular value decomposition of the correlation matrix, yielding a low-dimensional subspace comprising leading orthogonal eigenvectors. This subspace provides an ‘optimal’ Galerkin expansion with minimal average residual in the energy norm. Since Aubry et al. (Reference Aubry, Holmes, Lumley and Stone1988) introduced the groundbreaking POD–Galerkin model for unforced turbulent boundary layers, numerous POD models have emerged for various configurations. Examples include POD models for channel flow (Podvin & Lumley Reference Podvin and Lumley1998; Podvin Reference Podvin2009), the wake of a two-dimensional square cylinder (Bergmann, Bruneau & Iollo Reference Bergmann, Bruneau and Iollo2009), laminar and turbulent vortex shedding (Iollo, Lanteri & Désidéri Reference Iollo, Lanteri and Désidéri2000) and flow past a circular cylinder with a dynamic subgrid-scale model and variational multiscale model (Wang et al. Reference Wang, Akhtar, Borggaard and Iliescu2012). There are also various variations of the POD model, e.g. integrating the actuation terms into the projection system for control design (Bergmann & Cordier Reference Bergmann and Cordier2008; Luchtenburg et al. Reference Luchtenburg, Günther, Noack, King and Tadmor2009) and balanced POD (Rowley Reference Rowley2005), which is derived from a POD approximation to the product of controllability and observability Gramians to obtain an approximately balanced truncation (Moore Reference Moore1981). Increasingly powerful machine learning methods can make data-driven ROMs more automated. Examples include the sparse identification of nonlinear dynamics (SINDy) aim at human interpretable models (Brunton, Proctor & Kutz Reference Brunton, Proctor and Kutz2016), ROMs with artificial neural networks (San & Maulik Reference San and Maulik2018; San, Maulik & Ahmed Reference San, Maulik and Ahmed2019; Zhu et al. Reference Zhu, Zhang, Kou and Liu2019; Kou & Zhang Reference Kou and Zhang2021), turbulence modelling and flow estimation with multi-input multi-output by deep neural networks (Kutz Reference Kutz2017; Li, Li & Noack Reference Li, Li and Noack2022) and manifold learning methods (Farzamnik et al. Reference Farzamnik, Ianiro, Discetti, Deng, Oberleithner, Noack and Guerrero2023).

In this work we focus on automated data-driven modelling. The starting point is cluster-based ROMs (CROMs), pioneered by Burkardt, Gunzburger & Lee (Reference Burkardt, Gunzburger and Lee2006). Clustering is an unsupervised classification of patterns into groups commonly used in data science (Jain & Dubes Reference Jain and Dubes1988; Jain, Murty & Flynn Reference Jain, Murty and Flynn1999; Jain Reference Jain2010), it is popular in data mining, document retrieval, image segmentation and feature detection (Kim et al. Reference Kim, Liu, Jain and Liu2022). The foundation of the CROM lies in the cluster-based Markov model (CMM) proposed by Kaiser et al. (Reference Kaiser2014), which combines a cluster analysis of an ensemble of snapshots and a Markov model for transitions between different flow states reduced by clustering. The CMM has provided a valuable physical understanding of the mixing layer, Ahmed body wakes (Kaiser et al. Reference Kaiser2014), combustion-related mixing (Cao et al. Reference Cao, Kaiser, Borée, Noack, Thomas and Guilain2014) and the supersonic mixing layer (Li & Tan Reference Li and Tan2020). Nair et al. (Reference Nair, Yeh, Kaiser, Noack, Brunton and Taira2019) applied the cluster-based model to feedback control for drag reduction and first introduced a directed network for dynamical modelling. Building on this concept, Fernex, Noack & Semaan (Reference Fernex, Noack and Semaan2021) and Li et al. (Reference Li, Fernex, Semaan, Tan, Morzyński and Noack2021) further proposed the cluster-based network model (CNM) with improved long-time-scale resolution. Instead of the ‘stroboscopic’ view of the CMM, the CNM focuses on non-trivial transitions. The dynamics is restricted to a simple network model between the cluster centroids, like a deterministic–stochastic flight schedule that allows only a few possible flights with corresponding probabilities and flight times consistent with the data set. Networks of complex dynamic systems have gained great interest, forming an increasingly important interdisciplinary field known as network science (Watts & Strogatz Reference Watts and Strogatz1998; Albert & Barabási Reference Albert and Barabási2002; Börner, Sanyal & Vespignani Reference Börner, Sanyal and Vespignani2007; Barabási Reference Barabási2013). Network-based approaches are often used in fluid flows (Nair & Taira Reference Nair and Taira2015; Hadjighasem et al. Reference Hadjighasem, Karrasch, Teramoto and Haller2016; Taira, Nair & Brunton Reference Taira, Nair and Brunton2016; Yeh, Gopalakrishnan Meena & Taira Reference Yeh, Gopalakrishnan Meena and Taira2021; Taira & Nair Reference Taira and Nair2022), in conjunction with clustering analysis (Bollt Reference Bollt2001; Schlueter-Kuck & Dabiri Reference Schlueter-Kuck and Dabiri2017; Murayama et al. Reference Murayama, Kinugawa, Tokuda and Gotoda2018; Krueger et al. Reference Krueger, Hahsler, Olinick, Williams and Zharfa2019). The critical structures that modify the dynamical system can be identified by the intra- and inter-cluster interactions using community detection (Gopalakrishnan Meena, Nair & Taira Reference Gopalakrishnan Meena, Nair and Taira2018; Gopalakrishnan Meena & Taira Reference Gopalakrishnan Meena and Taira2021).

The CROMs are fully automated, robust and physically interpretable, while the model accuracy is strongly related to the clustering process. The state space is equivalently discretized in the above-mentioned CROMs, leading to a lack of dynamic coverage. For example, the CNM struggles to capture multiscale behaviours such as the oscillations near attractors and the amplitude variations between trajectories. To address this issue, an effective solution is to employ dynamics-augmented clustering to determine the centroid distribution. Inspired by the hierarchical clustering (Deng et al. Reference Deng, Noack, Morzyński and Pastur2022) and the network sparsification (Nair & Taira Reference Nair and Taira2015), we propose a dynamics-augmented cluster-based network model (dCNM) with an improved resolution of complex dynamics. In this case, the time-resolved dynamics is reflected by the evolution of trajectory segments after the state space is clustered. These segments are automatically identified from cluster transitions and are represented by centroids obtained through segment averaging. A second-stage clustering further refines the centroids, eliminating the network redundancy and also deepening the comprehension of underlying physical mechanisms. The proposed dCNM can systematically identify complex dynamics involved in the case of multi-attractor, multi-frequency and multiscale dynamic systems. Figure 1 provides a comparative illustration of CNM and dCNM in terms of kinematics and dynamics, exemplified by an inward spiral trajectory in a two-dimensional state space.

Figure 1. Principle sketches: the CNM and the dCNM are illustrated using an inward spiral trajectory in a two-dimensional state space with the same number of centroids. The thick solid lines denote cluster divisions and the thin solid lines represent sub-cluster divisions. The centroids are represented by coloured dots and their colours represent their cluster affiliations. The CNM centroids are derived from snapshot averages within each cluster and show uniform geometric coverage, whereas the dCNM centroids incorporate dynamic features and exhibit a weighted distribution. Consequently, dCNM accurately reconstructs the cycle-to-cycle variations and also ensures precise transition sequencing.

The dCNM is first applied to the Lorenz (Reference Lorenz1963) system as an illustrative example. The Lorenz attractor is notable for the ‘butterfly effect’, showcasing the chaotic dynamics governed by only three ordinary differential equations. Subsequently, we demonstrate the dCNM on the sphere wake of the periodic, quasi-periodic and chaotic flow regimes. The sphere wake is a well-investigated benchmark configuration, serving as a prototype flow of bluff body wakes commonly encountered in many modern applications, for instance, the design of drones and air taxis. Despite the simple geometry, the sphere wake can experience a series of bifurcations with increasing Reynolds number. Along the route to turbulence, the flow system exhibits steady, periodic, quasi-periodic and chaotic flow regimes. The transient and post-transient flow dynamics, characterised by multi-frequency and multiscale behaviours, provide a challenging testing ground for reduced-order modelling.

This paper is organised as follows. In § 2 the clustering algorithm and the different perspectives on the dCNM strategy are described. In § 3 the dCNM is illustrated on the Lorenz system and in § 4 it is demonstrated on the sphere wake of three flow regimes: the periodic flow, the quasi-periodic flow and the chaotic flow. In § 5 the main findings and improvements are summarised and future directions are suggested.

2. Dynamics-augmented CNM

In this section we detail the process of the dCNM. In § 2.1 the ![]() $k$-means++ clustering algorithm and its demonstration on the state space are introduced. The second-stage clustering on the trajectory segments is further discussed in § 2.2. In § 2.3 the transition characteristics are described and in § 2.4 different criteria are introduced to evaluate the performance of the proposed model. The variables used in this section are listed in table 1.

$k$-means++ clustering algorithm and its demonstration on the state space are introduced. The second-stage clustering on the trajectory segments is further discussed in § 2.2. In § 2.3 the transition characteristics are described and in § 2.4 different criteria are introduced to evaluate the performance of the proposed model. The variables used in this section are listed in table 1.

Table 1. Table of variables. Subscripts ![]() $k$ and

$k$ and ![]() $i$ are related to the level of clusters from the state space clustering, and subscripts

$i$ are related to the level of clusters from the state space clustering, and subscripts ![]() $l$ and

$l$ and ![]() $j$ are related to the level of trajectory segments.

$j$ are related to the level of trajectory segments.

2.1. Clustering the state space

The dynamics-augmented clustering procedure is divided into two steps. Initially, the state space is clustered, yielding coarse-grained state transition dynamics with trajectory segments composed of time-continuous snapshots within each cluster. Subsequently, we cluster these trajectory segments, utilising centroids derived from the average of each segment. This step optimises the centroid distribution and eliminates the redundancy of the trajectory segments.

The first-stage clustering discretizes the high-dimensional state space by grouping the snapshots. We first define a Hilbert space ![]() $\mathscr {L}^{2}(\varOmega )$, in which the inner product of vector fields in the domain

$\mathscr {L}^{2}(\varOmega )$, in which the inner product of vector fields in the domain ![]() $\varOmega$ is given by a square-integrable function:

$\varOmega$ is given by a square-integrable function:

Here ![]() $\boldsymbol {u}$ and

$\boldsymbol {u}$ and ![]() $\boldsymbol {v}$ represent snapshots of this vector field, also known as observations in the machine learning context. The corresponding norm is defined as

$\boldsymbol {v}$ represent snapshots of this vector field, also known as observations in the machine learning context. The corresponding norm is defined as

The distance ![]() $D$ between two snapshots can be calculated as

$D$ between two snapshots can be calculated as

The unsupervised ![]() $k$-means++ algorithm (MacQueen Reference MacQueen1967; Lloyd Reference Lloyd1982; Arthur & Vassilvitskii Reference Arthur and Vassilvitskii2007) is used for clustering. It operates automatically, devoid of assumptions or data categorisation. Serving as the foundation of cluster analysis, this algorithm partitions a set of

$k$-means++ algorithm (MacQueen Reference MacQueen1967; Lloyd Reference Lloyd1982; Arthur & Vassilvitskii Reference Arthur and Vassilvitskii2007) is used for clustering. It operates automatically, devoid of assumptions or data categorisation. Serving as the foundation of cluster analysis, this algorithm partitions a set of ![]() $M$ time-resolved snapshots

$M$ time-resolved snapshots ![]() $\boldsymbol {u}^m$, where

$\boldsymbol {u}^m$, where ![]() $m=1 \ldots M$, into

$m=1 \ldots M$, into ![]() $K$ clusters

$K$ clusters ![]() $\mathcal {C}_k$, where

$\mathcal {C}_k$, where ![]() $k = 1 \dots K$. Each cluster corresponds to a centroidal Voronoi cell, with the centroid defined as the average of the snapshots within the same cluster. The algorithm comprises the following steps.

$k = 1 \dots K$. Each cluster corresponds to a centroidal Voronoi cell, with the centroid defined as the average of the snapshots within the same cluster. The algorithm comprises the following steps.

(i) Initialisation:

$K$ centroids

$K$ centroids  $\boldsymbol {c}_{k}$, where

$\boldsymbol {c}_{k}$, where  $k = 1 \ldots K$, are randomly selected. In contrast to the

$k = 1 \ldots K$, are randomly selected. In contrast to the  $k$-means algorithm,

$k$-means algorithm,  $k$-means++ optimises the placement of these centroids to prevent sensitivity to initial conditions.

$k$-means++ optimises the placement of these centroids to prevent sensitivity to initial conditions.(ii) Assignment: each snapshot

$\boldsymbol {u}^{m}$ is allocated to the nearest centroid by

$\boldsymbol {u}^{m}$ is allocated to the nearest centroid by  $\underset {k}{\rm arg\min } D(\boldsymbol {u}^{m},\boldsymbol {c}_{k})$. The characteristic function is used to mark their affiliation, and it is defined as follows:

(2.4)

$\underset {k}{\rm arg\min } D(\boldsymbol {u}^{m},\boldsymbol {c}_{k})$. The characteristic function is used to mark their affiliation, and it is defined as follows:

(2.4) \begin{equation} \chi_{k}^{m}:=\left\{\begin{array}{@{}ll@{}} 1, & \text{if } \boldsymbol{u}^{m} \in \mathcal{C}_{k}, \\ 0, & \text{otherwise}. \end{array}\right. \end{equation}

\begin{equation} \chi_{k}^{m}:=\left\{\begin{array}{@{}ll@{}} 1, & \text{if } \boldsymbol{u}^{m} \in \mathcal{C}_{k}, \\ 0, & \text{otherwise}. \end{array}\right. \end{equation}(iii) Update: each centroid is recalculated by averaging all the snapshots belonging to the corresponding cluster as

(2.5)where \begin{equation} \boldsymbol{c}_{k}=\frac{1}{M_{k}} \sum_{\boldsymbol{u}^{m} \in \mathcal{C}_{k}} \boldsymbol{u}^{m}=\frac{1}{M_{k}} \sum_{m=1}^{M} \chi_{k}^{m} \boldsymbol{u}^{m} , \end{equation}(2.6)

\begin{equation} \boldsymbol{c}_{k}=\frac{1}{M_{k}} \sum_{\boldsymbol{u}^{m} \in \mathcal{C}_{k}} \boldsymbol{u}^{m}=\frac{1}{M_{k}} \sum_{m=1}^{M} \chi_{k}^{m} \boldsymbol{u}^{m} , \end{equation}(2.6) \begin{equation} M_{k}=\sum_{m=1}^{M} \chi_{k}^{m}. \end{equation}

\begin{equation} M_{k}=\sum_{m=1}^{M} \chi_{k}^{m}. \end{equation}(iv) Iteration: the assignment and update steps are repeated until convergence is reached. Convergence means that the centroids do not move or stabilise below a certain threshold. The algorithm minimises the intra-cluster variance and maximises the inter-cluster variance. The intra-cluster variance is computed as follows:

(2.7)Each iteration reduces the value of the criterion \begin{equation} J\left(\boldsymbol{c}_{1}, \ldots, \boldsymbol{c}_{K}\right) = \sum_{k=1}^{K} \sum_{m=1}^{M} \chi_{k}^{m} \| \boldsymbol{u}^{m}-\boldsymbol{c}_{k} \|_{\varOmega}^{2} . \end{equation}

\begin{equation} J\left(\boldsymbol{c}_{1}, \ldots, \boldsymbol{c}_{K}\right) = \sum_{k=1}^{K} \sum_{m=1}^{M} \chi_{k}^{m} \| \boldsymbol{u}^{m}-\boldsymbol{c}_{k} \|_{\varOmega}^{2} . \end{equation} $J$ until convergence is reached.

$J$ until convergence is reached.

The cluster probability distribution ![]() $\boldsymbol {P} = [P_1, \ldots, P_K]$ is determined by

$\boldsymbol {P} = [P_1, \ldots, P_K]$ is determined by ![]() $P_k = M_{k}/M$ for each cluster

$P_k = M_{k}/M$ for each cluster ![]() $\mathcal {C}_k$, and satisfies the normalisation condition

$\mathcal {C}_k$, and satisfies the normalisation condition ![]() ${\sum }_{k=1}^{K}P_k = 1$.

${\sum }_{k=1}^{K}P_k = 1$.

The geometric properties of the clusters are quantified for further analysis. The cluster standard deviation on the snapshots ![]() $R_{k}^{u}$ measures the cluster size, following Kaiser et al. (Reference Kaiser2014), as

$R_{k}^{u}$ measures the cluster size, following Kaiser et al. (Reference Kaiser2014), as

\begin{equation} R_{k}^{u} = \sqrt{ \frac{1}{M_{k}} \sum_{m=1}^{M} \chi_{k}^{m} \left\| \boldsymbol{u}^{m}-\boldsymbol{c}_{k} \right\|_{\varOmega}^{2}} . \end{equation}

\begin{equation} R_{k}^{u} = \sqrt{ \frac{1}{M_{k}} \sum_{m=1}^{M} \chi_{k}^{m} \left\| \boldsymbol{u}^{m}-\boldsymbol{c}_{k} \right\|_{\varOmega}^{2}} . \end{equation}

The time-resolved snapshots should be equidistantly sampled and cover a statistically representative time window of the coherent structure evolution. As a rule of thumb, at least ten periods of the dominant frequency are needed to obtain reasonably accurate statistical moments and at least ![]() $K$ snapshots per characteristic period to capture an accurate temporal evolution.

$K$ snapshots per characteristic period to capture an accurate temporal evolution.

2.2. Clustering the trajectory segments

After the state space is discretized, the trajectory is also divided into segments. We use the cluster transition information to identify the trajectory segments that pass through a cluster.

Based on the temporal information from the given data set, the nonlinear dynamics between snapshots are modelled as linear transitions between clusters, known as the classic CNM (Fernex et al. Reference Fernex, Noack and Semaan2021; Li et al. Reference Li, Fernex, Semaan, Tan, Morzyński and Noack2021). We infer the probability of cluster transition from the data as

where ![]() $Q_{ik}$ is the direct cluster transition probability from cluster

$Q_{ik}$ is the direct cluster transition probability from cluster ![]() $\mathcal {C}_{k}$ to

$\mathcal {C}_{k}$ to ![]() $\mathcal {C}_{i}$ and

$\mathcal {C}_{i}$ and ![]() $n_{ik}$ is the number of transitions from

$n_{ik}$ is the number of transitions from ![]() $\mathcal {C}_{k}$ only to

$\mathcal {C}_{k}$ only to ![]() $\mathcal {C}_{i}$:

$\mathcal {C}_{i}$:

\begin{equation} n_{ik}=\sum_{m=1}^{M} \chi_{ik}^m. \end{equation}

\begin{equation} n_{ik}=\sum_{m=1}^{M} \chi_{ik}^m. \end{equation}Here

![]() $n_{k}$ is the total number of transitions from

$n_{k}$ is the total number of transitions from ![]() $\mathcal {C}_{k}$ regardless of the destination cluster:

$\mathcal {C}_{k}$ regardless of the destination cluster:

\begin{equation} n_{k}=\sum_{i=1}^{K} n_{ik}, \quad i, k=1, \ldots, K. \end{equation}

\begin{equation} n_{k}=\sum_{i=1}^{K} n_{ik}, \quad i, k=1, \ldots, K. \end{equation}

If ![]() $Q_{ik} \neq 0$, it can be inferred that in cluster

$Q_{ik} \neq 0$, it can be inferred that in cluster ![]() $\mathcal {C}_{k}$ there exists at least one trajectory segment that is bound for cluster

$\mathcal {C}_{k}$ there exists at least one trajectory segment that is bound for cluster ![]() $\mathcal {C}_{i}$. We assign distinct labels to each trajectory segment corresponding to all destination clusters, denoted as

$\mathcal {C}_{i}$. We assign distinct labels to each trajectory segment corresponding to all destination clusters, denoted as ![]() $\mathcal {T}_{(kl)}$, where

$\mathcal {T}_{(kl)}$, where ![]() $k$ and

$k$ and ![]() $l$ represent the

$l$ represent the ![]() $l$th segment in

$l$th segment in ![]() $\mathcal {C}_{k}$. Therefore, the snapshots are marked according to their trajectory affiliations by a characteristic function:

$\mathcal {C}_{k}$. Therefore, the snapshots are marked according to their trajectory affiliations by a characteristic function:

Here ![]() $k$ represents the cluster affiliation and

$k$ represents the cluster affiliation and ![]() $l$ represents the trajectory segment affiliation. The total number of trajectory segments in

$l$ represents the trajectory segment affiliation. The total number of trajectory segments in ![]() $\mathcal {C}_{k}$ equals

$\mathcal {C}_{k}$ equals ![]() $n_{k}$. Note that the final trajectory segment of the data set will not be considered as it will not lead to any destination cluster and is usually incomplete. The total number of trajectory segments in the data set can be obtained by the sum of

$n_{k}$. Note that the final trajectory segment of the data set will not be considered as it will not lead to any destination cluster and is usually incomplete. The total number of trajectory segments in the data set can be obtained by the sum of ![]() $n_{k}$ as follows:

$n_{k}$ as follows:

\begin{equation} n_{traj} = \sum_{k=1}^{K} n_{k}. \end{equation}

\begin{equation} n_{traj} = \sum_{k=1}^{K} n_{k}. \end{equation}

Analogous trajectory segments within the same cluster will be merged in the subsequent clustering stage. Operations on the trajectories can often be costly. Efficiency in clustering can be achieved by mapping the operations performed on trajectory segments to their corresponding averages, i.e. the trajectory segment centroids, given their topological relationship. Additionally, the propagation of our model relies on centroids, rendering the trajectory information essentially unnecessary. We define the centroids ![]() $\boldsymbol {c}_{(kl)}$ as the average of snapshots belonging to the same trajectory segment:

$\boldsymbol {c}_{(kl)}$ as the average of snapshots belonging to the same trajectory segment:

\begin{equation} \boldsymbol{c}_{(kl)}=\frac{1}{M_{(kl)}} \sum_{\boldsymbol{u}^{m} \in \mathcal{T}_{(kl)}}\boldsymbol{u}^{m}=\frac{1}{M_{(kl)}} \sum_{m=1}^{M} \chi_{(kl)}^{m} \boldsymbol{u}^{m}. \end{equation}

\begin{equation} \boldsymbol{c}_{(kl)}=\frac{1}{M_{(kl)}} \sum_{\boldsymbol{u}^{m} \in \mathcal{T}_{(kl)}}\boldsymbol{u}^{m}=\frac{1}{M_{(kl)}} \sum_{m=1}^{M} \chi_{(kl)}^{m} \boldsymbol{u}^{m}. \end{equation}Here

\begin{equation} M_{(kl)}= \sum_{m=1}^{M} \chi_{(kl)}^{m}. \end{equation}

\begin{equation} M_{(kl)}= \sum_{m=1}^{M} \chi_{(kl)}^{m}. \end{equation}

The subsequent question pertains to how to determine the number of sub-clusters. The allocation of sub-clusters within each cluster can be automatically learnt from the data. To maintain the spatial resolution, more sub-clusters should be assigned to clusters with a larger transverse size. We first introduce a transverse cluster size vector ![]() $\boldsymbol {R}^{\mathcal {T}}$, which is defined by the standard deviation of the

$\boldsymbol {R}^{\mathcal {T}}$, which is defined by the standard deviation of the ![]() $n_{k}$ centroids

$n_{k}$ centroids ![]() $\boldsymbol {c}_{(kl)}$ with respect to the cluster centroid

$\boldsymbol {c}_{(kl)}$ with respect to the cluster centroid ![]() $\boldsymbol {c}_{k}$ as follows:

$\boldsymbol {c}_{k}$ as follows:

\begin{equation} R_{k}^{\mathcal{T}} = \sqrt{\frac{1}{n_{k}} \sum_{l=1}^{n_{k}}\|\boldsymbol{c}_{(kl)}-\boldsymbol{c}_{k}\|_{\varOmega}^{2}}. \end{equation}

\begin{equation} R_{k}^{\mathcal{T}} = \sqrt{\frac{1}{n_{k}} \sum_{l=1}^{n_{k}}\|\boldsymbol{c}_{(kl)}-\boldsymbol{c}_{k}\|_{\varOmega}^{2}}. \end{equation}

Next, we denote the number of sub-clusters as ![]() $L_{k}$ for clustering the centroids in cluster

$L_{k}$ for clustering the centroids in cluster ![]() $\mathcal {C}_{k}$. A

$\mathcal {C}_{k}$. A ![]() $K$-dimensional vector

$K$-dimensional vector ![]() $\boldsymbol {L} = [L_{1}, \ldots, L_{K}]^{\intercal }$ records the numbers of sub-clusters in each cluster, with

$\boldsymbol {L} = [L_{1}, \ldots, L_{K}]^{\intercal }$ records the numbers of sub-clusters in each cluster, with ![]() $L_{k}$ determined by

$L_{k}$ determined by

Here, the vector ![]() $\boldsymbol {R}^{\mathcal {T}}$ is normalised with the sum

$\boldsymbol {R}^{\mathcal {T}}$ is normalised with the sum ![]() ${\sum }_{k=1}^{K} R_{k}^{\mathcal {T}}$, denoted as

${\sum }_{k=1}^{K} R_{k}^{\mathcal {T}}$, denoted as ![]() $\boldsymbol {\hat {R}}^{\mathcal {T}}$, which ensures a suitable distribution of sub-clusters for the ensemble of

$\boldsymbol {\hat {R}}^{\mathcal {T}}$, which ensures a suitable distribution of sub-clusters for the ensemble of ![]() $n_{traj}$ trajectories. To increase the flexibility of the model, we introduce a sparsification controller

$n_{traj}$ trajectories. To increase the flexibility of the model, we introduce a sparsification controller ![]() $\beta \in [0, 1]$ in this clustering process. For the extreme value of

$\beta \in [0, 1]$ in this clustering process. For the extreme value of ![]() $\beta =1$, all the centroids are merged into one centroid, and the dCNM is identical to a classic CNM, with the maximum sparsification. For the other extreme

$\beta =1$, all the centroids are merged into one centroid, and the dCNM is identical to a classic CNM, with the maximum sparsification. For the other extreme ![]() $\beta =0$, the dCNM is minimally sparsified according to the transverse cluster size. For periodic or quasi-periodic systems, the dCNM with a large

$\beta =0$, the dCNM is minimally sparsified according to the transverse cluster size. For periodic or quasi-periodic systems, the dCNM with a large ![]() $\beta$ can capture most of the dynamics, while for complex systems such as chaotic systems, a small

$\beta$ can capture most of the dynamics, while for complex systems such as chaotic systems, a small ![]() $\beta$ may be needed. In addition, the minimum function prevents the possibility that the left-hand side of the equation exceeds the number of centroids

$\beta$ may be needed. In addition, the minimum function prevents the possibility that the left-hand side of the equation exceeds the number of centroids ![]() $n_{k}$ when

$n_{k}$ when ![]() $\beta$ is too small, causing the second-stage clustering to not be performed. The choice of

$\beta$ is too small, causing the second-stage clustering to not be performed. The choice of ![]() $\beta$ is discussed in Appendix C.

$\beta$ is discussed in Appendix C.

The refined centroids are obtained by averaging a series of centroids related to analogous trajectory segments. The redundancy of the ![]() $n_{traj}$ centroids is mitigated, and the corresponding transition network becomes sparse. The

$n_{traj}$ centroids is mitigated, and the corresponding transition network becomes sparse. The ![]() $k$-means++ algorithm is also used in the second-stage clustering. It will iteratively update the centroids

$k$-means++ algorithm is also used in the second-stage clustering. It will iteratively update the centroids ![]() $\boldsymbol {c}_{(kl)}$ and the characteristic function

$\boldsymbol {c}_{(kl)}$ and the characteristic function ![]() $\chi _{(kl)}^{m}$ until convergence or the maximum number of iterations is reached. The overall clustering process of the dCNM is summarised in Algorithm 1.

$\chi _{(kl)}^{m}$ until convergence or the maximum number of iterations is reached. The overall clustering process of the dCNM is summarised in Algorithm 1.

Algorithm 1: Pseudocode for the dynamics-augmented clustering procedure

2.3. Characterising the transition dynamics

We use the centroids obtained from § 2.2 as the nodes of the network and the linear transitions between these centroids as the edges of the network. First, we introduce two transition properties: the centroid transition probability ![]() $Q_{(ij) (kl)}$ and the transition time

$Q_{(ij) (kl)}$ and the transition time ![]() $T_{ik}$.

$T_{ik}$.

Figure 2 illustrates the definition of the subscripts in the centroid transition probability ![]() $Q_{(ij) (kl)}$, which can contain all possible transitions between the refined centroids of clusters

$Q_{(ij) (kl)}$, which can contain all possible transitions between the refined centroids of clusters ![]() $\mathcal {C}_{k}$ and

$\mathcal {C}_{k}$ and ![]() $\mathcal {C}_{i}$. Considering the transitions between these centroids, we define

$\mathcal {C}_{i}$. Considering the transitions between these centroids, we define ![]() $Q_{(ij) (kl)}$ as

$Q_{(ij) (kl)}$ as

where ![]() $n_{(ij) (kl)}$ is the number of transitions from

$n_{(ij) (kl)}$ is the number of transitions from ![]() $\boldsymbol {c}_{(kl)}$ only to

$\boldsymbol {c}_{(kl)}$ only to ![]() $\boldsymbol {c}_{(ij)}$. This definition differs from that of the CNM, which uses the cluster transition

$\boldsymbol {c}_{(ij)}$. This definition differs from that of the CNM, which uses the cluster transition ![]() $Q_{ik}$ in (2.9) to define the probability. In fact, we can compute

$Q_{ik}$ in (2.9) to define the probability. In fact, we can compute ![]() $Q_{ik}$ by summing up

$Q_{ik}$ by summing up ![]() $Q_{(ij) (kl)}$ as follows:

$Q_{(ij) (kl)}$ as follows:

\begin{equation} Q_{ik}=\sum_{j=1}^{L_{i}} \sum_{l=1}^{L_{k}} Q_{(ij) (kl)}. \end{equation}

\begin{equation} Q_{ik}=\sum_{j=1}^{L_{i}} \sum_{l=1}^{L_{k}} Q_{(ij) (kl)}. \end{equation}

The definition of the transition time ![]() $T_{ik}$ is identical to the CNM, as shown in figure 3. This property is not further investigated in the present work, as the transition time crossing the same clusters varies little in most dynamic systems.

$T_{ik}$ is identical to the CNM, as shown in figure 3. This property is not further investigated in the present work, as the transition time crossing the same clusters varies little in most dynamic systems.

Figure 2. Illustration of the subscripts in the refined centroid transitions. After the state space is clustered, only one subscript is needed to distinguish the different clusters, such as ![]() $\mathcal {C}_{k}$ and

$\mathcal {C}_{k}$ and ![]() $\mathcal {C}_{i}$. After the trajectory segments are clustered, two subscripts are needed to represent the refined centroids, such as

$\mathcal {C}_{i}$. After the trajectory segments are clustered, two subscripts are needed to represent the refined centroids, such as ![]() $\boldsymbol {c}_{(kl)}$ in

$\boldsymbol {c}_{(kl)}$ in ![]() $\mathcal {C}_{k}$ and

$\mathcal {C}_{k}$ and ![]() $\boldsymbol {c}_{(ij)}$ in

$\boldsymbol {c}_{(ij)}$ in ![]() $\mathcal {C}_{i}$.

$\mathcal {C}_{i}$.

Figure 3. Individual transition time ![]() $\tau ^{n}_{ik}$ for the transition from cluster

$\tau ^{n}_{ik}$ for the transition from cluster ![]() $\mathcal {C}_{k}$ to

$\mathcal {C}_{k}$ to ![]() $\mathcal {C}_{i}$.

$\mathcal {C}_{i}$.

Let ![]() $t^{n}$ be the instant when the first snapshot enters, and

$t^{n}$ be the instant when the first snapshot enters, and ![]() $t^{n+1}$ be the instant when the last snapshot leaves on one trajectory segment passing through cluster

$t^{n+1}$ be the instant when the last snapshot leaves on one trajectory segment passing through cluster ![]() $\mathcal {C}_{k}$. The residence time

$\mathcal {C}_{k}$. The residence time ![]() $\tau _{k}^{n}$ is the duration of staying in cluster

$\tau _{k}^{n}$ is the duration of staying in cluster ![]() $\mathcal {C}_{k}$ on this segment, which is given by

$\mathcal {C}_{k}$ on this segment, which is given by

For an individual transition from ![]() $\mathcal {C}_{k}$ to

$\mathcal {C}_{k}$ to ![]() $\mathcal {C}_{i}$, the transition time is defined as

$\mathcal {C}_{i}$, the transition time is defined as ![]() $\tau _{ik}^{n}$, which can be obtained by the average of the residence times from both clusters:

$\tau _{ik}^{n}$, which can be obtained by the average of the residence times from both clusters:

By averaging ![]() $\tau _{ik}^{n}$ for all the individual transitions from

$\tau _{ik}^{n}$ for all the individual transitions from ![]() $\mathcal {C}_{k}$ to

$\mathcal {C}_{k}$ to ![]() $\mathcal {C}_{i}$, the transition time can be expressed as

$\mathcal {C}_{i}$, the transition time can be expressed as

The essential dynamics can also be summarised into single entities as in the CNM, since the cluster-level information is still retained in the current model. For completeness, we introduce the cluster transition probability matrix ![]() $\boldsymbol {Q}$ and the cluster transition time matrix

$\boldsymbol {Q}$ and the cluster transition time matrix ![]() $\boldsymbol {T}$ as

$\boldsymbol {T}$ as

\begin{equation} \left. \begin{aligned} \boldsymbol{Q} & =Q_{ik} \in \mathbb{R}^{K \times K}, \quad i, k=1, \ldots, K\\ \boldsymbol{T} & =T_{ik} \in \mathbb{R}^{K \times K}, \quad i, k=1, \ldots, K. \end{aligned} \right\} \end{equation}

\begin{equation} \left. \begin{aligned} \boldsymbol{Q} & =Q_{ik} \in \mathbb{R}^{K \times K}, \quad i, k=1, \ldots, K\\ \boldsymbol{T} & =T_{ik} \in \mathbb{R}^{K \times K}, \quad i, k=1, \ldots, K. \end{aligned} \right\} \end{equation}

The cluster indices are reordered in both matrices to enhance readability. Cluster ![]() $\mathcal {C}_1$ is the cluster with the highest distribution probability,

$\mathcal {C}_1$ is the cluster with the highest distribution probability, ![]() $\mathcal {C}_2$ is the cluster with the highest transition probability leaving from

$\mathcal {C}_2$ is the cluster with the highest transition probability leaving from ![]() $\mathcal {C}_1$ and

$\mathcal {C}_1$ and ![]() $\mathcal {C}_3$ is the cluster with the highest transition probability leaving from

$\mathcal {C}_3$ is the cluster with the highest transition probability leaving from ![]() $\mathcal {C}_2$, so on and so forth. If the cluster with the highest probability is already assigned, we choose the cluster with the second highest probability. If all the clusters with non-zero transition probabilities are already assigned, we choose the next cluster with the highest distribution probability among the rest.

$\mathcal {C}_2$, so on and so forth. If the cluster with the highest probability is already assigned, we choose the cluster with the second highest probability. If all the clusters with non-zero transition probabilities are already assigned, we choose the next cluster with the highest distribution probability among the rest.

By analogy with ![]() $\boldsymbol {Q}$, the centroid transition probability

$\boldsymbol {Q}$, the centroid transition probability ![]() $Q_{(ij) (kl)}$ for given affiliations of the departure cluster

$Q_{(ij) (kl)}$ for given affiliations of the departure cluster ![]() $k$ and destination cluster

$k$ and destination cluster ![]() $i$ can form a centroid transition matrix

$i$ can form a centroid transition matrix ![]() $\boldsymbol {Q}_{ik}$ that captures all possible centroid dynamics between the two clusters:

$\boldsymbol {Q}_{ik}$ that captures all possible centroid dynamics between the two clusters:

Moreover, to summarise the centroid transition dynamics, the centroid transition probability ![]() $Q_{(ij) (kl)}$ for a given affiliation

$Q_{(ij) (kl)}$ for a given affiliation ![]() $k$ of only the departure cluster can form a centroid transition tensor

$k$ of only the departure cluster can form a centroid transition tensor ![]() $\mathcal {Q}_k$ that captures all the possible centroid dynamics from this cluster, as

$\mathcal {Q}_k$ that captures all the possible centroid dynamics from this cluster, as

The dCNM propagates the state motion based on the centroids ![]() $\boldsymbol {c}_{(kl)}$ for the reconstruction. To determine the transition dynamics, we first use

$\boldsymbol {c}_{(kl)}$ for the reconstruction. To determine the transition dynamics, we first use ![]() $\mathcal {Q}_{k}$ to find the centroid transitions from the initial centroid

$\mathcal {Q}_{k}$ to find the centroid transitions from the initial centroid ![]() $\boldsymbol {c}_{(kl)}$ to the destination centroid

$\boldsymbol {c}_{(kl)}$ to the destination centroid ![]() $\boldsymbol {c}_{(ij)}$. As the destination centroids are determined, the cluster-level dynamics is determined correspondingly. Then,

$\boldsymbol {c}_{(ij)}$. As the destination centroids are determined, the cluster-level dynamics is determined correspondingly. Then, ![]() $\boldsymbol {T}$ is used to identify the related transition time.

$\boldsymbol {T}$ is used to identify the related transition time.

We assume a linear state propagation between the two centroids ![]() $\boldsymbol {c}_{(kl)}$ and

$\boldsymbol {c}_{(kl)}$ and ![]() $\boldsymbol {c}_{(ij)}$ obtained from the tensors, as follows:

$\boldsymbol {c}_{(ij)}$ obtained from the tensors, as follows:

Here ![]() $t_{k}$ is the time when the centroid

$t_{k}$ is the time when the centroid ![]() $\boldsymbol {c}_{(kl)}$ is left. Note that we can use splines (Fernex et al. Reference Fernex, Noack and Semaan2021) or add the trajectory supporting points (Hou, Deng & Noack Reference Hou, Deng and Noack2022) to interpolate the motion between the centroids for smoother trajectories.

$\boldsymbol {c}_{(kl)}$ is left. Note that we can use splines (Fernex et al. Reference Fernex, Noack and Semaan2021) or add the trajectory supporting points (Hou, Deng & Noack Reference Hou, Deng and Noack2022) to interpolate the motion between the centroids for smoother trajectories.

Intriguingly, we observe that the trajectory-based clustering of the dCNM enhances the resolution of the cluster transitions. Now each centroid only has a limited number of destination centroids, often within the same cluster. This minimises the likelihood of selecting the wrong destination cluster based solely on the cluster transition probability matrix, as is the case in classic CNM. Consequently, it becomes feasible to accurately resolve long-term cluster transitions without the need for historical information. It can be argued that dCNM effectively constrains cluster transitions, leading to outcomes similar to those obtained with the higher-order CNM (Fernex et al. Reference Fernex, Noack and Semaan2021). This improvement is attained by replacing higher-order indexing with higher-dimensionality dual indexing. Specifically, the dual indexing also results in a substantial reduction in the model complexity. While the complexity of the high-order CNM is defined as ![]() $K^{\tilde {L}}$, where

$K^{\tilde {L}}$, where ![]() $K$ is the number of clusters and

$K$ is the number of clusters and ![]() $\tilde {L}$ is the order, the model complexity of the dCNM is expressed as

$\tilde {L}$ is the order, the model complexity of the dCNM is expressed as ![]() ${\sum }_{{k}=1}^{K} {L}_{k}$, which is a significantly lower value, particularly when

${\sum }_{{k}=1}^{K} {L}_{k}$, which is a significantly lower value, particularly when ![]() $\tilde {L}$ is relatively large. In terms of computational efficiency, dCNM with

$\tilde {L}$ is relatively large. In terms of computational efficiency, dCNM with ![]() $\beta = 0.80$ reduces the computational time by

$\beta = 0.80$ reduces the computational time by ![]() $40\,\%$ as compared with CNM with the same number of centroids. This improvement is primarily attributed to the hierarchical clustering. The computational load of the first-stage clustering on the state space is reduced by a small number of clusters

$40\,\%$ as compared with CNM with the same number of centroids. This improvement is primarily attributed to the hierarchical clustering. The computational load of the first-stage clustering on the state space is reduced by a small number of clusters ![]() $K$. The second-stage clustering on the trajectory segments accounts only for

$K$. The second-stage clustering on the trajectory segments accounts only for ![]() $20\,\%$ of the total computation time.

$20\,\%$ of the total computation time.

2.4. Validation

The auto-correlation function and the representation error are used for validation. We examine the prediction errors for cluster-based models considering both spatial and temporal perspectives. The spatial error arises from the inadequate representation by cluster centroids, as evidenced by the representation error and the auto-correlation function. The temporal error arises due to the imprecise reconstruction of intricate snapshot transition dynamics. This can be observed directly through the temporal evolution of snapshot affiliations and, to some extent, through the auto-correlation function.

The auto-correlation function is a practical tool for evaluating ROMs, as it can statistically reflect the prediction errors. Additionally, the auto-correlation function circumvents the problem of directly comparing two trajectories with finite prediction horizons, which may suffer from phase mismatch (Fernex et al. Reference Fernex, Noack and Semaan2021). This is particularly relevant for chaotic dynamics, whereby minor differences in initial conditions can lead to divergent trajectories, making the direct comparison of time series meaningless. The unbiased auto-correlation function of the state vector (Protas, Noack & Östh Reference Protas, Noack and Östh2015) is given by

In this study, ![]() $R(\tau )$ will be normalised by

$R(\tau )$ will be normalised by ![]() $R(0)$ (Deng et al. Reference Deng, Noack, Morzyński and Pastur2022). This function can also infer the spectral behaviour by computing the fluctuation energy at the vanishing delay.

$R(0)$ (Deng et al. Reference Deng, Noack, Morzyński and Pastur2022). This function can also infer the spectral behaviour by computing the fluctuation energy at the vanishing delay.

The representation error can be numerically computed as

\begin{equation} E_{r}=\frac{1}{M} \sum_{m=1}^{M} D_{\mathcal{T}}^{m}, \end{equation}

\begin{equation} E_{r}=\frac{1}{M} \sum_{m=1}^{M} D_{\mathcal{T}}^{m}, \end{equation}

where ![]() $D_{\mathcal {T}}^{m}$ is the minimum distance from the snapshot

$D_{\mathcal {T}}^{m}$ is the minimum distance from the snapshot ![]() $\boldsymbol {u}^m$ to the states on the reconstructed trajectory

$\boldsymbol {u}^m$ to the states on the reconstructed trajectory ![]() $\mathcal {T}$:

$\mathcal {T}$:

3. Lorenz system as an illustrative example

In this section we apply the dCNM to the Lorenz (Reference Lorenz1963) system to illustrate its superior spatial resolution in handling multiscale dynamics. We also compare it with the CNM (Fernex et al. Reference Fernex, Noack and Semaan2021; Li et al. Reference Li, Fernex, Semaan, Tan, Morzyński and Noack2021) of the same rank as a reference.

The Lorenz system is a three-dimensional autonomous system with non-periodic, deterministic and dissipative dynamics that exhibit exponential divergence and convergence to strange fractal attractors. The system is governed by three coupled nonlinear differential equations:

\begin{equation} \left. \begin{aligned} \mathrm{d} x/\mathrm{d}t & =\sigma(y-x),\\ \mathrm{d} y/\mathrm{d}t & =x(\rho-z)-y,\\ \mathrm{d}z/\mathrm{d}t & =x y-\beta z. \end{aligned} \right\} \end{equation}

\begin{equation} \left. \begin{aligned} \mathrm{d} x/\mathrm{d}t & =\sigma(y-x),\\ \mathrm{d} y/\mathrm{d}t & =x(\rho-z)-y,\\ \mathrm{d}z/\mathrm{d}t & =x y-\beta z. \end{aligned} \right\} \end{equation}

The system parameters are set as ![]() $\sigma = 10$,

$\sigma = 10$, ![]() $\rho = 28$ and

$\rho = 28$ and ![]() $\beta = 8/3$. These equations emulate the Rayleigh–Bénard convection. The trajectory of the system revolves around two weakly unstable oscillatory fixed points, forming two sets of attractors, that are loosely called ‘ears.’ These two ears have similar but not identical shapes, with the left ear being rounder and thicker in the toroidal region. The region where the ears overlap is called the branching region. The Lorenz system has two main types of dynamics. One is that the inner loop in each ear varies and oscillates for several cycles. The other is that the inner loop may randomly switch from one ear to another in the branching region and resume oscillatory motion.

$\beta = 8/3$. These equations emulate the Rayleigh–Bénard convection. The trajectory of the system revolves around two weakly unstable oscillatory fixed points, forming two sets of attractors, that are loosely called ‘ears.’ These two ears have similar but not identical shapes, with the left ear being rounder and thicker in the toroidal region. The region where the ears overlap is called the branching region. The Lorenz system has two main types of dynamics. One is that the inner loop in each ear varies and oscillates for several cycles. The other is that the inner loop may randomly switch from one ear to another in the branching region and resume oscillatory motion.

We numerically integrate the system using the fourth-order explicit Runge–Kutta method. The time-resolved ![]() $10\,000$ snapshots data with

$10\,000$ snapshots data with ![]() $\boldsymbol {u}^m=[x, y, z]^{\intercal }$ are collected at a sampling time step of

$\boldsymbol {u}^m=[x, y, z]^{\intercal }$ are collected at a sampling time step of ![]() $\Delta t = 0.015$ with an initial condition of

$\Delta t = 0.015$ with an initial condition of ![]() $[-3, 0, 31]^{\intercal }$ (Fernex et al. Reference Fernex, Noack and Semaan2021). This time step corresponds to approximately one-fiftieth of a typical cycle period. The first

$[-3, 0, 31]^{\intercal }$ (Fernex et al. Reference Fernex, Noack and Semaan2021). This time step corresponds to approximately one-fiftieth of a typical cycle period. The first ![]() $5\,\%$ of the snapshots are neglected to reserve only the post-transient dynamics.

$5\,\%$ of the snapshots are neglected to reserve only the post-transient dynamics.

Figure 4 shows the phase portrait of the clustered Lorenz system from the CNM and dCNM.

Figure 4. Phase portrait of the clustered Lorenz system from the CNM and dCNM. The small dots represent the snapshots and the large dots represent the centroids. Snapshots and centroids with the same colour belong to the same cluster. As a comparison, the CNM result in (a) is shown with the same number of centroids as the corresponding dCNM result. The dCNM result in (b) is shown with ![]() $K = 10$ and

$K = 10$ and ![]() $\beta = 0.90$.

$\beta = 0.90$.

We set ![]() $K =10$ for the state space clustering of the dCNM, which is consistent with previous studies (Kaiser et al. Reference Kaiser2014; Li et al. Reference Li, Fernex, Semaan, Tan, Morzyński and Noack2021). This number is large enough for the further subdivision of transition dynamics and is also small enough to obtain a simple structure for understanding. The sparsification index

$K =10$ for the state space clustering of the dCNM, which is consistent with previous studies (Kaiser et al. Reference Kaiser2014; Li et al. Reference Li, Fernex, Semaan, Tan, Morzyński and Noack2021). This number is large enough for the further subdivision of transition dynamics and is also small enough to obtain a simple structure for understanding. The sparsification index ![]() $\beta$ is chosen with large numbers as

$\beta$ is chosen with large numbers as ![]() $\beta =0.90$ to allow for a distinct visualisation of the centroids. In addition, since the trajectory in each ‘ear’ is confined to a two-dimensional surface, a high value of

$\beta =0.90$ to allow for a distinct visualisation of the centroids. In addition, since the trajectory in each ‘ear’ is confined to a two-dimensional surface, a high value of ![]() $\beta$ is deemed suitable. The normalised transverse cluster size vector

$\beta$ is deemed suitable. The normalised transverse cluster size vector ![]() $\boldsymbol {\hat {R}}^{\mathcal {T}} = [0.1163, 0.1262,0.1164,0.0921,0.0943,0.0908,0.1116,0.0840,0.0866,0.0817]^{\intercal}$ corresponds to the number of sub-clusters

$\boldsymbol {\hat {R}}^{\mathcal {T}} = [0.1163, 0.1262,0.1164,0.0921,0.0943,0.0908,0.1116,0.0840,0.0866,0.0817]^{\intercal}$ corresponds to the number of sub-clusters ![]() $\boldsymbol {L} = [13,15,13,11,11,11,13,10,10,10]^{\intercal }$.

$\boldsymbol {L} = [13,15,13,11,11,11,13,10,10,10]^{\intercal }$.

The two models exhibit notable differences in centroid distribution. The CNM clustering relies solely on the spatial topology in the phase space, evenly dividing the entire attractor and dispersing centroids uniformly throughout the phase portrait. It can be inferred that increasing the number of centroids under this uniform distribution does not lead to substantial changes, merely resulting in a denser centroid distribution. This uniform distribution possesses certain disadvantages regarding the dynamics. First, it unnecessarily complicates the transition rhythm as the deterministic large-scale transition may be fragmented into several stochastic transitions. Second, even with many centroids, it fails to capture the increasing oscillation amplitude between the loops in one ear, as the uniform distribution provides only a limited number of centroid orbits. The same result occurs for the branching region where these limited numbers of centroids usually oversimplify the switch between ears. In contrast, the distribution of the dCNM centroids resembles a weighted reallocation. For the Lorenz system, the state space is stratified along the trajectory direction, leading to a concentrated distribution of the dCNM centroids in the radial direction of the attractor and the branching region, which correspond to the system's primary dynamics. Additionally, varying quantities of the centroids can be observed in the radial direction in the toroidal region, depending on its thickness. In thinner toroidal regions with smaller variations between trajectory segments, the second-stage clustering assigns fewer sub-clusters and, consequently, builds fewer centroids.

The cluster transition matrices, which are a distinctive feature of cluster modelling, are preserved because the dCNM maintains the coarse-grained transitions at the cluster level. Figure 5 illustrates the cluster transition probability matrix ![]() $\boldsymbol {Q}$ and the corresponding transition time matrix

$\boldsymbol {Q}$ and the corresponding transition time matrix ![]() $\boldsymbol {T}$ to illustrate the significant dynamics of the Lorenz system. It is worth noting that in the case of the CNM with an equivalent number of centroids, the matrices become considerably larger, which diminishes their readability and interpretability. The matrices reveal three distinct cluster groups. The first group comprises clusters

$\boldsymbol {T}$ to illustrate the significant dynamics of the Lorenz system. It is worth noting that in the case of the CNM with an equivalent number of centroids, the matrices become considerably larger, which diminishes their readability and interpretability. The matrices reveal three distinct cluster groups. The first group comprises clusters ![]() $\mathcal {C}_{1}$ and

$\mathcal {C}_{1}$ and ![]() $\mathcal {C}_{2}$, which resolve the branching region and exhibit similar transition probabilities to clusters

$\mathcal {C}_{2}$, which resolve the branching region and exhibit similar transition probabilities to clusters ![]() $\mathcal {C}_{3}$ and

$\mathcal {C}_{3}$ and ![]() $\mathcal {C}_{7}$. The branching region is further linked to different ears and is crucial to the attractor oscillation. Clusters

$\mathcal {C}_{7}$. The branching region is further linked to different ears and is crucial to the attractor oscillation. Clusters ![]() $\mathcal {C}_{1}$ and

$\mathcal {C}_{1}$ and ![]() $\mathcal {C}_{2}$ can be referred to as flipper clusters (Kaiser et al. Reference Kaiser2014), representing a switch between the different groups. The equivalent transition probability from

$\mathcal {C}_{2}$ can be referred to as flipper clusters (Kaiser et al. Reference Kaiser2014), representing a switch between the different groups. The equivalent transition probability from ![]() $\mathcal {C}_{2}$ is consistent with the random jumping behaviour of the two ears. The other two groups demonstrate an inner-group circulation corresponding to the main components of the two ears, exemplified by the cluster chains

$\mathcal {C}_{2}$ is consistent with the random jumping behaviour of the two ears. The other two groups demonstrate an inner-group circulation corresponding to the main components of the two ears, exemplified by the cluster chains ![]() $\mathcal {C}_{3} \to \mathcal {C}_{4} \to \mathcal {C}_{5} \to \mathcal {C}_{6}$ and

$\mathcal {C}_{3} \to \mathcal {C}_{4} \to \mathcal {C}_{5} \to \mathcal {C}_{6}$ and ![]() $\mathcal {C}_{7} \to \mathcal {C}_{8} \to \mathcal {C}_{9} \to \mathcal {C}_{10}$. These chains exhibit deterministic transition probabilities that resolve the cyclic behaviour. In the second-stage clustering these two groups are further categorised into numerous centroid orbits. Moreover, the transition time matrix resolves the variance in the transition times, with significantly shorter transition times observed in the cyclic groups compared with transitions involving the flipper clusters.

$\mathcal {C}_{7} \to \mathcal {C}_{8} \to \mathcal {C}_{9} \to \mathcal {C}_{10}$. These chains exhibit deterministic transition probabilities that resolve the cyclic behaviour. In the second-stage clustering these two groups are further categorised into numerous centroid orbits. Moreover, the transition time matrix resolves the variance in the transition times, with significantly shorter transition times observed in the cyclic groups compared with transitions involving the flipper clusters.

Figure 5. Transition matrices of the Lorenz system. The colour bar indicates the values of the terms. (a) Transition probability matrix ![]() $\boldsymbol {Q}$. (b) Transition time matrix

$\boldsymbol {Q}$. (b) Transition time matrix ![]() $\boldsymbol {T}$.

$\boldsymbol {T}$.

The original and reconstructed trajectories in the phase space are directly compared. We focus solely on the spatial resolution, disregarding phase mismatches during temporal evolution. Figure 6 shows the original Lorenz system and the reconstruction by the CNM and dCNM with the same parameters as in figure 4. To ensure clarity, we select a time window from ![]() $t=0$ to

$t=0$ to ![]() $t=30$ for the trajectories and employ spline interpolation for a smooth reconstruction. Inaccurate or non-physical centroid transitions, along with incomplete dynamic coverage, can lead to substantial deformations in the reconstructed trajectory. As expected, the dCNM provides a more accurate reconstruction than the CNM. The CNM uses a finite number of centroid orbits to represent oscillating attractors, converting slow and continuous amplitude growth into limited and abrupt amplitude jumps. Furthermore, the CNM may group one continuous snapshot loop into clusters belonging to different centroid orbits, often when these clusters are adjacent to each other. This can lead to unnecessary orbit-crossing centroid transitions and result in non-physical radial jumps in the reconstructed trajectory. In contrast, the dCNM provides more comprehensive dynamic coverage, resolving more cyclic behaviour with additional centroid orbits. Dual indexing also guarantees accurate centroid transitions. The radial jumps are eliminated, as departing centroids can only transition to destination centroids within the same centroid orbits. Consequently, oscillations are effectively resolved by the centroid orbits, and transitions between them are constrained by densely distributed centroids in the branching region, ensuring a smoothly varied oscillation.

$t=30$ for the trajectories and employ spline interpolation for a smooth reconstruction. Inaccurate or non-physical centroid transitions, along with incomplete dynamic coverage, can lead to substantial deformations in the reconstructed trajectory. As expected, the dCNM provides a more accurate reconstruction than the CNM. The CNM uses a finite number of centroid orbits to represent oscillating attractors, converting slow and continuous amplitude growth into limited and abrupt amplitude jumps. Furthermore, the CNM may group one continuous snapshot loop into clusters belonging to different centroid orbits, often when these clusters are adjacent to each other. This can lead to unnecessary orbit-crossing centroid transitions and result in non-physical radial jumps in the reconstructed trajectory. In contrast, the dCNM provides more comprehensive dynamic coverage, resolving more cyclic behaviour with additional centroid orbits. Dual indexing also guarantees accurate centroid transitions. The radial jumps are eliminated, as departing centroids can only transition to destination centroids within the same centroid orbits. Consequently, oscillations are effectively resolved by the centroid orbits, and transitions between them are constrained by densely distributed centroids in the branching region, ensuring a smoothly varied oscillation.

Figure 6. Trajectory of the Lorenz system. The thin grey curve represents the original trajectory, the thick red curve represents the reconstructed trajectory and the red dots represent the centroids. (a) The CNM reconstruction and (b) the dCNM reconstruction are performed with the same parameters as in figure 4.

The auto-correlation function is computed to reflect the model accuracy, as shown in figure 7. In the original data set, the normalised auto-correlation function ![]() $R(\tau ) / R(0)$ vanishes smoothly as

$R(\tau ) / R(0)$ vanishes smoothly as ![]() $\tau$ increases, and the variance between the periodic behaviour can be clearly observed. However, the CNM reconstruction captures only the first four periods of oscillation dynamics. As

$\tau$ increases, and the variance between the periodic behaviour can be clearly observed. However, the CNM reconstruction captures only the first four periods of oscillation dynamics. As ![]() $\tau$ increases, there is a sudden amplitude decay accompanied by a phase mismatch. This can be attributed to amplitude jumps between the centroid loops and commonly occurring orbit-crossing transitions. In contrast, the dCNM reconstruction accurately captures both the amplitude and frequency of the oscillation dynamics, demonstrating robust and precise long-time-scale behaviours.

$\tau$ increases, there is a sudden amplitude decay accompanied by a phase mismatch. This can be attributed to amplitude jumps between the centroid loops and commonly occurring orbit-crossing transitions. In contrast, the dCNM reconstruction accurately captures both the amplitude and frequency of the oscillation dynamics, demonstrating robust and precise long-time-scale behaviours.

Figure 7. Auto-correlation function for ![]() $\tau \in [0, 30)$ of the Lorenz system. The thin black curves represent the original data set and the thick red curves represent the models: (a) CNM and (b) dCNM.

$\tau \in [0, 30)$ of the Lorenz system. The thin black curves represent the original data set and the thick red curves represent the models: (a) CNM and (b) dCNM.

4. Dynamics-augmented modelling of the sphere wake

In this section we demonstrate the dCNM for the transient and post-transient flow dynamics of the sphere wake. The numerical method for obtaining the flow field data set and the flow characteristics is presented in § 4.1. The performance of the dCNM for the periodic, quasi-periodic and chaotic flow regimes is evaluated in §§ 4.2, 4.3 and 4.4, respectively. The physical interpretation of the modelling strategy is discussed in § 4.5.

4.1. Numerical methods and flow features

Numerical simulation is performed to obtain the data set, as shown in figure 8. A sphere with a diameter ![]() $D$ is placed in a uniform flow with a streamwise velocity

$D$ is placed in a uniform flow with a streamwise velocity ![]() $U_\infty$. The computational domain takes the form of a cylindrical tube, with its origin at the centre of the sphere and its axial direction along the streamwise direction (

$U_\infty$. The computational domain takes the form of a cylindrical tube, with its origin at the centre of the sphere and its axial direction along the streamwise direction (![]() $x$ axis). The dimensions of the domain in the

$x$ axis). The dimensions of the domain in the ![]() $x$,

$x$, ![]() $y$ and

$y$ and ![]() $z$ directions are

$z$ directions are ![]() $80D$,

$80D$, ![]() $10D$ and

$10D$ and ![]() $10D$, respectively. The inlet is located

$10D$, respectively. The inlet is located ![]() $20D$ upstream from the sphere. These specific domain parameters are chosen to minimise any potential distortion arising from the outer boundary conditions while also mitigating computational costs (Pan, Zhang & Ni Reference Pan, Zhang and Ni2018; Lorite-Díez & Jiménez-González Reference Lorite-Díez and Jiménez-González2020). The fluid flow is governed by the incompressible Navier–Stokes equations:

$20D$ upstream from the sphere. These specific domain parameters are chosen to minimise any potential distortion arising from the outer boundary conditions while also mitigating computational costs (Pan, Zhang & Ni Reference Pan, Zhang and Ni2018; Lorite-Díez & Jiménez-González Reference Lorite-Díez and Jiménez-González2020). The fluid flow is governed by the incompressible Navier–Stokes equations:

\begin{equation} \left. \begin{gathered} \partial{\boldsymbol{u}} / \partial{t} + \boldsymbol{u} \boldsymbol{\cdot} \boldsymbol{\nabla} \boldsymbol{u} + \boldsymbol{\nabla} p- \nabla^{2} \boldsymbol{u} / {{Re}} = 0,\\ \boldsymbol{\nabla} \boldsymbol{\cdot} \boldsymbol{u}=0. \end{gathered} \right\} \end{equation}

\begin{equation} \left. \begin{gathered} \partial{\boldsymbol{u}} / \partial{t} + \boldsymbol{u} \boldsymbol{\cdot} \boldsymbol{\nabla} \boldsymbol{u} + \boldsymbol{\nabla} p- \nabla^{2} \boldsymbol{u} / {{Re}} = 0,\\ \boldsymbol{\nabla} \boldsymbol{\cdot} \boldsymbol{u}=0. \end{gathered} \right\} \end{equation}

Here ![]() $\boldsymbol {u}$ denotes the velocity vector

$\boldsymbol {u}$ denotes the velocity vector ![]() $(u_x, u_y, u_z)$,

$(u_x, u_y, u_z)$, ![]() $p$ is the static pressure and

$p$ is the static pressure and ![]() ${{Re}}$ is the Reynolds number, which is defined by

${{Re}}$ is the Reynolds number, which is defined by

where ![]() $\nu$ is the kinematic viscosity.

$\nu$ is the kinematic viscosity.

Figure 8. Numerical sketch of the sphere wake.

The net forces on the sphere have three components ![]() $F_\alpha$,

$F_\alpha$, ![]() $\alpha = x, y, z$, and the corresponding force coefficients

$\alpha = x, y, z$, and the corresponding force coefficients ![]() $C_{\alpha }$ are defined as

$C_{\alpha }$ are defined as

where ![]() $S={\rm \pi} D^{2}/4$ is the projected surface area of the sphere in the streamwise direction. The total drag force coefficient is

$S={\rm \pi} D^{2}/4$ is the projected surface area of the sphere in the streamwise direction. The total drag force coefficient is ![]() $C_D = C_x$. Since the lift coefficient can have any direction in the

$C_D = C_x$. Since the lift coefficient can have any direction in the ![]() $yz$ plane on the axisymmetric sphere, the total lift force coefficient

$yz$ plane on the axisymmetric sphere, the total lift force coefficient ![]() $C_L$ is given by

$C_L$ is given by

The flow parameters are non-dimensionalised based on the characteristic length ![]() $D$ and the free-stream velocity

$D$ and the free-stream velocity ![]() $U_\infty$. This implies that the time unit scales are

$U_\infty$. This implies that the time unit scales are ![]() $D/U_\infty$ and the pressure scales are

$D/U_\infty$ and the pressure scales are ![]() $\rho U_\infty ^2$, where

$\rho U_\infty ^2$, where ![]() $\rho$ is the density. The Strouhal number

$\rho$ is the density. The Strouhal number ![]() $St$ is correspondingly expressed as

$St$ is correspondingly expressed as

where ![]() $f$ is the characteristic frequency.

$f$ is the characteristic frequency.

The ANSYS Fluent ![]() $15.0$ software is used as the computational fluid dynamics (CFD) solver for the governing equations with the cell-centred finite volume method. We impose a uniform streamwise velocity

$15.0$ software is used as the computational fluid dynamics (CFD) solver for the governing equations with the cell-centred finite volume method. We impose a uniform streamwise velocity ![]() $\boldsymbol {u} = [U_\infty, 0, 0]$ at the inlet boundary and an outflow condition at the outlet boundary. The outflow condition is set as a Neumann condition for the velocity,

$\boldsymbol {u} = [U_\infty, 0, 0]$ at the inlet boundary and an outflow condition at the outlet boundary. The outflow condition is set as a Neumann condition for the velocity, ![]() $\partial _x \boldsymbol {u} = [0, 0, 0]$, and a Dirichlet condition for the pressure,

$\partial _x \boldsymbol {u} = [0, 0, 0]$, and a Dirichlet condition for the pressure, ![]() $p_{out} = 0$. We apply a no-slip boundary condition on the sphere surface and a slip boundary condition on the cylindrical tube walls to prevent wake-wall interpolations. The pressure-implicit split-operator algorithm is chosen for pressure–velocity coupling. For the governing equations, the second-order scheme is used for the spatial discretization, and the first-order implicit scheme is used for the temporal term. To satisfy the Courant–Friedrichs–Levy condition, a small integration time step is set as

$p_{out} = 0$. We apply a no-slip boundary condition on the sphere surface and a slip boundary condition on the cylindrical tube walls to prevent wake-wall interpolations. The pressure-implicit split-operator algorithm is chosen for pressure–velocity coupling. For the governing equations, the second-order scheme is used for the spatial discretization, and the first-order implicit scheme is used for the temporal term. To satisfy the Courant–Friedrichs–Levy condition, a small integration time step is set as ![]() $\Delta t = 0.01$ non-dimensional time unit, such that the Courant number is below

$\Delta t = 0.01$ non-dimensional time unit, such that the Courant number is below ![]() $1$ for all simulations. For the periodic flow at

$1$ for all simulations. For the periodic flow at ![]() ${{Re}} = 300$, the simulation starts in the vicinity of the steady solution and runs for

${{Re}} = 300$, the simulation starts in the vicinity of the steady solution and runs for ![]() $t = 200$ time units, incorporating the transient and post-transient dynamics. For the quasi-periodic flow, the simulations are performed for

$t = 200$ time units, incorporating the transient and post-transient dynamics. For the quasi-periodic flow, the simulations are performed for ![]() $t = 500$ time units and for the chaotic flow for

$t = 500$ time units and for the chaotic flow for ![]() $t = 700$ time units. The snapshots are collected at a sampling time step of

$t = 700$ time units. The snapshots are collected at a sampling time step of ![]() $\Delta t_s = 0.2$ time units for all the test cases. Moreover, we discard the first

$\Delta t_s = 0.2$ time units for all the test cases. Moreover, we discard the first ![]() $200$ time units to eliminate any transient phases for the quasi-periodic and chaotic cases. The relevant numerical investigation approach can be found in Johnson & Patel (Reference Johnson and Patel1999) and Rajamuni, Thompson & Hourigan (Reference Rajamuni, Thompson and Hourigan2018). For the convergence and validation studies, see Appendix A.

$200$ time units to eliminate any transient phases for the quasi-periodic and chaotic cases. The relevant numerical investigation approach can be found in Johnson & Patel (Reference Johnson and Patel1999) and Rajamuni, Thompson & Hourigan (Reference Rajamuni, Thompson and Hourigan2018). For the convergence and validation studies, see Appendix A.

The wake of a sphere exhibits different flow regimes as ![]() ${{Re}}$ increases, ultimately transitioning to a chaotic state. At

${{Re}}$ increases, ultimately transitioning to a chaotic state. At ![]() ${{Re}} = 20 \sim 24$, flow separation occurs, forming a steady recirculating bubble, as observed in previous studies (Sheard, Thompson & Hourigan Reference Sheard, Thompson and Hourigan2003; Eshbal et al. Reference Eshbal, Rinsky, David, Greenblatt and van Hout2019). The length of this wake grows linearly with

${{Re}} = 20 \sim 24$, flow separation occurs, forming a steady recirculating bubble, as observed in previous studies (Sheard, Thompson & Hourigan Reference Sheard, Thompson and Hourigan2003; Eshbal et al. Reference Eshbal, Rinsky, David, Greenblatt and van Hout2019). The length of this wake grows linearly with ![]() $\ln ({{Re}})$. When

$\ln ({{Re}})$. When ![]() ${{Re}}$ surpasses

${{Re}}$ surpasses ![]() $130$ (Taneda Reference Taneda1956), the wake bubble starts oscillating in a wave-like manner, while the flow maintains axisymmetry. The first Hopf bifurcation takes place at approximately

$130$ (Taneda Reference Taneda1956), the wake bubble starts oscillating in a wave-like manner, while the flow maintains axisymmetry. The first Hopf bifurcation takes place at approximately ![]() ${{Re}} \approx 212$ (Fabre et al. Reference Fabre, Auguste and Magnaudet2008), leading to a loss of axisymmetry and the emergence of a planar-symmetric double-thread wake with two stable and symmetric vortices. The orientation of the symmetry plane can vary (Johnson & Patel Reference Johnson and Patel1999). At a subsequent Hopf bifurcation around

${{Re}} \approx 212$ (Fabre et al. Reference Fabre, Auguste and Magnaudet2008), leading to a loss of axisymmetry and the emergence of a planar-symmetric double-thread wake with two stable and symmetric vortices. The orientation of the symmetry plane can vary (Johnson & Patel Reference Johnson and Patel1999). At a subsequent Hopf bifurcation around ![]() ${{Re}} = 270 \sim 272$ (Johnson & Patel Reference Johnson and Patel1999; Fabre et al. Reference Fabre, Auguste and Magnaudet2008), the flow becomes time dependent, initiating periodic vortex shedding with the same symmetry plane as before. In the range

${{Re}} = 270 \sim 272$ (Johnson & Patel Reference Johnson and Patel1999; Fabre et al. Reference Fabre, Auguste and Magnaudet2008), the flow becomes time dependent, initiating periodic vortex shedding with the same symmetry plane as before. In the range ![]() $272 < {{Re}} < 420$ (Eshbal et al. Reference Eshbal, Rinsky, David, Greenblatt and van Hout2019), periodicity and the symmetry plane diminish, with the vortex shedding becoming quasi-periodic and then fully three dimensional. Beyond

$272 < {{Re}} < 420$ (Eshbal et al. Reference Eshbal, Rinsky, David, Greenblatt and van Hout2019), periodicity and the symmetry plane diminish, with the vortex shedding becoming quasi-periodic and then fully three dimensional. Beyond ![]() ${{Re}} = 420$, shedding becomes irregular and chaotic (Ormières & Provansal Reference Ormières and Provansal1999; Pan et al. Reference Pan, Zhang and Ni2018; Eshbal et al. Reference Eshbal, Rinsky, David, Greenblatt and van Hout2019), due to the azimuthal rotation of the separation point and lateral oscillations of the shedding.

${{Re}} = 420$, shedding becomes irregular and chaotic (Ormières & Provansal Reference Ormières and Provansal1999; Pan et al. Reference Pan, Zhang and Ni2018; Eshbal et al. Reference Eshbal, Rinsky, David, Greenblatt and van Hout2019), due to the azimuthal rotation of the separation point and lateral oscillations of the shedding.

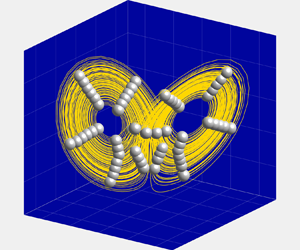

In this study we examine three baseline flow regimes of the sphere wake: periodic flow at ![]() ${{Re}} = 300$, quasi-periodic flow at

${{Re}} = 300$, quasi-periodic flow at ![]() ${{Re}} = 330$ and chaotic flow at

${{Re}} = 330$ and chaotic flow at ![]() ${{Re}} = 450$. Figure 9 illustrates the flow characteristics of these regimes. Figure 9(a,b) depicts the lift coefficient

${{Re}} = 450$. Figure 9 illustrates the flow characteristics of these regimes. Figure 9(a,b) depicts the lift coefficient ![]() $C_L$ of the periodic flow. The oscillation of

$C_L$ of the periodic flow. The oscillation of ![]() $C_L$ starts after a few time units, with the amplitude gradually increasing in the cycle-to-cycle evolution, eventually forming the limit cycle. Figure 9(c) shows the instantaneous vortex structures of the quasi-periodic flow, identified by the

$C_L$ starts after a few time units, with the amplitude gradually increasing in the cycle-to-cycle evolution, eventually forming the limit cycle. Figure 9(c) shows the instantaneous vortex structures of the quasi-periodic flow, identified by the ![]() $Q$ criterion and colour coded by the non-dimensional velocity

$Q$ criterion and colour coded by the non-dimensional velocity ![]() $U_{\infty }$. The vortex shedding forms hairpin vortices with slight variations between successive shedding events, signifying the absence of short-term periodicity while retaining long-term periodic behaviour. Figure 9(d,e) shows the temporal evolution of

$U_{\infty }$. The vortex shedding forms hairpin vortices with slight variations between successive shedding events, signifying the absence of short-term periodicity while retaining long-term periodic behaviour. Figure 9(d,e) shows the temporal evolution of ![]() $C_L$ and its phase portrait, respectively. The amplitude of

$C_L$ and its phase portrait, respectively. The amplitude of ![]() $C_L$ is strongly associated with the quasi-periodic dynamic, and the modulation also thickens the limit cycle of the oscillator on the phase portrait. The power spectral density in figure 9(f) indicates two dominant frequencies: a higher frequency linked to natural shedding and a lower frequency associated with amplitude modulation resulting from variations between shedding events. For chaotic flow, periodicity entirely vanishes, and the flow regime displays the typical features of a chaotic system. The hairpin vortexes in figure 9(g) shed irregularly, with varying separation angles and even double spirals. The temporal evolution of

$C_L$ is strongly associated with the quasi-periodic dynamic, and the modulation also thickens the limit cycle of the oscillator on the phase portrait. The power spectral density in figure 9(f) indicates two dominant frequencies: a higher frequency linked to natural shedding and a lower frequency associated with amplitude modulation resulting from variations between shedding events. For chaotic flow, periodicity entirely vanishes, and the flow regime displays the typical features of a chaotic system. The hairpin vortexes in figure 9(g) shed irregularly, with varying separation angles and even double spirals. The temporal evolution of ![]() $C_L$ in figure 9(h) exhibits more complex dynamics, with the phase diagram in figure 9(i) depicting many random loops that no longer exhibit circular patterns. Furthermore, the power spectral density of