1. Introduction

The motions of the free surface of a water flow can exhibit various signatures, such as waves, ripples and dimples (Sarpkaya Reference Sarpkaya1996; Brocchini & Peregrine Reference Brocchini and Peregrine2001), which are influenced by the flow underneath and governed by the kinematics and dynamics of the free surface. Inferring information about subsurface flows and even reconstructing the subsurface flow field from observable free-surface features are of great interest for many applications, such as non-intrusive flow measurements. For example, measurements of the turbulence in the ocean boundary layer can be challenging in the field. In situ measurements relying on single or multiple devices, such as the acoustic Doppler current profiler, can only provide sampled or averaged statistics about the subsurface motions. The capability of inferring the subsurface flow field from free-surface motions can enable measuring subsurface flows through remote sensing and aerial imaging techniques, which have already been applied to the measurements of surface currents (see e.g. Lund et al. Reference Lund, Graber, Hessner and Williams2015; Metoyer et al. Reference Metoyer, Barzegar, Bogucki, Haus and Shao2021) and can provide a greater spatial coverage than point measurements. By identifying subsurface flow structures, which may originate from submerged objects, subsurface flow reconstruction can also benefit applications such as bathymetry mapping and the detection of submerged objects.

In turbulent free-surface flows, the motion of the free surface is related to the various turbulent coherent structures located underneath. For example, in turbulent open-channel flows, it has been found that the turbulence eddies generated near the water bottom can rise to the near-surface region and excite the free surface (e.g. Komori, Murakami & Ueda Reference Komori, Murakami and Ueda1989; Komori et al. Reference Komori, Nagaosa, Murakami, Chiba, Ishii and Kuwahara1993; Rashidi Reference Rashidi1997; Muraro et al. Reference Muraro, Dolcetti, Nichols, Tait and Horoshenkov2021). Nagaosa (Reference Nagaosa1999) and Nagaosa & Handler (Reference Nagaosa and Handler2003) tracked the evolution of hairpin-like vortices from the bottom wall to the near-surface region and provided detailed visualisations of the vortices impacting the free surface and the vortex-induced surface velocities. It was found that upwelling vortices can induce spiral motions at the free surface (Pan & Banerjee Reference Pan and Banerjee1995; Kumar, Gupta & Banerjee Reference Kumar, Gupta and Banerjee1998). These studies advanced our understanding of the interactions between free surfaces and turbulence. However, estimating subsurface flows remains challenging despite our knowledge of the correlations between certain surface signatures and flow structures, such as the relation between surface dimples and surface-connected vertical vortices. This is because turbulence is characterised by nonlinear processes and flow structures with various types and scales, which result in complex surface manifestations. Furthermore, water waves, which are governed by the free-surface kinematic and dynamic boundary conditions, are ubiquitous in free-surface flows and can arise from disturbances caused by turbulence. For example, random capillary–gravity waves can be excited by turbulence eddies (Savelsberg & van de Water Reference Savelsberg and van de Water2008). Isotropic small-scale waves were also observed in the numerical experiments conducted by Yamamoto & Kunugi (Reference Yamamoto and Kunugi2011). These oscillatory motions may further obfuscate the correlations between free-surface features and subsurface structures.

In previous research, some efforts have been made to infer subsurface characteristics from surface features. Koltakov (Reference Koltakov2013) showed that the mean depth and the effective roughness of the bottom can be inferred from surface motions. Mandel et al. (Reference Mandel, Rosenzweig, Chung, Ouellette and Koseff2017) proposed an algorithm based on the Schlieren method that can track the scales and movements of Kelvin–Helmholtz rollers over submerged canopies. In these works, the predictable information was limited to either targeted features, such as Kelvin–Helmholtz rollers, or statistical features, such as bottom roughness.

Inferring flow information from boundary observations is also of interest for other types of flows. For example, for wall-bounded flows, various techniques for reconstructing flow fields from wall shear stress and/or pressure have been developed, including the Kalman filtering technique (Colburn, Cessna & Bewley Reference Colburn, Cessna and Bewley2011), the linear stochastic estimation (LSE) method (Suzuki & Hasegawa Reference Suzuki and Hasegawa2017; Encinar & Jiménez Reference Encinar and Jiménez2019) and the adjoint method (Wang & Zaki Reference Wang and Zaki2021). These methods are capable of estimating three-dimensional flow velocities, which contain more details than what can be inferred by the aforementioned techniques for free-surface flows. How turbulence, a chaotic dynamical system, impacts the accuracy of reconstruction algorithms has also been studied in detail for wall-bounded flows. For example, based on an adjoint-variational reconstruction method, Wang, Wang & Zaki (Reference Wang, Wang and Zaki2022) quantitatively studied the difficulty of flow reconstruction by computing the sensitivity of the measurements to the flow state and showed how the wall distance and measurement time affect the reconstruction accuracy.

In recent years, various machine learning methods based on neural network (NN) architectures have seen increasing use in many fields involving fluid dynamics and turbulence research. Neural networks are inspired from the biological networks of neurons and use networks of artificial neurons to approximate the nonlinear relationships between input and output data (Duraisamy, Iaccarino & Xiao Reference Duraisamy, Iaccarino and Xiao2019; Brunton, Noack & Koumoutsakos Reference Brunton, Noack and Koumoutsakos2020). Specialised NNs have also been developed for various tasks, e.g. the convolutional NNs (CNNs) (see, e.g. Lecun et al. Reference Lecun, Bottou, Bengio and Haffner1998) are commonly used for processing image-like data, and recurrent NNs (see, e.g. Rumelhart, Hinton & Williams Reference Rumelhart, Hinton and Williams1986) are employed for temporal data. Successful applications of NNs in fluid dynamics include dimensionality reduction (Milano & Koumoutsakos Reference Milano and Koumoutsakos2002; Fukami, Nakamura & Fukagata Reference Fukami, Nakamura and Fukagata2020; Murata, Fukami & Fukagata Reference Murata, Fukami and Fukagata2020; Page, Brenner & Kerswell Reference Page, Brenner and Kerswell2021), turbulence modelling (Ling, Kurzawski & Templeton Reference Ling, Kurzawski and Templeton2016; Parish & Duraisamy Reference Parish and Duraisamy2016; Wang, Wu & Xiao Reference Wang, Wu and Xiao2017), flow control and optimisation (Park & Choi Reference Park and Choi2020) and prediction of flow evolution (Lee & You Reference Lee and You2019; Srinivasan et al. Reference Srinivasan, Guastoni, Azizpour, Schlatter and Vinuesa2019; Du & Zaki Reference Du and Zaki2021). Machine learning has also been applied to the identification of special regions in fluid flows, such as identifying the turbulent/non-turbulent regions (Li et al. Reference Li, Yang, Zhang, He, Deng and Shen2020) and finding the dynamically significant regions in turbulent flows (Jiménez Reference Jiménez2018; Jagodinski, Zhu & Verma Reference Jagodinski, Zhu and Verma2020). In addition, NNs have been found useful in inverse problems. For example, it has been shown that NNs can infer small-scale structures and reconstruct turbulent flow fields from low-resolution spatio-temporal measurements (Xie et al. Reference Xie, Franz, Chu and Thuerey2018; Fukami, Fukagata & Taira Reference Fukami, Fukagata and Taira2019; Werhahn et al. Reference Werhahn, Xie, Chu and Thuerey2019; Liu et al. Reference Liu, Tang, Huang and Lu2020; Fukami, Fukagata & Taira Reference Fukami, Fukagata and Taira2021; Kim et al. Reference Kim, Kim, Won and Lee2021). This type of super-resolution reconstruction technique can be used to improve the quality of experimental and simulation data with limited resolutions.

Neural networks have also been successfully applied to the state estimation of turbulent flows from indirect or limited measurements. Milano & Koumoutsakos (Reference Milano and Koumoutsakos2002) proposed a fully connected NN model that reconstructs the near-wall velocity field of a turbulent channel flow using wall shear stress and pressure. They showed that the NN with nonlinear mappings produced better reconstructions than the linear model. Güemes, Discetti & Ianiro (Reference Güemes, Discetti and Ianiro2019) proposed a CNN model combined with proper orthogonal decomposition to reconstruct large-scale motions from wall shear stress. A generative adversarial network was developed by Güemes et al. (Reference Güemes, Discetti, Ianiro, Sirmacek, Azizpour and Vinuesa2021) to reconstruct a flow from wall shear stress and pressure. Guastoni et al. (Reference Guastoni, Encinar, Schlatter, Azizpour and Vinuesa2020) showed that a fully CNN could also produce good velocity field reconstructions from wall shear stress. This model was further extended by Guastoni et al. (Reference Guastoni, Güemes, Ianiro, Discetti, Schlatter, Azizpour and Vinuesa2021) to predict three-dimensional velocity fields. Erichson et al. (Reference Erichson, Mathelin, Yao, Brunton, Mahoney and Kutz2020) developed a shallow NN to reconstruct a flow field from a limited number of sensors and showed that it performed well for a variety of problems, including the flow past a cylinder, the distribution of sea surface temperatures and homogeneous isotropic turbulence. Matsuo et al. (Reference Matsuo, Nakamura, Morimoto, Fukami and Fukagata2021) combined two-dimensional and three-dimensional CNNs to reconstruct a three-dimensional flow field from several two-dimensional slices. The known physical laws can also be integrated with NNs for reconstruction purposes. For example, the governing equations that describe the evolution of flows were used by Raissi, Perdikaris & Karniadakis (Reference Raissi, Perdikaris and Karniadakis2019) to build a physics-informed NN, which can reconstruct velocity and pressure fields using only the visualisation of the mass concentration in the corresponding flow (Raissi, Yazdani & Karniadakis Reference Raissi, Yazdani and Karniadakis2020).

In the present study, we aim to explore the feasibility of using a CNN to reconstruct a turbulent flow under a free surface based solely on surface observations. Gakhar, Koseff & Ouellette (Reference Gakhar, Koseff and Ouellette2020) developed a CNN to classify bottom roughness types from the surface elevation information, indicating that machine learning can be applied to inverse problems involving free-surface flows. In our work, the reconstruction method directly predicts three-dimensional velocity fields, which is a significant improvement over the aforementioned subsurface flow inference techniques that can only predict targeted features. Although similar three-dimensional reconstruction models have been proposed for turbulent channel flows, the available reconstruction methods for free-surface flows are limited. Moreover, compared with reconstructions from wall measurements, reconstructions from free surfaces have unique challenges associated with surface deformation and motions. In addition to demonstrating this CNN application, the present work also discusses the implications on the flow physics underlying the interactions between a free surface and turbulence.

The CNN model developed in the present work takes the surface elevation and surface velocities as inputs to estimate the three-dimensional velocity field underneath a free surface. The model embeds the fluid incompressibility constraint and is trained to minimise the induced reconstruction errors using the data obtained from the direct numerical simulation (DNS) of turbulent open-channel flows. For comparison, a reconstruction model based on the LSE method is also considered. Both qualitative and quantitative measures indicate that near the surface, both the CNN and LSE models can reconstruct the flow fields reasonably well; however, away from the surface, the CNN model achieves significantly better reconstruction performance than the LSE model. We further evaluate the models’ reconstruction performance at different spatial scales to provide a comprehensive picture of what flow structures can be predicted from a free surface and to what extent.

The present study also aims to gain insights into how the LSE and CNN models are related to the flow physics of subsurface turbulence. For the LSE model, its linear transformation kernel is examined, revealing characteristic coherent structures that are linearly correlated with the surface motions. For the CNN model, we compute saliency maps to assess the importance of each surface quantity to the reconstruction outcomes and find that some quantities are less important and can be omitted with only a negligible impact on the reconstruction accuracy. The relative importance levels of different surface variables are found to depend on the Froude number as a result of the influence of gravity on the free-surface dynamics. We also find that the salient regions of the CNN have correlations with dynamically important structures beneath the surface, which indicates that the CNN can identify their manifestations on the free surface and utilise them to reconstruct the flow field. We consider this outcome a first step towards interpreting the outstanding performance of the CNN model. We also apply the CNN model that is trained using data for one Froude number to flows with other Froude numbers to assess the generalisation ability of the CNN model with respect to free-surface flows with various surface deformation magnitudes.

The remainder of this paper is organised as follows. In § 2, we present the formulation of the free-surface flow reconstruction problem and the proposed methods. In § 3, we assess the performance of the reconstruction models. In § 4, we further discuss the obtained physical insights regarding these reconstruction models and their relations with the flow dynamics. In § 5, we summarise the results of the paper.

2. Methodology

The reconstruction of a subsurface flow field based on surface observations is equivalent to finding a mapping ![]() $\mathcal {F}$ from the surface quantities to a three-dimensional velocity field

$\mathcal {F}$ from the surface quantities to a three-dimensional velocity field

where ![]() $\boldsymbol {E}$ denotes a vector consisting of the considered surface quantities, and

$\boldsymbol {E}$ denotes a vector consisting of the considered surface quantities, and ![]() $\tilde {\boldsymbol {u}}=(\tilde {u}, \tilde {v}, \tilde {w})$ denotes the estimated velocity, with

$\tilde {\boldsymbol {u}}=(\tilde {u}, \tilde {v}, \tilde {w})$ denotes the estimated velocity, with ![]() $\tilde {u}$,

$\tilde {u}$, ![]() $\tilde {v}$ and

$\tilde {v}$ and ![]() $\tilde {w}$ being the components of

$\tilde {w}$ being the components of ![]() $\tilde {\boldsymbol {u}}$ in the

$\tilde {\boldsymbol {u}}$ in the ![]() $x$-,

$x$-, ![]() $y$- and

$y$- and ![]() $z$-directions, respectively. We denote the horizontal directions as the

$z$-directions, respectively. We denote the horizontal directions as the ![]() $x$- and

$x$- and ![]() $y$-directions and the vertical direction as the

$y$-directions and the vertical direction as the ![]() $z$-direction. In the present study, the elevation and velocity fluctuation observations at the surface are used as the inputs of the reconstruction process, i.e.

$z$-direction. In the present study, the elevation and velocity fluctuation observations at the surface are used as the inputs of the reconstruction process, i.e.

where the subscript ![]() ${({\cdot })}_s$ denotes the quantities evaluated at the free surface and

${({\cdot })}_s$ denotes the quantities evaluated at the free surface and ![]() $\eta (x,y,t)$ denotes the surface elevation. For reconstruction purposes,

$\eta (x,y,t)$ denotes the surface elevation. For reconstruction purposes, ![]() $\mathcal {F}$ should minimise the difference between the predicted velocity field

$\mathcal {F}$ should minimise the difference between the predicted velocity field ![]() $\tilde {\boldsymbol {u}}$ and the true velocity

$\tilde {\boldsymbol {u}}$ and the true velocity ![]() $\boldsymbol {u}$. We note that in the present study, we only consider the spatial mapping between

$\boldsymbol {u}$. We note that in the present study, we only consider the spatial mapping between ![]() $\tilde {\boldsymbol {u}}$ and

$\tilde {\boldsymbol {u}}$ and ![]() $\boldsymbol {E}$; i.e.

$\boldsymbol {E}$; i.e. ![]() $\tilde {\boldsymbol {u}}$ and

$\tilde {\boldsymbol {u}}$ and ![]() $\boldsymbol {E}$ are acquired at the same time instant.

$\boldsymbol {E}$ are acquired at the same time instant.

2.1. The CNN method

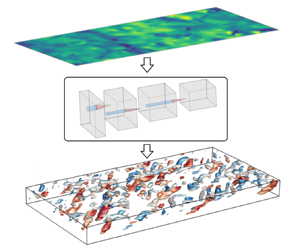

We utilise a CNN (Lecun et al. Reference Lecun, Bottou, Bengio and Haffner1998), which is widely used to process grid-like data to obtain their spatial features, to model the mapping ![]() $\mathcal {F}$ described above for flow reconstruction purposes. The overall architecture of the NN is sketched in figure 1(a). The model consists of two parts: an encoder that maps the surface quantities into a representation with reduced dimensions and a decoder that reconstructs the three-dimensional flow field from the reduced-dimension representation. The input fed into the encoder includes the surface quantities

$\mathcal {F}$ described above for flow reconstruction purposes. The overall architecture of the NN is sketched in figure 1(a). The model consists of two parts: an encoder that maps the surface quantities into a representation with reduced dimensions and a decoder that reconstructs the three-dimensional flow field from the reduced-dimension representation. The input fed into the encoder includes the surface quantities ![]() $\boldsymbol {E}$ sampled on a grid of size

$\boldsymbol {E}$ sampled on a grid of size ![]() $256 \times 128$. With

$256 \times 128$. With ![]() $\boldsymbol {E}$ consisting of four variables, the total dimensions of the input values are

$\boldsymbol {E}$ consisting of four variables, the total dimensions of the input values are ![]() $256 \times 128 \times 4$. The encoding process, which outputs a compressed representation of dimensionality

$256 \times 128 \times 4$. The encoding process, which outputs a compressed representation of dimensionality ![]() $32 \times 16\times 24$, extracts the most important surface features for the subsequent reconstructions, which may alleviate the problem of the model overfitting insignificant or non-generalisable features (Verleysen & François Reference Verleysen and François2005; Ying Reference Ying2019). The decoder reconstructs velocities with three components from the output of the encoder onto a three-dimensional grid of size

$32 \times 16\times 24$, extracts the most important surface features for the subsequent reconstructions, which may alleviate the problem of the model overfitting insignificant or non-generalisable features (Verleysen & François Reference Verleysen and François2005; Ying Reference Ying2019). The decoder reconstructs velocities with three components from the output of the encoder onto a three-dimensional grid of size ![]() $128\times 64 \times 96$. The total dimensions of the output data are

$128\times 64 \times 96$. The total dimensions of the output data are ![]() $128\times 64 \times 96 \times 3$. The input and output of the CNN are based on the same time instant, and each instant is considered independently; therefore, no temporal information is used for the reconstruction process.

$128\times 64 \times 96 \times 3$. The input and output of the CNN are based on the same time instant, and each instant is considered independently; therefore, no temporal information is used for the reconstruction process.

Figure 1. Building blocks of the network for flow reconstruction. (a) Overview of the reconstruction process. (b) Structure of the residual block in the encoder. Here, SE refers to the squeeze-and-excitation layer (Hu, Shen & Sun Reference Hu, Shen and Sun2018).

The detailed architectures of the CNN layers are listed in table 1. The basic building block of a CNN is the convolutional layer, which is defined as

\begin{equation} X^{out}_n = b_n + \sum_{k=1}^{K^{in}} W_n(k)*X^{in}_k,\quad n=1,\ldots,K^{out}, \end{equation}

\begin{equation} X^{out}_n = b_n + \sum_{k=1}^{K^{in}} W_n(k)*X^{in}_k,\quad n=1,\ldots,K^{out}, \end{equation}

where ![]() $X^{in}_k$ denotes the

$X^{in}_k$ denotes the ![]() $k$th channel of an input consisting of

$k$th channel of an input consisting of ![]() $K^{in}$ channels,

$K^{in}$ channels, ![]() $X^{out}_n$ denotes the

$X^{out}_n$ denotes the ![]() $n$th channel of the

$n$th channel of the ![]() $K^{out}$-channel output,

$K^{out}$-channel output, ![]() $b$ denotes the bias,

$b$ denotes the bias, ![]() $W$ is the convolution kernel and ‘

$W$ is the convolution kernel and ‘![]() $*$’ denotes the convolution operator. Strictly speaking, ‘

$*$’ denotes the convolution operator. Strictly speaking, ‘![]() $*$’ calculates the cross-correlation between the kernel and the input, but in the context of a CNN, we simply refer to it as convolution. We note that, in (2.3), the input may consist of multiple channels. For the first layer that takes the surface quantities as inputs, each channel corresponds to one surface variable. Each convolutional layer can apply multiple kernels to the input to extract different features and as a result, its output may also contain multiple channels. Each channel of the output is thus called a feature map.

$*$’ calculates the cross-correlation between the kernel and the input, but in the context of a CNN, we simply refer to it as convolution. We note that, in (2.3), the input may consist of multiple channels. For the first layer that takes the surface quantities as inputs, each channel corresponds to one surface variable. Each convolutional layer can apply multiple kernels to the input to extract different features and as a result, its output may also contain multiple channels. Each channel of the output is thus called a feature map.

Table 1. Detailed architecture of the CNN with its parameters, including the input size, kernel size and stride of each block (if applicable). The input size is written as ![]() $N_x\times N_y \times N_c$ for two-dimensional data for the encoder or

$N_x\times N_y \times N_c$ for two-dimensional data for the encoder or ![]() $N_x \times N_y \times N_z \times N_c$ for three-dimensional data for the decoder, where

$N_x \times N_y \times N_z \times N_c$ for three-dimensional data for the decoder, where ![]() $N_x$,

$N_x$, ![]() $N_y$ and

$N_y$ and ![]() $N_z$ denote the grid dimensions in the

$N_z$ denote the grid dimensions in the ![]() $x$-,

$x$-, ![]() $y$- and

$y$- and ![]() $z$-directions, respectively, and

$z$-directions, respectively, and ![]() $N_c$ denotes the number of channels. Note that the output of each block is the input of the next block. The output size of the last block is

$N_c$ denotes the number of channels. Note that the output of each block is the input of the next block. The output size of the last block is ![]() $128\times 64 \times 96 \times 3$.

$128\times 64 \times 96 \times 3$.

These feature maps are often fed into a nonlinear activation function, which enables the CNN to learn complex and nonlinear relationships. We have compared the performance of several types of nonlinear activation functions, including the rectified linear unit function, the sigmoid function and the hyperbolic tangent function. The hyperbolic tangent function, which performs better, is selected in the present work. We note that Murata et al. (Reference Murata, Fukami and Fukagata2020) also found the hyperbolic tangent function performs well for the mode decomposition of flow fields.

In the encoder, we use strided convolution layers and blur pooling layers to reduce the dimensionality of feature maps. In a strided convolution, the kernel window slides over the input by more than one pixel (grid point) at a time, and as a result of skipping pixels, the input is downsampled. A blur pooling layer can be considered a special strided convolution operation with fixed weights. The blur kernel we use here is the outer product of ![]() $[0.25,0.5,0.25]$ (Zhang Reference Zhang2019), which applies low-pass filtering and downsampling to the input at the same time. The blur pooling can improve the shift invariance of the CNN (Zhang Reference Zhang2019), which is discussed further below. In the encoder part, we also utilise a design called residual blocks, which is empirically known to ease the training processes of NNs (He et al. Reference He, Zhang, Ren and Sun2016). The structure of a residual block is illustrated in figure 1(b). The output of the block is the sum of the original input and the output of several processing layers. Following Vahdat & Kautz (Reference Vahdat and Kautz2020), the residual block in this work consists of two rounds of activation–convolution layers, followed by a squeeze-and-excitation layer that enables the network to learn crucial features more effectively (Hu et al. Reference Hu, Shen and Sun2018). In our experiments, we find that the use of residual blocks in the encoder part can reduce the number of epochs needed to train the network. The residual blocks can also be implemented for the decoder as in Vahdat & Kautz (Reference Vahdat and Kautz2020),but we do not observe any significant differences in the training or the performance of the network.

$[0.25,0.5,0.25]$ (Zhang Reference Zhang2019), which applies low-pass filtering and downsampling to the input at the same time. The blur pooling can improve the shift invariance of the CNN (Zhang Reference Zhang2019), which is discussed further below. In the encoder part, we also utilise a design called residual blocks, which is empirically known to ease the training processes of NNs (He et al. Reference He, Zhang, Ren and Sun2016). The structure of a residual block is illustrated in figure 1(b). The output of the block is the sum of the original input and the output of several processing layers. Following Vahdat & Kautz (Reference Vahdat and Kautz2020), the residual block in this work consists of two rounds of activation–convolution layers, followed by a squeeze-and-excitation layer that enables the network to learn crucial features more effectively (Hu et al. Reference Hu, Shen and Sun2018). In our experiments, we find that the use of residual blocks in the encoder part can reduce the number of epochs needed to train the network. The residual blocks can also be implemented for the decoder as in Vahdat & Kautz (Reference Vahdat and Kautz2020),but we do not observe any significant differences in the training or the performance of the network.

The reconstruction process of the decoder uses a transposed convolution operation, which is essentially the adjoint operation of convolution. To interpret the relationships between a convolution and a transposed convolution, we first note that a convolution operation can be computed as a matrix-vector product, with the matrix defined by the kernel weights and the vector being the flattened input. By transposing the matrix, one obtains the adjoint operation, i.e. the transposed convolution, associated with the initial convolution operation. It should also be noted that the dimensions of the input and output of a transposed convolution are the reverse of those of the corresponding convolution. In the decoder, strided transposed convolutions have the opposite effect relative to that of the strided convolutions in the encoder, i.e. they are used to upsample the feature maps.

To ensure that the CNN can better represent physical correlations between the surface and subsurface flows, the CNN architecture is designed to maintain good translation invariance, i.e. the ability to produce equivalent reconstructions when the surface input is shifted in space. Although the convolution operations are shift invariant by themselves, the downsampling by strided convolutions can produce different results when inputs are shifted (Azulay & Weiss Reference Azulay and Weiss2019; Zhang Reference Zhang2019). From the perspective of the Fourier analysis, downsampling may introduce aliasing errors at high wavenumber modes of the feature maps, which may be amplified by subsequent layers, and as a result, break the translation invariance of the network. Therefore, following Zhang (Reference Zhang2019), we employ the blur pooling layers, which perform antialiasing while downsampling to reduce aliasing errors. The blur pooling layers act as the first two downsampling layers in the encoder (see table 1). As reported in Appendix A, the present network can produce more consistent reconstructions for shifted inputs than a network that only uses strided convolutions for downsampling. The blur pooling is not applied to the last downsampling operation in the encoder because we find that it barely improves the translation invariance but decreases the reconstruction accuracy as the blurring can result in loss of information (Zhang Reference Zhang2019). We also apply periodic paddings in the horizontal directions to reduce the translation-induced errors owing to the edge effect. Furthermore, we apply random translations in the horizontal directions to the training data, known as data augmentation, which is detailed in § 2.3.2, to help improve the translation invariance (Kauderer-Abrams Reference Kauderer-Abrams2017). We note that complete translation invariance is difficult to achieve because nonlinear activation functions can also introduce aliasing effects (Azulay & Weiss Reference Azulay and Weiss2019). Nevertheless, our tests indicate that the reconstructions are consistent for shifted inputs and therefore the translation-induced errors should be small.

The choice of model parameters, such as the kernel sizes and strides, is often a balance between performance and computational cost. Most existing CNNs use small kernels, with sizes between ![]() $3$ and

$3$ and ![]() $7$ (see e.g. Simonyan, Vedaldi & Zisserman Reference Simonyan, Vedaldi and Zisserman2014; He et al. Reference He, Zhang, Ren and Sun2016; Lee & You Reference Lee and You2019; Tan & Le Reference Tan and Le2019), for computational efficiency. In the present work, we use kernels of sizes varying from

$7$ (see e.g. Simonyan, Vedaldi & Zisserman Reference Simonyan, Vedaldi and Zisserman2014; He et al. Reference He, Zhang, Ren and Sun2016; Lee & You Reference Lee and You2019; Tan & Le Reference Tan and Le2019), for computational efficiency. In the present work, we use kernels of sizes varying from ![]() $3$ and

$3$ and ![]() $5$. For transposed convolutions, the kernel size is chosen to be divisible by the stride to reduce the checkerboard artefacts (Odena, Dumoulin & Olah Reference Odena, Dumoulin and Olah2016). For both strided convolutions and blur pooling layers, we use a stride of

$5$. For transposed convolutions, the kernel size is chosen to be divisible by the stride to reduce the checkerboard artefacts (Odena, Dumoulin & Olah Reference Odena, Dumoulin and Olah2016). For both strided convolutions and blur pooling layers, we use a stride of ![]() $2$, i.e. a downsampling ratio of 2, which is a common choice when processing images (Tan & Le Reference Tan and Le2019) and fluid fields (Lee & You Reference Lee and You2019; Park & Choi Reference Park and Choi2020). Similarly, in the decoder, the spatial dimensions are in general expanded by a ratio of

$2$, i.e. a downsampling ratio of 2, which is a common choice when processing images (Tan & Le Reference Tan and Le2019) and fluid fields (Lee & You Reference Lee and You2019; Park & Choi Reference Park and Choi2020). Similarly, in the decoder, the spatial dimensions are in general expanded by a ratio of ![]() $2$, except for one layer where the features maps are expanded by a ratio of

$2$, except for one layer where the features maps are expanded by a ratio of ![]() $3$ in the vertical direction owing to the fact that the final dimension

$3$ in the vertical direction owing to the fact that the final dimension ![]() $96$ has a factor of

$96$ has a factor of ![]() $3$. Some transposed convolution layers only expand one spatial dimension to accommodate the different expansion ratios needed in different dimensions to obtain the output grid. Although there are many combinations for how the dimension expansions are ordered in the decoder, we find that the network performance is not particularly sensitive to the ordering.

$3$. Some transposed convolution layers only expand one spatial dimension to accommodate the different expansion ratios needed in different dimensions to obtain the output grid. Although there are many combinations for how the dimension expansions are ordered in the decoder, we find that the network performance is not particularly sensitive to the ordering.

The performance of the CNN is also affected by the number of channels (feature maps) in each layer. A network with more layers can in theory provide a greater capacity to describe more types of features. As presented in § 1 of the supplementary material available at https://doi.org/10.1017/jfm.2023.154, a network with ![]() $50\,\%$ more channels has almost the same performance as the one presented in table 1, indicating that the present network has enough number of feature maps for expressing the surface–subsurface mapping. A special layer worth more consideration is the output of the encoder or the input of the decoder, which is the bottleneck of the entire network and encodes the latent features that are useful for reconstructions. The effect of the dimension of this bottleneck layer on the reconstruction performance is studied by varying the number of channels in this layer. It is found that the present size with

$50\,\%$ more channels has almost the same performance as the one presented in table 1, indicating that the present network has enough number of feature maps for expressing the surface–subsurface mapping. A special layer worth more consideration is the output of the encoder or the input of the decoder, which is the bottleneck of the entire network and encodes the latent features that are useful for reconstructions. The effect of the dimension of this bottleneck layer on the reconstruction performance is studied by varying the number of channels in this layer. It is found that the present size with ![]() $24$ layers can retain most surface features necessary for the network to achieve an optimal performance. More detailed comparisons are provided in § 1 of the supplementary material. We have also considered some variants of the network architecture to investigate whether a deeper network can improve the reconstruction performance. These modified networks show no significant performance differences from the current model, indicating that the present network already has enough expressivity to describe the mapping for subsurface flow reconstructions. Detailed comparisons are reported in § 2 of the supplementary material.

$24$ layers can retain most surface features necessary for the network to achieve an optimal performance. More detailed comparisons are provided in § 1 of the supplementary material. We have also considered some variants of the network architecture to investigate whether a deeper network can improve the reconstruction performance. These modified networks show no significant performance differences from the current model, indicating that the present network already has enough expressivity to describe the mapping for subsurface flow reconstructions. Detailed comparisons are reported in § 2 of the supplementary material.

The entire network is trained to minimise the following loss function:

\begin{equation} J = \frac{1}{3 L_z}\sum_{i=1}^3 {\int_z \frac{\displaystyle\iint_{x,y}{\left| \tilde{u}_i - u_i \right|}^2 \mathrm{d}\kern0.06em x\,\mathrm{d}y}{\displaystyle\iint_{x,y} u_i^2 \,\mathrm{d}\kern0.06em x,\mathrm{d}y} \mathrm{d}z}, \end{equation}

\begin{equation} J = \frac{1}{3 L_z}\sum_{i=1}^3 {\int_z \frac{\displaystyle\iint_{x,y}{\left| \tilde{u}_i - u_i \right|}^2 \mathrm{d}\kern0.06em x\,\mathrm{d}y}{\displaystyle\iint_{x,y} u_i^2 \,\mathrm{d}\kern0.06em x,\mathrm{d}y} \mathrm{d}z}, \end{equation}which first computes the mean squared errors normalised by the plane-averaged Reynolds normal stresses for each component and for each horizontal planes, and then averages these relative mean squared errors. This loss function is defined to consider the inhomogeneity of the open-channel flow in the vertical direction and the anisotropy among the three velocity components. Compared with the mean squared error of the velocity fluctuations (equivalent to the error of the total turbulent kinetic energy), the above loss function gives higher weights to the less energetic directions and less energetic flow regions.

The incompressibility constraint is embedded into the network to produce a reconstruction that satisfies mass conservation. At the end of the decoder, the reconstructed velocity is determined by

where ![]() $\tilde {\boldsymbol {A}}$ is a vector potential estimated by the network. The solenoidality of

$\tilde {\boldsymbol {A}}$ is a vector potential estimated by the network. The solenoidality of ![]() $\tilde {\boldsymbol {u}}$ is automatically satisfied following the vector identity

$\tilde {\boldsymbol {u}}$ is automatically satisfied following the vector identity ![]() $\boldsymbol {\nabla } \boldsymbol {\cdot } (\boldsymbol {\nabla }\times \tilde {\boldsymbol {A}}) = 0$. It should be noted that the vector potential of a velocity field is not unique, which can be seen from

$\boldsymbol {\nabla } \boldsymbol {\cdot } (\boldsymbol {\nabla }\times \tilde {\boldsymbol {A}}) = 0$. It should be noted that the vector potential of a velocity field is not unique, which can be seen from ![]() $\boldsymbol {\nabla }\times \tilde {\boldsymbol {A}} = \boldsymbol {\nabla }\times (\tilde {\boldsymbol {A}} + \boldsymbol {\nabla }\psi )$, where

$\boldsymbol {\nabla }\times \tilde {\boldsymbol {A}} = \boldsymbol {\nabla }\times (\tilde {\boldsymbol {A}} + \boldsymbol {\nabla }\psi )$, where ![]() $\psi$ is an arbitrary scalar function. Considering that

$\psi$ is an arbitrary scalar function. Considering that ![]() $\tilde {\boldsymbol {A}}$ is just an intermediate quantity and it is the velocity field we are interested in, we do not add any constraints on

$\tilde {\boldsymbol {A}}$ is just an intermediate quantity and it is the velocity field we are interested in, we do not add any constraints on ![]() $\boldsymbol {A}$ to fix the choice of

$\boldsymbol {A}$ to fix the choice of ![]() $\psi$. In other words, we let the network architecture and the optimisation process freely estimate the vector potential. We find that predicting the velocity field through its vector potential and predicting the velocity directly yields no discernible differences in the reconstruction accuracy (see detailed results in § 4 of the supplementary material), indicating that the imposed incompressibility constraint does not negatively impact the CNN's reconstruction capability.

$\psi$. In other words, we let the network architecture and the optimisation process freely estimate the vector potential. We find that predicting the velocity field through its vector potential and predicting the velocity directly yields no discernible differences in the reconstruction accuracy (see detailed results in § 4 of the supplementary material), indicating that the imposed incompressibility constraint does not negatively impact the CNN's reconstruction capability.

The spatial derivatives in (2.5) are computed using the second-order central difference scheme, which can be easily expressed as a series of convolution operations with fixed kernel coefficients. We have also tested the Fourier spectral method to calculate the derivatives in the ![]() $x$- and

$x$- and ![]() $y$-directions, which barely affect the reconstruction accuracy but significantly increases the training time. This indicates that a higher-order scheme does not provide additional reconstruction accuracy, which we believe is because most reconstructable structures are low-wavenumber structures and the second-order central scheme is adequately accurate for resolving these structures.

$y$-directions, which barely affect the reconstruction accuracy but significantly increases the training time. This indicates that a higher-order scheme does not provide additional reconstruction accuracy, which we believe is because most reconstructable structures are low-wavenumber structures and the second-order central scheme is adequately accurate for resolving these structures.

The total number of trainable parameters in the designed network is 279 551. The network is trained using the adaptive moment estimation with decoupled weight decay optimiser (Loshchilov & Hutter Reference Loshchilov and Hutter2019) with early stopping to avoid overfitting. The training process is performed on an NVIDIA V100 GPU.

2.2. The LSE method

For comparisons with the CNN model, we also consider a classic approach based on the LSE method (Adrian & Moin Reference Adrian and Moin1988), which is commonly used to extract turbulent coherent structures from turbulent flows (Christensen & Adrian Reference Christensen and Adrian2001; Xuan, Deng & Shen Reference Xuan, Deng and Shen2019). It has also been applied to reconstruct the velocity on an off-wall plane in a turbulent channel flow from wall measurements (Suzuki & Hasegawa Reference Suzuki and Hasegawa2017; Encinar & Jiménez Reference Encinar and Jiménez2019; Guastoni et al. Reference Guastoni, Encinar, Schlatter, Azizpour and Vinuesa2020). Wang et al. (Reference Wang, Gao, Wang, Pan and Wang2021) demonstrated that this method can be used to transform a three-dimensional vorticity field into a velocity field.

The LSE method, as the name suggests, estimates a linear mapping from the observations of some known random variables to unknown random variables. In the present problem, the known random variables are the surface quantities ![]() $\boldsymbol {E}$ and the unknowns are the estimated

$\boldsymbol {E}$ and the unknowns are the estimated ![]() $\tilde {\boldsymbol {u}}$. The reconstruction of

$\tilde {\boldsymbol {u}}$. The reconstruction of ![]() $\tilde {\boldsymbol {u}}$ by a linear relationship can be expressed as

$\tilde {\boldsymbol {u}}$ by a linear relationship can be expressed as

where ![]() $\mathcal {L}_{ij}$ (written as

$\mathcal {L}_{ij}$ (written as ![]() $\mathcal {L}$ below for conciseness) are linear operators. In other words, (2.6) treats the mapping

$\mathcal {L}$ below for conciseness) are linear operators. In other words, (2.6) treats the mapping ![]() $\mathcal {F}$ in (2.1) as a linear function of

$\mathcal {F}$ in (2.1) as a linear function of ![]() $\boldsymbol {E}$. Note that two distinct coordinates are contained in the above equation:

$\boldsymbol {E}$. Note that two distinct coordinates are contained in the above equation: ![]() $\boldsymbol {x}$ and

$\boldsymbol {x}$ and ![]() $\boldsymbol {x}_s$. The coordinate

$\boldsymbol {x}_s$. The coordinate ![]() $\boldsymbol {x}$ denotes the position to be reconstructed and

$\boldsymbol {x}$ denotes the position to be reconstructed and ![]() $\mathcal {L}$ is a function of

$\mathcal {L}$ is a function of ![]() $\boldsymbol {x}$ because the mapping between

$\boldsymbol {x}$ because the mapping between ![]() $\boldsymbol {E}$ and

$\boldsymbol {E}$ and ![]() $\tilde {\boldsymbol {u}}$ should depend on the location to be predicted. The coordinate

$\tilde {\boldsymbol {u}}$ should depend on the location to be predicted. The coordinate ![]() $\boldsymbol {x}_s$ denotes the horizontal coordinates, i.e.

$\boldsymbol {x}_s$ denotes the horizontal coordinates, i.e. ![]() $\boldsymbol {x}_s=(x,y)$, which signifies that the observed surface quantity

$\boldsymbol {x}_s=(x,y)$, which signifies that the observed surface quantity ![]() $\boldsymbol {E}(\boldsymbol {x}_s)$ is a planar field. Here, we denote the surface plane as

$\boldsymbol {E}(\boldsymbol {x}_s)$ is a planar field. Here, we denote the surface plane as ![]() $S$, i.e.

$S$, i.e. ![]() $\boldsymbol {x}_s \in S$. For each

$\boldsymbol {x}_s \in S$. For each ![]() $\boldsymbol {x}$,

$\boldsymbol {x}$, ![]() $\mathcal {L}$ is a field operator on

$\mathcal {L}$ is a field operator on ![]() $S$ that transforms

$S$ that transforms ![]() $\boldsymbol {E}(\boldsymbol {x}_s)$ into the velocity

$\boldsymbol {E}(\boldsymbol {x}_s)$ into the velocity ![]() $\boldsymbol {\tilde {u}}(x)$. The LSE method states that

$\boldsymbol {\tilde {u}}(x)$. The LSE method states that ![]() $\mathcal {L}$ can be determined by

$\mathcal {L}$ can be determined by

where ![]() $\langle {\cdot }\rangle$ is defined as averaging over all the ensembles (instants) in the dataset. The above equation is obtained by minimising the mean squared errors between

$\langle {\cdot }\rangle$ is defined as averaging over all the ensembles (instants) in the dataset. The above equation is obtained by minimising the mean squared errors between ![]() $\tilde {u}_i(\boldsymbol {x})$ and

$\tilde {u}_i(\boldsymbol {x})$ and ![]() ${u}_i(\boldsymbol {x})$ over all the ensembles (instants) in the dataset; i.e. the operator

${u}_i(\boldsymbol {x})$ over all the ensembles (instants) in the dataset; i.e. the operator ![]() $\mathcal {L}$ defined above is the optimal estimation of the mapping

$\mathcal {L}$ defined above is the optimal estimation of the mapping ![]() $\mathcal {F}$ in the least-squares sense. Note that, on the left-hand side of (2.7),

$\mathcal {F}$ in the least-squares sense. Note that, on the left-hand side of (2.7), ![]() $\langle {E_m(\boldsymbol {r}') E_j(\boldsymbol {x}_s)}\rangle$ should be considered a planar field function of

$\langle {E_m(\boldsymbol {r}') E_j(\boldsymbol {x}_s)}\rangle$ should be considered a planar field function of ![]() $\boldsymbol {x}_s$ when multiplied by the operator

$\boldsymbol {x}_s$ when multiplied by the operator ![]() $\mathcal {L}$. By varying

$\mathcal {L}$. By varying ![]() $\boldsymbol {r}'$ and

$\boldsymbol {r}'$ and ![]() $m$, one can obtain a linear system to solve for

$m$, one can obtain a linear system to solve for ![]() $\mathcal {L}$. Similar to the CNN model in § 2.1, the LSE model only describes the spatial correlations between the subsurface and surface quantities.

$\mathcal {L}$. Similar to the CNN model in § 2.1, the LSE model only describes the spatial correlations between the subsurface and surface quantities.

As pointed out by Wang et al. (Reference Wang, Gao, Wang, Pan and Wang2021), the operator ![]() $\mathcal {L}$ in (2.6) can be alternatively written in an integral form as

$\mathcal {L}$ in (2.6) can be alternatively written in an integral form as

Utilising the horizontal homogeneity of the open-channel turbulent flow, it can be easily shown that ![]() $l_{ij}$ is a function that is dependent on

$l_{ij}$ is a function that is dependent on ![]() $\boldsymbol {x}-\boldsymbol {x}_s$ and, therefore, (2.8) can be written as

$\boldsymbol {x}-\boldsymbol {x}_s$ and, therefore, (2.8) can be written as

Therefore, the LSE of the subsurface velocity field can be obtained by a convolution of the kernel ![]() $l_{ij}$ and the surface quantities

$l_{ij}$ and the surface quantities ![]() $E_j$ over the horizontal plane

$E_j$ over the horizontal plane ![]() $S$. As a result, the vertical coordinate

$S$. As a result, the vertical coordinate ![]() $z$ is decoupled, and (2.9) can be evaluated independently for each

$z$ is decoupled, and (2.9) can be evaluated independently for each ![]() $x$–

$x$–![]() $y$ plane.

$y$ plane.

For efficiently computing ![]() $l_{ij}$, we apply the convolution theorem to (2.9) and transform it into pointwise multiplications in the Fourier wavenumber space. Similarly, (2.7) can also be expressed in the convolutional form. This yields

$l_{ij}$, we apply the convolution theorem to (2.9) and transform it into pointwise multiplications in the Fourier wavenumber space. Similarly, (2.7) can also be expressed in the convolutional form. This yields

where ![]() $\hat {f}$ denotes the Fourier coefficients of a quantity

$\hat {f}$ denotes the Fourier coefficients of a quantity ![]() $f$ and

$f$ and ![]() $\hat {f}^*$ denotes the complex conjugate of

$\hat {f}^*$ denotes the complex conjugate of ![]() $\hat {f}$. The above equations can be solved independently for each wavenumber in the horizontal directions, each vertical location and each velocity component.

$\hat {f}$. The above equations can be solved independently for each wavenumber in the horizontal directions, each vertical location and each velocity component.

2.3. Dataset descriptions

The reconstruction models proposed above are trained and tested with datasets obtained from the DNS of a turbulent open-channel flow. Figure 2(a) shows the configuration of the turbulent open-channel flow, which is doubly periodic in the streamwise (![]() $x$) and spanwise (

$x$) and spanwise (![]() $y$) directions. In the vertical (

$y$) directions. In the vertical (![]() $z$) direction, the bottom wall satisfies the no-slip condition, and the top surface satisfies the free-surface kinematic and dynamic boundary conditions. The utilised simulation parameters are listed in table 2. The domain size is

$z$) direction, the bottom wall satisfies the no-slip condition, and the top surface satisfies the free-surface kinematic and dynamic boundary conditions. The utilised simulation parameters are listed in table 2. The domain size is ![]() $L_x\times L_y \times L_z = 4{\rm \pi} h \times 2{\rm \pi} h \times h$, with

$L_x\times L_y \times L_z = 4{\rm \pi} h \times 2{\rm \pi} h \times h$, with ![]() $L_z$ being the mean depth of the channel. The flow is driven by a constant mean pressure gradient

$L_z$ being the mean depth of the channel. The flow is driven by a constant mean pressure gradient ![]() $G_p$ in the streamwise direction. The friction Reynolds number is defined as

$G_p$ in the streamwise direction. The friction Reynolds number is defined as ![]() ${Re}_\tau =u_\tau h/\nu =180$, where

${Re}_\tau =u_\tau h/\nu =180$, where ![]() $u_\tau =\sqrt {G_p/\rho }$ is the friction velocity,

$u_\tau =\sqrt {G_p/\rho }$ is the friction velocity, ![]() $h$ is the mean depth of the channel,

$h$ is the mean depth of the channel, ![]() $\rho$ is the density and

$\rho$ is the density and ![]() $\nu$ is the kinematic viscosity. Flows with different Froude numbers, which are defined based on the friction velocity as

$\nu$ is the kinematic viscosity. Flows with different Froude numbers, which are defined based on the friction velocity as ![]() $Fr_\tau = u_\tau /\sqrt {gh}$ with g being the gravitational acceleration, are considered. The domain size

$Fr_\tau = u_\tau /\sqrt {gh}$ with g being the gravitational acceleration, are considered. The domain size ![]() $4{\rm \pi} h \times 2{\rm \pi} h \times h$ is sufficiently large to obtain domain-independent one-point turbulence statistics in open-channel flows at

$4{\rm \pi} h \times 2{\rm \pi} h \times h$ is sufficiently large to obtain domain-independent one-point turbulence statistics in open-channel flows at ![]() ${Re}_\tau =180$ (Wang, Park & Richter Reference Wang, Park and Richter2020). The autocorrelations studied by Pinelli et al. (Reference Pinelli, Herlina, Wissink and Uhlmann2022) indicate that a spanwise domain size of

${Re}_\tau =180$ (Wang, Park & Richter Reference Wang, Park and Richter2020). The autocorrelations studied by Pinelli et al. (Reference Pinelli, Herlina, Wissink and Uhlmann2022) indicate that a spanwise domain size of ![]() $6h$ is sufficiently large for the spanwise scales to be decorrelated; in the streamwise direction, a much larger domain length,

$6h$ is sufficiently large for the spanwise scales to be decorrelated; in the streamwise direction, a much larger domain length, ![]() $L_x>24h$, is needed for complete decorrelation. Owing to the higher computational cost of the deformable free-surface simulations compared with the rigid-lid simulations, here we use a smaller domain length

$L_x>24h$, is needed for complete decorrelation. Owing to the higher computational cost of the deformable free-surface simulations compared with the rigid-lid simulations, here we use a smaller domain length ![]() $L_x=4{\rm \pi} h$. The normalised autocorrelation of the streamwise velocity fluctuations,

$L_x=4{\rm \pi} h$. The normalised autocorrelation of the streamwise velocity fluctuations, ![]() $R_u(x', z) = \langle u(x') u(x+ x') \rangle /\langle u^2\rangle$, where

$R_u(x', z) = \langle u(x') u(x+ x') \rangle /\langle u^2\rangle$, where ![]() $\langle {\cdot }\rangle$ denotes the plane average, is smaller than

$\langle {\cdot }\rangle$ denotes the plane average, is smaller than ![]() $0.065$ at the largest separation distance

$0.065$ at the largest separation distance ![]() $L_x/2$ across the depth of the channel, which is small enough to allow most streamwise structures to be captured, consistent with the numerical result of Pinelli et al. (Reference Pinelli, Herlina, Wissink and Uhlmann2022).

$L_x/2$ across the depth of the channel, which is small enough to allow most streamwise structures to be captured, consistent with the numerical result of Pinelli et al. (Reference Pinelli, Herlina, Wissink and Uhlmann2022).

Figure 2. (a) Set-up of the turbulent open-channel flow. The contours illustrate the instantaneous streamwise velocity ![]() $u$ of one snapshot from the simulation. (b) Sketch of the boundary-fitted curvilinear coordinate system. For illustration purposes, the domain is stretched, and the grid resolution is lowered in the plots.

$u$ of one snapshot from the simulation. (b) Sketch of the boundary-fitted curvilinear coordinate system. For illustration purposes, the domain is stretched, and the grid resolution is lowered in the plots.

Table 2. The simulation parameters of the turbulent open-channel flows. The superscript ![]() ${}^+$ denotes the quantities normalised by wall units

${}^+$ denotes the quantities normalised by wall units ![]() $\nu /u_\tau$.

$\nu /u_\tau$.

2.3.1. Simulation method and parameters

To simulate free-surface motions, we discretise the governing equations on a time-dependent boundary-fitted curvilinear coordinate system, ![]() $\boldsymbol {\xi } = (\xi _1, \xi _2, \xi _3)$, which is defined as (Xuan & Shen Reference Xuan and Shen2019)

$\boldsymbol {\xi } = (\xi _1, \xi _2, \xi _3)$, which is defined as (Xuan & Shen Reference Xuan and Shen2019)

The above transformation maps the vertical coordinate ![]() $z\in [0, \eta +h]$ to the computational coordinate

$z\in [0, \eta +h]$ to the computational coordinate ![]() $\xi _3\in [0, h]$. The mass and momentum equations are written using the curvilinear coordinates as

$\xi _3\in [0, h]$. The mass and momentum equations are written using the curvilinear coordinates as

where ![]() $J=\det ( {\partial } \xi ^i / {\partial } x_j )$ is the Jacobian determinant of the transformation;

$J=\det ( {\partial } \xi ^i / {\partial } x_j )$ is the Jacobian determinant of the transformation; ![]() $U^j=u_k ( {\partial } \xi ^j / {\partial } x_k)$ is the contravariant velocity;

$U^j=u_k ( {\partial } \xi ^j / {\partial } x_k)$ is the contravariant velocity; ![]() $U^j_g= \dot {x}_k({ {\partial } \xi ^j}/{ {\partial } x_k})$ is the contravariant velocity of the grid, where

$U^j_g= \dot {x}_k({ {\partial } \xi ^j}/{ {\partial } x_k})$ is the contravariant velocity of the grid, where ![]() $\dot {x}_k(\xi _j)$ is the moving velocity of

$\dot {x}_k(\xi _j)$ is the moving velocity of ![]() $\xi _j$ in the Cartesian coordinates;

$\xi _j$ in the Cartesian coordinates; ![]() $g^{ij}=( {\partial } \xi ^i / {\partial } x_k)( {\partial } \xi ^j / {\partial } x_k)$ is the metric tensor of the transformation. The above curvilinear coordinate system, which is illustrated in figure 2(b), enables the discretised system to more accurately resolve the surface boundary layers.

$g^{ij}=( {\partial } \xi ^i / {\partial } x_k)( {\partial } \xi ^j / {\partial } x_k)$ is the metric tensor of the transformation. The above curvilinear coordinate system, which is illustrated in figure 2(b), enables the discretised system to more accurately resolve the surface boundary layers.

The solver utilises a Fourier pseudo-spectral method for discretisations in the horizontal directions, ![]() $\xi _1$ and

$\xi _1$ and ![]() $\xi _2$, and a second-order finite-difference scheme in the vertical direction

$\xi _2$, and a second-order finite-difference scheme in the vertical direction ![]() $\xi _3$. The temporal evolution of (2.13) is coupled with the evolution of the free surface

$\xi _3$. The temporal evolution of (2.13) is coupled with the evolution of the free surface ![]() $\eta (x, y, t)$; the latter is determined by the free-surface kinematic boundary condition, written as

$\eta (x, y, t)$; the latter is determined by the free-surface kinematic boundary condition, written as

The above equation is integrated using a two-stage Runge–Kutta scheme. In each stage after the ![]() $\eta$ is updated, (2.13) is solved to obtain the velocity in the domain bounded by the updated water surface. This simulation method has been validated with various canonical wave flows (Yang & Shen Reference Yang and Shen2011; Xuan & Shen Reference Xuan and Shen2019) and has been applied to several studies on turbulent free-surface flows, such as research on the interaction of isotropic turbulence with a free surface (Guo & Shen Reference Guo and Shen2010) and turbulence–wave interaction (Xuan, Deng & Shen Reference Xuan, Deng and Shen2020; Xuan & Shen Reference Xuan and Shen2022). More details of the numerical schemes and validations are described in Xuan & Shen (Reference Xuan and Shen2019).

$\eta$ is updated, (2.13) is solved to obtain the velocity in the domain bounded by the updated water surface. This simulation method has been validated with various canonical wave flows (Yang & Shen Reference Yang and Shen2011; Xuan & Shen Reference Xuan and Shen2019) and has been applied to several studies on turbulent free-surface flows, such as research on the interaction of isotropic turbulence with a free surface (Guo & Shen Reference Guo and Shen2010) and turbulence–wave interaction (Xuan, Deng & Shen Reference Xuan, Deng and Shen2020; Xuan & Shen Reference Xuan and Shen2022). More details of the numerical schemes and validations are described in Xuan & Shen (Reference Xuan and Shen2019).

The horizontal grid, following the Fourier collocation points, is uniform. Near the bottom of the channel and the free surface, the grid is clustered with a minimum vertical spacing ![]() $\varDelta ^+_{min}=0.068$, where the superscript

$\varDelta ^+_{min}=0.068$, where the superscript ![]() ${}^+$ denotes the length normalised by wall units

${}^+$ denotes the length normalised by wall units ![]() $\nu /u_\tau$; the maximum vertical spacing at the centre of the channel is

$\nu /u_\tau$; the maximum vertical spacing at the centre of the channel is ![]() $\Delta z^{+}_{max}=2.7$. The above grid resolutions, as listed in the last four columns in table 2, are comparable to those used in the DNS of turbulent rigid-lid open-channel flows (Yao, Chen & Hussain Reference Yao, Chen and Hussain2022) and turbulent free-surface open-channel flows (Yoshimura & Fujita Reference Yoshimura and Fujita2020). Figure 3 compares the free-surface fluctuation magnitudes

$\Delta z^{+}_{max}=2.7$. The above grid resolutions, as listed in the last four columns in table 2, are comparable to those used in the DNS of turbulent rigid-lid open-channel flows (Yao, Chen & Hussain Reference Yao, Chen and Hussain2022) and turbulent free-surface open-channel flows (Yoshimura & Fujita Reference Yoshimura and Fujita2020). Figure 3 compares the free-surface fluctuation magnitudes ![]() $\eta_{rms}$, where rms denotes the root-mean-sqaure value, in the present simulations with the literature (Yokojima & Nakayama Reference Yokojima and Nakayama2002; Yoshimura & Fujita Reference Yoshimura and Fujita2020). We find that the present results agree well with their data. The profiles of the mean velocity and Reynolds stresses are also in agreement with the results reported by Yoshimura & Fujita (Reference Yoshimura and Fujita2020) (not plotted).

$\eta_{rms}$, where rms denotes the root-mean-sqaure value, in the present simulations with the literature (Yokojima & Nakayama Reference Yokojima and Nakayama2002; Yoshimura & Fujita Reference Yoshimura and Fujita2020). We find that the present results agree well with their data. The profiles of the mean velocity and Reynolds stresses are also in agreement with the results reported by Yoshimura & Fujita (Reference Yoshimura and Fujita2020) (not plotted).

Figure 3. Comparison of the root-mean-square (rms) free-surface fluctuations ![]() $\eta_{rms}$ with the literature: the present DNS results (

$\eta_{rms}$ with the literature: the present DNS results (![]() $\blacksquare$) and the DNS results of Yoshimura & Fujita (Reference Yoshimura and Fujita2020) (

$\blacksquare$) and the DNS results of Yoshimura & Fujita (Reference Yoshimura and Fujita2020) (![]() $\bullet$) and Yokojima & Nakayama (Reference Yokojima and Nakayama2002) (

$\bullet$) and Yokojima & Nakayama (Reference Yokojima and Nakayama2002) (![]() $\blacktriangle$) are compared. The dashed line (– – –) is an approximated parameterisation of

$\blacktriangle$) are compared. The dashed line (– – –) is an approximated parameterisation of ![]() $\eta _{rms}/h$ proposed by Yoshimura & Fujita (Reference Yoshimura and Fujita2020); i.e.

$\eta _{rms}/h$ proposed by Yoshimura & Fujita (Reference Yoshimura and Fujita2020); i.e. ![]() $\eta _{rms}/h= 4.5\times 10^{-3}{Fr}^2$ where

$\eta _{rms}/h= 4.5\times 10^{-3}{Fr}^2$ where ![]() $Fr=\bar {U}/\sqrt {gh}$ is defined based on the bulk mean velocity

$Fr=\bar {U}/\sqrt {gh}$ is defined based on the bulk mean velocity ![]() $\bar {U}$ and, in the present study,

$\bar {U}$ and, in the present study, ![]() $\bar {U}=15.6 u_\tau$.

$\bar {U}=15.6 u_\tau$.

2.3.2. Dataset preprocessing and training

For each case, the dataset consists of a series of instantaneous flow snapshots stored at constant intervals for a period of time. The intervals and the total number of samples collected, denoted by ![]() $\Delta t u_\tau /h$ and

$\Delta t u_\tau /h$ and ![]() $N$, respectively, are listed in table 2. We note that the simulations are performed on a computational grid of size

$N$, respectively, are listed in table 2. We note that the simulations are performed on a computational grid of size ![]() $N_x \times N_y \times N_z=256\times 256 \times 128$. The simulation data are interpolated onto the input and output grids, which are uniform grids with coarser resolutions, as listed in table 1, to reduce the computational cost of the training process and the consumption of GPU memory. Although the reduced resolutions remove some fine scale structures from the training data compared with the original DNS data, we find that an increased resolution has a negligible effect on the reconstruction (see details in § 3 of the supplementary material), indicating that the present resolution is adequate for resolving the reconstructed structures. We apply random translations to the flow fields in the horizontal periodic directions, i.e. in the

$N_x \times N_y \times N_z=256\times 256 \times 128$. The simulation data are interpolated onto the input and output grids, which are uniform grids with coarser resolutions, as listed in table 1, to reduce the computational cost of the training process and the consumption of GPU memory. Although the reduced resolutions remove some fine scale structures from the training data compared with the original DNS data, we find that an increased resolution has a negligible effect on the reconstruction (see details in § 3 of the supplementary material), indicating that the present resolution is adequate for resolving the reconstructed structures. We apply random translations to the flow fields in the horizontal periodic directions, i.e. in the ![]() $x$ and

$x$ and ![]() $y$ directions, and obtain a total of

$y$ directions, and obtain a total of ![]() $N_i$ samples, as listed in table 3. This process, known as data augmentation, which artificially increases the amount of data via simple transformations, can help reduce overfitting and increase the generalisation performance of NNs, especially when it is difficult to obtain big data (Shorten & Khoshgoftaar Reference Shorten and Khoshgoftaar2019). Here, owing to the high computational cost of free-surface flow simulations, we adopt this technique to expand the datasets. As discussed in § 2.1, this process can also improve the translation invariance of the CNN (Kauderer-Abrams Reference Kauderer-Abrams2017). The surface elevation in the input is normalised by

$N_i$ samples, as listed in table 3. This process, known as data augmentation, which artificially increases the amount of data via simple transformations, can help reduce overfitting and increase the generalisation performance of NNs, especially when it is difficult to obtain big data (Shorten & Khoshgoftaar Reference Shorten and Khoshgoftaar2019). Here, owing to the high computational cost of free-surface flow simulations, we adopt this technique to expand the datasets. As discussed in § 2.1, this process can also improve the translation invariance of the CNN (Kauderer-Abrams Reference Kauderer-Abrams2017). The surface elevation in the input is normalised by ![]() $\eta _{rms}$ of each case; the surface velocities are normalised by the root-mean-square value of all three velocity components,

$\eta _{rms}$ of each case; the surface velocities are normalised by the root-mean-square value of all three velocity components, ![]() ${\langle (u_s^2 + v_s^2 + w_s^2)/3 \rangle }^{1/2}$; the subsurface velocities are normalised by the root-mean-square magnitude of velocity fluctuations in the subsurface flow field,

${\langle (u_s^2 + v_s^2 + w_s^2)/3 \rangle }^{1/2}$; the subsurface velocities are normalised by the root-mean-square magnitude of velocity fluctuations in the subsurface flow field, ![]() ${\langle (u'^2+v'^2+w'^2)/3 \rangle }^{1/2}$. This normalisation uniformly scales three velocity components because it is necessary to obtain a divergence-free velocity.

${\langle (u'^2+v'^2+w'^2)/3 \rangle }^{1/2}$. This normalisation uniformly scales three velocity components because it is necessary to obtain a divergence-free velocity.

Table 3. The sampling parameters and the sizes of the datasets, including: the non-dimensionalised sampling interval ![]() $\Delta t u_\tau /h$, the total number of snapshots sampled from simulations

$\Delta t u_\tau /h$, the total number of snapshots sampled from simulations ![]() $N$, the total number of snapshots after augmentation

$N$, the total number of snapshots after augmentation ![]() $N_i$, the number of snapshots in the training set

$N_i$, the number of snapshots in the training set ![]() $N_{train}$, the number of snapshots in the validation set

$N_{train}$, the number of snapshots in the validation set ![]() $N_{{val}}$ and the number of snapshots in the test set

$N_{{val}}$ and the number of snapshots in the test set ![]() $N_{test}$.

$N_{test}$.

Each dataset is split into training, validation and test sets with ![]() $75\,\%$,

$75\,\%$, ![]() $10\,\%$ and

$10\,\%$ and ![]() $15\,\%$ of the total snapshots, respectively. The numbers of snapshots in each set are listed in table 3. The training set is used to train the network and the validation set is used to monitor the performance of the network during the training process. The training procedure is stopped when the loss function (2.4) computed over the validation set no longer improves, an indication that the model is overfitting (see § 5 of the supplementary material for learning curves). The test set is used to evaluate the network. It should be noted that the training, validation and test sets are obtained from different time segments of the simulations such that the flow snapshots in the test set do not have strong correlations with those in the training set.

$15\,\%$ of the total snapshots, respectively. The numbers of snapshots in each set are listed in table 3. The training set is used to train the network and the validation set is used to monitor the performance of the network during the training process. The training procedure is stopped when the loss function (2.4) computed over the validation set no longer improves, an indication that the model is overfitting (see § 5 of the supplementary material for learning curves). The test set is used to evaluate the network. It should be noted that the training, validation and test sets are obtained from different time segments of the simulations such that the flow snapshots in the test set do not have strong correlations with those in the training set.

The LSE also uses the same training set as in CNN to determine the operator ![]() $\mathcal {L}$, i.e. the LSE also uses the interpolated simulation data for reconstructions. However, random translations are not applied to the dataset for the LSE reconstructions because the LSE method is inherently translation-invariant.

$\mathcal {L}$, i.e. the LSE also uses the interpolated simulation data for reconstructions. However, random translations are not applied to the dataset for the LSE reconstructions because the LSE method is inherently translation-invariant.

3. Flow reconstruction performance

In this section, the performance of the CNN and LSE models in terms of reconstructing turbulent free-surface open-channel flows is compared both qualitatively and quantitatively. The subsurface flow structure features that can be inferred by the two models are also discussed. It should be noted that in this section, the cases with different ![]() ${Fr}_\tau$ are processed individually, i.e. each case has its own kernel weights for the CNN or linear operator for the LSE such that the reconstruction errors are minimised for that specific case.

${Fr}_\tau$ are processed individually, i.e. each case has its own kernel weights for the CNN or linear operator for the LSE such that the reconstruction errors are minimised for that specific case.

3.1. Instantaneous flow features

We first inspect the instantaneous flow fields to qualitatively assess the performance of the CNN and LSE methods. In this section, unless specified otherwise, we use one snapshot in the test set for the case with ![]() ${Fr}_\tau =0.08$ as an example to compare the reconstructed flow field and the ground truth from DNS. It should also be noted that hereafter, unless specified otherwise, the DNS results as the ground truth refer to the velocity fields on the uniform reconstruction grid as described in § 2.3.2.

${Fr}_\tau =0.08$ as an example to compare the reconstructed flow field and the ground truth from DNS. It should also be noted that hereafter, unless specified otherwise, the DNS results as the ground truth refer to the velocity fields on the uniform reconstruction grid as described in § 2.3.2.

Figure 4 compares the subsurface streamwise velocity fluctuations estimated by the CNN and LSE methods with the DNS results. Near the surface at ![]() $z/h=0.9$, the DNS result (figure 4a) shows that the regions with negative

$z/h=0.9$, the DNS result (figure 4a) shows that the regions with negative ![]() $u'$ values, i.e. the regions with low-speed streamwise velocities, are characterised by patchy shapes. The high-speed regions distributed around the low-speed patches can appear in narrow-band-like patterns. The patchy low-speed and streaky high-speed structures of the near-surface streamwise motions are consistent with the open-channel flow simulation results obtained by Yamamoto & Kunugi (Reference Yamamoto and Kunugi2011). Despite some minor differences, both the CNN and LSE methods provide reasonably accurate reconstructions. In the high-speed regions, the LSE method (figure 4c) predicts the amplitudes of the velocity fluctuations slightly more accurately than the CNN method (figure 4b). Moreover, the contours of the LSE reconstruction, especially in high-speed regions, have sharper edges, indicating that the LSE method can predict higher gradients than the CNN method. This result suggests that the LSE approach can capture more small-scale motions, thereby yielding slightly better reconstruction performance in the high-speed regions with narrow-band patterns. On the other hand, the CNN method can more accurately predict the low-speed patches, whereas the LSE method tends to underestimate the fluctuations in the low-speed regions. Overall, both methods perform similarly well in the near-surface regions.

$u'$ values, i.e. the regions with low-speed streamwise velocities, are characterised by patchy shapes. The high-speed regions distributed around the low-speed patches can appear in narrow-band-like patterns. The patchy low-speed and streaky high-speed structures of the near-surface streamwise motions are consistent with the open-channel flow simulation results obtained by Yamamoto & Kunugi (Reference Yamamoto and Kunugi2011). Despite some minor differences, both the CNN and LSE methods provide reasonably accurate reconstructions. In the high-speed regions, the LSE method (figure 4c) predicts the amplitudes of the velocity fluctuations slightly more accurately than the CNN method (figure 4b). Moreover, the contours of the LSE reconstruction, especially in high-speed regions, have sharper edges, indicating that the LSE method can predict higher gradients than the CNN method. This result suggests that the LSE approach can capture more small-scale motions, thereby yielding slightly better reconstruction performance in the high-speed regions with narrow-band patterns. On the other hand, the CNN method can more accurately predict the low-speed patches, whereas the LSE method tends to underestimate the fluctuations in the low-speed regions. Overall, both methods perform similarly well in the near-surface regions.

Figure 4. Comparisons among the instantaneous streamwise velocity fluctuations ![]() $u'$ (a,d,g) obtained from the DNS results and the fields reconstructed by (b,e,h) the CNN and (c, f,i) the LSE methods. The

$u'$ (a,d,g) obtained from the DNS results and the fields reconstructed by (b,e,h) the CNN and (c, f,i) the LSE methods. The ![]() $x$–

$x$–![]() $y$ planes at (a–c)

$y$ planes at (a–c) ![]() $z/h=0.9$, (d–f)

$z/h=0.9$, (d–f) ![]() $z/h=0.6$ and (g–i)

$z/h=0.6$ and (g–i) ![]() $z/h=0.3$ are plotted for the case of

$z/h=0.3$ are plotted for the case of ![]() ${Fr}_\tau =0.08$.

${Fr}_\tau =0.08$.

Away from the free surface, close to the centre of the channel (![]() $z/h=0.6$) (figure 4d), we can observe increases in the streamwise scales of

$z/h=0.6$) (figure 4d), we can observe increases in the streamwise scales of ![]() $u'$ compared with those of the near-surface region (

$u'$ compared with those of the near-surface region (![]() $z/h=0.9$). The high-speed and low-speed regions start to appear as relatively long streamwise streaks and alternate in the spanwise direction. At this height, the reconstructions provided by both the CNN and LSE methods are not as accurate as their reconstructions near the surface. However, the CNN method (figure 4e) provides a better reconstruction than the LSE method (figure 4f) in that the former better captures the streaky pattern of

$z/h=0.9$). The high-speed and low-speed regions start to appear as relatively long streamwise streaks and alternate in the spanwise direction. At this height, the reconstructions provided by both the CNN and LSE methods are not as accurate as their reconstructions near the surface. However, the CNN method (figure 4e) provides a better reconstruction than the LSE method (figure 4f) in that the former better captures the streaky pattern of ![]() $u'$. The structures predicted by the LSE method generally appear shorter than those in the ground truth. Furthermore, although the reconstructed velocities provided by both methods exhibit underpredicted magnitudes, the velocity magnitudes predicted by the CNN method are clearly higher than those predicted by the LSE method and follow the DNS results more closely.

$u'$. The structures predicted by the LSE method generally appear shorter than those in the ground truth. Furthermore, although the reconstructed velocities provided by both methods exhibit underpredicted magnitudes, the velocity magnitudes predicted by the CNN method are clearly higher than those predicted by the LSE method and follow the DNS results more closely.

Farther away from the surface at ![]() $z/h=0.3$, the streamwise streaks become more pronounced, and the intensities of the fluctuations become stronger (figure 4g); these are the typical features of turbulent flows affected by strong shear near the wall. The CNN method (figure 4h), despite its underestimated velocity magnitudes and missing small-scale structures, can still predict distributions for the large-scale streaks that are similar to the DNS result. In contrast, the streaky structures in the reconstruction provided by LSE (figure 4i) are far less discernible, and the magnitudes of the velocity fluctuations are also weaker.