1. Introduction

Turbulence is a chaotic, spatio-temporal, multi-scale nonlinear phenomenon. Thus, it generally requires huge costs to accurately measure or simulate with sufficiently high resolution. In particular, direct numerical simulation has been actively used in the study of turbulence. However, securing the computational resources needed to resolve even the smallest-scale motions of turbulence becomes increasingly challenging as the Reynolds number increases. To help resolve this problem, neural networks (NNs), which have the ability to approximate arbitrary nonlinear functions (Hornik, Stinchcombe & White 1989), as well as linear-theory-based methods such as proper orthogonal decomposition (Berkooz, Holmes & Lumley 1993), linear stochastic estimation and the Kalman filter, have been studied. Indeed, there have been attempts to apply neural networks to represent turbulence (Lee et al. 1997; Milano & Koumoutsakos 2002). However, those applications were based on shallow learning and, thus, were restricted to the extraction of simple correlations between turbulence quantities at two close locations in a near-wall flow. In recent years, deep neural networks (DNNs) have been extended to various fields of turbulence research, owing to the development of data-driven learning algorithms (e.g. deep learning; LeCun, Bengio & Hinton 2015), computational equipment (e.g. graphics processing units), big data (e.g. the Johns Hopkins Turbulence Database (JHTDB); Perlman et al. 2007) and open-source code (e.g. TensorFlow; Abadi et al. 2015).

Various deep-learning applications have recently been developed for broad areas of turbulence research (Kutz 2017; Brenner, Eldredge & Freund 2019; Duraisamy, Iaccarino & Xiao 2019; Brunton, Noack & Koumoutsakos 2020; Fukami, Fukagata & Taira 2020a; Pandey, Schumacher & Sreenivasan 2020). Ling, Kurzawski & Templeton (2016) proposed a tensor-based NN by embedding the Galilean invariance of a Reynolds-averaged Navier–Stokes (RANS) model, showing a greater performance improvement than linear and nonlinear eddy viscosity models. Parish & Duraisamy (2016), Wang, Wu & Xiao (2017), and other researchers have actively engaged in improving RANS models (e.g. Kutz 2017; Duraisamy et al. 2019). On the other hand, Gamahara & Hattori (2017) proposed a large-eddy simulation (LES)-closure model based on DNN for wall-bounded turbulence. It was then extended to other flows, such as two-dimensional (2-D) turbulence (Maulik et al. 2019) and homogeneous isotropic turbulence (Beck, Flad & Munz 2019; Xie et al. 2019). Additionally, the prediction of the temporal evolution of turbulent flows has been actively pursued. As a fundamental example, Lee & You (2019) studied the historical prediction of flow around a cylinder using generative adversarial networks (GANs). Srinivasan et al. (2019) predicted the temporal behaviour of simplified shear turbulence expressed as solutions of nine ordinary differential equations using a recurrent NN (RNN). Kim & Lee (2020a) proposed a high-resolution inflow turbulence generator at various Reynolds numbers, combining a GAN and an RNN. As another noticeable attempt to apply machine learning to fluid dynamics, Raissi, Yazdani & Karniadakis (2020) reconstructed velocity and pressure fields from only visualizable concentration data based on a physics-informed NN framework. Recently, deep-reinforcement learning has been applied to fluid dynamics, such as observations of how swimmers efficiently use energy (Verma, Novati & Koumoutsakos 2018) and the development of a new flow-control scheme (Rabault et al. 2019).

Apart from the above studies, the super-resolution reconstruction of turbulent flows has recently emerged as an interesting topic. This capability would help researchers overcome environments in which only partial or low-resolution spatio-temporal data are available, owing to the limitations of measurement equipment or computational resources. Particularly, if direct numerical simulation (DNS)-quality data could be reconstructed from data obtained via LES, it would be very helpful for subgrid-scale modelling. Maulik & San (2017) proposed a shallow NN model that could recover a turbulent flow field from a filtered or noise-added one. Fukami, Fukagata & Taira (2019a, 2020b) and Liu et al. (2020) reconstructed a flow field from a low-resolution filtered field using a convolutional NN (CNN). The method shows significant potential. The CNNs were trained to reduce the mean-squared error (MSE) between the predicted and true values of target quantities. However, small-scale structures were not represented well, and non-physical features were observed when the resolution ratio between the target and input fields was large. Deng et al. (2019) trained a GAN on particle image velocimetry (PIV) measurements of the flow around a cylinder and found that small-scale structures were better expressed than when only the MSE was used. Similarly, Xie et al. (2018) and Werhahn et al. (2019) applied GANs to the super-resolution of smoke data. In all these prior studies, researchers used a supervised deep-learning model, which required labelled low- and high-resolution data for training. Therefore, paired data were artificially generated by filtering or averaging so that supervised learning could be made possible.
In a more practical environment, however, only unpaired data are available (e.g. LES data in the absence of corresponding DNS data or measured data using PIV with limited resolution). For more practical and wider applications, a more generalized model that can be applied, even when paired data are not available, is needed. Kim & Lee (2020a) recently showed that unsupervised learning networks could generate turbulent flow fields for inflow boundary conditions from random initial seeds. This indeed demonstrates that a DNN can learn and reflect hidden similarities in unpaired turbulence data. Based on this evidence, we presume that super-resolution reconstruction of unpaired turbulence data is now possible by learning the similarities among the unpaired data. Such an extension would enable outcomes previously thought impossible. For example, the simultaneous learning of LES and DNS data becomes possible, and, thus, high-resolution turbulence fields with DNS quality can be reconstructed from LES fields. This can be useful for the development of subgrid-scale models through the production of paired data. Another example is the denoising and resolution enhancement of real-world data such as PIV measurements. This can be accomplished by learning noise-added experimental data and high-fidelity (experimental or simulation) data simultaneously. These are only a few examples of the new possibilities.

In this paper we propose an unsupervised deep-learning model that can be used even in the absence of labelled turbulent data. For a super-resolution reconstruction using unpaired data, we apply a cycle-consistent GAN (CycleGAN) (Zhu et al. 2017) to various turbulent flows as an unsupervised learning model. The detailed methodology is presented in § 2. For comparison, we use bicubic interpolation and supervised learning models (i.e. CNN and conditional GAN (cGAN)). The models are applied to three examples, as shown in figure 1. First, with homogeneous isotropic turbulence, a reconstruction of the DNS flow field from a top-hat-filtered (i.e. low-resolution) one is considered in § 3.1. Next, in § 3.2 we cover the reconstruction of full DNS data from partially measured (i.e. low-resolution) data in wall-bounded turbulence. In §§ 3.1 and 3.2 we train our CycleGAN model using unpaired datasets and the supervised learning models using paired ones. Finally, in § 3.3 DNS-quality reconstruction from LES is addressed using independently obtained LES and DNS data of wall-bounded turbulence. In this case, only the unsupervised learning model is applicable. We conclude our study with a discussion in § 4.

Figure 1. Illustration of the present work. The proposed CycleGAN is an unsupervised learning model for the super-resolution reconstruction of turbulence. For comparison, CNN and cGAN are used as supervised learning models. In this study, three examples are considered: filtered homogeneous isotropic turbulence, partially measured wall-bounded turbulence and large-eddy simulation (LES).

2. Methodology

In this study we apply CycleGAN to an unsupervised learning task. A typical GAN model consists of two networks: a generator network ($G$) and a discriminator network ($D$) (Goodfellow et al. 2014). In the field of image generation, $G$ generates a fake image similar to the real one by applying convolution and upsampling to random noise $z$; $D$ distinguishes between the fake image and the real one and returns a probability value between 0 and 1 by applying convolution and downsampling. The final goal is to obtain $G$, which can generate fake images that are difficult to distinguish from real ones. This process is similar to a min–max two-player game for the value function, $V(D,G)$,

\begin{equation} \min_G \max_D V(D,G) = \mathbb{E}_{x\sim P_{X}}[\log D(x)] + \mathbb{E}_{z\sim P_{Z}}[\log (1-D(G(z)))], \tag{2.1} \end{equation}

where $X$ is a real image set, and $x\sim P_{X}$ means that $x$ is sampled from the real image distribution. Here $z$ is a random noise vector of latent space used as the input to the generator. Network $G$ is expected to generate a fake image similar to the real one. Thus, trainable parameters in $G$ are trained in the direction of $D(G(z))$ having a value close to 1. On the other hand, those in $D$ are trained in the direction of $D(x)$ returning a value close to 1 and $D(G(z))$ returning a value close to 0. Thus, even a slight difference between the real image and the generated one can be distinguished. In other words, the $G$ parameters are adjusted in a direction that minimizes $V(D,G)$, and the $D$ parameters are adjusted in a direction that maximizes $V(D,G)$. From this competitive learning, we can expect to obtain a generator, $G$, capable of providing a new image having a distribution similar to a real one. In the present work, GAN is applied to super-resolution reconstruction in the frame of finding an input–output mapping function, and, instead of random noise, low-resolution image data are used as the input of $G$, as illustrated in figure 2.
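The competing objectives can be made concrete with a small numerical sketch. It assumes the standard log-form value function of Goodfellow et al. (2014), $V(D,G)=\mathbb{E}[\log D(x)]+\mathbb{E}[\log(1-D(G(z)))]$, and estimates it from lists of discriminator outputs. The function name `gan_value`, the `eps` guard and the sample values are illustrative assumptions, not part of the original work.

```python
import math


def gan_value(d_real, d_fake, eps=1e-12):
    """Sample estimate of V(D, G) = E[log D(x)] + E[log(1 - D(G(z)))].

    d_real: discriminator outputs D(x) on real samples, each in (0, 1).
    d_fake: discriminator outputs D(G(z)) on generated samples.
    eps:    small constant guarding against log(0) (an implementation detail).
    """
    real_term = sum(math.log(p + eps) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p + eps) for p in d_fake) / len(d_fake)
    return real_term + fake_term


# A discriminator that separates real from fake well pushes V towards 0 ...
v_sharp = gan_value(d_real=[0.90, 0.95], d_fake=[0.10, 0.05])
# ... whereas one that is fully fooled (0.5 everywhere) gives 2 * log(0.5).
v_fooled = gan_value(d_real=[0.5, 0.5], d_fake=[0.5, 0.5])
```

In this picture the discriminator is trained to move from `v_fooled` towards `v_sharp`, and the generator is trained to move in the opposite direction.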

Figure 2. The GAN architecture consists of two networks, the generator ($G$) and the discriminator ($D$). Here $G$ learns to reconstruct the high-resolution flow field ($\tilde {Y}^{HR}$) from the low-resolution field (${X}^{LR}$), while $D$ learns to distinguish $\tilde {Y}^{HR}$ from the target field ($Y^{HR}$).

For an unsupervised learning model of unpaired turbulence, we adopt CycleGAN (Zhu et al. 2017) to find a mapping function between unpaired data, $X$ and $Y$. We aim to obtain a model that performs super-resolution reconstruction when the low- and high-resolution flow fields are not matched. Here $X$ and $Y$ are low- and high-resolution datasets, respectively. CycleGAN consists of two generator networks, $(G, F)$, and two discriminator networks, $(D_Y, D_X)$, as shown in figure 3(a,b). Generators $G$ and $F$ are networks mapping $X \longrightarrow Y$ and $Y \longrightarrow X$, respectively. Discriminators $D_Y$ and $D_X$ distinguish between a fake image from the generators and a real image, returning a probability value. Here $D_Y$ distinguishes between $G(x)$ generated by $G$ and $y$ from $Y$, whereas $D_X$ distinguishes between $F(y)$ generated by $F$ and $x$ from $X$. The objective function of CycleGAN consists of the GAN and cycle-consistency losses. The GAN loss helps the generators find the distribution of the target image. The cycle-consistency loss connects the two generators, $(G,F)$, and enforces the dependence of each generated output on its input. First, the GAN loss function is used as

\begin{equation} \mathcal {L}_{GAN}(G,D_Y) = \mathbb{E}_{y\sim P_{Y}}[\log D_Y(y)] + \mathbb{E}_{x\sim P_{X}}[\log (1-D_Y(G(x)))], \tag{2.2} \end{equation}

\begin{equation} \mathcal {L}_{GAN}(F,D_X) = \mathbb{E}_{x\sim P_{X}}[\log D_X(x)] + \mathbb{E}_{y\sim P_{Y}}[\log (1-D_X(F(y)))], \tag{2.3} \end{equation}

where $x$ and $y$ are the images sampled from the $X$ and $Y$ datasets, respectively. Here $G$ is trained in a direction to minimize $\mathcal {L}_{GAN}(G,D_Y)$, and discriminator $D_Y$ is trained in a direction to maximize $\mathcal {L}_{GAN}(G,D_Y)$; $F$ and $D_X$ in (2.3) are trained in the same way.

Figure 3. CycleGAN architecture consisting of (a) forward GAN and (b) backward GAN. Here $G$ and $F$ are generators, and $D_Y$ and $D_X$ are discriminators. (c) Forward cycle-consistency loss: $x\rightarrow G(x)\rightarrow F(G(x)) \approx x$, and backward cycle-consistency loss: $y\rightarrow F(y)\rightarrow G(F(y)) \approx y$.

In principle, the properly trained generators, $G$ and $F$, can provide data having distributions similar to those of the target data, $Y$ and $X$. However, the above loss cannot guarantee that the generated image will be properly dependent upon the input image. In other words, the high-resolution image, $G(x)$, reconstructed from the low-resolution one, $x$, could have the characteristics of the target dataset, $Y$, while bearing no large-scale similarity to $x$. Therefore, a cycle-consistent loss that reduces the space of the mapping function with $G$ and $F$ is additionally used (see figure 3c). The cycle-consistent loss helps to ensure the dependency of the generated data on the input. This loss function consists of two terms for domains $X$ and $Y$. In the left panel of figure 3(c), the forward cycle-consistency loss reduces the gap between image $x$ and $F(G(x))$ in domain $X$. It makes $G(x)$ dependent upon $x$ ($x \rightarrow G(x) \rightarrow F(G(x)) \approx x$). Similarly, in the right panel of figure 3(c), the backward cycle-consistency loss reduces the gap between image $y$ and $G(F(y))$ in domain $Y$ and makes $F(y)$ dependent upon $y$ ($y \rightarrow F(y) \rightarrow G(F(y)) \approx y$). The cycle-consistency losses can be expressed as

\begin{equation} \mathcal {L}_{cycle}(G,F) = \mathbb{E}_{x\sim P_{X}}[\parallel F(G(x)) - x \parallel ^2_2] + \mathbb{E}_{y\sim P_{Y}}[\parallel G(F(y)) - y \parallel ^2_2], \tag{2.4} \end{equation}

where the first term on the right-hand side is the forward cycle-consistency loss, and the second term is the backward cycle-consistency loss. Here $\parallel \cdot \parallel ^2_2$ denotes mean-squared error, which is normalized by vector size. The MSE between $F(G(x))$ and $x$ and that between $G(F(y))$ and $y$ are used. The cycle-consistency loss provides a decisive effect on learning the unpaired data. The final objective function used in this study is

\begin{equation} \mathcal {L}(G,F,D_Y,D_X) = \mathcal {L}_{GAN}(G,D_Y) + \mathcal {L}_{GAN}(F,D_X) + \lambda \mathcal {L}_{cycle}(G,F), \tag{2.5} \end{equation}

where $\lambda$ is a weight factor and is fixed at $10$. Generators $G$ and $F$ are trained in the direction of minimizing $\mathcal {L}(G,F,D_Y,D_X)$, whereas discriminators $D_Y$ and $D_X$ are trained in the direction of maximizing $\mathcal {L}(G,F,D_Y,D_X)$. Learning with the above GAN loss often diverges, because the discriminator easily distinguishes between the generated image and the target one before parameters in the generator are sufficiently trained. Additionally, there is a well-known problem (i.e. mode collapse) in which the generation distribution is restricted to a small domain, although training does not diverge. To address these problems, we change the above GAN loss to a Wasserstein GAN (WGAN) loss with a gradient penalty (GP) (Gulrajani et al. 2017). With the WGAN-GP loss, the GP term is added, and the probabilistic divergence between the real image and the generated one becomes continuous with respect to the parameters of the generator. Training and performance can, therefore, be stabilized and improved.
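The structure of the objective described above (two GAN losses plus a cycle-consistency loss weighted by $\lambda = 10$) can be sketched on toy 1-D signals. This is an illustration only: it uses the log-form GAN loss rather than the WGAN-GP loss adopted for training, and the stand-in "networks" `upsample`, `downsample` and `undecided` are hypothetical placeholders, not the networks of this study.

```python
import math

LAMBDA = 10.0  # cycle-consistency weight, as stated in the text


def mse(a, b):
    """Squared L2 distance normalized by vector size."""
    return sum((p - q) ** 2 for p, q in zip(a, b)) / len(a)


def cycle_loss(G, F, xs, ys):
    """Forward term MSE(F(G(x)), x) plus backward term MSE(G(F(y)), y)."""
    forward = sum(mse(F(G(x)), x) for x in xs) / len(xs)
    backward = sum(mse(G(F(y)), y) for y in ys) / len(ys)
    return forward + backward


def gan_loss(D, real, fake, eps=1e-12):
    """Log-form GAN loss E[log D(real)] + E[log(1 - D(fake))]."""
    real_term = sum(math.log(D(s) + eps) for s in real) / len(real)
    fake_term = sum(math.log(1.0 - D(s) + eps) for s in fake) / len(fake)
    return real_term + fake_term


def total_objective(G, F, D_Y, D_X, xs, ys):
    """GAN loss for (G, D_Y) + GAN loss for (F, D_X) + LAMBDA * cycle loss."""
    fake_y = [G(x) for x in xs]
    fake_x = [F(y) for y in ys]
    return (gan_loss(D_Y, ys, fake_y)
            + gan_loss(D_X, xs, fake_x)
            + LAMBDA * cycle_loss(G, F, xs, ys))


# Hypothetical stand-ins on 1-D signals (not the networks of the paper).
def upsample(x):        # plays the role of G: X -> Y
    return [v for v in x for _ in range(2)]


def downsample(y):      # plays the role of F: Y -> X
    return [(y[i] + y[i + 1]) / 2 for i in range(0, len(y), 2)]


def undecided(_):       # a discriminator that always answers 0.5
    return 0.5


xs = [[1.0, 2.0]]                # unpaired low-resolution samples
ys = [[0.0, 2.0, 4.0, 4.0]]      # unpaired high-resolution samples
l_cycle = cycle_loss(upsample, downsample, xs, ys)
l_total = total_objective(upsample, downsample, undecided, undecided, xs, ys)
```

With these stand-ins the forward term vanishes, because averaging exactly undoes the repetition, while the backward term does not, because repetition cannot restore the small-scale variation removed by averaging. Reducing that backward term is what pushes a trainable $G$ to produce small scales that are consistent with its input.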

To effectively handle the spatial structures of turbulence, a CNN comprising discrete convolution operations and nonlinear functions is used for generators $G$ and $F$ and discriminators $D_Y$ and $D_X$. To change the dimension of the image (i.e. the flow field), up- and down-sampling are applied to generators $G$ and $F$, respectively. Downsampling is used for discriminators $D_X$ and $D_Y$. Additionally, fully connected layers are used as the last two layers of the discriminators. As a nonlinear function, a leaky rectified linear unit (ReLU) is used, i.e.

\begin{equation} f(x) = \left\{\begin{array}{@{}ll} x, & x\geqslant 0,\\ \alpha x, & x<0, \end{array} \right. \tag{2.6} \end{equation}

where $\alpha = 0.2$. This nonlinear function reliably updates the weights by avoiding the dead-ReLU problem, in which the output is $0$ for any negative input. We have tried both the ReLU and the leaky ReLU functions. The latter had a slightly better qualitative performance, although both functions performed well enough. We also tested a linear activation function and observed significantly poorer performance than with the nonlinear models. This indicates that a strongly nonlinear model, such as a deep neural network, is needed to capture the relation between low- and high-resolution turbulence. Detailed hyperparameters used for training and network architecture are provided in appendix A. For implementation, we use the TensorFlow open-source library (Abadi et al. 2015).
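As a small illustration of why the leaky variant avoids the dead-ReLU problem, the sketch below evaluates both activations and their slopes for a negative input, using $\alpha = 0.2$ from the text. The function names are illustrative; an actual implementation would use the activation provided by the deep-learning library.

```python
def relu(x):
    """Standard ReLU: zero output for any negative input."""
    return x if x >= 0 else 0.0


def leaky_relu(x, alpha=0.2):
    """Leaky ReLU: identity for x >= 0, slope alpha for x < 0."""
    return x if x >= 0 else alpha * x


def relu_grad(x):
    """Slope of ReLU: zero for negative inputs, so no weight update passes."""
    return 1.0 if x >= 0 else 0.0


def leaky_relu_grad(x, alpha=0.2):
    """Slope of leaky ReLU: alpha for negative inputs, so updates still pass."""
    return 1.0 if x >= 0 else alpha
```

For a negative input, `relu_grad` returns zero, so a unit that only receives negative inputs stops learning, whereas `leaky_relu_grad` returns `alpha` and keeps a nonzero gradient.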

To assess our unsupervised learning, we consider supervised learning that adopts CNN and cGAN. Their generators comprise the same network as does $G$ in the CycleGAN. In our study we did not consider a fully connected NN because of the inefficiently large number of trainable parameters versus the performance. The CNN is trained with the MSE that represents the pixel loss between the target flow field and the reconstructed one. With an L2 regularization added to prevent overfitting, the objective function of the CNN consists of the sum of MSE and L2 regularization loss, as follows:
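A form consistent with this description can be sketched as below. The symbols $\mathcal {L}_{CNN}$, $y$, $G(x)$, $w$ and $\lambda$ follow the surrounding text; the expectation notation and the $1/2$ prefactor on the penalty are assumptions (a common weight-decay convention) that the text itself does not fix.

\begin{equation} \mathcal {L}_{CNN} = \mathbb{E}_{x\sim P_{X}}\left[\parallel y - G(x) \parallel ^2_2\right] + \frac{\lambda}{2}\sum_{w} \parallel w \parallel ^2_2 . \end{equation}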

Here, in the MSE of the data sampled during training, $y$ and $G(x)$ are the DNS flow field and the predicted one, respectively. The second term is the L2 regularization loss, where $w$ represents trainable weights. The strength of the regularization is denoted by $\lambda$, fixed at 0.0001. The CNN is trained in the direction of minimizing $\mathcal {L}_{CNN}$ to accurately predict the target flow field. Conditional GAN, as proposed by Mirza & Osindero (2014), is similar to GAN. The cGAN model applies the generator input as a condition to the discriminator to constrain the output of the generator to be dependent upon the input. In this study, the dependency on the low-resolution data is reflected in the reconstruction of the high-resolution data by using the low-resolution data as the condition. Thus, the correlation between the large-scale structure and the reconstructed small-scale structures of turbulence can be more accurately represented. The objective function of the cGAN is

\begin{equation} \mathcal {L}_{cGAN}(G,D) = \mathbb{E}_{x\sim P_{X},\, y\sim P_{Y}}[\log D(x,y)] + \mathbb{E}_{x\sim P_{X}}[\log (1-D(x,G(x)))], \tag{2.8} \end{equation}

where $x$ and $y$ are sampled low- and high-resolution turbulent flow fields, respectively. A low-resolution field is used as the input of the discriminator in addition to the high-resolution one ($y$ or $G(x)$). For example, flow-field information comprising a total of six channels, including high-resolution velocity vector fields and paired low-resolution fields, is used as input. Note that we can use cGAN only when paired data are provided.
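The six-channel discriminator input can be illustrated with a minimal sketch that stacks three low-resolution velocity channels with three high-resolution ones. How the low-resolution field is brought onto the high-resolution grid is not stated in this section, so the nearest-neighbour upsampling used here, along with the names `upsample_nearest` and `discriminator_input`, is purely an assumption for illustration.

```python
def upsample_nearest(field, r):
    """Repeat each value r times along both axes (assumed, illustrative choice)."""
    return [[v for v in row for _ in range(r)]
            for row in field for _ in range(r)]


def discriminator_input(low_res, high_res, r):
    """Stack the conditioning channels with the high-resolution channels.

    low_res:  list of 3 channels (u, v, w), each a 2-D list on the coarse grid.
    high_res: list of 3 channels, each a 2-D list on the fine grid
              (either the target y or the generator output G(x)).
    r:        resolution ratio between the fine and coarse grids.
    """
    condition = [upsample_nearest(channel, r) for channel in low_res]
    return condition + list(high_res)


# Toy example: 2x2 coarse grid, 4x4 fine grid, resolution ratio 2.
low = [[[1, 2], [3, 4]] for _ in range(3)]
high = [[[0] * 4 for _ in range(4)] for _ in range(3)]
stacked = discriminator_input(low, high, r=2)
```

Because the condition travels with every sample shown to the discriminator, a generated field that looks realistic but does not match its low-resolution input can still be rejected.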

In this study, the unpaired low- and high-resolution turbulent fields are used when training the CycleGAN, whereas the paired data are used when training the CNN and the cGAN. In the first two examples (§§ 3.1 and 3.2), paired data exist, because low-resolution data are obtained from high-resolution DNS data. When training the CycleGAN, the low- and high-resolution data are intentionally shuffled so that they are unpaired. In § 3.3 the LES and DNS data are naturally unpaired. Thus, the CNN and the cGAN cannot be trained, whereas the CycleGAN can be trained in the same way as in §§ 3.1 and 3.2.
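The intentional unpairing used in the first two examples can be sketched as follows. Low-resolution samples are derived from high-resolution ones, and the two sets are then shuffled independently so that their ordering carries no pairing information. The block averaging here is a simple 1-D stand-in for the filtering step, and the function names and `seed` argument are illustrative assumptions.

```python
import random


def block_average(signal, ratio):
    """Average non-overlapping blocks of `ratio` points (1-D stand-in filter).

    Assumes len(signal) is a multiple of ratio.
    """
    return [sum(signal[i:i + ratio]) / ratio
            for i in range(0, len(signal), ratio)]


def make_unpaired(high_res_fields, ratio, seed=0):
    """Derive low-resolution fields, then shuffle both sets independently."""
    low = [block_average(f, ratio) for f in high_res_fields]
    high = list(high_res_fields)      # copy, so the caller's list is untouched
    rng = random.Random(seed)
    rng.shuffle(low)
    rng.shuffle(high)
    return low, high


fields = [[0.0, 2.0, 4.0, 6.0], [1.0, 1.0, 3.0, 3.0], [8.0, 8.0, 0.0, 0.0]]
low_set, high_set = make_unpaired(fields, ratio=2)
```

After shuffling, both sets still contain exactly the same samples, but the i-th low-resolution sample no longer corresponds to the i-th high-resolution one in general, which mimics the naturally unpaired LES and DNS data of § 3.3.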

3. Results and discussion

3.1. Example 1: filtered homogeneous isotropic turbulence

In this section, using various resolution ratios, super-resolution reconstruction leveraging both supervised and unsupervised learning is considered for homogeneous isotropic turbulence at a Taylor-scale Reynolds number, $Re_\lambda = 418$. Here $Re_\lambda = \lambda u' / \nu$, where the Taylor microscale $\lambda = (15\nu u'^2/\epsilon )^{1/2}$, the root-mean-squared velocity $u' = (\langle u_iu_i \rangle /3)^{1/2}$, and $\nu$ and $\epsilon$ are the kinematic viscosity and dissipation rate, respectively. Data were obtained from the JHTDB. The governing equations were the incompressible Navier–Stokes equations. Direct numerical simulation was performed based on the pseudo-spectral method, and the domain and mesh size were $2{\rm \pi} \times 2{\rm \pi} \times 2{\rm \pi}$ and $1024\times 1024\times 1024$, respectively. The kinematic viscosity was $\nu =0.000185$, and the Kolmogorov length scale was $\eta = 0.00280$. Details are given in Perlman et al. (2007) and Li et al. (2008). We used 200 fields with ${\rm \Delta} t = 0.02$ for training and 10 fields with ${\rm \Delta} t = 0.2$ for validation. The fields for validation were well separated from the fields in the training data by the large-eddy turnover time. In the current study, we focus on the best performance of both supervised and unsupervised learning that we can achieve. Therefore, we tried to collect as much independent data as possible. We restricted our scope to the reconstruction of 2-D fields of three-dimensional (3-D) turbulent fields to confirm the plausibility of reconstructing turbulence using unsupervised learning. Input and output data were 2-D velocity fields ($u, v, w$) in an $x$–$y$ plane. All velocity components are closely related to one another, although, statistically, the three components are uncorrelated in isotropic turbulence. Therefore, we combined all the components in the construction of the learning network. Low-resolution velocity fields and filtered DNS (fDNS) data were obtained by applying downsampling and average pooling (i.e. top-hat filter) to high-resolution DNS data. Average pooling is a local average operation that extracts the mean value over some area of the velocity fields. The size of DNS data was $N_x \times N_y$, and that of the low resolution was $N_x / r \times N_y / r$, where $r$ is the resolution ratio. We considered three cases: $r=4$, $8$ and $16$. For training, the target (high-resolution) size was fixed at $N_x \times N_y = 128\times 128$, which was a sub-region extracted from the training fields. This choice of input and target-domain sizes was made based on our observation that the domain length of $128 {\rm \Delta} x ({=} 0.785)$ was greater than the integral length scale of the longitudinal two-point velocity autocorrelation of 0.373. This condition is an important guideline for the choice of the input domain, because the high-resolution data at any point in the domain are reconstructed solely from the data in the input domain.
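The top-hat filtering step described above can be sketched in a few lines of NumPy. This is a minimal illustration, assuming non-overlapping $r \times r$ averaging blocks and a field whose dimensions are divisible by $r$; the function name `top_hat_downsample` and the random stand-in field are hypothetical and are not taken from the original study.

```python
import numpy as np

def top_hat_downsample(field, r):
    """Average-pool a 2-D field over non-overlapping r x r blocks (top-hat filter)."""
    ny, nx = field.shape
    if ny % r or nx % r:
        raise ValueError("field dimensions must be divisible by r")
    # Split each axis into (blocks, r) and average over the two block-interior axes.
    return field.reshape(ny // r, r, nx // r, r).mean(axis=(1, 3))

# Stand-in for one 128 x 128 velocity patch (random data, for illustration only).
rng = np.random.default_rng(0)
dns_patch = rng.standard_normal((128, 128))

# Low-resolution counterparts for the three resolution ratios considered.
low_res = {r: top_hat_downsample(dns_patch, r) for r in (4, 8, 16)}
```

For a $128\times 128$ target, the three ratios give inputs of size $32\times 32$, $16\times 16$ and $8\times 8$, and block averaging preserves the mean of the field.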

To demonstrate the performance of unsupervised learning using CycleGAN for the super-resolution reconstruction of turbulent flows, we also tested, for comparison, a bicubic interpolation and two kinds of supervised learning by adopting CNN and cGAN. Bicubic interpolation is a simple method of generating high-resolution images through interpolation using data at 16 adjacent pixels without learning. CycleGAN was trained using unpaired fDNS and DNS fields, and CNN and cGAN were trained using paired fDNS and DNS fields. The same hyperparameters, except those of the network architecture, were used for each resolution ratio, $r$. The velocity, $u$, of the reconstructed 2-D field, using the test data, is presented in figure 4. Bicubic interpolation tends to blur the target turbulence and, thus, cannot reconstruct the small scales of the target flow field well, regardless of the resolution ratio. This indicates that bicubic interpolation is unsuitable for small-scale reconstruction of turbulence. However, data-driven approaches can reconstruct fairly well the small-scale structures that are not included in the input data. Convolutional neural networks can reconstruct a velocity field similar to that of the target data when $r=4$. As $r$ increases, the CNN shows only slight improvement over bicubic interpolation. Meanwhile, cGAN can generate high-quality velocity fields similar to the DNS ones, regardless of the input data resolution.
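For reference, the bicubic baseline can be sketched as a separable cubic convolution, which uses $4\times 4 = 16$ neighbouring points per output value. The sketch below is illustrative only: the Keys kernel with $a=-0.5$, the periodic wrap at the boundaries and the cell-centre alignment are assumptions, and the function names are hypothetical; the text does not state which bicubic implementation was used.

```python
import numpy as np

def cubic_kernel(s, a=-0.5):
    """Keys cubic convolution kernel; a = -0.5 is an assumed, commonly used value."""
    s = np.abs(s)
    inner = (a + 2) * s**3 - (a + 3) * s**2 + 1          # branch for |s| <= 1
    outer = a * s**3 - 5 * a * s**2 + 8 * a * s - 4 * a  # branch for 1 < |s| < 2
    return np.where(s <= 1, inner, np.where(s < 2, outer, 0.0))

def upsample_axis(f, r, axis):
    """Cubic interpolation by an integer factor r along one axis (periodic wrap)."""
    n = f.shape[axis]
    # Output sample positions in input-index units (cell-centre alignment).
    x = (np.arange(n * r) + 0.5) / r - 0.5
    i0 = np.floor(x).astype(int)
    t = x - i0
    shape = [1] * f.ndim
    shape[axis] = -1
    out = np.zeros(f.shape[:axis] + (n * r,) + f.shape[axis + 1:])
    for offset in (-1, 0, 1, 2):  # four neighbours along this axis
        w = cubic_kernel(t - offset).reshape(shape)
        out += np.take(f, (i0 + offset) % n, axis=axis) * w
    return out

def bicubic_upsample(field, r):
    """Separable bicubic upsampling of a 2-D field by a factor r."""
    return upsample_axis(upsample_axis(field, r, axis=0), r, axis=1)
```

Because the interpolated value is a fixed weighted average of the input samples, this procedure cannot create energy at scales that are absent from the input, which is consistent with the blurring noted above.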

Figure 4. Reconstructed instantaneous velocity field $(u)$ from a given low-resolution input field with homogeneous isotropic turbulence by various deep-learning models. The low-resolution field was obtained through top-hat filtering on the DNS field.

As also shown in figure 4, CycleGAN showed excellent performance in reconstructing the velocity field, reflecting the characteristics of the target, even though it used unsupervised learning. When $r=4$ and $8$, our model produced a flow field quite similar to that of the target and that of the cGAN reconstruction trained using paired data. When $r=16$, the field generated by CycleGAN differed slightly from the target in its point-by-point values. However, our model showed performance similar to that of cGAN.

The vorticity field $(\omega _z)$, obtained from the reconstructed velocity information, is presented in figure 5. Vorticity was not directly considered during the training process. As with the velocity fields, bicubic interpolation and CNN were unable to reconstruct the vorticity structures shown in the DNS as the resolution of the input data decreased. In particular, CNN produces non-physical vortical structures and underestimates their magnitude. This phenomenon becomes very severe as $r$ increases. However, both cGAN and CycleGAN generated vorticity structures similar to the DNS ones, although performance deteriorated slightly when $r =16$. This indicates that GAN-based models reflect the spatial correlation between velocity components well, unlike the CNN model.

We first investigated the MSE to rigorously compare the differences between the target and the reconstructed flow fields, as shown in table 1. The CNN had the lowest error in both velocity and vorticity, whereas cGAN and CycleGAN had relatively large errors for all $r$. A possible explanation for this is as follows. In turbulent flow, a low-resolution (or filtered) flow field obviously lacks the information to fully reconstruct a high-resolution field identical to the target field. As $r$ increases, the possible high-resolution solution space for a given low-resolution field becomes larger. In this situation, the best that a CNN trained to minimize the pointwise error against the DNS solution can possibly achieve is to generate the average of all the possible solutions. The reconstructed field therefore has non-physical features and lower magnitudes than the DNS field. On the other hand, the GAN-based models are trained by minimizing more sophisticated losses and reflect the spatial correlation and important features in the turbulence through the latent vector in the generation and discrimination stages. As a result, the GAN-based models recover flow fields within the possible solution space which better reflect the physical characteristics at the expense of relatively large pointwise errors. As we confirmed in figures 4 and 5, cGAN and CycleGAN have superior capability to reconstruct small-scale turbulence compared with CNN. It appears that considering only the pointwise error can lead to misinterpretations in the super-resolution reconstruction of turbulent flows, because even bicubic interpolation produces a smaller pointwise error than cGAN and CycleGAN. As will be detailed below, a comprehensive diagnosis of the reconstructed flow fields including statistics such as the energy spectrum, spatial correlation and probability distributions seems to be more effective in assessing the representation of small-scale turbulence structures.
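The two diagnostics used here, the vorticity computed from a reconstructed velocity field and the normalized MSE, can be sketched as follows. This is an illustrative sketch under stated assumptions: second-order finite differences on a uniform grid are assumed for the derivatives (the differentiation scheme is not specified in the text), arrays are assumed to be indexed as `[y, x]`, and the function names are hypothetical.

```python
import numpy as np

def vorticity_z(u, v, dx, dy):
    """omega_z = dv/dx - du/dy on a uniform grid; arrays are indexed [y, x]."""
    dv_dx = np.gradient(v, dx, axis=1)
    du_dy = np.gradient(u, dy, axis=0)
    return dv_dx - du_dy

def normalized_mse(pred, target):
    """MSE after scaling both fields by the standard deviation of the target."""
    sigma = target.std()
    return np.mean(((pred - target) / sigma) ** 2)

# Sanity check with solid-body rotation, u = -y and v = x, for which omega_z = 2.
y, x = np.meshgrid(np.linspace(-1, 1, 65), np.linspace(-1, 1, 65), indexing="ij")
u, v = -y, x
omega = vorticity_z(u, v, dx=x[0, 1] - x[0, 0], dy=y[1, 0] - y[0, 0])
```

With this normalization, a prediction that is offset from the target by one standard deviation everywhere has an error of 1, which gives a scale against which the entries of table 1 can be read.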

Figure 5. Vorticity field calculated from the reconstructed velocity fields obtained by various deep-learning models. The low-resolution velocity field was obtained through top-hat filtering on the DNS field.

Table 1. Mean-squared error of generated velocity and vorticity field for the resolution ratio, $r$. The velocity and vorticity were normalized using the standard deviation of the DNS field.

For a more quantitative assessment of the performance of the learning models, the probability density function (p.d.f.) of vorticity, $\textrm {p.d.f.}(\omega _z)$, for the three resolution ratios is given in figure 6(a–c). As expected, bicubic interpolation could not reproduce the wide vorticity p.d.f. of the DNS data, and CNN performed very poorly. On the other hand, the p.d.f. of cGAN and CycleGAN recovered that of the DNS well, regardless of $r$. The performance of learning models in representing small-scale structures of turbulence can be better investigated using an energy spectrum. The $x$-directional energy spectrum is defined as

where

Here, $\langle \rangle$ denotes an average operation, and $V_i$ represents the velocity components; $R_{V_iV_i}(p)$ is the $x$-directional two-point correlation of velocity. The transverse energy spectrum is obtained by the average of the $y$-directional spectrum of $u$, the $x$-directional spectrum of $v$, and the $x$- and $y$-directional spectra of $w$. The transverse energy spectra for $r=4$, 8 and 16 are presented in figures 6(d), 6(e) and 6(f), respectively. The vertical dotted line indicates the cutoff wavenumber, which is the maximum wavenumber of low-resolution fields. Bicubic interpolation and CNN cannot represent the energy of wavenumbers higher than the cutoff one. However, cGAN and unsupervised CycleGAN show great performance in recovering the energy of the DNS in the high-wavenumber regions, which is not included in the input data. Because we are reconstructing two-dimensional sliced snapshots of a three-dimensional simulation, there can be differences in the statistical accuracies between the velocity components. However, the present CycleGAN shows almost identical distributions for the transverse energy spectra of the horizontal velocities ($u$ and $v$) and the vertical velocity ($w$), and reproduces the isotropic characteristics well (figure not shown here).
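As a rough illustration of how such a one-dimensional spectrum can be estimated from a 2-D periodic field, the sketch below takes the FFT along $x$ of each row and averages over $y$; for periodic data this is equivalent to transforming the two-point correlation (Wiener–Khinchin relation). The normalization (the spectrum sums to the variance of the field), the one-sided folding and the function name `energy_spectrum_x` are assumptions made for this sketch and may differ from the convention used in the paper.

```python
import numpy as np

def energy_spectrum_x(field, length=2 * np.pi):
    """One-sided 1-D energy spectrum along x (axis 1), averaged over y (axis 0)."""
    n = field.shape[1]
    fluct = field - field.mean()
    coeff = np.fft.rfft(fluct, axis=1) / n
    energy = np.abs(coeff) ** 2
    energy[:, 1:] *= 2.0      # fold in the negative wavenumbers
    if n % 2 == 0:
        energy[:, -1] /= 2.0  # the Nyquist mode has no negative partner
    wavenumber = 2 * np.pi * np.fft.rfftfreq(n, d=length / n)
    return wavenumber, energy.mean(axis=0)

# Single-mode check: v = sin(3x) should put all of its energy at wavenumber 3.
x = np.linspace(0, 2 * np.pi, 128, endpoint=False)
v = np.tile(np.sin(3 * x), (128, 1))
k, E = energy_spectrum_x(v)
```

Applying the function to $v$ along $x$, as in the check above, gives one of the four contributions to the transverse spectrum; the $y$-directional spectra follow by transposing the field before the call.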

Figure 6. Probability density function of vorticity and transverse energy spectra for various resolution ratios, $r$. (a–c) Probability density functions of vorticity corresponding to $r=4$, 8 and 16, respectively. (d–f) Energy spectra for $r=4$, 8 and 16.

Furthermore, we assessed the generalization ability of the present model with respect to the Reynolds number. For this purpose, we tested the model using JHTDB data at a higher Taylor-scale Reynolds number, $Re_\lambda = 611$ (Yeung, Donzis & Sreenivasan 2012), than that used for training, $Re_\lambda = 418$. Here, the major assumption is that there exists a universal relation between low-resolution fields and the corresponding high-resolution fields non-dimensionalized by the Kolmogorov length scale $\eta$ and velocity scale $u_\eta$. Here $\eta$ for $Re_\lambda = 611$ is $0.00138$, approximately half that for $Re_\lambda = 418$. The grid interval at the higher Reynolds number, normalized by $\eta$, was set almost identical to the grid interval used for training. To this end, high-resolution data were sub-sampled from the simulation grids so that the normalized grid intervals at both Reynolds numbers were almost the same. The high-resolution grid size for the test is $2048\times 2048$. We considered the resolution ratio $r=8$ so that the dimension of the low-resolution data generated by applying the top-hat filter to the high-resolution data is $256\times 256$. The resulting reconstructed velocity and vorticity fields are provided in figure 7(a). Although we only considered a single Reynolds number for the training, the present model, CycleGAN, performs very well in the reconstruction at a higher Reynolds number, indicating its ability to extrapolate successfully. Similar to figures 4 and 5, where the same Reynolds number was used for both training and testing, pointwise differences from the reference DNS field are naturally observed because the low-resolution turbulence lacks the information to uniquely determine the high-resolution solution at $r=8$. However, the p.d.f. and the energy spectrum obtained by our model in figures 7(b) and 7(c) are almost perfectly consistent with those of DNS, indicating that the deep-learning model can be a universal mapping function between the filtered flow field and the recovered flow field. There is a slight deviation at very high wavenumbers, but this deviation is similar to the deviation present when the testing and training Reynolds numbers were the same (figure 6).
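The matching of grid intervals in Kolmogorov units can be checked with a short calculation. The values of $\eta$ and the training grid are those quoted above; the native grid of the higher-Reynolds-number dataset ($4096$ points per direction), the common $2{\rm \pi}$ box length and the sub-sampling stride of 2 are assumptions introduced for this sketch, chosen because they are consistent with the quoted $2048\times 2048$ test grid.

```python
import math

eta_train, n_train = 0.00280, 1024  # quoted in the text for Re_lambda = 418
eta_test = 0.00138                  # quoted in the text for Re_lambda = 611
n_test_native = 4096                # ASSUMPTION: native grid of the higher-Re data
box = 2 * math.pi                   # ASSUMPTION: same periodic box length for both
stride = 2                          # ASSUMPTION: sub-sampling stride

dx_train = box / n_train
dx_test = stride * box / n_test_native

# Grid interval in Kolmogorov units for training and testing.
ratio_train = dx_train / eta_train
ratio_test = dx_test / eta_test

n_high = n_test_native // stride    # high-resolution test grid per direction
n_low = n_high // 8                 # low-resolution grid for r = 8
```

Under these assumptions the normalized grid interval is close to 2.2 in both cases, so the network sees test fields at nearly the same resolution, in units of $\eta$, as during training.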

Figure 7. Test of CycleGAN for a higher $Re_\lambda = 611$ than the trained value. The network was trained at $Re_\lambda =418$. The resolution ratio $r$ for both training and testing was 8. Panel (a) shows the recovered velocity fields (top panels) from the filtered low-resolution field and the vorticity fields (bottom panels) calculated from the recovered velocity field. Panel (b) shows the p.d.f. of the vorticity. Panel (c) shows the transverse energy spectrum. The vertical dashed line denotes the cutoff wavenumber used for the low-resolution field.

Test results in this section clearly indicate that CycleGAN is an effective model for super-resolution reconstruction of turbulent flows when low- and high-resolution data are unpaired. The CycleGAN model can provide statistically accurate high-resolution fields for various resolution ratios. Reconstructed velocities are very similar to targets at all $r$. Although trained with unpaired data, CycleGAN performs nearly as well as cGAN, which shows the best performance among the supervised learning models. It appears that repetitive convolution operations and up- or down-sampling of turbulence fields in the generator and discriminator capture the essential characteristics of turbulence, which are otherwise difficult to describe.

3.2. Example 2: measured wall-bounded turbulence

To evaluate the performance of our model for anisotropic turbulence, in this section we attempt a high-resolution reconstruction of low-resolution data for wall-bounded flows. This time, the low-resolution data were extracted from high-resolution DNS data by pointwise measurement on a sparse grid instead of local averaging. This is similar to experimental situations in which PIV measurements have limited spatial resolution. We used JHTDB data collected through DNS of turbulent channel flow, which solves the incompressible Navier–Stokes equations. The flow was driven by the mean pressure gradient in the streamwise $(x)$ direction, and a no-slip condition was imposed on the top and bottom walls. Periodicity was imposed in the streamwise, $x$, and spanwise, $z$, directions, and a non-uniform grid was used in the wall-normal direction, $y$. Detailed numerical methods were provided in Graham et al. (2015). The friction Reynolds number, $Re_\tau =u_\tau \delta / \nu$, defined by the friction velocity $u_\tau$, channel half-width $\delta$ and the kinematic viscosity $\nu$, is 1000. Velocity and length were normalized by $u_\tau$ and $\delta$, respectively, and the superscript ($+$) denotes a quantity non-dimensionalized with $u_\tau$ and $\nu$. The domain length and grid resolution were $L_x \times L_y \times L_z = 8{\rm \pi} \delta \times 2 \delta \times 3{\rm \pi} \delta$ and $N_{x} \times N_{y} \times N_{z} = 2048\times 512\times 1536$, respectively. The simulation time step, ${\rm \Delta} t$, which was non-dimensionalized by $u_\tau$ and $\delta$, was $6.5 \times 10^{-5}$. The learning target was the streamwise velocity, $u$, the wall-normal velocity, $v$, and the spanwise velocity, $w$, in the $x$–$z$ plane at $y^+=15$ and $y^+=100$. Here $y^+=15$ is the near-wall location with maximum fluctuation intensity of $u$, and $y^+=100$ ($y / \delta =0.1$) is in the outer region. Each model was trained separately for each wall-normal location. Similar to what we did in § 3.1 for isotropic turbulence, we trained the model using all the velocity components ($u,v,w$) simultaneously, although only $u$ and $v$ are statistically correlated. For training and validation data, 100 fields separated by an interval of ${\rm \Delta} t = 3.25 \times 10^{-3}$ and 10 fields separated by ${\rm \Delta} t = 3.25 \times 10^{-2}$ were used, respectively. After training, we verified the trained model using 10 fields separated by an interval of ${\rm \Delta} t = 3.25 \times 10^{-2}$ as test data. These fields are separated in time from the training data by more than 10 times the integral time scale of the streamwise velocity. Low-resolution, partially measured data were extracted at eight-grid intervals in the streamwise and spanwise directions from the fully measured DNS data. Similar to the previous learning example in § 3.1, during training, input and target sizes were fixed at $16\times 16$ and $128\times 128$, respectively. They were sub-regions extracted from the training fields. Here, the streamwise input domain length was $128{\rm \Delta} x=1.57$, which was greater than the integral length scale of the two-point correlation of the streamwise velocity, 1.14.
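The pointwise sub-sampling and patch extraction described above can be sketched as follows. This is a minimal illustration: the random stand-in plane, the uniform random choice of patch origin and the function name `random_patch_pair` are assumptions, and the actual data pipeline of the study may differ.

```python
import numpy as np

def random_patch_pair(dns_plane, rng, target=128, stride=8):
    """Crop a target x target patch and sample it pointwise every `stride` points."""
    nz, nx = dns_plane.shape
    k0 = rng.integers(0, nz - target + 1)
    i0 = rng.integers(0, nx - target + 1)
    high = dns_plane[k0:k0 + target, i0:i0 + target]
    low = high[::stride, ::stride]  # pointwise measurement, no local averaging
    return low, high

rng = np.random.default_rng(0)
plane = rng.standard_normal((1536, 2048))  # stand-in for one x-z plane (N_z x N_x)
low, high = random_patch_pair(plane, rng)

# Streamwise patch length 128 * dx in units of delta, with dx = 8*pi / 2048.
patch_length = 128 * 8 * np.pi / 2048
```

The function returns a matched pair, as needed for the CNN and the cGAN; for the CycleGAN, the low- and high-resolution patches would instead be drawn from different locations or fields so that they are unpaired.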

In this example, because the low-resolution data are pointwise accurate, reconstruction amounts to restoring the data between the grid points at which low-resolution data are given. Therefore, a more stable model can be obtained by utilizing the known values of the flow field during reconstruction. To account for the known information, a new loss term (i.e. pixel loss) is added to the existing loss function (see (2.5)). The pixel-loss function used in the unsupervised learning model, CycleGAN, is expressed as

\begin{equation} \mathcal{L}_{pixel} = \lambda\mathbb{E}_{x\sim P_{X}}\left[\frac{1}{N_{p}}\sum_{i=1}^{N_p}(x^{LR}(p_i)-y^{DL}(p_i))^2\right], \end{equation}

where $y^{DL}$ is the reconstructed velocity field and $x^{LR}$ is the low-resolution one; $p_{i}$ is a measured position, $N_p$ is the number of measured points and $\lambda$ is a weight, which we fix to $10$. Although trial and error was required to select the optimal value of $\lambda$, learning with $\lambda =10$ was quite successful in all the cases considered in this paper. CycleGAN is trained to minimize $\mathcal {L}_{pixel}$ in addition to the existing loss. Table 2 shows the error for the test dataset with and without the pixel loss. When the pixel loss is used, the error at the positions where exact values are known becomes smaller. As a result, the error over the entire reconstructed field also becomes small. In a situation where only part of the field is measured, a simple pixel loss can therefore improve the reconstruction accuracy at all positions, not only at the measured ones. The point-by-point accuracy can be further improved through fine tuning of $\lambda$.
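As a minimal sketch of the pixel loss defined above, the expectation can be estimated by a batch mean and the measured positions $p_i$ taken as every eighth grid point. The batch layout, the function name and the averaging over velocity components are assumptions of this example; the equation itself does not specify how components are combined.

```python
import numpy as np


def pixel_loss(x_lr, y_dl, stride=8, lam=10.0):
    """Batch estimate of the pixel loss.

    x_lr: low-resolution input, shape (batch, n, n, c)
    y_dl: reconstructed field, shape (batch, n * stride, n * stride, c)
    """
    # Reconstructed values at the measured positions p_i.
    y_at_measured = y_dl[:, ::stride, ::stride, :]
    # Mean squared difference over measured points (and components, by assumption).
    per_sample = np.mean((x_lr - y_at_measured) ** 2, axis=(1, 2, 3))
    # Batch mean approximates the expectation; lam is the weight lambda.
    return lam * np.mean(per_sample)


rng = np.random.default_rng(0)
x_lr = rng.standard_normal((4, 16, 16, 3))
# Nearest-neighbour upsampling reproduces the measured values exactly.
y_exact = np.repeat(np.repeat(x_lr, 8, axis=1), 8, axis=2)
loss_exact = pixel_loss(x_lr, y_exact)
loss_offset = pixel_loss(x_lr, y_exact + 0.1)
```

Here `loss_exact` vanishes because the reconstruction matches the input at every measured point, while a uniform offset of 0.1 gives a loss of about $\lambda \times 0.1^2 = 0.1$.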

Table 2. Error of measured positions (i.e. pixel error) and error of entirety (i.e. entire error) for CycleGAN with and without pixel loss. The error is normalized by the standard deviation of the velocity of DNS.

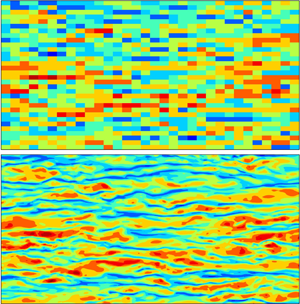

The absolute phase error of the Fourier coefficients in an instantaneous flow field reconstructed from test data using CycleGAN is given in figure 8. The phase error obtained without pixel loss at $y^+=15$, that with pixel loss at $y^+=15$, and that with pixel loss at $y^+=100$ are shown in figures 8(a), 8(b) and 8(c), respectively. The maximum wavenumbers of the low-resolution field, $\kappa _{x,cutoff}$ and $\kappa _{z,cutoff}$, are indicated by a white line. When pixel loss was not used, a large phase error and a phase shift occurred for structures of specific sizes. This happened because, when spatially homogeneous data are used for unsupervised learning, the discriminator cannot prevent a phase shift of the high-resolution data. On the other hand, when pixel loss is used for training, the phase of all velocity components ($u, v, w$) is accurate in the area satisfying $\kappa \leqslant \kappa _{cutoff}$. This means that the large-scale structures present in the low-resolution field were well captured, while the small-scale structures beyond the cutoff were reconstructed. We also noticed that the reconstructed flow field near the wall ($y^+=15$) had higher accuracy than that away from the wall ($y^+=100$) in the spanwise direction (as shown in figure 8b,c). This might be related to the fact that the energy of the spanwise small scales was larger in the flow field near the wall. Furthermore, as shown in figure 8(b), the streamwise velocity, $u$, at $y^+=15$ had a higher phase accuracy in the spanwise direction than the other velocity components, $v$ and $w$. The reason might be that the energy of the streamwise velocity at high spanwise wavenumbers was higher. Overall, the higher the root-mean-square (RMS) of the fluctuation, the higher the phase accuracy of the reconstructed flow field.
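The phase error discussed above can be computed along the following lines. This is a sketch under stated assumptions: the fields are taken to be periodic in both wall-parallel directions, and the phase difference is wrapped to $[0, {\rm \pi}]$, which the definition in figure 8 does not state explicitly. The function name and the single-mode test field are illustrative only.

```python
import numpy as np


def phase_error(field_rec, field_ref):
    """Absolute phase difference of the 2-D Fourier coefficients, in [0, pi]."""
    f_rec = np.fft.fft2(field_rec)
    f_ref = np.fft.fft2(field_ref)
    # The angle of f_rec * conj(f_ref) is the phase difference wrapped to (-pi, pi].
    return np.abs(np.angle(f_rec * np.conj(f_ref)))


n = 32
x = np.arange(n)
theta = 2.0 * np.pi * 3 * x / n            # streamwise wavenumber index 3
ref = np.cos(theta)[:, None] * np.ones((1, n))
rec = np.cos(theta - 0.5)[:, None] * np.ones((1, n))  # same mode, shifted by 0.5 rad
err = phase_error(rec, ref)
```

For this test field, `err[3, 0]` recovers the imposed shift of 0.5 rad. At wavenumbers carrying no energy the coefficients are numerical noise, so the phase error is only meaningful where the reference spectrum is non-negligible.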

Figure 8. Phase error defined by $|phase(\hat {u}_{i}^{CycleGAN})-phase(\hat {u}_{i}^{DNS})|$ between the Fourier coefficients of the DNS field and the generated one by CycleGAN. (a) CycleGAN without pixel loss at $y^+=15$, (b) CycleGAN with pixel loss at $y^+=15$, and (c) CycleGAN with pixel loss at $y^+=100$. The box with the white line denotes the range of low-resolution input fields, $|\kappa _x|\leqslant \kappa _{x,cutoff}$ and $|\kappa _z| \leqslant \kappa _{z,cutoff}$.

Figure 9 shows the velocity field ($u, v, w$) reconstructed by various deep-learning processes from partially measured test data at $y^+=15$. For CycleGAN, an unsupervised learning model, the network was trained using unpaired data with pixel loss, and the supervised learning models (i.e. CNN and cGAN) were trained using paired data with the loss function presented in § 2. Bicubic interpolation smoothed the low-resolution data and therefore could not capture the characteristics of the wall-normal velocity of the DNS (target) at all. The CNN yielded slightly better results, but it had limitations in generating a flow field that reflected the small-scale structures observed in the DNS field. On the other hand, cGAN demonstrated excellent capability to reconstruct the flow field, including the features of each velocity component. It accurately produced the wall-normal velocity, where small scales were especially prominent. CycleGAN showed results similar to those of cGAN, although unpaired data were used. CycleGAN reconstructed both the streak structures of the streamwise velocity and the small, strong structures of the wall-normal velocity, similar to the DNS field.

Figure 9. Reconstructed instantaneous velocity field from a partially measured field at $y^+=15$ obtained by various deep-learning models.

To better evaluate the performance in reconstructing the small-scale structures, we present the vorticity fields obtained from the reconstructed velocities in figure 10. Here, the performance difference between the CNN model and GAN-based models is clearly observed. In the field obtained from CNN, the vorticity magnitude was highly underestimated compared to the one from DNS because only the velocity pointwise error was targeted during the training. Furthermore, the small-scale structures were not well captured by the CNN. On the other hand, the GAN-based models, CycleGAN and cGAN, could reconstruct the vortical structures with characteristics similar to those from DNS. The small-scale structures in the fields by GAN-based models are slightly different from those from DNS. This is natural because the low-resolution information is not sufficient to determine the high-resolution information uniquely. It can hence be concluded that GAN-based models are more effective in representing the small-scale structures than the CNN model. Although user-defined loss related to the vorticity or spatial correlation in the turbulence was not directly considered during learning, the GAN models are able to pick up these turbulence characteristics very well.
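For illustration, a vorticity component can be evaluated from a reconstructed wall-parallel plane as sketched here. From a single $x$–$z$ plane only the wall-normal component, $\omega_y = \partial u/\partial z - \partial w/\partial x$, can be formed without wall-normal derivatives, so that component is used. The choice of component, the second-order finite differences and the function name are assumptions of this example and are not taken from the present study.

```python
import numpy as np


def wall_normal_vorticity(u, w, dx, dz):
    """omega_y = du/dz - dw/dx on an x-z plane; u and w have shape (nx, nz)."""
    du_dz = np.gradient(u, dz, axis=1)   # central differences in the interior
    dw_dx = np.gradient(w, dx, axis=0)
    return du_dz - dw_dx


n = 64
dx = dz = 2.0 * np.pi / n
z = np.arange(n) * dz
u = np.tile(np.sin(z), (n, 1))   # test field: u depends on z only
w = np.zeros((n, n))
omega_y = wall_normal_vorticity(u, w, dx, dz)
```

For this test field the exact result is $\omega_y = \cos z$, which the finite-difference estimate reproduces to within the truncation error away from the array edges.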

Figure 10. Vorticity field calculated from the reconstructed velocity fields at $y^+=15$. The velocity was obtained from a sparsely measured field using various deep-learning models.

The streamwise and spanwise energy spectra of each velocity component are shown in figures 11(a–c) and 11(d–f), respectively. Statistics are averaged over the test datasets. In the streamwise energy spectrum, bicubic interpolation and CNN could not reproduce the DNS statistics at high wavenumbers. On the other hand, cGAN accurately reproduced the DNS statistics at high wavenumbers. Despite using unpaired data, CycleGAN, an unsupervised learning model, showed results similar to those of cGAN. The spanwise energy spectrum showed results similar to the streamwise one. However, both the low-resolution data and bicubic interpolation had higher energies than the DNS at low wavenumbers, as shown in figure 11(e). These results are closely related to the structure size of the reconstructed velocity field. In figure 9, the field reconstructed through bicubic interpolation includes structures larger in the spanwise direction than those observed in the DNS flow field. Notably, an artificial large-scale structure can be generated by interpolation if the measurements are not sufficiently dense. The flow predicted by the CNN has lower energy overall than the DNS, and this is particularly prominent at relatively high wavenumbers. When the input (i.e. low-resolution field) and target (i.e. high-resolution field) are not uniquely connected, CNN tends to underestimate the energy. On the other hand, cGAN and CycleGAN can accurately describe the statistics of the DNS at both low and high wavenumbers. The reconstructed flow field and the energy spectra at $y^+=100$ show similar results (see appendix B).
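A one-dimensional energy spectrum of the kind compared above can be estimated as in the following sketch. The normalization (the spectrum sums to the mean square of the signal), the assumption of periodicity and the function name are choices made for this example and may differ from those used for figure 11.

```python
import numpy as np


def spectrum_1d(vel, spacing, axis):
    """One-sided 1-D energy spectrum along `axis`, averaged over the other axis.

    vel: fluctuation of one velocity component on an x-z plane, shape (nx, nz).
    Returns (kappa, energy) with sum(energy) equal to mean(vel**2).
    """
    n = vel.shape[axis]
    coeff = np.fft.rfft(vel, axis=axis) / n
    energy = np.moveaxis(np.abs(coeff) ** 2, axis, 0).copy()
    energy[1:] *= 2.0            # fold negative wavenumbers onto positive ones
    if n % 2 == 0:
        energy[-1] /= 2.0        # the Nyquist mode appears only once
    kappa = 2.0 * np.pi * np.fft.rfftfreq(n, d=spacing)
    return kappa, energy.mean(axis=1)


nx, nz = 64, 32
dx = 2.0 * np.pi / nx
x = np.arange(nx) * dx
u = (2.0 * np.cos(4.0 * x))[:, None] * np.ones((1, nz))  # single mode, kappa_x = 4
kappa_x, e_uu = spectrum_1d(u, dx, axis=0)
```

For the single-mode test field, all the energy appears at $\kappa_x = 4$ with value $A^2/2 = 2$ for amplitude $A = 2$. Passing `axis=1` with the spanwise spacing gives the spanwise spectrum in the same way.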

Figure 11. One-dimensional energy spectra of reconstructed flow field using deep-learning models at $y^+=15$. Streamwise energy spectra for (a) streamwise velocity, (b) wall-normal velocity and (c) spanwise velocity; spanwise energy spectra for (d) streamwise velocity, (e) wall-normal velocity and (f) spanwise velocity.