Introduction

Demand for virtual reality (VR) applications is increasing rapidly in fields such as entertainment, education, healthcare, and engineering, and there is a significant need to understand how people interact with the designed virtual world (Argelaguet et al., 2016; Yu et al., 2021; Wang et al., 2022). Designers can create different avatars and tasks in VR for specific virtual scenes. Avatars, or their parts (e.g., virtual hands), that are designed to represent users’ physical selves play a central role in self-presentation in virtual environments (VEs) (Argelaguet et al., 2016; Freeman and Maloney, 2021; Wang et al., 2022). Moreover, the characteristics of virtual avatars can affect users’ attitudes and behaviors when they take part in activities in VEs (Freeman and Maloney, 2021).

The virtual world offers immersive experiences in which users develop different perceptions of and feelings toward various avatars (Gonzalez-Franco and Peck, 2018; Yu et al., 2021). The sense of embodiment, one of the important user perceptions in VR, is regarded as the feeling of owning, controlling, and being inside a virtual body (Kilteni et al., 2012). These correspond to its three subcomponents, respectively: the sense of body ownership (the feeling of owning the virtual body), agency (the ability to control the virtual body's actions), and self-location (the perceived location of the virtual body relative to the physical self). Studies have found that VEs can induce the sense of embodiment with various avatar designs, including virtual bodies or hands, which has drawn the attention of researchers (Argelaguet et al., 2016; Zhang et al., 2020, 2022a, 2022b; Krogmeier and Mousas, 2021). However, few works have explored the effect of virtual hands on the sense of embodiment by studying its three subcomponents, and those studies evaluated embodiment with subjective measures only (Ogawa et al., 2019; Heinrich et al., 2020).

To further explore how virtual hand designs affect users’ sense of embodiment, it can be beneficial to integrate subjective and objective data to reach more accurate and reliable conclusions. Prior studies mainly used self-reports (questionnaires) to assess embodiment subjectively. Implicit measures originating from psychology can also be adopted for embodiment research, such as intentional binding for agency (Nataraj et al., 2020a, 2020b) and proprioceptive measurement for self-location (Ingram et al., 2019). In addition, compared with traditional research methods, biometric measures are valuable for helping researchers understand the user experience (Borgianni and Maccioni, 2020; Krogmeier and Mousas, 2021). Recent studies have suggested adding physiological detection to experiments as an objective measurement, and eye tracking is a promising objective method for investigating user experiences in VR (Lu et al., 2019; Krogmeier and Mousas, 2020, 2021; Zhang et al., 2022a), including the sense of embodiment (Krogmeier and Mousas, 2020, 2021; Zhang et al., 2022a). The degree of user attention can also be analyzed by classifying eye tracking data, which could help clarify the relationship between embodiment and user attention. The integration of self-report, implicit measures, and physiological detection offers new possibilities for exploring the influence of virtual hand designs on users’ embodiment in VEs.

This paper aims to investigate the sense of embodiment based on its three subcomponents: body ownership, agency, and self-location with various virtual hand designs. In view of insufficient evidence about the impact of hand design on embodiment in the literature, this study combines subjective ratings, implicit measures derived from psychology, and an objective method of eye tracking for systematic investigation. This study brings new insights into users’ embodied experience in VR and offers important evidence about embodiment including its three subcomponents and the link with user attention under different hand designs. Furthermore, this study provides practical design recommendations for virtual hand design in future VR applications.

Related work

Virtual hand design

The VR environment can deceive the user's brain, and the sense of embodiment can make users believe that the virtual bodies are their own bodies (Wolf et al., 2021). Virtual avatars or hands can be used to create a familiar connection between users’ physical bodies and the virtual world. Participants can interact with the virtual world by controlling the virtual hands (e.g., grabbing or moving) in first-person perspective. The users’ upper limbs interact more with the virtual world through motion tracking techniques (e.g., Leap Motion hand tracking or handheld controllers) (Argelaguet et al., 2016; Ogawa et al., 2019). In addition, virtual hands can be given various realism levels through the shape of the hand and the rendering style. It has been reported that participants react differently to different hand designs, and the representation of virtual hands can vary from highly abstract to highly realistic (Ogawa et al., 2019; Zhang et al., 2020, 2022a). Meanwhile, the phenomenon known as the Uncanny Valley refers to people's attitudes changing from positive to negative when they watch a robot, virtual avatar, or hand that closely resembles a real person (Hepperle et al., 2021).

Evidence from prior studies shows that virtual hand designs can influence users’ embodiment, but only limited research has evaluated embodiment through its three subcomponents (body ownership, agency, and self-location) simultaneously (Ogawa et al., 2019; Heinrich et al., 2020). One study indicated that hand realism (realistic, iconic, and abstract hands) affected perceived object sizes as the size of the virtual hand changed, and that the more realistic hand produced a stronger sense of embodiment (including the three subcomponents) (Ogawa et al., 2019). A more recent study investigated how four levels of realism in non-mirrored and mirrored hand representations (personalized, realistic, robot, and wood hands) altered perceived embodiment (Heinrich et al., 2020). In that experimental task, a higher sense of agency was found with the realistic hand, and the authors argued that self-location was of little importance to embodiment. These inconsistent conclusions about the three subcomponents were based on subjective ratings; thus, this study uses multiple measurement methods to help understand the influence of hand designs on embodiment.

Sense of embodiment

Embodiment, a critical perception mechanism in VEs, refers to participants’ feeling that virtual bodies (e.g., virtual avatars) or body parts (e.g., virtual hands or feet) are their own (Kilteni et al., 2012; Toet et al., 2020). When a virtual body or hand generates information congruent with the real one (including visual, tactile, motion, and proprioceptive information), participants can experience the illusory perception of embodiment (Ehrsson, 2012). The sense of embodiment has been defined as the sense of owning, controlling, and being inside a virtual body, and these three states correspond to the sense of body ownership, agency, and self-location, respectively (Kilteni et al., 2012).

The sense of body ownership refers to users’ self-attribution of a virtual body. The well-known rubber hand illusion experiments demonstrate that, through multi-sensory interaction, people can experience the illusion that “the fake rubber hand is their own hand” (Botvinick and Cohen, 1998). Researchers have used VR equipment to replicate and explore users’ responses to rubber hand experiments, in what is known as the virtual hand illusion experiment (Perez-Marcos et al., 2009). Notably, coherent and synchronized visual feedback of the virtual arm movement is sufficient to create the ownership illusion of the arm (Kokkinara and Slater, 2014). The illusory experience of body ownership can be evaluated by subjective and objective methods. The most common subjective method is self-report (questionnaire). Eye tracking, an objective physiological method, has also been used to explore the sense of body ownership (Krogmeier and Mousas, 2020, 2021). It has been shown that participants reported more body ownership when they looked at a virtual avatar sooner.

Agency refers to the experience of action and control over the virtual body during active movements. When users’ motion is mapped to the virtual body in real time or near real time, the sense of agency can be generated. Research has shown that the synchronicity of vision-related movements affects the development of agency (Wang et al., 2021). The sense of agency can be measured by subjective and objective methods. Self-report is a subjective way to evaluate the sense of agency and is the only method adopted in most works. Beyond self-report, intentional binding, an implicit measure of agency, has recently been used in several interaction tasks (Suzuki et al., 2019; Nataraj et al., 2020a; Van den Bussche et al., 2020; Nataraj and Sanford, 2021). The intentional binding paradigm is based on estimating the time interval between an action (e.g., a button press) and its sensory result (e.g., sound feedback) (Suzuki et al., 2019; Nataraj et al., 2020a, 2020b; Van den Bussche et al., 2020; Nataraj and Sanford, 2021). It evaluates agency implicitly by measuring time perception of the action effect: participants are asked to estimate the time interval between action and consequence. In addition, using VR-based eye tracking, one study has indicated a potential link between self-reported agency and eye tracking data under three virtual hands (Zhang et al., 2022a).

Self-location refers to the subjective feeling of “where I am in space”; more specifically, it is the spatial experience of being inside a virtual body (Kilteni et al., 2012; Toet et al., 2020). Visual perspective is essential for participants to experience self-location inside a virtual avatar, especially in the first-person perspective (Gorisse et al., 2017; Huang et al., 2017). Self-report is a common subjective method for assessing self-location, but objective methods appear limited in the literature. One objective solution derives from psychology: proprioceptive measurement of hand position, proposed in a non-VR setting (Ingram et al., 2019). The location value indicated how far the proprioceptive self-location was perceived toward the visual self-location from the actual hand position. Participants were required to use their left hand to identify the landmarks of their right hand on a board with position references, and the difference between perceived and actual hand positions was recorded to assess perceived self-location (Ingram et al., 2019). This proprioceptive position method inspired the evaluation of self-location in the present study. In addition, a recent work reported a weak negative correlation between eye tracking data and self-location, finding less self-location when participants watched their virtual avatar for more time (Krogmeier and Mousas, 2020).

Understanding user attention in the virtual world is worthwhile in the current study because it helps explain users’ embodied experience (including the sense of embodiment) more comprehensively, for example by exploring whether users pay more attention to the virtual avatar representation or to other objects while interacting with the virtual world. A few articles have speculated about the relationship between the sense of embodiment and user attention in VR, but supportive evidence is still lacking in the literature (Tieri et al., 2017; Cui and Mousas, 2021). Users’ attention can be analyzed from eye behavior data recorded in VEs through eye tracking (Batliner et al., 2020; Li et al., 2021; Mousas et al., 2021). In this study, large amounts of participants’ eye tracking data are collected and then classified using K-means clustering, which groups information in an unsupervised way. The K-means algorithm is widely used in data mining and, in artificial intelligence, its grouping effectively reduces large amounts of data into smaller sections (Huang et al., 2018). Previous work captured user attention by fixation spans, and clustering the fixation spans into groups with the K-means algorithm reflected different degrees of user attention (Dohan and Mu, 2019). However, very little is known about studying the sense of embodiment through user attention. Clustering the eye tracking data therefore offers this article a new way to understand and explore the sense of embodiment by analyzing user attention in VR.
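As a rough illustration of how fixation spans might be grouped into attention levels, the sketch below runs a plain one-dimensional K-means (Lloyd's algorithm) on fixation durations. The function name `kmeans_1d`, the choice of three clusters, the quantile-based initialization, and the sample durations are all assumptions made for illustration; they are not taken from the analysis pipeline of this study.

```python
def kmeans_1d(values, k, max_iter=100):
    """Cluster scalar values (e.g., fixation durations in ms) into k groups."""
    data = sorted(values)
    n = len(data)
    if not 1 <= k <= n:
        raise ValueError("k must be between 1 and the number of values")
    # Assumed initialization: centroids at evenly spaced quantiles of the data.
    centroids = [float(data[(2 * i + 1) * n // (2 * k)]) for i in range(k)]

    def assign(cents):
        # Put every value into the group of its nearest centroid.
        groups = [[] for _ in cents]
        for v in data:
            nearest = min(range(len(cents)), key=lambda j: abs(v - cents[j]))
            groups[nearest].append(v)
        return groups

    for _ in range(max_iter):
        groups = assign(centroids)
        # Move each centroid to the mean of its group (keep it if the group is empty).
        updated = [sum(g) / len(g) if g else c for g, c in zip(groups, centroids)]
        if updated == centroids:
            break
        centroids = updated
    return centroids, assign(centroids)


# Hypothetical fixation durations in milliseconds.
durations_ms = [100, 110, 120, 500, 520, 900, 950]
centroids, groups = kmeans_1d(durations_ms, k=3)
```

With these made-up values the three groups could be read as short, medium, and long fixations; how many clusters are appropriate for real data is a separate modeling decision.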

Methodology

An experiment was designed to systematically investigate the sense of embodiment with virtual hand designs through self-report, implicit, and objective measurements. The experiment involved five virtual hand designs (with realism levels from low to high) to induce participants’ sense of embodiment while they performed interactive tasks in VEs. A self-report was used to assess embodiment, and implicit methods were used to evaluate agency and self-location. Additionally, eye tracking data were used to explore the sense of embodiment.

Apparatus

The physical experiment equipment included the VR system (HTC Vive Pro Eye), a Windows-based computer (Intel(R) Core(TM) i7-9800X CPU), and the hand tracking module (Leap Motion). The virtual experimental environment was designed and run in the Unity 3D game platform (2019.4.16). Using Leap Motion instead of traditional VR controllers changed how participants interacted with VEs. The hand tracking module, which captures participants’ hands and fingers, was mounted on the front of the VR head-mounted display (HMD) (see Fig. 2). When participants wore the HMD, they could see a virtual lab room, including a wooden table and several buttons on the table. Once they started the experiment, their right hand was tracked, and the virtual scene presented one of the virtual hand models.

Participants

Sixty-two volunteers (34 males and 28 females; 22.74 ± 3.90 (SD) years old) participated in this study, and all had normal or corrected-to-normal vision. Most participants were young university students, and only three were above 30 years old. Only two participants were left-handed, and they reported that completing the tasks with their right hand did not affect their experimental experience. Forty-eight participants had experienced VR before, and 22 had used Leap Motion.

The university ethics committee approved this study, and all participants signed the informed consent form before the experiment. In addition, to ensure accurate eye tracking data, they were asked to complete the eye calibration first when they put on the HMD. All 62 participants performed the Whack-a-Mole task. To avoid fatigue caused by a long experiment, after completing the first step they were randomly assigned to one of two parts (intentional binding or proprioceptive measurement) in the second step. The intentional binding part involved 30 participants (13 males and 17 females; 20.25 ± 2.70 (SD) years old), and the proprioceptive measurement part involved 32 participants (21 males and 11 females; 25.47 ± 3.96 (SD) years old).

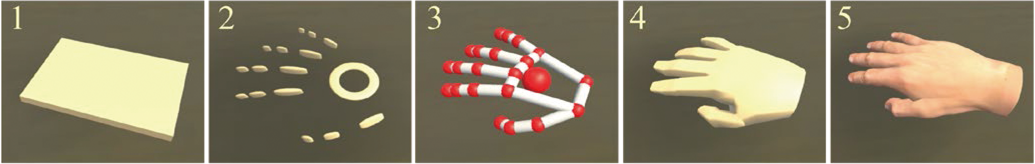

Virtual hand designs

Based on the virtual hands used in the literature and in VR applications, the full range of virtual hand realism was divided into five categories, from low to high realism. One typical case was selected from each category as the research object in this study. Five hand designs (block hand, minimal hand, capsule hand, low-poly hand, and human hand) spanning five levels of realism were designed (see Fig. 1). To keep hand design as the only manipulated variable, all participants used the same five virtual hand models, at the same size, throughout the experiment.

- Block hand (Low realism): The shape of this virtual hand looks like a simplification of the palm of an actual human hand. The palm model is represented by a rectangle with a light-yellow surface, without fingers or a wrist.

- Minimal hand (Low to medium realism): The minimal hand, with a light-yellow surface, is composed of a circle and several small elliptical blocks, representing the palm and knuckles, respectively. The knuckles are separate and look like a hand skeleton.

- Capsule hand (Medium realism): The capsule hand is composed of a combination of several spheres and cylinders. The red spheres connect the framework of the virtual hand, and the white cylinders represent the hand's knuckles.

- Low-poly hand (Medium to high realism): The number of fingers and hand joints of the low-poly hand is similar to that of a real hand, but the low-poly hand has an angular appearance. The surface of the hand is rendered with a smooth light-yellow material.

- Human hand (High realism): This hand model, including five fingers and fingernails, is highly similar to a real person's hand, and the skin texture of this virtual hand is neutral. There are fine hairs and blood vessels on the hand skin.

Fig. 1. Five hand designs in five types of realism levels (1: block hand; 2: minimal hand; 3: capsule hand; 4: low-poly hand; 5: human hand).

Procedures

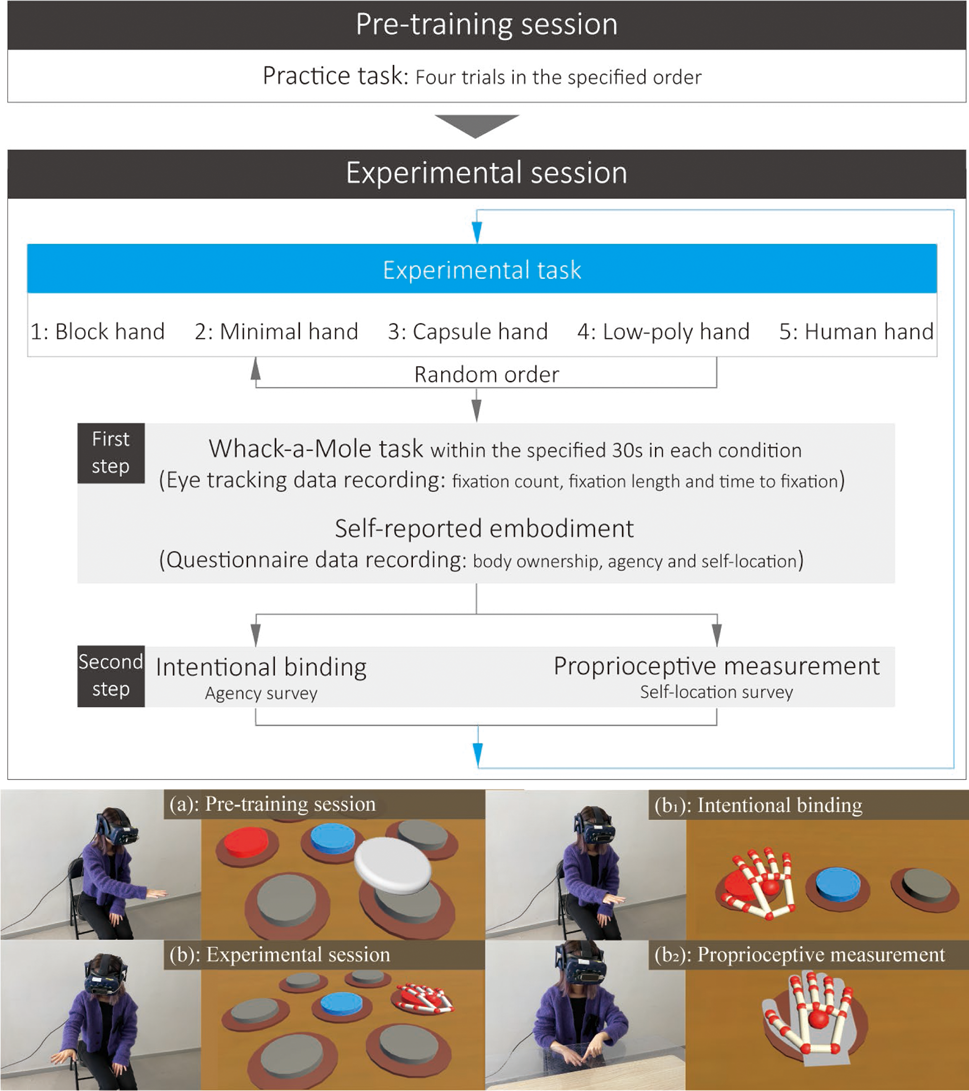

To collect objective and subjective data on embodiment, the experiment was set up to allow participants to interact actively with their virtual hands in the virtual scene. The task was chosen so that all types of virtual hands could perform the interaction smoothly and induce user perceptions over a continuous period. The experiment consists of two sessions: a pre-training session and an experimental session (see Fig. 2).

Fig. 2. Experimental procedures (Top: flow diagram of the experiment; Bottom: experimental scene of real and virtual environments).

The basic operation of the experimental tasks is the Whack-a-Mole task, in which buttons with a 10-cm diameter represent the mole holes. One of the buttons changed color at random to prompt the participant to press it. After the participant pressed it successfully, another randomly chosen button changed color, and this process continued for 30 s, which constituted one task. Participants needed to press virtual buttons with a virtual hand during the specified 30 s for each condition, and each participant used the five virtual hands in random order across five tasks.

Pre-training session

Pre-training: This session allows participants to get familiar with the virtual scene and the interaction in the task using an oblate hand, and takes approximately 2 min. To distinguish the oblate hand (in the pre-training session) from the five virtual hands (in the following experiment), it is designed as a simple gray round disc without a rendered surface (see Fig. 2a). Only the three buttons in the middle row can be used in this session. At the beginning of the session, participants were required to place their virtual hand in the initial position (the central button, shown in blue). Participants pressed the central button first to initiate the task; then another button turned red to prompt the participant to press it. The participant pressed the three buttons in the specified order (first the central button, then the left button, and last the right button) and repeated this sequence twice to become familiar with the virtual scene and interaction.

Experimental session

Experimental task: Participants were asked to perform the experimental task using five virtual hands randomly, and five test blocks correspond to different hand designs. In the first step, when participants were ready, each virtual hand was used to complete the Whack-a-Mole task based on accuracy. Then, participants were asked to accomplish the self-report to evaluate users’ embodiment. The second step was the survey of the sense of agency and self-location by implicit methods (intentional binding and proprioceptive measurement, respectively).

First step: Whack-a-Mole task and self-reported embodiment

When participants started to test in random order, they first observed the virtual hand and moved hand fingers to familiarize themselves with it, which took approximately 20 s. Then, as in the pre-training session, this task required participants to locate their virtual hand in the initial position (the central button with blue color) at the beginning of the task. After participants first pressed the central button, one of the six surrounding buttons would randomly change its color to red. Within the specified 30 s of one task, participants needed to press the red button and follow the color stimulus to press the corresponding button one after another (see Fig. 2b). All experimental virtual scenarios of each task were identical, except for using different hand models. The eye tracking data from participants can be collected during the 30 s task, including the total duration of fixations and the number of fixations for virtual hands. The order of test blocks was randomly set with 2–5 min break between every two blocks. The first step of the experimental task session (Whack-a-Mole task and self-report part) with five virtual hands took approximately 30 min to complete. In addition, participants were reminded to use their palms rather than fingers to perform the task, because the research paid more attention to participants’ experience of hand movement than hand speed in this study.

Eye tracking data recording in Whack-a-Mole task: In this experiment, eye tracking data in VR were collected using the HTC Vive Pro Eye, which combines VR and eye tracking technology. This device allows researchers to perform eye gaze behavior analysis and gaze position prediction under natural virtual viewing conditions with experimental control. The raw eye movement data from participants were collected through the eye tracking software (SRanipal SDK provided by HTC Corporation) implemented in the virtual scene. These data were collected as objective information for later analysis of user perceptions. For instance, once participants started a task, the number of fixations on the virtual hand or on other places during the specified time was recorded. The eye tracking data include eye behaviors across the five tasks, such as fixation count, fixation length, and time to fixation.
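The per-task fixation measures mentioned above (number of fixations and total fixation duration on the virtual hand versus other places) can be summarized with a few lines of code. The sketch below assumes fixations have already been detected and labeled with the object they landed on; the `(target, duration_ms)` record layout, the target labels, and the function name `summarize_fixations` are hypothetical and do not reflect the SRanipal SDK output format.

```python
from collections import defaultdict


def summarize_fixations(fixations):
    """Return {target: (fixation_count, total_duration_ms)} for one task."""
    counts = defaultdict(int)
    totals = defaultdict(int)
    for target, duration_ms in fixations:
        counts[target] += 1
        totals[target] += duration_ms
    return {target: (counts[target], totals[target]) for target in counts}


# Hypothetical labeled fixations from a single 30 s task.
sample = [("hand", 200), ("button", 300), ("hand", 150), ("table", 120)]
summary = summarize_fixations(sample)
hand_count, hand_total_ms = summary["hand"]
```

Keeping the count and the total duration per target makes it straightforward to compare attention to the hand across the five hand designs.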

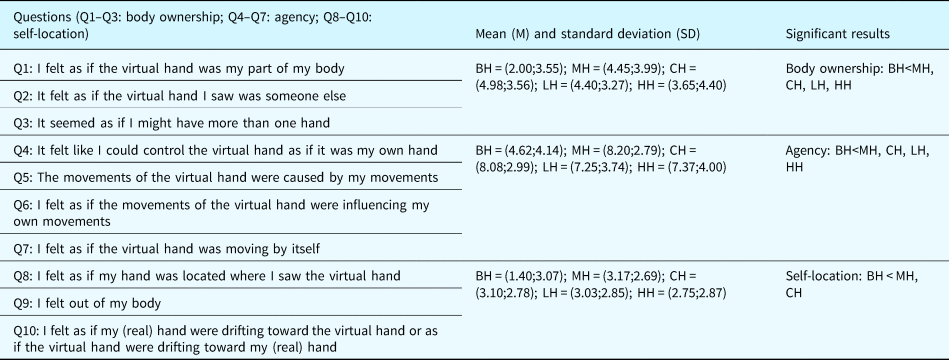

Questionnaire data recording in the self-reported embodiment: This part took place after each Whack-a-Mole task with the five hands, and the aim was to collect participants’ perception data through the embodiment questionnaire. Participants assessed the sense of embodiment with the standardized questionnaire (Kilteni et al., 2012; Gonzalez-Franco and Peck, 2018). It contains ten questions about the sense of embodiment (Q1–Q3: body ownership; Q4–Q7: agency; Q8–Q10: self-location), which can be seen in Table 1. The virtual questionnaire, with a 7-point Likert scale, popped up in front of participants, and they told the experimenter their answer to each of the 10 questions orally. Presenting the questionnaire virtually allows participants to answer questions directly in VEs, which avoids the influence on experimental data of repeatedly taking off and putting on the VR headset (Schwind et al., 2017).

Table 1. Questionnaires results

BH, block hand; MH, minimal hand; CH, capsule hand; LH, low-poly hand; HH, human hand.

Second step: intentional binding and proprioceptive measurement

The purposes of the intentional binding and proprioceptive measurement parts are to assess the sense of agency and self-location (two subcomponents of the embodiment) with different hand designs by implicit methods from psychology, respectively.

Intentional binding: This part measured the sense of agency implicitly under the five virtual hands. Participants’ perceptions of the time interval between the button press and the audio feedback were collected for each virtual hand. To reduce participants’ habitual actions carried over from the first step and to prevent them from confusing the sound and feedback in one trial, the number of virtual buttons in this virtual scene was reduced to three (see Fig. 2b1).

One trial in this part started with participants pressing the middle blue button (the initial position), and then pressing the left button when its color changed to red. The audio feedback was generated with a specific time interval after the left button pressing, and they needed to verbally tell the experimenter the estimated time interval to end one trial. Then, the subsequent trial followed the same procedure, but participants were required to press the right button. There were four pre-training trials and six training trials for each hand design, and participants would be given 2 min break after finishing one test block to reduce fatigue. There were five test blocks in total in this part (which took approximately 15 min) corresponding to the five hand designs, and the order of hands was randomly set.

The procedure of this part was composed of two steps: four pre-training trials and six training trials. Pre-training trials: The first step allowed participants to get familiar with a 0.1 s time interval over four trials (pressing the left button twice and the right button twice), which served as a reference for the interval estimation in the next step. Training trials: In the second step, participants were asked to estimate the randomly set interval between the button press and the beep feedback. Participants were informed in advance that the interval was set randomly in each trial, ranging from 0.1 to 1 s. However, the actual interval was randomly set to 0.1, 0.3, 0.5, 0.7, or 0.9 s.
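To make the trial structure concrete, the following sketch generates one test block of four pre-training trials at a fixed 0.1 s interval followed by six training trials whose interval is drawn from the five values listed above. It is only an illustration: the strict left/right alternation, independent uniform draws with replacement, and the names `build_block`, `PRETRAIN_INTERVAL_MS`, and `TRAINING_INTERVALS_MS` are assumptions, since the text does not specify how the randomization was implemented.

```python
import random

PRETRAIN_INTERVAL_MS = 100                       # fixed 0.1 s reference interval
TRAINING_INTERVALS_MS = (100, 300, 500, 700, 900)


def build_block(seed=None, n_pretrain=4, n_training=6):
    """Return a list of trial dicts for one hand design."""
    rng = random.Random(seed)
    trials = []
    for i in range(n_pretrain + n_training):
        is_pretrain = i < n_pretrain
        trials.append({
            "phase": "pre-training" if is_pretrain else "training",
            # Assumption: left and right buttons strictly alternate.
            "button": "left" if i % 2 == 0 else "right",
            # Assumption: training intervals are drawn independently, with replacement.
            "interval_ms": PRETRAIN_INTERVAL_MS if is_pretrain
            else rng.choice(TRAINING_INTERVALS_MS),
        })
    return trials


block = build_block(seed=1)
```

Passing a seed makes a block reproducible, which can help when checking the schedule against recorded estimates.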

Proprioceptive measurement: This part implicitly measured the sense of self-location with five virtual hands. In order to reduce the visual impact of other redundant buttons, the virtual scene reserved one button with a prompt pattern to hint hand position on the table in VR (see Fig. 2b2). Instead of pressing virtual buttons, participants were asked to place their hands in the fixed position in this part. A transparent acrylic board with coordinates was placed in front of participants to assist in recording the position of their right hand (see Fig. 3). Based on the thickness of each participant's palm, the height of the board was fine-tuned to roughly 1 cm higher from their right hand.

Fig. 3. Participants pointed the hand positions of the middle fingertip (a) and wrist (b) on the transparent acrylic board with coordinates.

Participants placed the right hand on the fixed hand pattern in VEs and used their left hand to identify the perceived location of the right virtual hand on the acrylic board by pointing to the positions of the middle fingertip and the wrist (see Fig. 3a,b, respectively). This process was repeated twice for each virtual hand. The experimenter recorded the actual and perceived positions of the virtual hand using the coordinates on the board, which were later used to analyze users’ sense of self-location implicitly. The five virtual hands appeared in random order, and the whole part took about 15 min.

Measurement and analysis

Self-report

The questionnaires, covering body ownership, agency, and self-location, were used to evaluate embodiment through its three subcomponents; the detailed questions can be seen in Table 1. The subjects were asked to rate each item from −3 (totally disagree) to 3 (totally agree) on a 7-point Likert scale. The method used in this study (adding and subtracting the Likert scale scores) is an accepted and common way to calculate self-reported embodiment (including its three subcomponents and total embodiment) in the literature (Gonzalez-Franco and Peck, 2018; Roth and Latoschik, 2020; Peck and Gonzalez-Franco, 2021). The values of the three subcomponents (body ownership, agency, and self-location) and total embodiment were computed as follows:

• Body ownership = Q1−Q2−Q3

• Agency = Q4 + Q5−Q6−Q7

• Self-location = Q8−Q9−Q10

• Total Embodiment = ((Body ownership/3) + (Agency/4) + (Self-location/3))/3
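The scoring rules above can be sketched in a few lines of Python. This is only an illustrative sketch: the function names `embodiment_scores` and `total_embodiment` and the example ratings are hypothetical and not taken from the study, and the input is assumed to map question numbers 1 to 10 to ratings between −3 and 3.

```python
def total_embodiment(body_ownership, agency, self_location):
    """Average the three subcomponents after dividing each by its number of items."""
    return (body_ownership / 3 + agency / 4 + self_location / 3) / 3


def embodiment_scores(q):
    """Compute subcomponent and total scores from ratings q[1]..q[10] in [-3, 3]."""
    for number in range(1, 11):
        if not -3 <= q[number] <= 3:
            raise ValueError(f"Q{number} rating {q[number]} is outside [-3, 3]")
    body_ownership = q[1] - q[2] - q[3]
    agency = q[4] + q[5] - q[6] - q[7]
    self_location = q[8] - q[9] - q[10]
    return {
        "body_ownership": body_ownership,
        "agency": agency,
        "self_location": self_location,
        "total": total_embodiment(body_ownership, agency, self_location),
    }


# Hypothetical ratings from one participant (not study data).
example = {1: 3, 2: -2, 3: -1, 4: 2, 5: 3, 6: -2, 7: -1, 8: 2, 9: -1, 10: 0}
scores = embodiment_scores(example)
print(scores)
```

Because the total is a linear combination of the subcomponents, applying `total_embodiment` to the mean subcomponent scores reported for the block hand in the Results (2.00, 4.62, and 1.40) gives approximately 0.76, which agrees with the reported mean total embodiment for that hand.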

Intentional binding

The implicit measure of agency compared the actual and estimated time intervals (Δt) between an action and its consequence via intentional binding; the sense of agency was defined as Δt (actual) − Δt (estimated). Participants judged the time interval between the action (pressing the virtual buttons) and the consequence (a sound beep delayed randomly by between 100 and 1000 ms). The values of Δt (actual) − Δt (estimated) with different virtual hand designs were analyzed. A relatively compressed estimated time interval indicated a positive measurement of agency: a more positive value is interpreted as greater agency because the actual time interval was underestimated.
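As a minimal sketch of this measure, the per-trial score and its average over trials could be computed as follows. The function names and the trial values are hypothetical, and averaging over trials is an assumption made for illustration; the text does not describe how trials were aggregated.

```python
def binding_score(actual_ms, estimated_ms):
    """Delta-t (actual) minus delta-t (estimated); positive means the interval was underestimated."""
    return actual_ms - estimated_ms


def mean_binding(trials):
    """Average binding score over (actual_ms, estimated_ms) pairs (aggregation is an assumption)."""
    scores = [binding_score(actual, estimated) for actual, estimated in trials]
    return sum(scores) / len(scores)


# Hypothetical trials: actual delays lie in the 100-1000 ms range used in the task.
trials = [(400, 300), (700, 650), (1000, 1100)]
print(mean_binding(trials))
```

In this made-up example the first two intervals are underestimated and the third is overestimated, so the mean score is positive but small.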

Proprioceptive measurement

The participants’ actual and perceived hand positions were recorded in the proprioceptive measurement part, which was an implicit measurement of self-location. The hand position is defined by the coordinates of two points (the participant's middle fingertip and wrist, illustrated in Fig. 3). As an example, the position data of the actual and perceived hand from one participant are shown in the left image of Figure 4, with A representing the middle fingertip and B representing the wrist. Participants were asked to identify the positions of the middle fingertip and wrist twice each, and the two readings were averaged to give the final coordinates. Based on the final coordinates of actual and perceived hand positions, the right image of Figure 4 shows an example of proprioceptive measurement data of the block hand from all participants.

Fig. 4. Left: Illustration of the positions of the actual hand (orange) and perceived hand (blue); Right: An example of proprioceptive measurement data of the block hand from all participants.

The related formulas for calculating the hand position location are shown below:

• Coordinate of actual hand's middle fingertip: $A(a_1, a_2)$

• Coordinate of actual hand's wrist: $B(b_1, b_2)$

• Coordinate of perceived hand's middle fingertip: $A'(a'_1, a'_2)$

• Coordinate of perceived hand's wrist: $B'(b'_1, b'_2)$

• Length of actual hand:

$\vert AB \vert = \sqrt{(a_1 - b_1)^2 + (a_2 - b_2)^2}$

• Length of perceived hand:

$\vert A'B' \vert = \sqrt{(a'_1 - b'_1)^2 + (a'_2 - b'_2)^2}$

• Error between actual and perceived hand position (middle fingertip):

$\vert AA' \vert = \sqrt{(a_1 - a'_1)^2 + (a_2 - a'_2)^2}$

• Error between actual and perceived hand position (wrist):

$\vert BB' \vert = \sqrt{(b_1 - b'_1)^2 + (b_2 - b'_2)^2}$

• Error between actual and perceived hand length: $\vert AB \vert - \vert A'B' \vert$
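The quantities listed above are plain Euclidean distances between board coordinates, so they can be sketched directly in Python. The function names `mean_point` and `hand_metrics` and all coordinates below are hypothetical; coordinates are assumed to be in centimetres, matching the units of the reported errors.

```python
import math


def mean_point(first, second):
    """Average two repeated readings of the same point into one final coordinate."""
    return tuple((u + v) / 2 for u, v in zip(first, second))


def hand_metrics(a, b, a_perceived, b_perceived):
    """Return hand lengths and errors for fingertip A, wrist B and their perceived positions."""
    length_actual = math.dist(a, b)                          # |AB|
    length_perceived = math.dist(a_perceived, b_perceived)   # |A'B'|
    return {
        "fingertip_error": math.dist(a, a_perceived),        # |AA'|
        "wrist_error": math.dist(b, b_perceived),            # |BB'|
        "length_error": length_actual - length_perceived,    # |AB| - |A'B'|
    }


# Hypothetical readings in cm (not study data).
a_perceived = mean_point((2, 13), (4, 15))   # two pointing attempts at the fingertip
metrics = hand_metrics((0, 18), (0, 0), a_perceived, (3, 2))
print(metrics)
```

With this definition, a positive length error means the perceived hand is shorter than the actual hand, and a negative value means it is longer.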

Eye tracking

Based on previous studies (Dohan and Mu, Reference Dohan and Mu2019; Krogmeier and Mousas, Reference Krogmeier and Mousas2020, Reference Krogmeier and Mousas2021), the data on eye gaze behaviors were collected and analyzed in terms of fixation count, fixation length, and time to fixation in this study. The fixation count is the total number of eye fixations recorded on the virtual hands, the fixation length refers to the amount of time each fixation lasted, and the time to fixation is the time elapsed before the first fixation on the virtual hands.

In addition, based on the basic eye tracking data (fixation count, fixation length, and time to fixation) of virtual hands collected in this study, the users’ attention to these five virtual hands can be analyzed by a clustering algorithm (Dohan and Mu, Reference Dohan and Mu2019). K-means, one of the more commonly used clustering techniques, was used in this study; it is a valuable technique for finding conceptually meaningful groups with a similar state. The algorithm takes the number of clusters as a parameter and creates center reference points in an unsupervised way.
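To make the clustering step concrete, the following is a minimal pure-Python sketch of K-means (Lloyd's algorithm) on two-dimensional gaze features. It is not the implementation used in the study: the function name `kmeans`, the deterministic initialization from the sorted data, the use of unscaled features, and the sample points are all assumptions made for illustration.

```python
import math


def kmeans(points, k=3, iterations=100):
    """Cluster 2-D points with Lloyd's algorithm; returns (centers, labels). Requires k >= 2."""
    ordered = sorted(points)
    # Assumed initialization: k points spread evenly through the sorted data.
    centers = [ordered[round(i * (len(ordered) - 1) / (k - 1))] for i in range(k)]
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: each point joins its nearest center.
        labels = [min(range(k), key=lambda c: math.dist(p, centers[c])) for p in points]
        # Update step: each center moves to the mean of its members.
        new_centers = []
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:
                new_centers.append(tuple(sum(axis) / len(members) for axis in zip(*members)))
            else:
                new_centers.append(centers[c])  # keep an empty cluster's center unchanged
        if new_centers == centers:
            break
        centers = new_centers
    return centers, labels


# Hypothetical (fixation count, fixation length) pairs, not study data.
gaze = [(20, 2.0), (22, 2.5), (24, 3.0),
        (40, 5.0), (42, 5.5), (44, 6.0),
        (60, 8.0), (62, 8.5), (64, 9.0)]
centers, labels = kmeans(gaze, k=3)
print(centers, labels)
```

With three clusters, the resulting centers play the role of the center reference points for low, middle, and high attention. In practice the two features may need to be standardized first, since fixation count and fixation length are on different scales.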

Results

All results obtained from the study are summarized in this section, and the analyses were performed using Excel and IBM SPSS statistics analysis software. The normality assumption of the subjective ratings was evaluated using the Kolmogorov–Smirnov test. The Kruskal–Wallis test, a non-parametric test, was used to compare samples from two or more groups of independent observations. Bonferroni's post-hoc test was conducted to check the significance for multiple comparisons, and the significance level α was set at 0.05.
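For readers who want to see how a Kruskal–Wallis statistic H and its P value relate, the sketch below computes H with the usual tie correction and converts it to a P value with the chi-square approximation. It is an illustration only, not the SPSS procedure used in the study: the function names are hypothetical, the sample groups are made up, and the closed-form tail probability is valid only for an even number of degrees of freedom (here 4, i.e., five hand designs minus one).

```python
import math


def average_ranks(values):
    """Return 1-based ranks (ties share their mean rank) and the size of each tie group."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks, tie_sizes, i = [0.0] * len(values), [], 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for m in range(i, j + 1):
            ranks[order[m]] = (i + j) / 2 + 1
        tie_sizes.append(j - i + 1)
        i = j + 1
    return ranks, tie_sizes


def kruskal_wallis_h(groups):
    """Tie-corrected Kruskal-Wallis H for a list of independent samples."""
    pooled = [x for group in groups for x in group]
    n = len(pooled)
    ranks, tie_sizes = average_ranks(pooled)
    total, start = 0.0, 0
    for group in groups:
        rank_sum = sum(ranks[start:start + len(group)])
        total += rank_sum ** 2 / len(group)
        start += len(group)
    h = 12 / (n * (n + 1)) * total - 3 * (n + 1)
    correction = 1 - sum(t ** 3 - t for t in tie_sizes) / (n ** 3 - n)
    if correction == 0:
        raise ValueError("all observations are identical")
    return h / correction


def chi2_sf_even_df(x, df):
    """Upper-tail chi-square probability; this closed form holds for even df only."""
    if df <= 0 or df % 2:
        raise ValueError("df must be a positive even integer")
    half = x / 2
    return math.exp(-half) * sum(half ** i / math.factorial(i) for i in range(df // 2))


h = kruskal_wallis_h([[1, 2, 3], [4, 5, 6], [7, 8, 9]])  # made-up samples
p = chi2_sf_even_df(12.268, 4)  # H reported for self-location, df = 5 - 1
print(h, round(p, 3))
```

Under this approximation, the self-location statistic H = 12.268 with 4 degrees of freedom corresponds to P ≈ 0.015, and the intentional binding statistic H = 1.392 corresponds to P ≈ 0.846, both in line with the values reported in the Results.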

Self-report

The questionnaires covering body ownership (Q1–Q3), agency (Q4–Q7), and self-location (Q8–Q10) were used in the study for assessing the embodiment through its three subcomponents (see Table 1). According to the results of the Kolmogorov–Smirnov test, non-parametric tests were used for the remaining analysis, and the Kruskal–Wallis test was applied to the data from each question. The results of each subcomponent of the embodiment are shown in Table 1, where M stands for mean and SD for standard deviation; M and SD are reported to assist in understanding the final results after statistical calculations of significant differences.

Body ownership

A significant effect of hand design on the body ownership-related questions (Q1–Q3) was found in this study (H = 24.534, P < 0.001). The minimal (M = 4.45; SD = 3.99) and capsule hands (M = 4.98; SD = 3.56) showed higher mean values than other virtual hands, whereas the human hand (M = 3.65; SD = 4.40) reported a lower mean value of body ownership. Post-hoc tests revealed significant differences between the block hand and the other hands (P < 0.05), and the block hand (M = 2.00; SD = 3.55) induced the lowest sense of body ownership.

Agency

Based on the results of agency questions (Q4–Q7), this work found a significant effect of hand designs on the sense of agency (H = 33.181, P < 0.001). The four other virtual hands showed similar scores, with higher mean values of agency than the block hand. In addition, the minimal (M = 8.20; SD = 2.79) and capsule hands (M = 8.08; SD = 2.99) showed the highest mean values of agency. In pairwise comparisons, the block hand (M = 4.62; SD = 4.14) was rated significantly lower than the minimal, capsule, low-poly, and human hands.

Self-location

There was a significant result (H = 12.268, P = 0.015) from self-location questions (Q8–Q10). The minimal hand (M = 3.17; SD = 2.69) and capsule hand (M = 3.10; SD = 2.78) revealed higher mean values of self-location. In addition, the human hand (M = 2.75; SD = 2.87) showed a lower mean value than the minimal hand and capsule hand. Pairwise comparisons showed significant differences between the block hand and two other hands (minimal and capsule). The block hand (M = 1.40; SD = 3.07) received the lowest rating.

Total embodiment

The total embodiment showed a significant result (H = 28.998, P < 0.001) in this research. Except for the block hand (M = 0.76; SD = 0.90), the minimal hand (M = 1.53; SD = 0.81), capsule hand (M = 1.57; SD = 0.80), low-poly hand (M = 1.43; SD = 0.84), and human hand (M = 1.33; SD = 0.96) revealed similar mean values. Pairwise comparisons of embodiment showed significant differences (P < 0.05), and the block hand had the lowest embodiment scores among these five hand designs.

Intentional binding

According to the Kolmogorov–Smirnov test, the data on the difference between the actual and estimated time intervals did not follow a normal distribution. For the results of the estimated time interval, the Kruskal–Wallis test found no significant differences (H = 1.392, P = 0.846) among these five virtual hands. The block hand (M = 65.00; SD = 245.29) revealed the lowest mean value among the five hands. The mean values of the other hands were as follows: minimal hand (M = 86.11; SD = 193.40), capsule hand (M = 70.56; SD = 227.62), low-poly hand (M = 88.33; SD = 209.09), and human hand (M = 95.00; SD = 223.05).

Proprioceptive measurement

The Kolmogorov–Smirnov test (P < 0.05) determined that the position data were not normally distributed, so non-parametric statistical tests were required. The errors between the actual and perceived hand positions (for both the middle fingertip and wrist) were obtained, and the actual and perceived hand lengths of participants were calculated. The Kruskal–Wallis test was used to analyze differences in participants’ proprioceptive perceptions among the five virtual hands. The final coordinates for these two positions (middle fingertip and wrist) with five hands were recorded in Figure 4.

1) Error between the actual and perceived hand positions (middle fingertip and wrist): The error was obtained by calculating the distance between the coordinates of the actual and perceived hand positions (middle fingertip and wrist).

Middle fingertip: The average errors between the actual and perceived hand middle fingertip were shown in the block hand (4.55 cm), minimal hand (4.08 cm), capsule hand (4.26 cm), low-poly hand (4.00 cm), and human hand (4.16 cm). The Kruskal–Wallis test showed no significant difference in the position of the middle fingertip (H = 2.398, P = 0.663) under five virtual hands.

Wrist: The average errors of the wrist between the actual and perceived hand were listed below: block hand (3.39 cm), minimal hand (3.74 cm), capsule hand (3.64 cm), low-poly hand (4.36 cm), and human hand (4.08 cm). There was no significant difference in the wrist position among these five hands by the Kruskal–Wallis test (H = 5.304, P = 0.257).

2) Error between the actual and perceived hand length: There are relative errors between the actual and perceived hand length among these five virtual hands, such as the block hand (3.03 cm), minimal hand (2.48 cm), capsule hand (–2.83 cm), low-poly hand (–1.88 cm), and human hand (–2.57 cm). There is a significant difference in relative error between the actual and perceived hand length (H = 75.541, P < 0.001) with different hands observed by the Kruskal–Wallis test. Based on the relative values of real and perceived hand lengths, it was noticed that participants underestimated the actual length of their hands when they perceived capsule hand, low-poly hand, and human hand.

Eye tracking

To determine whether various virtual hand designs affected eye behaviors (fixation count, fixation length, and time to fixation) in VR, the Kruskal–Wallis test was used to explore eye tracking data toward these five hands, and the post-hoc test was conducted to check the significance for pairwise comparisons.

1) Eye behaviors data: There were significant differences in fixation count (H = 56.866, P < 0.001) and fixation length (H = 88.317, P < 0.001) among the five virtual hands, as shown in Figure 5, while no significant difference was found in time to fixation (H = 1.104, P = 0.894). A post-hoc test with Bonferroni correction was used to compare all pairs of groups.

Fixation count: Pairwise comparisons of fixation count showed that participants who experienced the Whack-a-Mole task looked significantly fewer times at the minimal hand (M = 33.04; SD = 14.07) than at other hands (all P < 0.05). The human hand (M = 51.23; SD = 12.63; P < 0.001) and capsule hand (M = 50.64; SD = 11.67; P < 0.001), which had similar and higher mean values, differed significantly from the low-poly hand (M = 42.73; SD = 12.44). Meanwhile, the fixation count for the block hand (M = 43.13; SD = 12.75) was significantly lower than for the human hand and capsule hand.

In addition, the number of times participants looked at buttons and virtual environments was also recorded in this study. However, no significant differences in participants’ viewing of virtual buttons (H = 3.779, P = 0.437) and environments (H = 2.342, P = 0.673) were found among these five virtual hands.

Fixation length: Based on the post-hoc comparisons, participants looked for a significantly shorter time at the minimal hand (M = 2.85; SD = 1.71) than at other hands. The human hand, with the highest mean value (M = 7.69; SD = 3.48), differed significantly from the block hand (M = 4.80; SD = 2.23; P < 0.001) and low-poly hand (M = 5.39; SD = 2.51; P = 0.014). Moreover, the fixation length for the capsule hand (M = 6.93; SD = 3.00) was significantly higher than for the block hand and low-poly hand.

A significant difference was found in the amount of time participants spent watching the buttons (H = 6.859, P < 0.001), but not the virtual environment (H = 4.249, P = 0.373). Post-hoc comparisons showed that participants looked at the buttons significantly more when using the minimal hand (M = 15.29; SD = 4.75) than when using other hands.

Time to fixation: There were no significant effects on time to fixation for virtual hands, buttons, or environments. The low-poly hand (M = 15.01; SD = 4.10) revealed the lowest mean value and standard deviation among the five hands. The block hand (M = 15.70; SD = 5.53) and human hand (M = 15.37; SD = 5.29) had similar standard deviations. The capsule hand (M = 15.72; SD = 6.79) and minimal hand (M = 16.18; SD = 7.42), with similar mean values, had higher standard deviations than the other hands.

2) Correlations between eye behaviors data and self-reported embodiment: Because the data were non-parametric, the Spearman test was used to evaluate the relationship between self-report and eye tracking data for each of the five hand conditions (see Table 2). A Spearman correlation coefficient between –1 and 0 was considered negative and one between 0 and 1 positive, and its strength was defined as: weak ($0 \le \vert r_s \vert < 0.3$), moderate ($0.3 \le \vert r_s \vert < 0.6$), strong ($0.6 \le \vert r_s \vert < 1$), and perfect ($\vert r_s \vert = 1$). However, no significant relationship was found between the eye fixation data of virtual hands and the self-reported body ownership, agency, and self-location (all P > 0.05).
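The interpretation bands for the Spearman coefficient can be expressed as a small helper. This is a sketch only: the function name `correlation_strength` is hypothetical, and labelling a coefficient of exactly 0 as "zero" is an assumption, since the text does not say which direction it belongs to.

```python
def correlation_strength(r_s):
    """Classify a Spearman coefficient by direction and by the strength bands in the text."""
    if not -1 <= r_s <= 1:
        raise ValueError("a correlation coefficient must lie in [-1, 1]")
    magnitude = abs(r_s)
    if magnitude == 1:
        strength = "perfect"
    elif magnitude >= 0.6:
        strength = "strong"
    elif magnitude >= 0.3:
        strength = "moderate"
    else:
        strength = "weak"
    # A coefficient of exactly 0 has no direction (assumed label: "zero").
    direction = "negative" if r_s < 0 else "positive" if r_s > 0 else "zero"
    return direction, strength


print(correlation_strength(-0.25))
```

Note that the strength label describes only the size of the coefficient; whether a correlation counts as a finding still depends on its P value.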

3) Clustering eye behaviors data: According to the significant results about fixation count and fixation length, participants’ gaze data (fixation count and length) were separated into three degrees based on the distribution of the numerical values, such as low attention, middle attention, and high attention. The levels of gaze in three clusters of participants’ attention with five virtual hands are plotted in Figure 6, and the figure indicates different levels of attention across five hands tasks from all participants.

Fig. 5. The fixation count (left) and fixation length (right) for five virtual hand designs.

Fig. 6. Clustering results using K-means algorithm under five virtual hands.

Table 2. Correlations between eye fixations and self-report data

After removing outliers, 56 participants’ fixation count and fixation length data were collected. For each hand, all participants were grouped into three clusters including low attention (red cluster), middle attention (yellow cluster), and high attention (blue cluster), which can be seen in Table 3 and Figure 6. There were three center reference points of these three clusters in each hand condition, and the center reference points of participants’ eye tracking data across five hands were different. According to different locations of reference points, the distribution of three degrees of attention was also diverse in these five conditions.

Table 3. Distribution of participants with three degrees of attention

The results revealed that close to 30 participants used the block hand with middle attention, and about 10 participants with low attention. More than 40 participants showed low (24 participants) or middle attention (21 participants) to the minimal hand. Only 6 participants paid low attention to the capsule hand, and over 20 participants paid middle and high attention, respectively. The low-poly hand and human hand showed similar attention results, with just over 20 participants paying middle attention. Most participants paid middle and high attention to three hands (the block hand, low-poly hand, and human hand), but most participants used the minimal hand with low and middle attention. Fewer participants showed low attention and most showed middle attention when using the capsule hand.

Discussion

Theoretical implication

Self-reported embodiment

The questionnaire results of this study have proved that all these five virtual hand designs (block, minimal, capsule, low-poly, and human hand) in five realism levels could elicit the sense of embodiment (body ownership, agency, and self-location) in users. The findings also indicated that the low-realism hand design represented by a primitive geometry without the shape of fingers, the block hand in this study, induced the lowest sense of embodiment compared with other hand designs with higher realism, which corroborated the previous findings in the literature (Ogawa et al., Reference Ogawa, Narumi and Hirose2019; Heinrich et al., Reference Heinrich, Cook, Langlotz and Regenbrecht2020).

Previous studies discussed that strong body ownership over a virtual avatar in VR played a crucial role when attributing its actions to oneself (Freeman and Maloney, Reference Freeman and Maloney2021; Wolf et al., Reference Wolf, Merdan, Dollinger, Mal, Wienrich, Botsch and Latoschik2021). In this study, participants’ ratings from questionnaire results revealed significantly lower body ownership with the block hand. Earlier articles that explored the influence of different appearances on body ownership also reported similar conclusions (Argelaguet et al., Reference Argelaguet, Hoyet, Trico and Lécuyer2016; Zhang et al., Reference Zhang, Huang, Zhao, Yang, Liang, Han and Sun2020). The low-realism hand rated less body ownership than hands with other realism levels. A possible explanation is that it is difficult for users to believe that less realistic representations can replace their actual hands. For example, the block hand lacking fingers did not change significantly when participants’ fingers moved in VEs. There was no significant difference between the human hand and the other three (minimal, capsule, and low-poly) hands, which was contrary to the high realism levels of hand expected in previous research to induce a stronger sense of body ownership (Schwind et al., Reference Schwind, Knierim, Tasci, Franczak, Haas and Henze2017; Ogawa et al., Reference Ogawa, Narumi and Hirose2019). Due to the Uncanny Valley effect, the previous article explained that several people could feel scared when they used the realistic hand (Schwind et al., Reference Schwind, Knierim, Tasci, Franczak, Haas and Henze2017; Hepperle et al., Reference Hepperle, Purps, Deuchler and Woelfel2021). Based on participants’ comments after the experiment, some reported higher expectations of the high-realism hand at first, but they finally felt more disappointed with it because of the unnatural experience during the experiment.

Interestingly, there were controversial results in the literature about whether the more realistic hand brought a higher sense of agency (Ogawa et al., Reference Ogawa, Narumi and Hirose2019; Heinrich et al., Reference Heinrich, Cook, Langlotz and Regenbrecht2020; Zhang et al., Reference Zhang, Huang, Zhao, Yang, Liang, Han and Sun2020). The reasons for these contradictory results may be related to the different interaction methods in VR, such as using traditional VR controllers (Zhang et al., Reference Zhang, Huang, Zhao, Yang, Liang, Han and Sun2020) or hand tracking models (Ogawa et al., Reference Ogawa, Narumi and Hirose2019; Heinrich et al., Reference Heinrich, Cook, Langlotz and Regenbrecht2020). This study showed significant differences in the agency from self-report results with Leap motion (hand tracking model). The minimal, capsule, low-poly, and human hands were significantly rated higher than the block hand. The result was in keeping with a previous observational study, which found that embodiment scores in the low-realism hand were significantly lower than in other realism conditions (Ogawa et al., Reference Ogawa, Narumi and Hirose2019). This result may be explained by the fact that virtual hand designs that own fingers can enhance peoples’ controlling experience by providing real-time visual feedback of fine movements of the hand when the hand tracking device is applied. It is concluded that the agency can be generated when participants’ hands move synchronized with virtual hands, and these hand designs can influence the sense of agency.

One focus of this research is exploring the self-location component of embodiment, because it is not clear how virtual hand representations affect self-location in VEs (Ogawa et al., Reference Ogawa, Narumi and Hirose2019; Heinrich et al., Reference Heinrich, Cook, Langlotz and Regenbrecht2020). One study reported that hand appearances affect self-body perception in the embodiment questionnaire: the low-realism hand received a lower embodiment score, including self-location (Ogawa et al., Reference Ogawa, Narumi and Hirose2019). In addition, another study argued that the sense of self-location contributed less to embodiment than the other two subcomponents (Heinrich et al., Reference Heinrich, Cook, Langlotz and Regenbrecht2020). In this study, the self-location value indicated a significant effect. Consistent with the literature (Ogawa et al., Reference Ogawa, Narumi and Hirose2019), this research found from the questionnaire results that the block hand was rated significantly lower in self-location than the minimal and capsule hands. A possible explanation is that the low-realism hand without fingers could affect the feeling of hand position; several participants indicated that they judged their hand position by the position of the tip of the middle finger rather than the wrist.

Implicit embodiment

No significant difference in intentional binding was found among the five virtual hand designs in this study, even though there was a significant difference in self-reported results. Participants felt the difference in time interval when they completed the experiment, but the difference in estimating the time interval with different virtual hands was not noticeable. In fact, the relationship between the self-reported and implicit (intentional binding) measures of agency has been debated in the literature (Dewey and Knoblich, Reference Dewey and Knoblich2014; Bergström et al., Reference Bergström, Knibbe, Pohl and Hornbæk2022). Research has shown that there are dissociations between self-reported and implicit agency judgments (Dewey and Knoblich, Reference Dewey and Knoblich2014), while a recent study suggested that the correlation may be moderated by performance (Bergström et al., Reference Bergström, Knibbe, Pohl and Hornbæk2022). The finding from this study also accords with the earlier observation (Dewey and Knoblich, Reference Dewey and Knoblich2014), which showed no relationship between these two methods. It is possible that participants draw on different information sources when answering the questionnaire and when perceiving time intervals. A study reported that the self-attributions of self-reported and intentional binding relied on overlapping cues (Dewey and Knoblich, Reference Dewey and Knoblich2014). As a self-reported measure, participants needed to report whether they had experienced the sense of agency after the experimental task, relying on their own recollections to make judgments, while implicit measures focused on participants’ current estimates of the time interval.

The implicit result of self-location showed no significant effect on the error between the positions of actual and perceived hands among the five virtual hands, although the self-reported results did. Based on a significant result for relative errors between real and perceived hand lengths, participants underestimated the actual length of their hands with the capsule hand, low-poly hand, and human hand. Several participants reported after the proprioceptive measurement that when they saw the block hand lacking fingers, they relied more on their perceptual information than on virtual visual information to estimate hand positions, especially for the middle fingertip. In addition, according to the proprioceptive measurement data, there was a deviation between participants’ perceived and actual hand positions, even though there was no significant difference among these five hands. Participants perceived the position of the middle fingertip to the right side as closer to their body than the actual position, which was similar to the result of a non-VR study (Ingram et al., Reference Ingram, Butler, Gandevia and Walsh2019). It is possible that the sense of self-location relies on a combination of additional cues, including informative vision and the perceived position of the virtual hand.

Eye tracking and embodiment

As mentioned in previous articles, eye tracking is a new method to help explore the effect of virtual hands on the sense of embodiment and understand how participants pay attention to different virtual hands in VR. The utility and versatility of biometric tools and measures, such as eye tracking, have been noted in design fields. There is no need to rely on subjective data alone, and these biometric measurements can objectively investigate more content and increase meaning (Borgianni and Maccioni, Reference Borgianni and Maccioni2020).

Previous literature has reported significant correlations between eye tracking data and the three components of embodiment: body ownership and self-location (Krogmeier and Mousas, Reference Krogmeier and Mousas2020, Reference Krogmeier and Mousas2021), and agency (Zhang et al., Reference Zhang, Huang, Liao and Yang2022a). However, this work did not find a connection between self-reported embodiment and eye tracking data. A previous article suggested the potential importance of time to fixation in eliciting the sense of body ownership: participants would report more body ownership when they looked sooner at a virtual avatar during the virtual experience. As mentioned in the literature review, a weak negative relationship between body ownership and time to fixation has been reported (Krogmeier and Mousas, Reference Krogmeier and Mousas2020). For example, it has been demonstrated that participants watched the virtual zombie avatar significantly sooner than the mannequin, and more body ownership was reported with the zombie (Krogmeier and Mousas, Reference Krogmeier and Mousas2020). A previous study has also found a weak negative correlation between self-reported self-location and self-fixation count (Krogmeier and Mousas, Reference Krogmeier and Mousas2020). For instance, participants who looked at their self-avatar more times reported less self-location (Krogmeier and Mousas, Reference Krogmeier and Mousas2020). A potential link between the agency and eye tracking data under three virtual hands was found in an article, in which the fewer times participants watched a hand, the lower the sense of agency (Zhang et al., Reference Zhang, Huang, Liao and Yang2022a). Nevertheless, this study's result differed from previous studies, and no significant relationship between self-reported embodiment (body ownership, agency, and self-location) and eye tracking data (fixation count, fixation length, and time to fixation) was found under these hand designs.

It has been reported that users’ attention might affect participants’ perception of virtual avatars, such as the sense of embodiment, although there is not enough evidence yet (Cui and Mousas, Reference Cui and Mousas2021). Its results revealed that on-site participants paid more attention to hand appearance than remote participants, which may be related to their high degrees of attention. In order to continue to explore the relationship between the embodiment and participants’ attention, eye tracking data was recorded, and cluster analysis by K-means was conducted to classify user attention under different virtual hands.

According to the results on eye behaviors, there were significant effects on fixation count and fixation length with five hand designs, but not on time to fixation. Participants looked at the minimal hand significantly fewer times and for a shorter time than at other hands, and looked more at virtual buttons and environments. A possible interpretation is that the skeleton of the minimal hand is smaller than other hands. When participants could see virtual buttons under the virtual hand more clearly, they may have paid more attention to the task or environment than to the virtual hand itself. A study also reported that participants would focus on the task rather than the tool in VEs when they embodied a tool (Alzayat et al., Reference Alzayat, Hancock and Nacenta2019). Interestingly, although the questionnaire result for body ownership showed a lower value for the human hand, most participants with the human hand showed middle and high attention in the clustering results. A possible explanation is that participants may pay attention to the virtual human hand when its interaction is not perfectly matched with their own hand. As mentioned in the previous article, participants may focus on performing the task rather than on different hand designs if they interact more smoothly in VEs. Moreover, complex hand designs or users’ familiarity may affect participants’ attention to some extent, which could be considered.

Summarized embodiment

Significant differences in the sense of embodiment with various hand designs were found in questionnaire results, but not in implicit measures. A reasonable explanation is that these two measurements rely on different information sources from participants. In addition, several participants commented that the questionnaire seemed to have similar questions when they answered them, especially in the sense of body ownership and agency. Previous works also proposed that the inter-relations between the three subcomponents probably influenced the sense of embodiment (Kilteni et al., Reference Kilteni, Groten and Slater2012; Fribourg et al., Reference Fribourg, Argelaguet, Lécuyer and Hoyet2020), even though there is no definitive conclusion yet.

The questionnaire result on body ownership showed a significant difference, but the correlation between body ownership and eye tracking data reported in previous literature (Krogmeier and Mousas, Reference Krogmeier and Mousas2020) was not reproduced. It is possible that a few participants with VR experience knew in advance that the virtual hands were not their real hands, which influenced their perceptions of embodiment, especially the sense of body ownership. Although this study did not compare the perceptions of participants with different prior VR experiences, this could be considered in future work. In addition, several participants had prior experience with the hand tracking model (Leap motion) and paid more attention to the synchronization of virtual hand movements with their own than to hand representations. Concerning eye tracking data, a previous study found that the sense of body ownership was related to how quickly participants viewed the virtual avatar. However, the speed at which they viewed the different virtual hands was similar in this study.

There was a significant effect on self-reported agency, but this study did not find differences in binding for the different time intervals among the five hand designs. General conclusions about self-reported agency in the literature are contradictory, but a significant result was obtained in this study. Compared with a previous study (Zhang et al., Reference Zhang, Huang, Zhao, Yang, Liang, Han and Sun2020), the different interaction modes may be a factor affecting agency. The implicit method may be a new way to assess agency, but it remains controversial in the research community (Bergström et al., Reference Bergström, Knibbe, Pohl and Hornbæk2022). The self-reported and other measures of agency (implicit and objective) were not significantly correlated with each other in this study, so the findings do not fully support a link between self-reported agency and the intentional binding and eye tracking data.
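As an illustration of how the agreement between a self-reported score and an implicit or objective measure can be checked, the sketch below computes a Spearman rank correlation in plain Python. This passage does not state which correlation coefficient was used, so the choice of Spearman is an assumption, and the function names are hypothetical.

```python
def average_ranks(values):
    """Assign 1-based ranks, giving tied values the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to the end of the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        rank = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = rank
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sd_x = sum((a - mean_x) ** 2 for a in x) ** 0.5
    sd_y = sum((b - mean_y) ** 2 for b in y) ** 0.5
    if sd_x == 0 or sd_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / (sd_x * sd_y)


def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the average ranks."""
    return pearson(average_ranks(x), average_ranks(y))
```

A coefficient near zero between, for example, per-participant questionnaire scores and binding estimates would correspond to the absence of a link described above. A rank-based coefficient is a common choice for ordinal questionnaire data, but a significance test would still be needed before drawing conclusions.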

The questionnaire results showed a significant effect on self-location, but there was no correlation between the proprioceptive measurement and the self-reported result. Previous literature reported that the contribution of the sense of self-location was relatively small (Heinrich et al., Reference Heinrich, Cook, Langlotz and Regenbrecht2020), but in this study the implicit measurement revealed deviations between the perceived and actual positions with all virtual hands. Although there was no significant effect among the five hands, the application of the implicit method in this study could serve as a reference for further research on the sense of self-location in VR. In this study, there was no relationship between self-reported self-location and eye tracking data, whereas a previous study reported a correlation between self-location and time to fixation (Krogmeier and Mousas, Reference Krogmeier and Mousas2020). Therefore, the relationship between self-location and eye tracking remains unclear.
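The deviation between perceived and actual positions can be summarized as a signed error and an absolute error. The sketch below assumes one perceived and one actual position per trial along a single axis, in centimeters; the function name, the units, and the sign convention are assumptions made for illustration, not the procedure used in this study.

```python
from statistics import mean


def position_deviation(perceived_cm, actual_cm):
    """Return (mean signed deviation, mean absolute deviation) in cm.

    Signed deviation is perceived minus actual, so its direction along the
    assumed axis is preserved; the absolute deviation ignores direction.
    """
    signed = [p - a for p, a in zip(perceived_cm, actual_cm)]
    return mean(signed), mean(abs(d) for d in signed)
```

Reporting both values is useful because opposite-direction errors can cancel in the signed mean while still producing a nonzero absolute deviation.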

A surprising result about perceived hand length was found in this study. Participants underestimated their real hand length when using virtual hands: they perceived their hands in VEs to be shorter than their actual length. Similarly, an article from psychology reported underestimation of finger length, although no explanation was put forward (Ingram et al., Reference Ingram, Butler, Gandevia and Walsh2019). In our study, users’ eye tracking data were collected and analyzed to help explore their embodied experience in VEs. Physiological data, such as eye tracking (Zhang et al., Reference Zhang, Huang, Liao and Yang2022a) and electroencephalography (Alchalabi et al., Reference Alchalabi, Faubert and Labbe2019), are becoming promising means of user experience evaluation for researchers and designers, along with the development of artificial intelligence for physiological data processing (Batliner et al., Reference Batliner, Hess, Ehrlich-Adam, Lohmeyer and Meboldt2020; Wan et al., Reference Wan, Yang, Huang, Zeng and Liu2021; Yu et al., Reference Yu, Yang and Huang2022). Using eye tracking and other physiological data to infer user reactions and perceptions toward different virtual avatar designs could be an exciting direction for future work. In the current study, only a neutral virtual hand representation was used for the realistic hand design, without reflecting any gender or personalization. Distinguishing different genders of virtual hands or designing personalized virtual hands for VR applications may lead to a deeper investigation of users’ embodied cognition in the future. Furthermore, it has been suggested that implementing cognitive artificial intelligence in VR systems can make them capable of analyzing and making decisions like human users (Zhao et al., Reference Zhao, Li and Xu2022), which can help bring cognitive capability to systems for better interaction between people and VR.

Design consideration

The current study found that participants experienced the feeling of owning, controlling, and being inside virtual hands with different hand representations, and that they experienced these virtual hands as their own hands to some extent while pressing the virtual buttons. Participants rated embodiment lower with the block hand. No significant differences were found for the other four hands, but the virtual human hand, which carried the highest expectations, received a lower rating on body ownership. These findings about the impact of hand representation on the sense of embodiment in VR might contribute to virtual avatar design in future VR applications in the design industry.

Design virtual hands that can clearly show finger movements to increase users’ embodiment. When participants used hand (including finger) tracking technology to interact with the virtual world, they reported the lowest embodiment with the block hand in this study. Participants expected to use virtual hands with fingers, which could present the detailed movement of each finger, and they could feel bored when completing virtual tasks with a fingerless hand. Therefore, when users are expected to interact through finger tracking, it is recommended to design virtual hands that clearly show finger movements to increase embodiment in VEs.

Virtual hand designs with hollowed representations can be a good solution when the virtual scene beneath the hand is important during the interaction. Several participants who had used Leap Motion before reported that they felt more comfortable using the capsule hand than the other hands for interaction in VR, which may have affected the subjective results to some extent. In addition, participants commented after the experiment that they could see more of the virtual space with the minimal and capsule hands because of their hollowed representations. This hollowed appearance gives these two hands a unique advantage: it allows users to better understand what is happening beneath the virtual hand and eventually leads to a better feeling of control during the interaction. When the virtual scene beneath the virtual hand is important for manipulation in VR applications, the minimal and capsule hands could be good choices for designers and developers, resulting in a better control experience for users.

A realistic hand with a high realism level should be used with particular care to avoid a negative influence on user experience. The human hand (with a high realism level), which was similar to users’ own hands, came with the highest expectations, and participants were more likely to carefully compare the virtual hand with their own hand. A sense of loss could arise if they felt that the human hand did not match their own hands perfectly. Meanwhile, fear of a realistic hand could arise due to the Uncanny Valley effect. Additionally, most female participants had a negative attitude toward the human hand when its size and appearance differed from their own. The findings from this study suggest that realistic hands need to be applied in VR applications with caution. Because such a hand looks like the real hand and leads to high expectations, users will feel a sense of loss if it does not meet those expectations after they compare it with their real hands. Applying the human hand in VR applications requires considering many aspects, such as size, gender, and personalization, and avoiding the negative effect of the Uncanny Valley.

Conclusion

This research investigated the impact of five virtual hand designs on users’ embodiment in VR by systematically integrating subjective and objective measurements. This study has offered new insights into the subcomponents of embodiment, that is, the sense of body ownership, agency, and self-location. Besides self-report measures, this study evaluated agency and self-location through implicit methods derived from psychology. In addition, eye tracking data were used as an objective method to help explore the connection between the virtual hand designs and the sense of embodiment. Our findings indicated that the hand with a low realism level (block hand) led to the lowest self-reported scores on the three subcomponents of embodiment, while implicit methods showed no significant differences. We also found that participants using the human hand showed middle and high levels of attention. The theoretical implications of the results on embodiment and its three subcomponents were discussed, and practical implications were provided for the design of virtual hands. This study contributes to further understanding of users’ embodiment with various virtual hand designs in VR and offers practical design considerations for virtual hand design in VR applications.

Financial support

This work has been approved by the University Ethics Committee in XJTLU and is supported by the Key Program Special Fund in XJTLU (KSF-E-34), the Research Development Fund of XJTLU (RDF-18-02-30) and the Natural Science Foundation of the Jiangsu Higher Education Institutions of China (20KJB520034).

Conflict of interests statement

The authors do not have any competing interests to report.

Data availability statement

The datasets generated during the current study are available from the corresponding author upon reasonable request.

Jingjing Zhang is a PhD student at the University of Liverpool, Liverpool, UK. Her research focuses on human–computer interaction and embodied user experience in virtual reality. She received her BEng degree in Industrial Design from Xi'an Jiaotong-Liverpool University and the University of Liverpool in 2019.

Mengjie Huang is an Assistant Professor in the Design School at Xi'an Jiaotong-Liverpool University, China. She received the BEng degree from Sichuan University and the PhD degree from the National University of Singapore. Her research interests lie in human–computer interaction design, with special focuses on human factors, user experience, and brain decoding. Her recent research projects relate to virtual/augmented reality and brain–computer interface.

Rui Yang is an Associate Professor in the School of Advanced Technology at Xi'an Jiaotong-Liverpool University, China. He received the BEng degree in Computer Engineering and the PhD degree in Electrical and Computer Engineering from the National University of Singapore. His research interests include machine learning-based data analysis and applications. He is an active reviewer for international journals and conferences, and is currently serving as an Associate Editor for Neurocomputing.

Yiqi Wang received an MSc in Information and Computing Science from University College London, UK, in 2022. She received her BSc from Xi'an Jiaotong-Liverpool University in 2021, and her research interests include human–computer interaction and user experience.

Xiaohang Tang is an undergraduate student at the University of Liverpool, Liverpool, UK. He is interested in human–computer interaction and virtual reality.

Ji Han is a Senior Lecturer (Associate Professor) in Design and Innovation at the Department of Innovation, Technology, and Entrepreneurship at the University of Exeter. His research addresses various topics relating to design and creativity and places a strong emphasis on exploring new design approaches and developing advanced design support tools. His general interests include design creativity, data-driven design, AI in design, and virtual reality.

Hai-Ning Liang is a Professor in the Department of Computing at Xi'an Jiaotong-Liverpool University, China. He obtained his PhD in Computer Science from the University of Western Ontario, Canada. His research interests fall within human–computer interaction, focusing on developing novel interactive techniques and applications for virtual/augmented reality, gaming, visualization, and learning systems.