1 Introduction

1.1 The $\kappa $-color BBS

The box-ball systems (BBS) are integrable cellular automata in 1+1 dimension whose long-time behavior is characterized by soliton solutions. The $\kappa $-color BBS is a cellular automaton on the half-integer lattice $\mathbb {N}$, which we think of as an array of boxes, each of which can fit at most one ball of any of the $\kappa $ colors. At each discrete time $t\ge 0$, the system configuration is given by a coloring $\xi ^{(t)}:\mathbb {N}\rightarrow \mathbb {Z}_{\kappa +1}:=\mathbb {Z}/(\kappa +1) \mathbb {Z}=\{0,1,\cdots , \kappa \}$ with finite support; that is, $\xi ^{(t)}_{x}=0$ for all but finitely many sites x. When $\xi _{x}^{(t)}=i$, we say the site x is empty at time t if $i=0$ and occupied with a ball of color i at time t if $1\le i \le \kappa $. To define the time evolution rule, for each $1\le a \le \kappa $, let $K_{a}$ be the operator on the subset of $(\mathbb {Z}_{\kappa +1})^{\mathbb {N}}$ consisting of all $(\kappa +1)$-colorings on $\mathbb {N}$ with finite support, defined as follows:

-

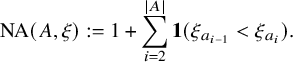

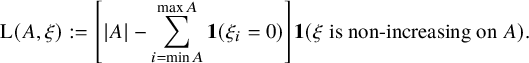

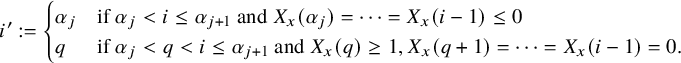

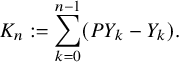

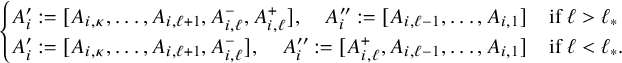

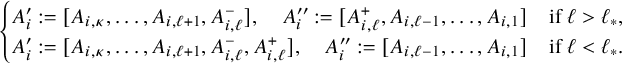

1. (i) Label the balls of color a from left as

$a_{1},a_{2},\cdots ,a_{m}$

.

$a_{1},a_{2},\cdots ,a_{m}$

. -

2. (ii) Starting from

$k=1$

to m, successively move ball

$k=1$

to m, successively move ball

$a_{k}$

to the leftmost empty site to its right.

$a_{k}$

to the leftmost empty site to its right.

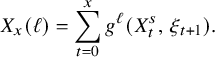

Then the time evolution

![]() $(X_{t})_{t\ge 0}$

of the basic

$(X_{t})_{t\ge 0}$

of the basic

![]() $\kappa $

-color BBS is given by

$\kappa $

-color BBS is given by

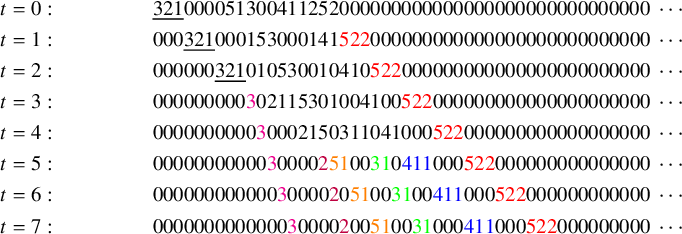

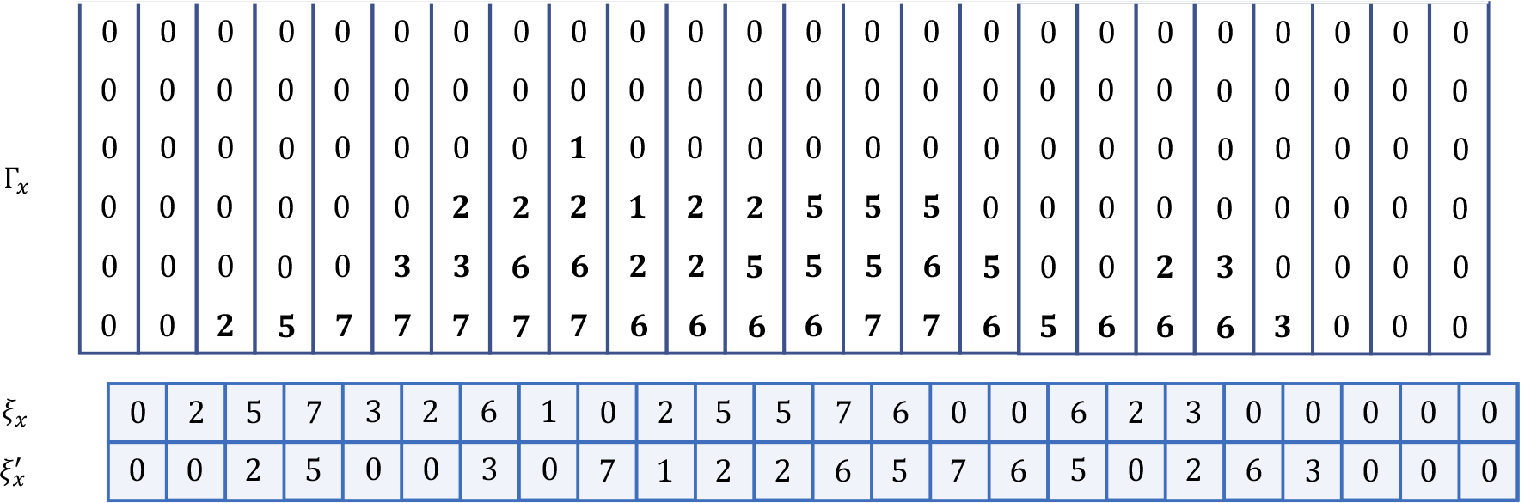

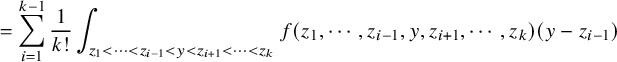

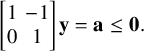

A typical 5-color BBS trajectory is shown below.

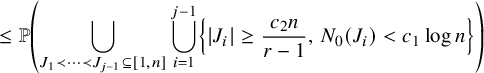

$$ \begin{align*} t=0: && \underline{3 2 1} 0 0 0 0 5 1 3 0 0 4 1 1 2 5 2 0 0 00000000 0000 000 0000 000 00 0000\ \cdots\hphantom{.} \\t=1: && 0 0 0 \underline{3 2 1} 0 0 0 1 5 3 0 0 0 1 4 1 {\color{red}5 2 2} 0000 000 0000 000 00000 0000 0000\ \cdots\hphantom{.} \\t=2: && 0 0 0 0 0 0 \underline{3 2 1} 0 1 0 5 3 0 0 1 0 4 1 0 {\color{red}5 2 2} 0000 000 0000 0000 0000 00000\ \cdots\hphantom{.} \\t=3: && 0 0 0 0 0 0 0 0 0 {\color{magenta}3} 0 2 1 1 5 3 0 1 0 0 4 1 0 0 {\color{red}5 2 2} 0000 000 0000 0000 000000\ \cdots\hphantom{.} \\t=4: && 0 0 0 0 0 0 0 0 0 0 {\color{magenta}3} 0 0 0 2 1 5 0 3 1 1 0 4 1 0 0 0 {\color{red}5 2 2} 0000 000 000000 0 0000\ \cdots\hphantom{.} \\t=5: &&\quad 0 0 0 0 0 0 0 0 0 0 0 {\color{magenta}3} 0 0 0 0 {\color{purple}2} {\color{orange}5 1} 0 0 {\color{green}3 1} 0 {\color{blue}4 1 1} 0 0 0 {\color{red}5 2 2} 0000 0000 000000 0\ \cdots\hphantom{.} \\t = 6: && 0 0 0 0 0 0 0 0 0 0 0 0 {\color{magenta}3} 0 0 0 0 {\color{purple}2} 0 {\color{orange}5 1} 0 0 {\color{green}3 1} 0 0 {\color{blue}4 1 1} 0 0 0 {\color{red}5 2 2} 0 0 0 0 0 0 0 0 0 000\ \cdots\hphantom{.} \\t = 7: && 0 0 0 0 0 0 0 0 0 0 0 0 0 {\color{magenta}3} 0 0 0 0 {\color{purple}2} 0 0 {\color{orange}5 1} 0 0 {\color{green}3 1} 0 0 0 {\color{blue}4 1 1} 0 0 0 {\color{red}5 2 2} 000000000\ \cdots\hphantom{.} \end{align*} $$
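The update rule can be checked against the first rows of this trajectory with a short simulation. The following is a minimal sketch, not the authors' code: the function names `apply_K`, `bbs_step` and `strip_trailing_zeros` are hypothetical, sites are stored in a finite Python list that is extended with empty boxes as needed, and the operators are assumed to be applied in the order $K_{\kappa }$ first and $K_{1}$ last, which is the order that reproduces the rows $t=1$ and $t=2$ above.

```python
from typing import List


def apply_K(config: List[int], a: int) -> List[int]:
    """Apply K_a: move every ball of color a, from left to right,
    to the leftmost empty site to its right."""
    cfg = list(config)
    # Step (i): record the positions of the color-a balls before any move.
    positions = [x for x, color in enumerate(cfg) if color == a]
    # Step (ii): move the balls one by one, leftmost first.
    for x in positions:
        cfg[x] = 0
        y = x + 1
        while y < len(cfg) and cfg[y] != 0:
            y += 1
        if y == len(cfg):
            cfg.append(0)  # extend the half-line with one empty box
        cfg[y] = a
    return cfg


def bbs_step(config: List[int], kappa: int) -> List[int]:
    """One time step; assumed order: K_kappa is applied first, K_1 last."""
    cfg = list(config)
    for a in range(kappa, 0, -1):
        cfg = apply_K(cfg, a)
    return cfg


def strip_trailing_zeros(config: List[int]) -> List[int]:
    """Drop empty boxes at the right end, for easier comparison."""
    cfg = list(config)
    while cfg and cfg[-1] == 0:
        cfg.pop()
    return cfg


# Initial configuration (row t = 0 of the example, trailing zeros omitted).
xi0 = [3, 2, 1, 0, 0, 0, 0, 5, 1, 3, 0, 0, 4, 1, 1, 2, 5, 2]
xi1 = bbs_step(xi0, kappa=5)
xi2 = bbs_step(xi1, kappa=5)
print(strip_trailing_zeros(xi1))
print(strip_trailing_zeros(xi2))
```

With this input, the two printed lists agree with the rows $t=1$ and $t=2$ of the trajectory, and the number of balls of each color is unchanged by a step.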


The grounding observation in the

![]() $\kappa $

-color BBS with finitely many balls of positive colors is that the system eventually decomposes into solitons, which are sequences of consecutive balls of positive and nonincreasing colors, whose length and content are preserved by the BBS dynamics in all future steps. For instance, all of the nonincreasing consecutive sequences of balls in

$\kappa $

-color BBS with finitely many balls of positive colors is that the system eventually decomposes into solitons, which are sequences of consecutive balls of positive and nonincreasing colors, whose length and content are preserved by the BBS dynamics in all future steps. For instance, all of the nonincreasing consecutive sequences of balls in

![]() $\xi ^{(6)}$

in the example (specifically,

$\xi ^{(6)}$

in the example (specifically,

![]() ${\color {magenta}3}, {\color {purple}2}, {\color {orange}51}, {\color {green}31}, {\color {blue}411}, {\color {red}522}$

) above are solitons, and they are preserved in

${\color {magenta}3}, {\color {purple}2}, {\color {orange}51}, {\color {green}31}, {\color {blue}411}, {\color {red}522}$

) above are solitons, and they are preserved in

![]() $\xi ^{(7)}$

up to their location and will be so in all future configurations. Note that a soliton of length k travels to the right with speed k. Therefore, the lengths of solitons in a soliton decomposition must be nondecreasing from left to right. In the early dynamics, longer solitons can collide into shorter solitons (e.g.,

$\xi ^{(7)}$

up to their location and will be so in all future configurations. Note that a soliton of length k travels to the right with speed k. Therefore, the lengths of solitons in a soliton decomposition must be nondecreasing from left to right. In the early dynamics, longer solitons can collide into shorter solitons (e.g.,

![]() $\underline {321}$

during

$\underline {321}$

during

![]() $t=0,1,2$

) and undergo a nonlinear interaction.

$t=0,1,2$

) and undergo a nonlinear interaction.

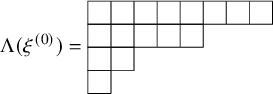

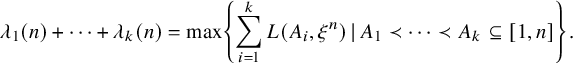

The soliton decomposition of the BBS trajectory initialized at

![]() $\xi ^{(0)}$

can be encoded in a Young diagram

$\xi ^{(0)}$

can be encoded in a Young diagram

![]() $\Lambda =\Lambda (\xi ^{(0)})$

having

$\Lambda =\Lambda (\xi ^{(0)})$

having

![]() $j^{\text {th}}$

column equal in length to the

$j^{\text {th}}$

column equal in length to the

![]() $j^{\text {th}}$

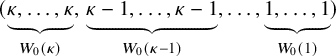

-longest soliton. For instance, the Young diagram corresponding to the soliton decomposition of the instance of the 5-color BBS given before is

$j^{\text {th}}$

-longest soliton. For instance, the Young diagram corresponding to the soliton decomposition of the instance of the 5-color BBS given before is

Note that the ith row of the Young diagram

![]() $\Lambda (\xi ^{(0)})$

is precisely the number of solitons of length at least i.

$\Lambda (\xi ^{(0)})$

is precisely the number of solitons of length at least i.

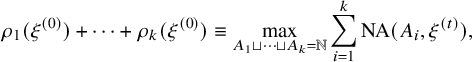

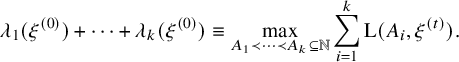

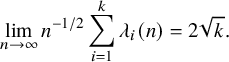
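The encoding of a soliton decomposition as a Young diagram can be illustrated with a small sketch. The helper names `soliton_lengths` and `young_diagram` are hypothetical, and the run-splitting rule below is only meaningful for a configuration that has already fully decomposed into well-separated solitons, such as $\xi ^{(7)}$ in the example; it is not a general soliton-detection algorithm.

```python
from typing import List, Tuple


def soliton_lengths(config: List[int]) -> List[int]:
    """Lengths of the maximal runs of consecutive balls with positive,
    nonincreasing colors, listed from left to right."""
    lengths = []
    run = 0   # length of the run currently being read
    prev = 0  # color at the previous site
    for color in config:
        if color > 0 and run > 0 and color <= prev:
            run += 1  # the current run continues
        else:
            if run > 0:
                lengths.append(run)  # the current run ends here
            run = 1 if color > 0 else 0
        prev = color
    if run > 0:
        lengths.append(run)
    return lengths


def young_diagram(lengths: List[int]) -> Tuple[List[int], List[int]]:
    """Column lengths (soliton lengths, longest first) and row lengths
    (row i counts the solitons of length at least i)."""
    columns = sorted(lengths, reverse=True)
    tallest = columns[0] if columns else 0
    rows = [sum(1 for c in columns if c >= i) for i in range(1, tallest + 1)]
    return columns, rows


# Configuration at t = 7 in the example (trailing zeros omitted).
xi7 = ([0] * 13 + [3] + [0] * 4 + [2] + [0] * 2 + [5, 1] + [0] * 2
       + [3, 1] + [0] * 3 + [4, 1, 1] + [0] * 3 + [5, 2, 2])
columns, rows = young_diagram(soliton_lengths(xi7))
print(columns)  # [3, 3, 2, 2, 1, 1]
print(rows)     # [6, 4, 2]
```

The row lengths sum to 12, the total number of balls, as they must for any Young diagram built this way.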

1.2 Overview of main results

We consider the $\kappa $-color BBS initialized by a random BBS configuration of system size n and analyze the limiting shape of the random Young diagrams as n tends to infinity. We consider two models, which we call the ‘permutation model’ and the ‘independence model’. For both models, we denote the kth row and column lengths of the Young diagram encoding the soliton decomposition by $\rho _{k}(n)$ and $\lambda _{k}(n)$, respectively.

In the permutation model, the BBS is initialized by a uniformly chosen random permutation

![]() $\Sigma ^{n}$

of colors

$\Sigma ^{n}$

of colors

![]() $\{1,2,\cdots , n\}$

. A classical way of associating a Young diagram to a permutation is via the Robinson-Schensted correspondence (see [Reference SaganSag01, Ch. 3.1]). A famous result of Baik, Deift and Johansson [Reference Baik, Deift and JohanssonBDJ99] tells us that the row and column lengths of the random Young diagram constructed from

$\{1,2,\cdots , n\}$

. A classical way of associating a Young diagram to a permutation is via the Robinson-Schensted correspondence (see [Reference SaganSag01, Ch. 3.1]). A famous result of Baik, Deift and Johansson [Reference Baik, Deift and JohanssonBDJ99] tells us that the row and column lengths of the random Young diagram constructed from

![]() $\Sigma ^{n}$

via the RS correspondence scale as

$\Sigma ^{n}$

via the RS correspondence scale as

![]() $\sqrt {n}$

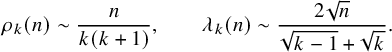

. In Theorem 2.1, we show that for the random Young diagram constructed via BBS, the columns scale as

$\sqrt {n}$

. In Theorem 2.1, we show that for the random Young diagram constructed via BBS, the columns scale as

![]() $\sqrt {n}$

but the rows scale as n. Namely,

$\sqrt {n}$

but the rows scale as n. Namely,

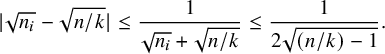

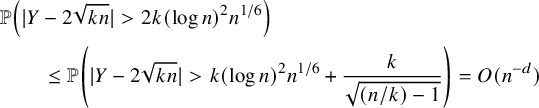

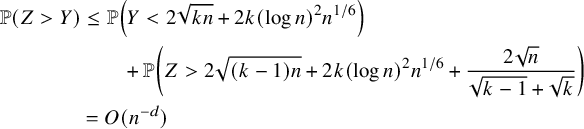

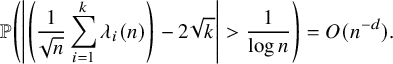

$$ \begin{align} \rho_{k}(n) \sim \frac{n}{k(k+1)}, \qquad \lambda_{k}(n) \sim \frac{2\sqrt{n}}{\sqrt{k-1}+\sqrt{k}}. \end{align} $$

$$ \begin{align} \rho_{k}(n) \sim \frac{n}{k(k+1)}, \qquad \lambda_{k}(n) \sim \frac{2\sqrt{n}}{\sqrt{k-1}+\sqrt{k}}. \end{align} $$

While the row lengths in RS-constructed Young diagram are related to the longest increasing subsequences, we show that the row lengths in the BBS-constructed Young diagram are related to the number of ascents (Lemma 3.5). This will show that the majority of solitons have a length of order

![]() $O(1)$

. Hence, the row and column scalings in (3) are consistent.

$O(1)$

. Hence, the row and column scalings in (3) are consistent.

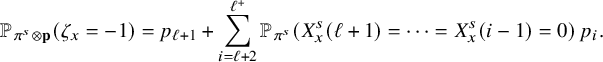

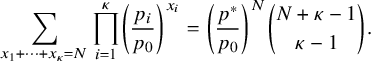
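As a heuristic sanity check on (3), and not as part of any proof, one can compare the leading-order terms with elementary identities. Summing the leading-order row lengths telescopes to n, the total number of balls in the permutation model; the column scaling can be rewritten as a difference of square roots; and the difference of consecutive row scalings gives a leading-order count of solitons of length exactly k:

$$ \begin{align*} \sum_{k\ge 1} \frac{n}{k(k+1)} &= n\sum_{k\ge 1}\left(\frac{1}{k}-\frac{1}{k+1}\right) = n, \\ \frac{2\sqrt{n}}{\sqrt{k-1}+\sqrt{k}} &= 2\sqrt{n}\,\bigl(\sqrt{k}-\sqrt{k-1}\bigr), \\ \frac{n}{k(k+1)}-\frac{n}{(k+1)(k+2)} &= \frac{2n}{k(k+1)(k+2)}. \end{align*} $$

Taking $k=1$ in the last line suggests that, to leading order, about $n/3$ of the roughly $n/2$ solitons have length 1, in line with the statement that most solitons have length of order $O(1)$.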

In the independence model, which we denote

![]() $\xi ^{n,\mathbf {p}}$

, the colors of the sites in the interval

$\xi ^{n,\mathbf {p}}$

, the colors of the sites in the interval

![]() $[1,n]$

are independently drawn from a fixed distribution

$[1,n]$

are independently drawn from a fixed distribution

![]() $\mathbf {p}=(p_{0},p_{1},\cdots ,p_{\kappa })$

on

$\mathbf {p}=(p_{0},p_{1},\cdots ,p_{\kappa })$

on

![]() $\mathbb {Z}_{\kappa +1}$

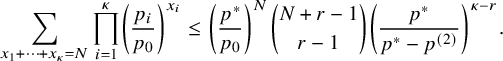

. Recently, Lyu and Kuniba obtained sharp asymptotics for the row lengths as well as their large deviations principle in this independence model [Reference Kuniba and LyuKL20]. In Theorems 2.4–2.7, we establish a sharp scaling limit for the column lengths for the independence model, as summarized in Table 1 and as bullet points below. Let

$\mathbb {Z}_{\kappa +1}$

. Recently, Lyu and Kuniba obtained sharp asymptotics for the row lengths as well as their large deviations principle in this independence model [Reference Kuniba and LyuKL20]. In Theorems 2.4–2.7, we establish a sharp scaling limit for the column lengths for the independence model, as summarized in Table 1 and as bullet points below. Let

![]() $p^{*}:=\max (p_{1},\dots ,p_{\kappa })$

denote the density of the maximum positive color and let r denote the multiplicity of

$p^{*}:=\max (p_{1},\dots ,p_{\kappa })$

denote the density of the maximum positive color and let r denote the multiplicity of

![]() $p^{*}$

(i.e., number of

$p^{*}$

(i.e., number of

![]() $p_{i}$

’s such that

$p_{i}$

’s such that

![]() $p_{i}=p^{*}$

for

$p_{i}=p^{*}$

for

![]() $i=1,\dots ,\kappa $

).

$i=1,\dots ,\kappa $

).

-

• In the subcritical regime (

$p_{0}>p^{*}$

), top soliton lengths have sharp scaling

$p_{0}>p^{*}$

), top soliton lengths have sharp scaling

$\log _{\theta } n + (r-1)\log _{\theta } \log n +O(1)$

, where

$\log _{\theta } n + (r-1)\log _{\theta } \log n +O(1)$

, where

$\theta =p^{*}/p_{0}$

.

$\theta =p^{*}/p_{0}$

. -

• In the critical regime (

$p_{0}=p^{*}$

),

$p_{0}=p^{*}$

),

$n^{-1/2}\lambda _{1}(n)$

converges weakly to the maximum

$n^{-1/2}\lambda _{1}(n)$

converges weakly to the maximum

$L_{1}$

-norm of a

$L_{1}$

-norm of a

$\kappa $

-dimensional semimartingale reflecting Brownian motion (SRBM).

$\kappa $

-dimensional semimartingale reflecting Brownian motion (SRBM). -

• In the supercritical regime (

$p_{0}<p^{*}$

),

$p_{0}<p^{*}$

),

$\lambda _{1}(n)= (p^{*}-p_{0})n+\Theta (\sqrt {n})$

. If

$\lambda _{1}(n)= (p^{*}-p_{0})n+\Theta (\sqrt {n})$

. If

$r=1$

, then all subsequent top solitons are of order

$r=1$

, then all subsequent top solitons are of order

$\log n$

; if

$\log n$

; if

$r\ge 2$

, they are of order

$r\ge 2$

, they are of order

$\sqrt {n}$

.

$\sqrt {n}$

. -

• The fluctuation of

$\lambda _{1}(n)$

depends explicitly on a

$\lambda _{1}(n)$

depends explicitly on a

$\kappa $

-dimensional SRBM, which arises as the diffusive scaling limit of the associated carrier process.

$\kappa $

-dimensional SRBM, which arises as the diffusive scaling limit of the associated carrier process.

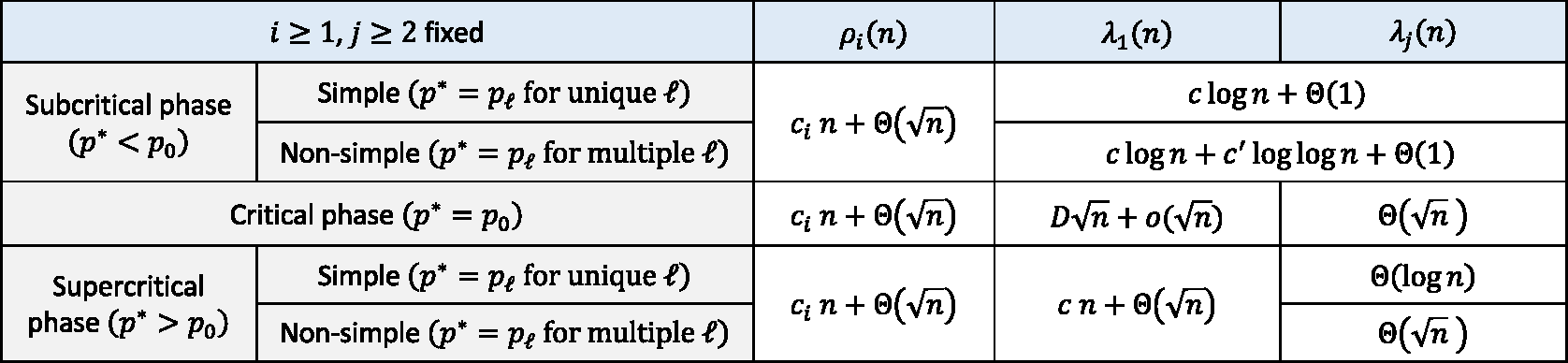

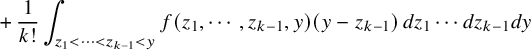

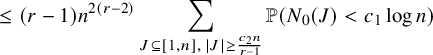

Table 1 Asymptotic scaling of the ith row length $\rho _{i}$ and the jth column length $\lambda _{j}$ for the independence model with ball density $\mathbf {p}=(p_{0},p_{1},\cdots ,p_{\kappa })$ and $p^{*}=\max (p_{1},\cdots ,p_{\kappa })$. The asymptotic soliton lengths undergo a similar ‘double-jump’ phase transition depending on $p^{*}-p_{0}$ as in the $\kappa =1$ case established in [LLP20], but the scaling inside the subcritical and supercritical regimes depends on the multiplicity of the maximum positive color $p^{*}$. Sharp asymptotics for the row lengths have been obtained in [KL20]. The $c_{i}$’s are constants depending on $\mathbf {p}$ and i; the constants $c,c'$ do not depend on j; D is a nonnegative and nondegenerate random variable.
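The three regimes of the independence model are determined by comparing $p_{0}$ with $p^{*}$, and the finer scaling depends on the multiplicity r. The following sketch makes that bookkeeping concrete. The function name `classify_regime`, the tolerance parameter `tol` and the returned labels are assumptions made for illustration; the order of magnitude attached to each regime is read off from the bullet points above and refers to the longest soliton only.

```python
from typing import Sequence, Tuple

# Order of magnitude of the longest soliton length in each regime,
# as summarized in the bullet points of the text.
TOP_SOLITON_ORDER = {
    "subcritical": "log n",
    "critical": "sqrt(n)",
    "supercritical": "n",
}


def classify_regime(p: Sequence[float], tol: float = 1e-12) -> Tuple[str, float, int]:
    """Return (regime, p_star, r) for a ball density p = (p_0, ..., p_kappa).

    p_star is the largest density among the positive colors and r is the
    number of positive colors attaining it. Densities are compared up to
    the (hypothetical) tolerance tol to absorb floating-point error.
    """
    p0 = p[0]
    positive = list(p[1:])
    p_star = max(positive)
    r = sum(1 for q in positive if abs(q - p_star) <= tol)
    if abs(p0 - p_star) <= tol:
        regime = "critical"
    elif p0 > p_star:
        regime = "subcritical"
    else:
        regime = "supercritical"
    return regime, p_star, r


regime, p_star, r = classify_regime([0.5, 0.25, 0.25])
print(regime, p_star, r, TOP_SOLITON_ORDER[regime])
```

For $\mathbf {p}=(0.5,0.25,0.25)$, this reports the subcritical regime with $p^{*}=0.25$ and $r=2$, which is a case where the $(r-1)\log _{\theta }\log n$ correction in the first bullet point is present.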

We establish a similar ‘double-jump’ phase transition for the

![]() $\kappa =1$

case established by Levine, Lyu and Pike [Reference Levine, Lyu and PikeLLP20]. We find that in the multicolor (

$\kappa =1$

case established by Levine, Lyu and Pike [Reference Levine, Lyu and PikeLLP20]. We find that in the multicolor (

![]() $\kappa \ge 2$

) case, the maximum positive ball density

$\kappa \ge 2$

) case, the maximum positive ball density

![]() $p^{*}=\max (p_{1},\cdots ,p_{\kappa })$

compared to the zero density

$p^{*}=\max (p_{1},\cdots ,p_{\kappa })$

compared to the zero density

![]() $p_{0}$

dictates general phase transition structure. However, we find that the scaling inside the subcritical and supercritical regimes depends on the multiplicity r of the maximum positive color

$p_{0}$

dictates general phase transition structure. However, we find that the scaling inside the subcritical and supercritical regimes depends on the multiplicity r of the maximum positive color

![]() $p^{*}$

. Furthermore, the fluctuation of the top soliton length

$p^{*}$

. Furthermore, the fluctuation of the top soliton length

![]() $\lambda _{1}(n)$

about its mean behavior is described by a

$\lambda _{1}(n)$

about its mean behavior is described by a

![]() $\kappa $

-dimensional semimartingale reflecting Brownian motion (SRBM) lurking behind, whose covariance matrix depends on

$\kappa $

-dimensional semimartingale reflecting Brownian motion (SRBM) lurking behind, whose covariance matrix depends on

![]() $\mathbf {p}$

explicitly. Such SRBM arises as the diffusive scaling limit of the associated carrier process.

$\mathbf {p}$

explicitly. Such SRBM arises as the diffusive scaling limit of the associated carrier process.

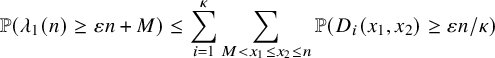

A large part of our analysis is devoted to studying the associated carrier process, which is a Markov chain on the $\kappa $-dimensional nonnegative integer orthant, whose excursions and running maxima are closely related to soliton lengths (see Lemmas 3.1-3.2). For this process, we establish sharp scaling of ruin probabilities, a strong law of large numbers and a weak diffusive scaling limit to an SRBM with explicit parameters (Theorems 2.3–2.5). We also establish and utilize alternative descriptions of the soliton lengths and numbers in terms of the modified Greene-Kleitman invariants for the box-ball systems (Lemma 3.5) and associated circular exclusion processes.

1.3 Background and related works

The $\kappa $-color BBS was introduced in [Tak93], generalizing the original $\kappa =1$ BBS first invented by Takahashi and Satsuma in 1990 [TS90]. In the most general form of the BBS, each site accommodates a semistandard tableau of rectangular shape with letters from $\{0,1,\cdots ,\kappa \}$, and the time evolution is defined by successive application of the combinatorial R (cf. [FYO00, HHI+01, KOS+06, IKT12]). For a friendly introduction to the combinatorial R, see [KL20, Sec. 3]. The $\kappa $-color BBS treated in this paper corresponds to the case where the tableau shape is a single box, which was called the basic $\kappa $-color BBS in [KL20, Kon20]. The BBS is known to arise both from the quantum and classical integrable systems by the procedures called crystallization and ultradiscretization, respectively. This double origin of the integrability of BBS lies behind its deep connections to quantum groups, crystal base theory, solvable lattice models, the Bethe ansatz, soliton equations, ultradiscretization of the Korteweg-de Vries equation, tropical geometry and so forth; see, for example, the review [IKT12] and the references therein.

BBS with random initial configuration is an emerging topic in the probability literature and has gained considerable attention with a number of recent works [LLP20, CKST18, KL20, FG18, CS19a, CS19b]. Researchers have focused on roughly two central questions: 1) If the random initial configuration is one-sided, what is the limiting shape of the invariant random Young diagram as the system size tends to infinity? 2) If one considers the two-sided BBS (where the initial configuration is a bi-directional array of balls), what are the two-sided random initial configurations that are invariant under the BBS dynamics? Some of these questions have been addressed for the basic $1$-color BBS [LLP20, FNRW18, FG18, CKST18] as well as for the multicolor case [KL20, KLO18, Kon20]. More recently, invariant measures of the discrete KdV and Toda-type systems have been investigated [CS20].

Three important works are strongly related to this paper. In [LLP20], Levine, Lyu and Pike studied various soliton statistics of the basic $1$-color BBS when the system is initialized according to a Bernoulli product measure with ball density p on the first n boxes. One of their main results is that the length of the longest soliton is of order $\log n$ for $p<1/2$, order $\sqrt {n}$ for $p=1/2$, and order n for $p>1/2$. Additionally, there is a condensation toward the longest soliton in the supercritical $p>1/2$ regime in the sense that, for each fixed $j\geq 1$, the top j soliton lengths have the same order as the longest for $p\leq 1/2$, whereas all but the longest have order $\log n$ for $p>1/2$. Their analysis is based on geometric mappings from the associated simple random walks to the invariant Young diagrams, which enable a robust analysis of the scaling limit of the invariant Young diagram. However, this connection is not apparent in the general $\kappa \ge 1$ case. In fact, one of the main difficulties in analyzing the soliton lengths in the multicolor BBS is that within a single regime, there is a mixture of behaviors that we see from different regimes in the single-color case.

The row lengths in the multicolor BBS are well-understood due to recent works by Kuniba, Lyu and Okado [Reference Kuniba, Lyu and OkadoKLO18] and Kuniba and Lyu [Reference Kuniba and LyuKL20]. The central observation is that, when the initial configuration is given by a product measure, the sum of row lengths can be computed via some additive functional (called ‘energy’) of carrier processes of various shapes, which are finite-state Markov chains whose time evolution is given by combinatorial R. In [Reference Kuniba, Lyu and OkadoKLO18], the ‘stationary shape’ of the Young diagram for the most general type of BBS is identified by the logarithmic derivative of a deformed character of the KR modules (or Schur polynomials in the basic case). In [Reference Kuniba and LyuKL20], for the (basic)

![]() $\kappa $

-color BBS that we consider in the present paper, it was shown that the row lengths satisfy a large deviations principle, and hence, the Young diagram converges to the stationary shape at an exponential rate, in the sense of row scaling.

$\kappa $

-color BBS that we consider in the present paper, it was shown that the row lengths satisfy a large deviations principle, and hence, the Young diagram converges to the stationary shape at an exponential rate, in the sense of row scaling.

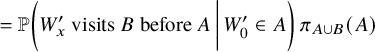

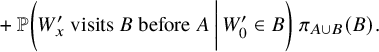

The central subject of this paper is the column lengths of the Young diagram for the basic

![]() $\kappa $

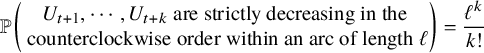

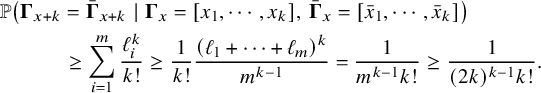

-color BBS. We develop two main tools for our analysis, which are a modified version of Greene-Kleitman invariants for BBS (Section 3.3) and the carrier process (see Definition 2.2). For the independence model, we obtain the scaling limit of the carrier process as an SRBM [Reference WilliamsWil95], and it plays a central role in our analysis. For the permutation model, the carrier process gives rise to a ‘circular exclusion process’, which can be regarded as a circular version of the well-known Totally Asymmetric Simple Exclusion Process (TASEP) on a line (see, for example, [Reference FerrariF+18, Reference Borodin, Ferrari, Prähofer and SasamotoBFPS07, Reference Borodin, Ferrari and SasamotoBFS08]). For its rough description, consider the following process on the unit circle

$\kappa $

-color BBS. We develop two main tools for our analysis, which are a modified version of Greene-Kleitman invariants for BBS (Section 3.3) and the carrier process (see Definition 2.2). For the independence model, we obtain the scaling limit of the carrier process as an SRBM [Reference WilliamsWil95], and it plays a central role in our analysis. For the permutation model, the carrier process gives rise to a ‘circular exclusion process’, which can be regarded as a circular version of the well-known Totally Asymmetric Simple Exclusion Process (TASEP) on a line (see, for example, [Reference FerrariF+18, Reference Borodin, Ferrari, Prähofer and SasamotoBFPS07, Reference Borodin, Ferrari and SasamotoBFS08]). For its rough description, consider the following process on the unit circle

![]() $S^{1}$

. Starting from some finite number of points, at each time, a new point is added to

$S^{1}$

. Starting from some finite number of points, at each time, a new point is added to

![]() $S^{1}$

independently from a fixed distribution, which then deletes the nearest counterclockwise point already on the circle. Equivalently, one can think of each point in the circle trying to jump in the clockwise direction. It turns out that this process is crucial in analyzing the permutation model (Section 4.2), whereas for the independence model, the relevant circular exclusion process is defined on the integer ring

$S^{1}$

independently from a fixed distribution, which then deletes the nearest counterclockwise point already on the circle. Equivalently, one can think of each point in the circle trying to jump in the clockwise direction. It turns out that this process is crucial in analyzing the permutation model (Section 4.2), whereas for the independence model, the relevant circular exclusion process is defined on the integer ring

![]() $\mathbb {Z}_{\kappa +1}$

where points can stack up at the same location (Section 3.1). Interestingly, a cylindric version of Schur functions has been used to study rigged configurations and BBS [Reference Lam, Pylyavskyy and SakamotoLPS14].

$\mathbb {Z}_{\kappa +1}$

where points can stack up at the same location (Section 3.1). Interestingly, a cylindric version of Schur functions has been used to study rigged configurations and BBS [Reference Lam, Pylyavskyy and SakamotoLPS14].
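The rough description of the circular exclusion process on $S^{1}$ can be turned into a small sketch of a single update. Everything here is an assumption made for illustration rather than the construction used in Section 4.2: the circle is parametrized by angles in $[0,1)$ that increase in the counterclockwise direction, the function name `circular_exclusion_step` is hypothetical, and an empty circle simply receives the new point.

```python
import bisect
from typing import List


def circular_exclusion_step(points: List[float], new_point: float) -> List[float]:
    """One update of the sketched process.

    `points` is a sorted list of angles in [0, 1). The new point deletes the
    first existing point met when moving counterclockwise (increasing angle,
    wrapping around at 1) and is then inserted itself.
    """
    pts = list(points)
    if pts:
        # Index of the first existing angle strictly larger than new_point;
        # if there is none, wrap around to the smallest angle.
        i = bisect.bisect_right(pts, new_point) % len(pts)
        del pts[i]
    bisect.insort(pts, new_point)
    return pts


pts = [0.1, 0.5, 0.9]
pts = circular_exclusion_step(pts, 0.3)   # removes 0.5
print(pts)                                # [0.1, 0.3, 0.9]
pts = circular_exclusion_step(pts, 0.95)  # wraps around and removes 0.1
print(pts)                                # [0.3, 0.9, 0.95]
```

Under these conventions the number of points stays constant once the circle is nonempty, and each update can be read as the deleted point jumping clockwise to the position of the new point, which matches the equivalent description in the text.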

1.4 Organization

In Section 2, we define the carrier process, introduce the permutation and the independence models for the $\kappa $-color BBS, and state our main results. We also provide numerical simulations to validate our results empirically. In Section 3, we introduce infinite and finite capacity carrier processes for the $\kappa $-color BBS and state the three key combinatorial lemmas (Lemmas 3.1, 3.3, 3.5). In Section 4, we prove our main result for the permutation model (Theorem 2.1) by using the modified GK invariants for BBS (Lemma 3.5) and analyzing the associated circular exclusion process. In Section 5, we prove Theorem 2.3 (i) about the stationary behavior of the subcritical carrier process. Next, in Section 6, we introduce the ‘decoupled carrier process’ and develop the ‘Skorokhod decomposition’ of the carrier process. These will play critical roles in the analysis in the following sections. In Section 7, we analyze the decoupled carrier process over the i.i.d. ball configuration. In Section 8, we prove Theorem 2.3 (ii) and Theorem 2.4. In Sections 9 and 10, we establish the linear and diffusive scaling limits of the carrier process, which are stated in Theorem 2.5. Background on SRBM and an invariance principle for SRBM are also provided in Section 10. In Section 11, we prove Theorems 2.6 and 2.7. Lastly, in Section 12, we provide the postponed proofs of the combinatorial lemmas stated in Section 3.

1.5 Notation

We use the convention that summation and product over the empty index set equal zero and one, respectively. For any probability space $(\Omega ,\mathcal {F},\mathbb {P})$ and any event $A\in \mathcal {F}$, we let $\mathbf {1}(A)$ denote the indicator variable of $A$. Let $C^{d}(0,\infty )$ denote the space of continuous functions $f:[0,\infty )\rightarrow \mathbb {R}^{d}$ endowed with the topology of uniform convergence on compact intervals. We let $\mathrm {tridiagonal}_{d}(a,b,c)$ denote the $d\times d$ matrix which has $a$ on its subdiagonal, $b$ on its diagonal and $c$ on its superdiagonal entries, and zeros elsewhere.

We adopt the notations

![]() $\mathbb {R}^{+}=[0,\infty )$

,

$\mathbb {R}^{+}=[0,\infty )$

,

![]() $\mathbb {N}=\{1,2,3,\ldots \}$

and

$\mathbb {N}=\{1,2,3,\ldots \}$

and

![]() $\mathbb {Z}_{\ge 0}=\mathbb {N}\cup \{0\}$

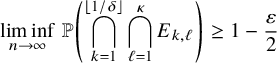

throughout. For a sequence of events

$\mathbb {Z}_{\ge 0}=\mathbb {N}\cup \{0\}$

throughout. For a sequence of events

![]() $(A_{n})_{n\ge 1}$

, we say

$(A_{n})_{n\ge 1}$

, we say

![]() $A_{n}$

occurs with high probability if

$A_{n}$

occurs with high probability if

![]() ${\mathbb {P}}(A_{n})\rightarrow 1$

as

${\mathbb {P}}(A_{n})\rightarrow 1$

as

![]() $n\rightarrow \infty $

. We employ the Landau notations

$n\rightarrow \infty $

. We employ the Landau notations

![]() $O(\cdot ),\, \Omega (\cdot ),\, \Theta (\cdot )$

in the sense of stochastic boundedness. That is, given

$O(\cdot ),\, \Omega (\cdot ),\, \Theta (\cdot )$

in the sense of stochastic boundedness. That is, given

![]() $\{a_{n}\}_{n=1}^{\infty }\subset \mathbb {R}^{+}$

and a sequence

$\{a_{n}\}_{n=1}^{\infty }\subset \mathbb {R}^{+}$

and a sequence

![]() $\{W_{n}\}_{n=1}^{\infty }$

of nonnegative random variables, we say that

$\{W_{n}\}_{n=1}^{\infty }$

of nonnegative random variables, we say that

![]() $W_{n}=O(a_{n})$

with high probability if for each

$W_{n}=O(a_{n})$

with high probability if for each

![]() $\varepsilon>0$

, there is a constant

$\varepsilon>0$

, there is a constant

![]() $C\in (0,\infty )$

such that

$C\in (0,\infty )$

such that

![]() ${\mathbb {P}}( W_{n}<Ca_{n} )\ge 1-\varepsilon $

for all sufficiently large n. We say that

${\mathbb {P}}( W_{n}<Ca_{n} )\ge 1-\varepsilon $

for all sufficiently large n. We say that

![]() $W_{n}=\Omega (a_{n})$

if for each

$W_{n}=\Omega (a_{n})$

if for each

![]() $\varepsilon>0$

, there is a

$\varepsilon>0$

, there is a

![]() $c\in (0,\infty )$

such that

$c\in (0,\infty )$

such that

![]() ${\mathbb {P}}(W_{n}>ca_{n})\ge 1-\varepsilon $

for all sufficiently large n, and we say

${\mathbb {P}}(W_{n}>ca_{n})\ge 1-\varepsilon $

for all sufficiently large n, and we say

![]() $W_{n}=\Theta (a_{n})$

with high probability if

$W_{n}=\Theta (a_{n})$

with high probability if

![]() $W_{n}=O(a_{n})$

and

$W_{n}=O(a_{n})$

and

![]() $W_{n}=\Omega (a_{n})$

both with high probability. In all of these Landau notations, we require that the constants

$W_{n}=\Omega (a_{n})$

both with high probability. In all of these Landau notations, we require that the constants

![]() $c,C$

do not depend on n.

$c,C$

do not depend on n.

2 Statement of results

Our main results concern the asymptotic behavior of top soliton lengths associated with the $\kappa $-color BBS trajectory for two models of random initial configuration $\xi $: (1) $\kappa =n$ and $\xi [1,n]$ is a uniformly random permutation of length $n$; (2) $\kappa $ is fixed and $\xi _{x}=i$ independently with a fixed probability $p_{i}$, $i\in \mathbb {Z}_{\kappa +1}$, for each $x\in [1,n]$.

2.1 The permutation model

For the permutation model, let $(U_{x})_{x\ge 1}$ be a sequence of i.i.d. $\mathrm {Uniform}([0,1])$ random variables. For each integer $n\ge 1$, we denote by $V_{1:n}<V_{2:n}<\cdots <V_{n:n}$ the order statistics of $U_{1},U_{2},\cdots , U_{n}$. Then it is easy to see that the random permutation $\Sigma ^{n}$ on $[n]$ such that $V_{i:n}=U_{\Sigma ^{n}(i)}$ for all $1\le i \le n$ is uniformly distributed among all permutations on $[n]$. Define

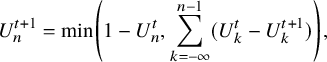
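The construction of $\Sigma ^{n}$ from the order statistics can be illustrated with a short sketch. The function name `permutation_from_uniforms`, the seed and the sample size are illustrative assumptions and are not part of the paper; the code only reproduces the relation $V_{i:n}=U_{\Sigma ^{n}(i)}$ stated above.

```python
import random


def permutation_from_uniforms(u):
    """Return Sigma as a 1-indexed list with u[Sigma[i-1] - 1] equal to the
    i-th smallest entry of u, i.e. V_{i:n} = U_{Sigma(i)}.
    (Hypothetical helper; assumes the entries of u are distinct.)"""
    order = sorted(range(len(u)), key=lambda x: u[x])
    return [x + 1 for x in order]


# Illustrative sample; seed and n are arbitrary choices.
rng = random.Random(0)
n = 8
u = [rng.random() for _ in range(n)]
sigma = permutation_from_uniforms(u)
v = sorted(u)  # the order statistics V_{1:n} < ... < V_{n:n}
```

Since the $U_{x}$ are i.i.d. with a continuous distribution, ties occur with probability zero and every relative ordering is equally likely, which is why $\Sigma ^{n}$ is uniform.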

We now state our main result for the permutation model. We obtain a precise first-order asymptotic for the largest k rows and columns, as stated in the following theorem.

Theorem 2.1 (The permutation model).

Let $\xi ^{n}$ be the permutation model as above. For each $k\ge 1$, denote $\rho _{k}(n)=\rho _{k}(\xi ^{n})$ and $\lambda _{k}(n)=\lambda _{k}(\xi ^{n})$. Then for each fixed $k\ge 1$, almost surely,

Our proof of Theorem 2.1 proceeds as follows. We first establish a combinatorial lemma (Lemma 3.5) that associates the soliton lengths and numbers with a modified version of Greene-Kleitman invariants for BBS. We then use the tail bounds on longest increasing subsequences in uniformly random permutations due to Baik, Deift and Johansson [BDJ99] to establish the scaling limit for the lengths of the columns. For the row lengths, we use the characterization of soliton numbers as an additive functional of finite-capacity carrier processes [KL20]. For the permutation model, such a process becomes an exclusion process on the unit circle.

2.2 The independence model

To define the independence model, fix integers $n,\kappa \ge 1$. Let $\mathbf {p}=(p_{0},p_{1},\cdots ,p_{\kappa })$ be a probability distribution on $\{ 0,1,\cdots ,\kappa \}$. Let $\xi =\xi ^{\mathbf {p}}$ be the sequence $(\xi _{x})_{x\in \mathbb {N}}$ of i.i.d. random variables $\xi _{x}$ where

$$ \begin{align} {\mathbb{P}}(\xi_{x}=i) = p_{i} \quad \text{for } i\in \{0,1,\cdots,\kappa\}. \end{align} $$

For each integer $n\ge 1$, define the $\kappa $-color BBS configuration $\xi ^{n,\mathbf {p}}$ of size $n$ by

We may further assume, without loss of generality, that

![]() $p_{i}>0$

for all

$p_{i}>0$

for all

![]() $1\le i\le \kappa $

. Indeed, if

$1\le i\le \kappa $

. Indeed, if

![]() $p_{i}=0$

for some i, then we can omit the color i entirely and consider the system as a

$p_{i}=0$

for some i, then we can omit the color i entirely and consider the system as a

![]() $(\kappa -1)$

-color BBS by shifting the colors

$(\kappa -1)$

-color BBS by shifting the colors

![]() $\{i+1,\cdots , \kappa \}$

to

$\{i+1,\cdots , \kappa \}$

to

![]() $\{i,\cdots , \kappa -1 \}$

.

$\{i,\cdots , \kappa -1 \}$

.

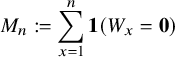

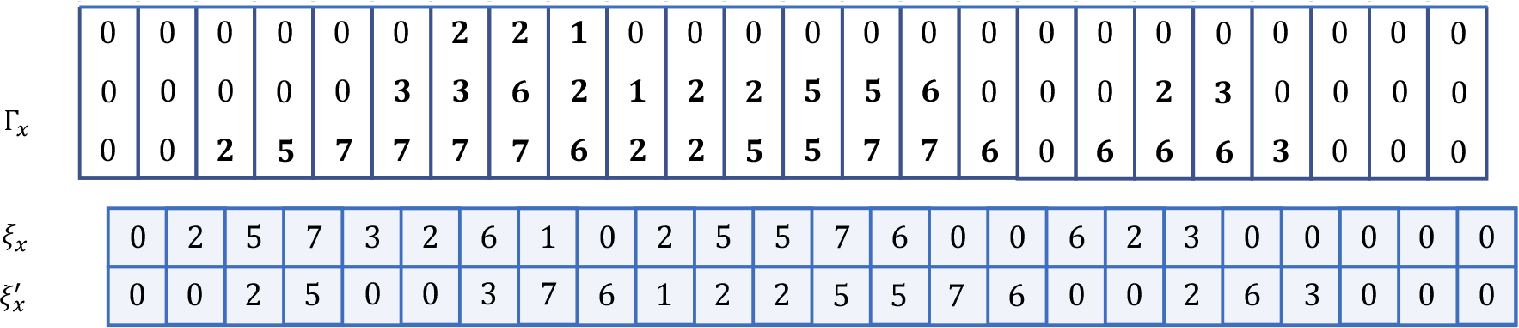

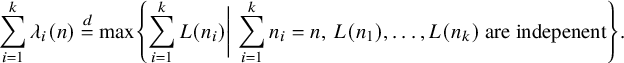

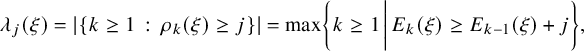

Through various combinatorial lemmas (see Section 3), we will establish that the soliton lengths

![]() $\lambda _{j}(n)$

of for the i.i.d. model are closely related to the extreme behavior of a Markov chain

$\lambda _{j}(n)$

of for the i.i.d. model are closely related to the extreme behavior of a Markov chain

![]() $(W_{x})_{x\in \mathbb {N}}$

defined on the nonnegative integer orthant

$(W_{x})_{x\in \mathbb {N}}$

defined on the nonnegative integer orthant

![]() $\mathbb {Z}_{\ge 0}^{\kappa }$

, which we call the ‘

$\mathbb {Z}_{\ge 0}^{\kappa }$

, which we call the ‘

![]() $\kappa $

-color carrier process’. Denote

$\kappa $

-color carrier process’. Denote

![]() $\mathbf {e}_{i}\in \mathbb {Z}^{\kappa }$

whose coordinates are all zero except the ith coordinate being 1.

$\mathbf {e}_{i}\in \mathbb {Z}^{\kappa }$

whose coordinates are all zero except the ith coordinate being 1.

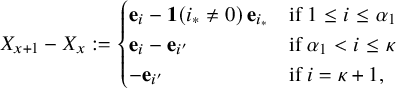

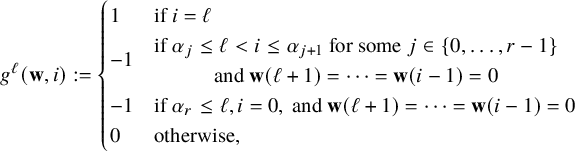

Definition 2.2 ($\kappa $-color carrier process).

Let

![]() $\xi :=(\xi _{x})_{x\in \mathbb {N}}$

be

$\xi :=(\xi _{x})_{x\in \mathbb {N}}$

be

![]() $\kappa $

-color ball configuration. The (

$\kappa $

-color ball configuration. The (

![]() $\kappa $

-color) carrier process over

$\kappa $

-color) carrier process over

![]() $\xi $

is a process

$\xi $

is a process

![]() $(W_{x})_{x\in \mathbb {N}}$

on the state space

$(W_{x})_{x\in \mathbb {N}}$

on the state space

![]() $\Omega :=\mathbb {Z}^{\kappa }_{\ge 0}$

defined by the following evolution rule: Denoting

$\Omega :=\mathbb {Z}^{\kappa }_{\ge 0}$

defined by the following evolution rule: Denoting

![]() $i:=\xi _{x+1}$

if

$i:=\xi _{x+1}$

if

![]() $\xi _{x+1}\in \{1,\dots ,\kappa \}$

and

$\xi _{x+1}\in \{1,\dots ,\kappa \}$

and

![]() $i:=\kappa +1$

if

$i:=\kappa +1$

if

![]() $\xi _{x+1}=0$

,

$\xi _{x+1}=0$

,

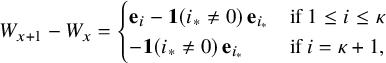

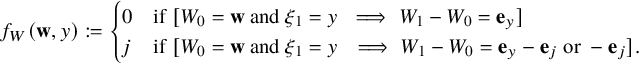

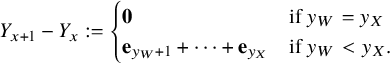

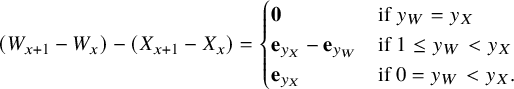

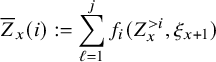

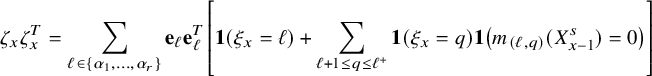

$$ \begin{align} W_{x+1} - W_{x} = \begin{cases} \mathbf{e}_{i} - \mathbf{1}(i_{*}\ne 0) \, \mathbf{e}_{i_{*}} & \text{if } 1\le i \le \kappa \\ -\mathbf{1}(i_{*}\ne 0) \, \mathbf{e}_{i_{*}} & \text{if } i=\kappa+1, \end{cases} \end{align} $$

$$ \begin{align} W_{x+1} - W_{x} = \begin{cases} \mathbf{e}_{i} - \mathbf{1}(i_{*}\ne 0) \, \mathbf{e}_{i_{*}} & \text{if } 1\le i \le \kappa \\ -\mathbf{1}(i_{*}\ne 0) \, \mathbf{e}_{i_{*}} & \text{if } i=\kappa+1, \end{cases} \end{align} $$

where

![]() $ i_{*}:=\sup \{ 1\le j< i \,:\, W_{x}(j)\ge 1 \}$

with the convention

$ i_{*}:=\sup \{ 1\le j< i \,:\, W_{x}(j)\ge 1 \}$

with the convention

![]() $\sup \emptyset = 0$

. Unless otherwise mentioned, we take

$\sup \emptyset = 0$

. Unless otherwise mentioned, we take

![]() $W_{0}=\mathbf {0}$

and

$W_{0}=\mathbf {0}$

and

![]() $\xi =\xi ^{\mathbf {p}}$

with density

$\xi =\xi ^{\mathbf {p}}$

with density

![]() $\mathbf {p}=(p_{0},\dots ,p_{\kappa })$

.

$\mathbf {p}=(p_{0},\dots ,p_{\kappa })$

.

In words, at location x, the carrier holds

![]() $W_{x}(i)$

balls of color i for

$W_{x}(i)$

balls of color i for

![]() $i=1,\dots ,\kappa $

. When a new ball of color

$i=1,\dots ,\kappa $

. When a new ball of color

![]() $1\le \xi _{x+1}\le \kappa $

is inserted into the carrier

$1\le \xi _{x+1}\le \kappa $

is inserted into the carrier

![]() $W_{x}$

, then a ball of the largest available color that is smaller than

$W_{x}$

, then a ball of the largest available color that is smaller than

![]() $\xi _{x}$

is excluded from

$\xi _{x}$

is excluded from

![]() $W_{x}$

; if there is no such ball in

$W_{x}$

; if there is no such ball in

![]() $W_{x}$

, then no ball is excluded. If

$W_{x}$

, then no ball is excluded. If

![]() $\xi _{x+1}=0$

, then no new ball is inserted, and a ball of the largest available color that is smaller than

$\xi _{x+1}=0$

, then no new ball is inserted, and a ball of the largest available color that is smaller than

![]() $\xi _{x}$

is excluded from

$\xi _{x}$

is excluded from

![]() $W_{x}$

. The resulting state of the carrier is

$W_{x}$

. The resulting state of the carrier is

![]() $W_{x+1}$

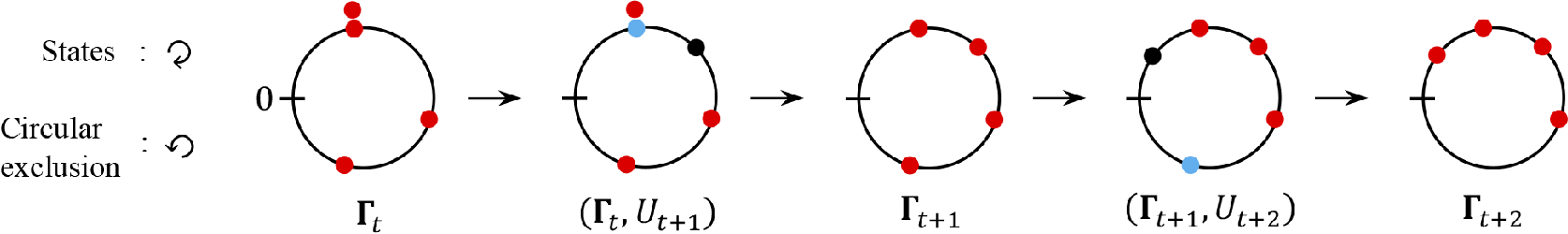

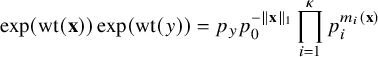

. We call the transition rule (8) as the ‘circular exclusion’ (since a ball in the carrier’s possession is excluded from the carrier upon the insertion of a new ball according to the circular ordering). One can also view the carrier process as a multitype queuing system, where

$W_{x+1}$

. We call the transition rule (8) as the ‘circular exclusion’ (since a ball in the carrier’s possession is excluded from the carrier upon the insertion of a new ball according to the circular ordering). One can also view the carrier process as a multitype queuing system, where

![]() $W_{x}$

denotes the state of the queue and

$W_{x}$

denotes the state of the queue and

![]() $W_{x}(i)$

is the number of jobs of ‘cyclic hierarchy’ i to be processed.

$W_{x}(i)$

is the number of jobs of ‘cyclic hierarchy’ i to be processed.
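As a minimal sketch of the circular exclusion rule (8), the following Python code updates the carrier one site at a time. The names `carrier_step` and `carrier_process`, and the representation of $W_{x}$ as a list whose entry `w[j - 1]` stores $W_{x}(j)$, are assumptions made for illustration and do not come from the paper.

```python
def carrier_step(w, ball, kappa):
    """Apply rule (8) once: return W_{x+1} given W_x = w and xi_{x+1} = ball.
    w[j - 1] is the number of balls of color j held by the carrier.
    (Hypothetical helper for illustration.)"""
    # An empty site (ball == 0) is treated as the color kappa + 1.
    i = ball if 1 <= ball <= kappa else kappa + 1
    # i_star: largest color j < i present in the carrier, or 0 if none.
    i_star = 0
    for j in range(i - 1, 0, -1):
        if w[j - 1] >= 1:
            i_star = j
            break
    new_w = list(w)
    if i <= kappa:
        new_w[i - 1] += 1       # insert the new ball of color i
    if i_star != 0:
        new_w[i_star - 1] -= 1  # exclude one ball of color i_star
    return new_w


def carrier_process(xi, kappa):
    """Return [W_0, W_1, ..., W_n] for xi = [xi_1, ..., xi_n], with W_0 = 0."""
    w = [0] * kappa
    path = [w]
    for ball in xi:
        w = carrier_step(w, ball, kappa)
        path.append(w)
    return path


# Small example with kappa = 2; the configuration is an arbitrary choice.
example_path = carrier_process([1, 2, 2, 1, 0, 0, 0], kappa=2)
```

In this example the carrier returns to the origin after seven steps, so the path is a single excursion whose maximal $\ell ^{1}$-norm is 3.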

A large portion of this paper will be devoted to analyzing scaling limits of the carrier process

![]() $W_{x}$

over the i.i.d. configuration

$W_{x}$

over the i.i.d. configuration

![]() $\xi ^{\mathbf {p}}$

. In this case,

$\xi ^{\mathbf {p}}$

. In this case,

![]() $W_{x}$

is a Markov chain on the state space of the nonnegative integer orthant

$W_{x}$

is a Markov chain on the state space of the nonnegative integer orthant

![]() $\Omega $

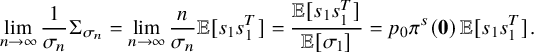

. See Figure 1 for an illustration.

$\Omega $

. See Figure 1 for an illustration.

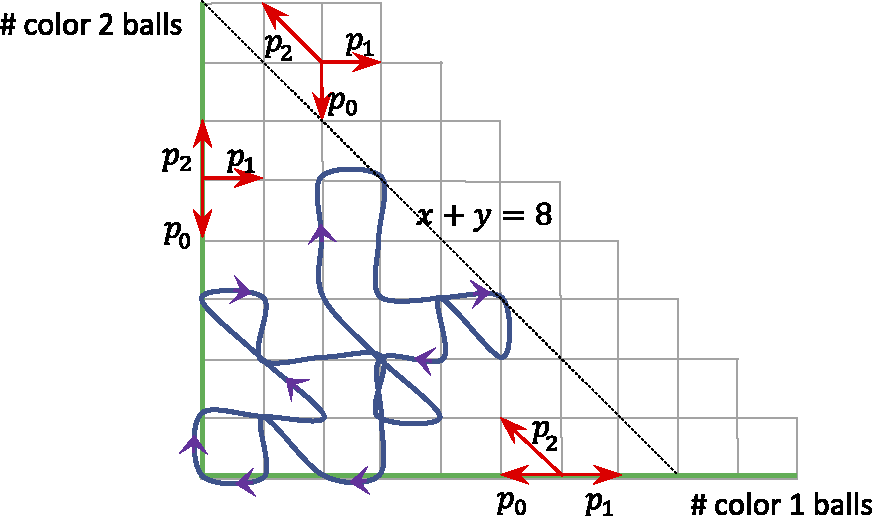

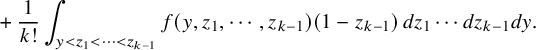

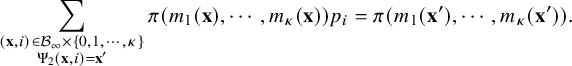

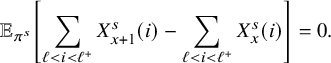

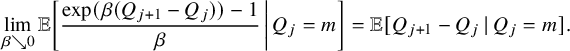

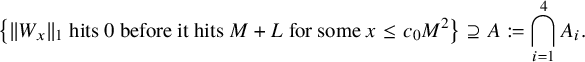

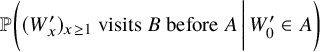

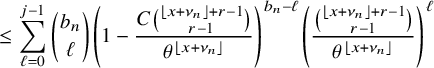

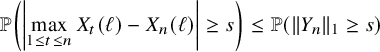

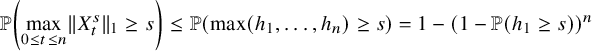

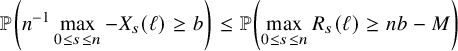

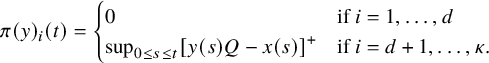

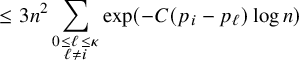

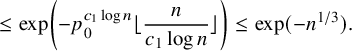

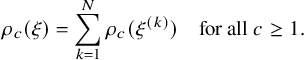

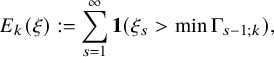

Figure 1 State space diagram for the carrier process

![]() $W_{x}$

for

$W_{x}$

for

![]() $\kappa =2$

. Red arrows illustrate the transition kernel at the ‘interior’ (gray) and ‘boundary’ (green) points in the state space. A single excursion (starting and ending at the origin) of ‘height’

$\kappa =2$

. Red arrows illustrate the transition kernel at the ‘interior’ (gray) and ‘boundary’ (green) points in the state space. A single excursion (starting and ending at the origin) of ‘height’

![]() $8$

is shown in a blue path with arrows.

$8$

is shown in a blue path with arrows.

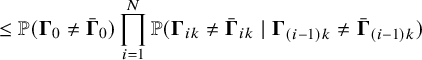

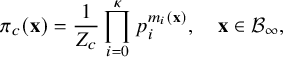

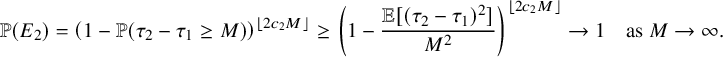

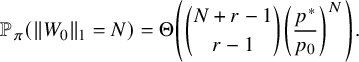

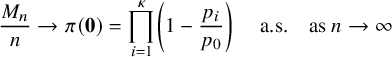

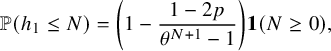

Theorem 2.3 states the behavior of the carrier process in the subcritical regime

![]() $p_0>\max (p_1, \cdots , p_{\kappa })$

. Define a function

$p_0>\max (p_1, \cdots , p_{\kappa })$

. Define a function

![]() $\pi :\Omega \rightarrow \mathbb {R}$

by

$\pi :\Omega \rightarrow \mathbb {R}$

by

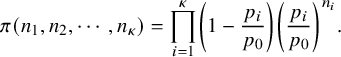

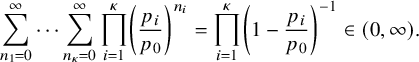

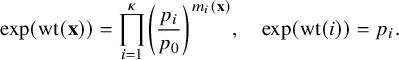

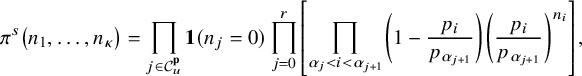

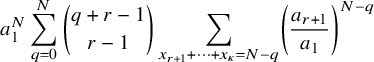

$$ \begin{align} \pi(n_{1},n_{2},\cdots, n_{\kappa}) = \prod_{i=1}^{\kappa} \left(1-\frac{p_{i}}{p_{0}} \right) \left( \frac{p_{i}}{p_{0}} \right)^{n_{i}}. \end{align} $$

$$ \begin{align} \pi(n_{1},n_{2},\cdots, n_{\kappa}) = \prod_{i=1}^{\kappa} \left(1-\frac{p_{i}}{p_{0}} \right) \left( \frac{p_{i}}{p_{0}} \right)^{n_{i}}. \end{align} $$

This is a valid probability distribution on $\Omega $ when $p_{0}>\max (p_{1},\cdots ,p_{\kappa })$ since

$$ \begin{align} \sum_{n_{1}=0}^{\infty}\cdots \sum_{n_{\kappa}=0}^{\infty} \prod_{i=1}^{\kappa} \left( \frac{p_{i}}{p_{0}} \right)^{n_{i}} = \prod_{i=1}^{\kappa} \left(1-\frac{p_{i}}{p_{0}} \right)^{-1} \in (0,\infty). \end{align} $$

Note that $\pi $ is the product of geometric distributions with means $p_{i}/(p_{0}-p_{i})>0$ for $i=1,\dots ,\kappa $.
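The normalization and the geometric means can be checked numerically. The sketch below evaluates the product formula for $\pi $ on a truncated grid; the density `p = (0.5, 0.3, 0.2)`, the truncation level `cutoff` and the function name `pi_mass` are illustrative assumptions only.

```python
from itertools import product


def pi_mass(ns, p):
    """Evaluate the product formula for pi at ns = (n_1, ..., n_kappa),
    where p = (p_0, p_1, ..., p_kappa). (Hypothetical helper.)"""
    value = 1.0
    for n_i, p_i in zip(ns, p[1:]):
        ratio = p_i / p[0]
        value *= (1.0 - ratio) * ratio ** n_i
    return value


# Illustrative subcritical density: p_0 > max(p_1, p_2).
p = (0.5, 0.3, 0.2)
cutoff = 60  # truncation of each coordinate; the neglected tail is negligible here
grid = list(product(range(cutoff), repeat=len(p) - 1))

total = sum(pi_mass(ns, p) for ns in grid)
mean_color_1 = sum(ns[0] * pi_mass(ns, p) for ns in grid)
```

For this choice of `p`, the total mass should be close to 1 and the mean of the first coordinate close to $p_{1}/(p_{0}-p_{1})=1.5$, up to truncation and floating-point error.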

Theorem 2.3 (The carrier process at the subcritical regime).

Let $p^{*}:=\max (p_{1},\cdots , p_{\kappa })$ and suppose $p_{0}>p^{*}$. Let $r$ denote the multiplicity of $p^{*}$ (i.e., the number of $i$’s in $\{1,\dots ,\kappa \}$ such that $p_{i}=p^{*}$).

- (i) (Convergence) The carrier process $W_{x}$ is an irreducible, aperiodic and positive recurrent Markov chain on $\mathbb {Z}^{\kappa }_{\ge 0}$ with $\pi $ in (9) as its unique stationary distribution. Thus, writing $d_{TV}$ for the total variation distance and denoting the distribution of $W_{x}$ by $\pi _{x}$, we have

(11) $$ \begin{align} \lim_{x\rightarrow \infty} d_{TV}(\pi_{x},\pi) = 0. \end{align} $$

-

(ii) (Multidimensional Gambler’s ruin) Let

$T_{1}$

denote the first return time of

$T_{1}$

denote the first return time of

$W_{x}$

to the origin and let

$W_{x}$

to the origin and let

$h_{1}:=\max _{0\le x \le T_{1}} \lVert W_{x} \rVert _{1}$

. Then for all

$h_{1}:=\max _{0\le x \le T_{1}} \lVert W_{x} \rVert _{1}$

. Then for all

$N\ge 1$

, there exists a constant

$N\ge 1$

, there exists a constant

$\delta>0$

such that (12)where

$\delta>0$

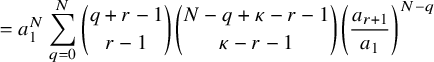

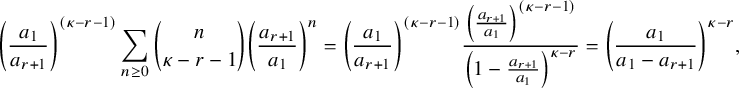

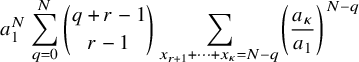

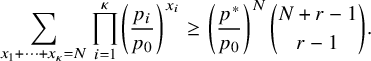

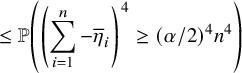

such that (12)where $$ \begin{align} \delta\, \binom{N+r-1}{r-1} \left( \frac{p^{*}}{p_{0}} \right)^{N} \, \le\, {\mathbb{P}}( h_{1}\ge N ) \, \le\, C \binom{N+r-1}{r-1} \left( \frac{p^{*}}{p_{0}} \right)^{N}, \end{align} $$

$$ \begin{align} \delta\, \binom{N+r-1}{r-1} \left( \frac{p^{*}}{p_{0}} \right)^{N} \, \le\, {\mathbb{P}}( h_{1}\ge N ) \, \le\, C \binom{N+r-1}{r-1} \left( \frac{p^{*}}{p_{0}} \right)^{N}, \end{align} $$

$C=1$

if

$C=1$

if

$r=\kappa $

and

$r=\kappa $

and

$C=\left ( \frac {p^{*}}{p^{*}-p^{(2)}} \right )^{\kappa -r}$

if

$C=\left ( \frac {p^{*}}{p^{*}-p^{(2)}} \right )^{\kappa -r}$

if

$r<\kappa $

with

$r<\kappa $

with

$p^{(2)}$

being the second largest value among

$p^{(2)}$

being the second largest value among

$p_{1},\dots ,p_{\kappa }$

.

$p_{1},\dots ,p_{\kappa }$

.

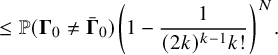

By using Theorem 2.3, we establish a sharp scaling limit of the soliton lengths for the independence model in the subcritical regime, which is stated in Theorem 2.4 below. (See Section 1.5 for a precise definition of the Landau notations.)

Theorem 2.4 (The independence model – Subcritical regime).

Fix

![]() $\kappa \ge 1$

and let

$\kappa \ge 1$

and let

![]() $\xi ^{n,\mathbf {p}}$

be as the i.i.d. model above. Denote

$\xi ^{n,\mathbf {p}}$

be as the i.i.d. model above. Denote

![]() $\lambda _{j}(n)=\lambda _{j}(\xi ^{n,\mathbf {p}})$

,

$\lambda _{j}(n)=\lambda _{j}(\xi ^{n,\mathbf {p}})$

,

![]() $p^{*} := \max _{1\le i \le \kappa } p_{i}$

, and

$p^{*} := \max _{1\le i \le \kappa } p_{i}$

, and

![]() $r:=| \{ 1\le i \le \kappa \,\colon \, p_{i}=p^{*}\}|$

. Suppose

$r:=| \{ 1\le i \le \kappa \,\colon \, p_{i}=p^{*}\}|$

. Suppose

![]() $p_{0}>p^{*}$

and denote

$p_{0}>p^{*}$

and denote

![]() $\theta := p^{*}/p_{0}$

. Then for each fixed

$\theta := p^{*}/p_{0}$

. Then for each fixed

![]() $j\ge 1$

,

$j\ge 1$

,

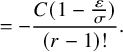

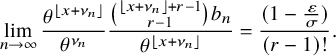

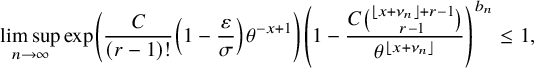

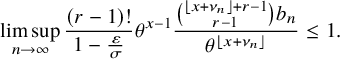

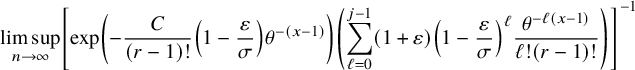

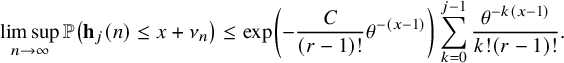

Furthermore, denote

![]() $\nu _{n}:=(1+\delta _{n}) \log _{\theta }\left ( \sigma n /(r-1)! \right )$

, where

$\nu _{n}:=(1+\delta _{n}) \log _{\theta }\left ( \sigma n /(r-1)! \right )$

, where

![]() $\sigma := \prod _{i=1}^{\kappa }\left ( 1-\frac {p_{i}}{p_{0}}\right )$

and

$\sigma := \prod _{i=1}^{\kappa }\left ( 1-\frac {p_{i}}{p_{0}}\right )$

and

![]() $\delta _{n} := \frac {(r-1) \log \log _{\theta }\left ( \sigma n /(r-1)! \right ) + \log (r-1)! }{\log \sigma n / (r-1)!}$

. Then for all

$\delta _{n} := \frac {(r-1) \log \log _{\theta }\left ( \sigma n /(r-1)! \right ) + \log (r-1)! }{\log \sigma n / (r-1)!}$

. Then for all

![]() $x\in \mathbb {R}$

,

$x\in \mathbb {R}$

,

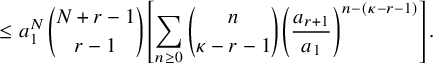

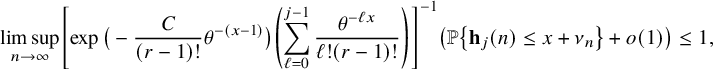

$$ \begin{align} &\leq {\mathop{\mathrm{lim\,sup}}\limits_{n\rightarrow \infty}}{\mathbb{P}}\left( \lambda_{j}(n) \leq x+\nu_{n} \right) \leq \exp\left(-\frac{C}{(r-1)!} \theta^{-(x-1)} \right)\sum_{k=0}^{j-1} \frac{\theta^{-k(x-1)}}{k! (r-1)! }, \end{align} $$

$$ \begin{align} &\leq {\mathop{\mathrm{lim\,sup}}\limits_{n\rightarrow \infty}}{\mathbb{P}}\left( \lambda_{j}(n) \leq x+\nu_{n} \right) \leq \exp\left(-\frac{C}{(r-1)!} \theta^{-(x-1)} \right)\sum_{k=0}^{j-1} \frac{\theta^{-k(x-1)}}{k! (r-1)! }, \end{align} $$

where

![]() $\delta>0$

,

$\delta>0$

,

![]() $C\ge 1$

are constants in Theorem 2.3.

$C\ge 1$

are constants in Theorem 2.3.

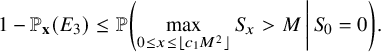

Next, we turn our attention to the critical and the supercritical regimes, where $p_{0} \le \max (p_{1},\cdots , p_{\kappa })$. In these regimes, the carrier process does not have a stationary distribution, and we are interested in identifying the limit of the carrier process in the linear and diffusive scales. A natural candidate for the diffusive scaling limit (if it exists) would be the semimartingale reflecting Brownian motion (SRBM) [Wil95], whose definition we recall in Section 10. Roughly speaking, an SRBM on a domain $S\subseteq \mathbb {R}^{\kappa }$ is a stochastic process $\mathcal {W}$ that admits a Skorokhod-type decomposition

$$ \begin{align} \mathcal{W} = X + R\,Y, \end{align} $$

where X is a

![]() $\kappa $

-dimensional Brownian motion with drift

$\kappa $

-dimensional Brownian motion with drift

![]() $\theta $

, covariance matrix

$\theta $

, covariance matrix

![]() $\Sigma $

and initial distribution

$\Sigma $

and initial distribution

![]() $\nu $

. The ‘interior process’ X gives the behavior of

$\nu $

. The ‘interior process’ X gives the behavior of

![]() $\mathcal {W}$

in the interior of S. When it is at the boundary of S, it is pushed instantaneously toward the interior of S along the direction specified by the ‘reflection matrix’ R and an associated ‘pushing process’ Y. We say such

$\mathcal {W}$

in the interior of S. When it is at the boundary of S, it is pushed instantaneously toward the interior of S along the direction specified by the ‘reflection matrix’ R and an associated ‘pushing process’ Y. We say such

![]() $\mathcal {W}$

is a SRBM associated with

$\mathcal {W}$

is a SRBM associated with

![]() $(S, \theta , \Sigma , R, \nu )$

. If

$(S, \theta , \Sigma , R, \nu )$

. If

![]() $R=I-Q$

for some nonnegative matrix Q with spectral radius less than one, then such

$R=I-Q$

for some nonnegative matrix Q with spectral radius less than one, then such

![]() $\mathcal {W}$

is unique (pathwise) for possibly degenerate

$\mathcal {W}$

is unique (pathwise) for possibly degenerate

![]() $\Sigma $

when

$\Sigma $

when

![]() $S=\mathbb {R}^{\kappa }_{\ge 0}$

[Reference Harrison and ReimanHR81]. If

$S=\mathbb {R}^{\kappa }_{\ge 0}$

[Reference Harrison and ReimanHR81]. If

![]() $\Sigma $

is nondegenerate and S is a polyhedron, a necessary and sufficient condition for the existence and uniqueness of such SRBM is that R is ‘completely-

$\Sigma $

is nondegenerate and S is a polyhedron, a necessary and sufficient condition for the existence and uniqueness of such SRBM is that R is ‘completely-

![]() $\mathcal {S}$

’ (see Definition 10.2) [Reference WilliamsWil95, Reference Kang and WilliamsKW07].

$\mathcal {S}$

’ (see Definition 10.2) [Reference WilliamsWil95, Reference Kang and WilliamsKW07].

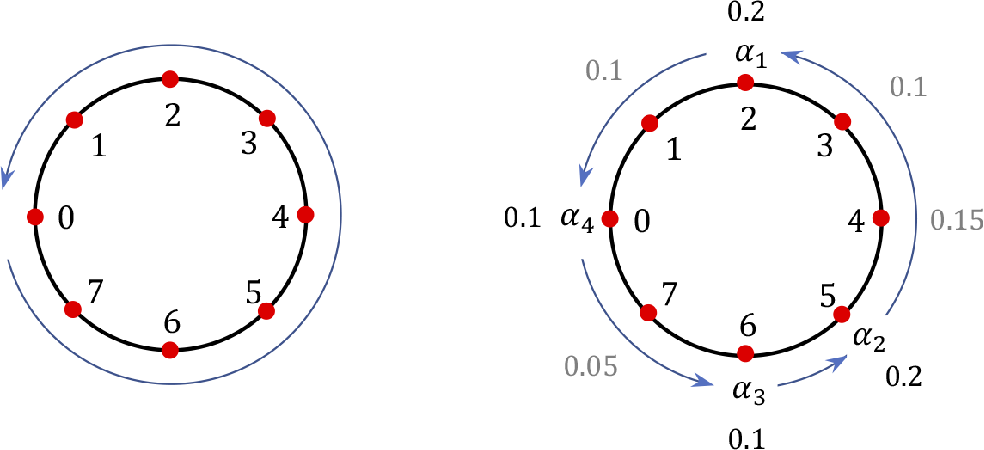

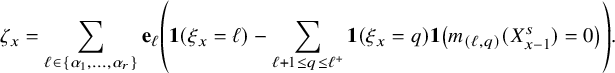

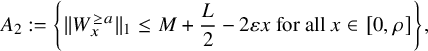

A crucial observation for analyzing the carrier process in the critical and supercritical regimes is the following. Of all the $\kappa $ coordinates of $W_{x}$, some have a negative drift and some others do not. We call an integer $1\le i\le \kappa $ an unstable color if $p_{i}\ge \max (p_{i+1},\cdots ,p_{\kappa },p_{0})$ and a stable color otherwise. Since balls of color $i$ can only be excluded by balls of colors in $\{i+1,\dots ,\kappa ,0\}$, the coordinate $W_{x}(i)$ is likely to diminish if the color $i$ is stable but not if $i$ is unstable. Denote the set of all unstable colors by $\mathcal {C}_{u}^{\mathbf {p}}=\{\alpha _{1},\cdots , \alpha _{r}\}$ with $\alpha _{1}<\cdots <\alpha _{r}$ and let $\mathcal {C}_{s}^{\mathbf {p}}:=\{0,1,\cdots ,\kappa \}\setminus \mathcal {C}_{u}^{\mathbf {p}}$ denote the set of stable colors. (See Figure 8 for illustration.) By definition, we have

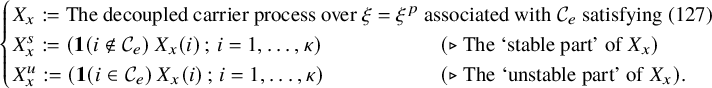
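The classification of colors depends only on the density $\mathbf {p}$, so it can be computed directly. The following is a sketch under the definition stated above; the function names and the example densities are hypothetical choices for illustration.

```python
def unstable_colors(p):
    """Return the sorted list of unstable colors for p = (p_0, ..., p_kappa).
    Color i in {1, ..., kappa} is unstable if
    p_i >= max(p_{i+1}, ..., p_kappa, p_0). (Hypothetical helper.)"""
    kappa = len(p) - 1
    result = []
    for i in range(1, kappa + 1):
        competitors = list(p[i + 1:]) + [p[0]]
        if p[i] >= max(competitors):
            result.append(i)
    return result


def stable_colors(p):
    """Return {0, 1, ..., kappa} minus the unstable colors, as a sorted list."""
    unstable = set(unstable_colors(p))
    return [i for i in range(len(p)) if i not in unstable]


# Illustrative supercritical density with kappa = 3: colors 1 and 3 are unstable.
example_unstable = unstable_colors((0.1, 0.4, 0.2, 0.3))
example_stable = stable_colors((0.1, 0.4, 0.2, 0.3))
```

In the subcritical regime $p_{0}>\max (p_{1},\cdots ,p_{\kappa })$, the same function returns an empty list, since $p_{0}$ dominates every color.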

Now, we will construct a new process $X_{x}$, which we call the ‘decoupled carrier process’ (see Section 6.1), that mimics the behavior of $W_{x}$, but the values of $X_{x}$ on the unstable colors are unconstrained and thus can be negative. Since $W_{x}$ is confined to the nonnegative orthant $\mathbb {Z}^{\kappa }_{\ge 0}$ but $X_{x}$ is not, we need to add some correction process to $X_{x}$ that ‘pushes’ it toward the orthant $\mathbb {Z}^{\kappa }_{\ge 0}$ whenever some of the coordinates of $X_{x}$ become negative. More precisely, in Lemma 6.3, we identify a ‘reflection matrix’ $R\in \mathbb {R}^{\kappa \times \kappa }$ and a ‘pushing process’ $Y_{x}$ on $\mathbb {Z}^{\kappa }$ such that

$$ \begin{align} W_{x} = X_{x} + R\,Y_{x} \quad \text{for all } x\ge 0, \end{align} $$

where

![]() $Y_{0}=\mathbf {0}$

, and for each

$Y_{0}=\mathbf {0}$

, and for each

![]() $i\in \{1,\dots ,\kappa \}$

, the ith coordinate of

$i\in \{1,\dots ,\kappa \}$

, the ith coordinate of

![]() $Y_{x}$

is nondecreasing in x and can only increase when

$Y_{x}$

is nondecreasing in x and can only increase when

![]() $W_{x}(i)=0$

. We call the above as a Skorokhod decomposition of the carrier process (Our definition is motivated by the Skorokhod problem; see Definition 10.3.) This and the classical invariance principle for SRBM [Reference Reiman and WilliamsRW88] are the keys to establishing the following result on the scaling limit of the carrier process.

$W_{x}(i)=0$

. We call the above as a Skorokhod decomposition of the carrier process (Our definition is motivated by the Skorokhod problem; see Definition 10.3.) This and the classical invariance principle for SRBM [Reference Reiman and WilliamsRW88] are the keys to establishing the following result on the scaling limit of the carrier process.
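To make the role of a pushing process concrete, the following is a minimal one-dimensional sketch in Python. It is not the construction of Lemma 6.3: it assumes $\kappa =1$, takes the reflection matrix to be the scalar 1, and uses a simple random walk as a stand-in for the decoupled process. The function name `skorokhod_1d` is hypothetical.

```python
import random


def skorokhod_1d(free_path):
    """Reflect an integer path at 0 using the smallest possible cumulative push.

    Returns (reflected, pushing) with reflected[k] = free_path[k] + pushing[k].
    Assumes free_path[0] >= 0 so that the push starts at 0.
    """
    reflected, pushing = [], []
    y = 0
    for x in free_path:
        # Increase the push only when x + y would otherwise be negative.
        y = max(y, -x)
        pushing.append(y)
        reflected.append(x + y)
    return reflected, pushing


# Stand-in for the unconstrained process: a simple symmetric random walk.
rng = random.Random(0)
free = [0]
for _ in range(1000):
    free.append(free[-1] + rng.choice((-1, 1)))

w, y = skorokhod_1d(free)
print(min(w), y[-1])
```

In this sketch the push is nondecreasing, starts at 0, and increases only at steps where the reflected path sits at 0, which is the one-dimensional analogue of the three properties listed above.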

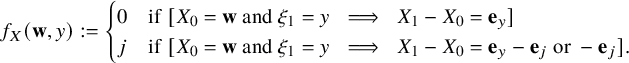

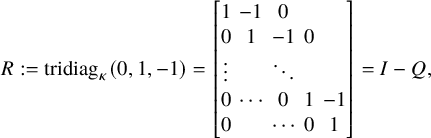

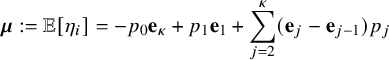

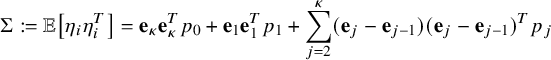

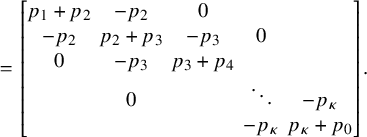

Theorem 2.5 (Linear and diffusive scaling limit of the carrier process).

Suppose $p_{0} \le \max (p_{1},\cdots , p_{\kappa })$. Let $\alpha _{1}<\cdots <\alpha _{r}$ be as before and define

$$ \begin{align} \boldsymbol{\mu}=(\mu_{1},\dots,\mu_{\kappa}):=\sum_{j=1}^{r} \mathbf{e}_{\alpha_{j}} (p_{\alpha_{j}}- p_{\alpha_{j+1}}), \end{align} $$

where we let $p_{\alpha _{r+1}}=p_{0}$.

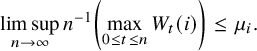

- (i) (Linear scaling) Almost surely,

(20) $$ \begin{align} \lim_{x\rightarrow\infty} \, x^{-1} W_{x} =\lim_{x\rightarrow\infty} \, x^{-1} \left( \max_{0\le t \le x}W_{t}(i)\,;\, i=1,\dots,\kappa \right)= \boldsymbol{\mu}. \end{align} $$

- (ii) (Diffusive scaling) Let $(\overline {W}_{t})_{t\in \mathbb {R}_{\ge 0}}$ denote the linear interpolation of $(W_{x}-x \boldsymbol {\mu })_{x\in \mathbb {N}}$. Then as $x\rightarrow \infty $,

(21) $$ \begin{align} (x^{-1/2}\overline{W}_{xt} \,;\, 0\leq t \leq 1 ) \Longrightarrow \mathcal{W} \, \text{ in }\, C([0,1]), \end{align} $$

where $\mathcal {W}$ is an SRBM associated with data $(S, \mathbf {0}, \Sigma , R, \delta _{\mathbf {0}})$ (see Definition 10.1) with $S:=\{ (x_{1},\dots ,x_{\kappa })\in \mathbb {R}^{\kappa } \,:\, x_{i}\ge 0 \,\, \text {if } \mu _{i}=0 \}$, $\Sigma $ the limiting covariance matrix (possibly degenerate) in (177), $R := \mathrm {tridiag}_{\kappa }(0,1,-1)$, and $\delta _{\mathbf {0}}$ the point mass at $\mathbf {0}$.
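As a worked illustration of the drift formula and the domain $S$, the following Python sketch computes $\boldsymbol {\mu }$ and the coordinates that are constrained to be nonnegative for a given density $\mathbf {p}=(p_{0},\dots ,p_{\kappa })$. It rests on an assumption not restated in this section: that $\alpha _{1}<\cdots <\alpha _{r}$ enumerate the unstable colors, and that a color $i$ is unstable when $p_{i}>\max (p_{i+1},\dots ,p_{\kappa },p_{0})$. The function names are hypothetical.

```python
from fractions import Fraction as F


def unstable_colors(p):
    """ASSUMED rule: color i in 1..kappa is unstable when p_i strictly
    exceeds p_0 and every p_j with j > i."""
    kappa = len(p) - 1
    return [i for i in range(1, kappa + 1)
            if p[i] > max(list(p[i + 1:]) + [p[0]])]


def drift(p):
    """Drift mu from the displayed formula, with p_{alpha_{r+1}} := p_0."""
    kappa = len(p) - 1
    alphas = unstable_colors(p)
    mu = [F(0)] * kappa
    for j, a in enumerate(alphas):
        nxt = p[alphas[j + 1]] if j + 1 < len(alphas) else p[0]
        mu[a - 1] = p[a] - nxt
    return mu


def reflecting_coordinates(mu):
    """Coordinates i with mu_i = 0, i.e. those with x_i >= 0 in S."""
    return [i + 1 for i, m in enumerate(mu) if m == 0]


p = [F(2, 11), F(5, 11), F(4, 11)]
mu = drift(p)
print(mu, reflecting_coordinates(mu))
```

Under this assumed rule, $\mathbf {p}=(2/11,5/11,4/11)$ gives $\boldsymbol {\mu }=(1/11,2/11)$ with no constrained coordinate, so $S=\mathbb {R}^{2}$, while $\mathbf {p}=(3/11,6/11,2/11)$ gives $\boldsymbol {\mu }=(3/11,0)$ and $S=\mathbb {R}\times \mathbb {R}_{\ge 0}$.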

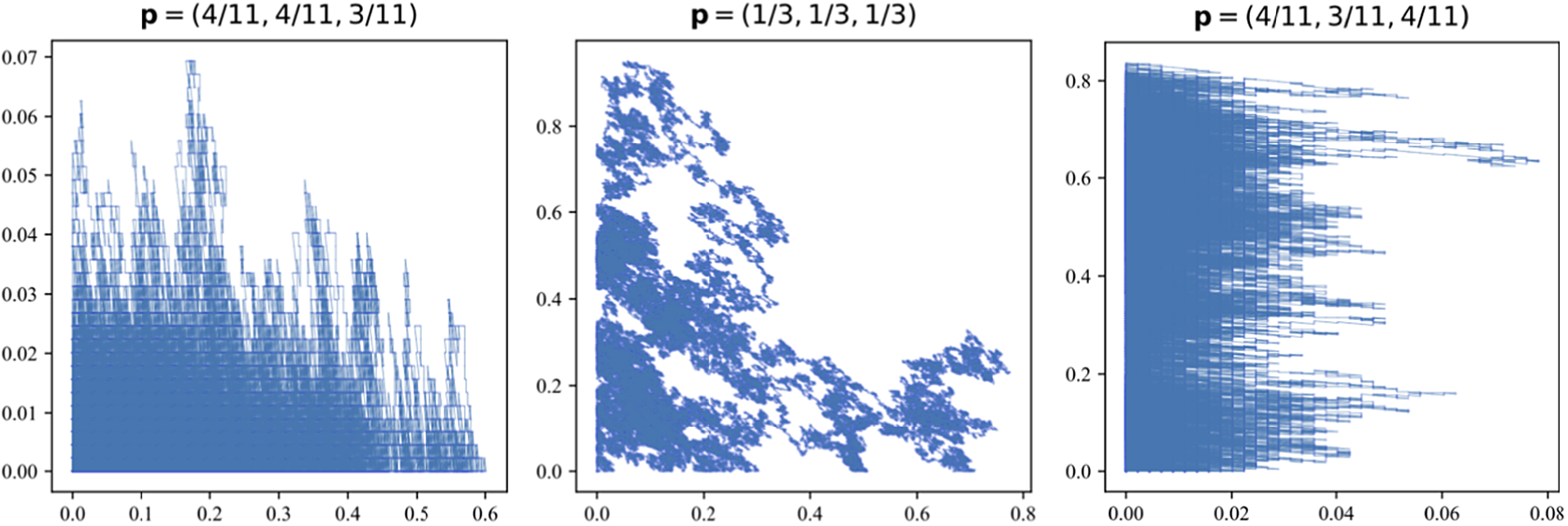

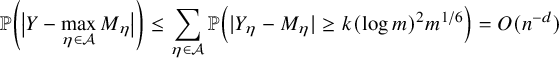

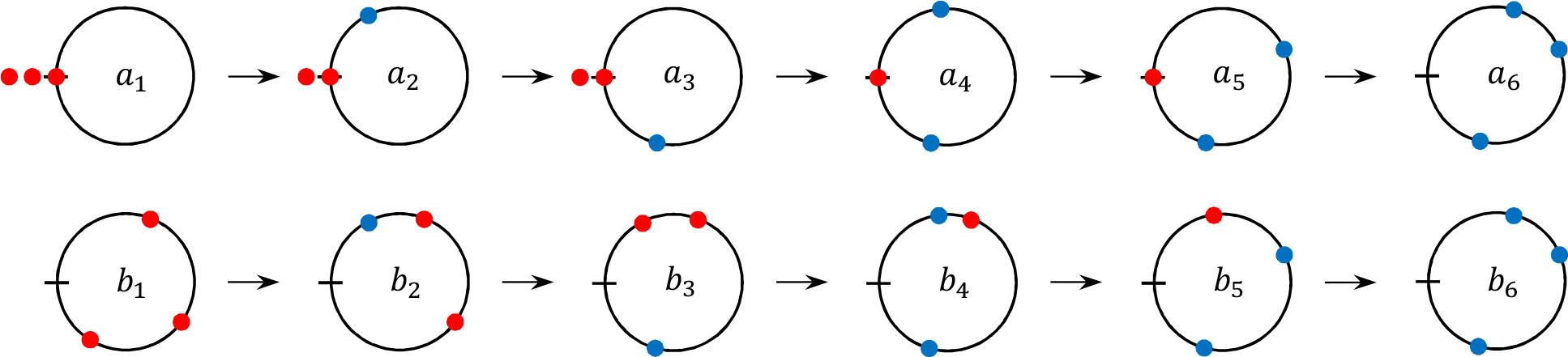

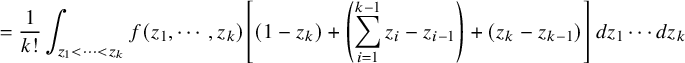

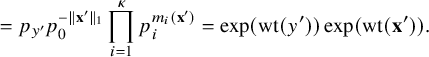

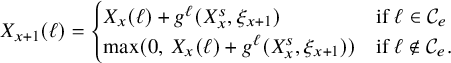

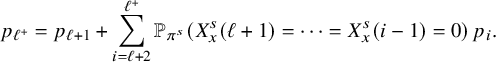

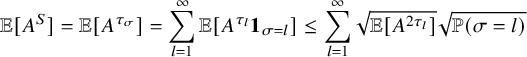

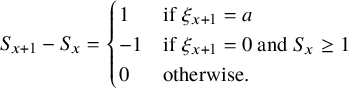

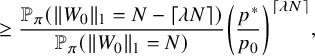

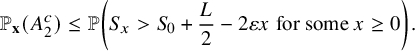

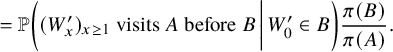

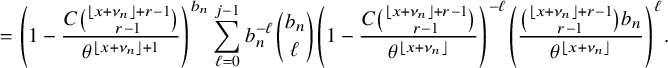

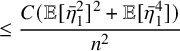

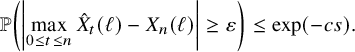

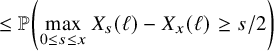

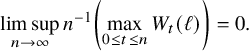

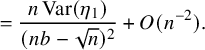

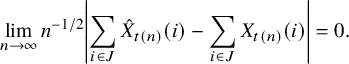

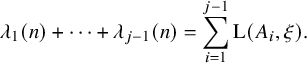

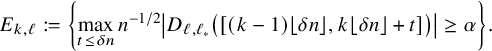

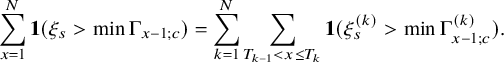

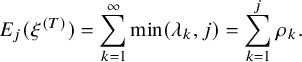

In Figures 2 and 3, we provide simulations of the carrier process $W_{x}=(W_{x}(1), W_{x}(2))$ for $\kappa =2$ in various regimes, which numerically illustrate Theorem 2.5. In Figure 2, we show the carrier process in diffusive scaling ($n^{-1/2}$) at three different critical ball densities $\mathbf {p}$. The carrier process in diffusive scaling converges weakly to an SRBM in $\mathbb {R}^{2}_{\ge 0}$, whose covariance matrix depends on $\mathbf {p}$ and can be degenerate. For instance, at $\mathbf {p}=(4/11,4/11,3/11)$, $W_{x}(2)$ is subcritical (since $p_{2}=3/11<4/11=p_{0}$), and $W_{x}(1)$ is critical, so the SRBM degenerates along the second axis.

Figure 2 Simulation of the carrier process $W_{x}$ in diffusive scaling for $\kappa =2$, $n=2\times 10^{5}$, at three critical ball densities (left) $\mathbf {p}=(4/11,4/11,3/11)$, (middle) $\mathbf {p}=(1/3,1/3,1/3)$ and (right) $\mathbf {p}=(4/11,3/11,4/11)$. In all cases, the process converges weakly to a semimartingale reflecting Brownian motion on $\mathbb {R}^{2}_{\ge 0}$ whose covariance matrix is nondegenerate in the middle and degenerate in the other two cases.

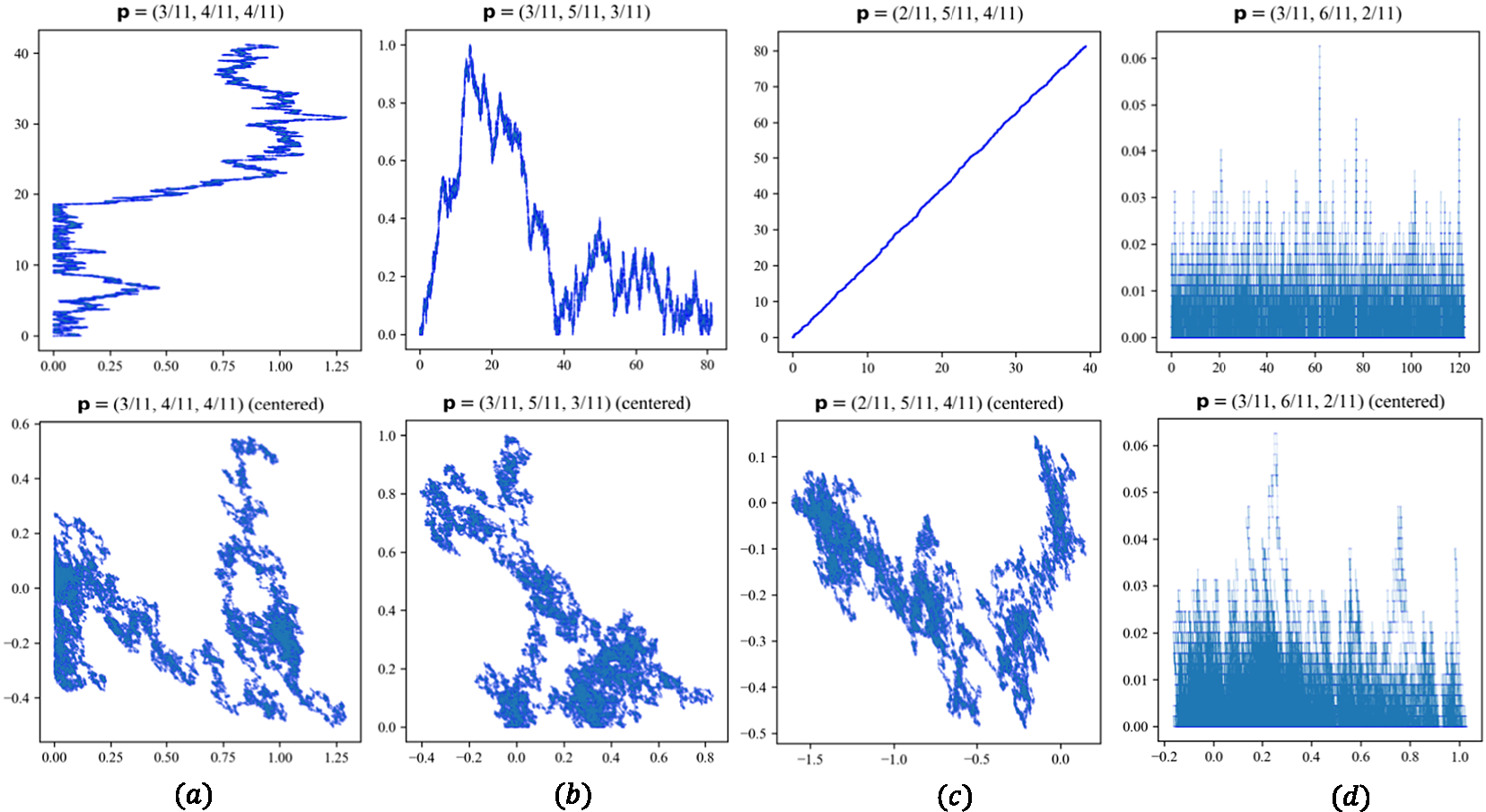

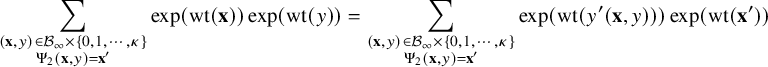

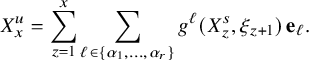

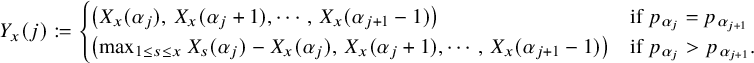

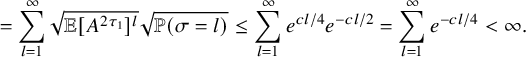

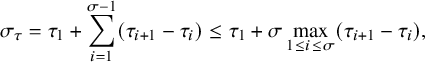

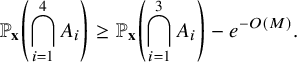

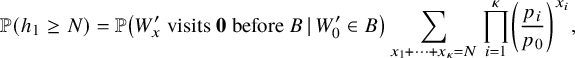

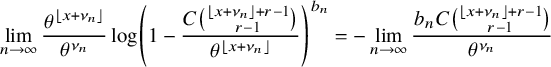

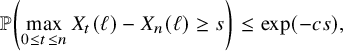

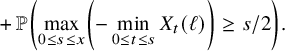

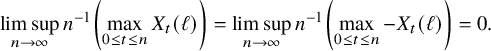

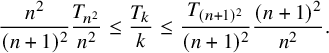

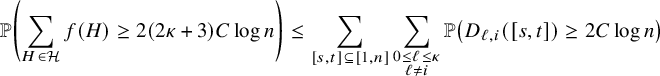

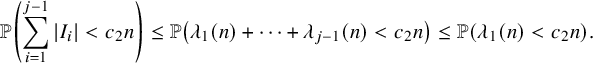

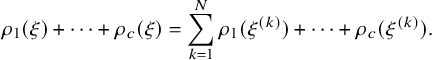

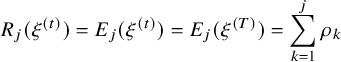

Figure 3 Simulation of the carrier process $W_{x}$ in diffusive scaling for $\kappa =2$, $n=2\times 10^{5}$, at four supercritical ball densities $(a)$ $\mathbf {p}=(3/11,4/11,4/11)$, $(b)$ $\mathbf {p}=(3/11,5/11,3/11)$, $(c)$ $\mathbf {p}=(2/11,5/11,4/11)$ and $(d)$ $\mathbf {p}=(3/11,6/11,2/11)$. The processes grow linearly in at least one dimension (the top row shows uncentered processes in diffusive scaling). As shown in the second row, after centering by the mean drift $\boldsymbol {\mu }$, the processes converge weakly to semimartingale reflecting Brownian motion on domains $(a)$ $\mathbb {R}_{\ge 0}\times \mathbb {R}$, $(b)$ $\mathbb {R}\times \mathbb {R}_{\ge 0}$, $(c)$ $\mathbb {R}^{2}$ (no reflection) and $(d)$ $\mathbb {R}\times \mathbb {R}_{\ge 0}$ (with a degenerate covariance matrix).

In Figure 3, we show the carrier process in diffusive scaling at four different supercritical ball densities $\mathbf {p}$. The carrier process has a nonzero drift $\boldsymbol {\mu }=(\mu _{1},\mu _{2})\in \mathbb {R}^{2}_{\ge 0}$. If $\mu _{1},\mu _{2}>0$, then the centered carrier process $W_{x}-x \boldsymbol {\mu }$ converges weakly to a 2-dimensional Brownian motion in diffusive scaling. If either $\mu _{1}$ or $\mu _{2}$ equals zero, then the diffusive scaling limit is an SRBM on $\mathbb {R}_{\ge 0} \times \mathbb {R}$ or $\mathbb {R}\times \mathbb {R}_{\ge 0}$, respectively, which is the domain S in the statement of Theorem 2.5 (ii). For instance, for $\mathbf {p}=(3/11, 6/11, 2/11)$ as in Figure 3 (d), the SRBM is on domain $S=\mathbb {R}\times \mathbb {R}_{\ge 0}$ and has a degenerate covariance matrix, since $W_{x}(2)$ is subcritical and vanishes in the diffusive scale.
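A simulation in the spirit of Figures 2 and 3 can be sketched in a few lines of Python. The update rule below is an assumption, not a restatement of the definition of the carrier process: on reading color $a$ (with an empty box treated as color $\kappa +1$), the carrier releases the largest color below $a$ that it holds, and then picks up the ball if $a\ne 0$. This rule was chosen because it is consistent with the drift $\boldsymbol {\mu }$ in Theorem 2.5 for the densities listed in Figure 3; the actual definition may differ in detail. The function name `simulate_carrier` is hypothetical, and $n$ is kept smaller than in the figures to keep the run short.

```python
import random


def simulate_carrier(p, n, seed=0):
    """Simulate n steps of an ASSUMED carrier update rule with i.i.d. colors.

    p = (p_0, ..., p_kappa) are the color densities; the returned list holds
    the carrier state (W(1), ..., W(kappa)) after 0, 1, ..., n sites.
    """
    kappa = len(p) - 1
    rng = random.Random(seed)
    colors = rng.choices(range(kappa + 1), weights=p, k=n)
    w = [0] * kappa  # w[i - 1] = number of color-i balls in the carrier
    path = [tuple(w)]
    for a in colors:
        top = kappa + 1 if a == 0 else a  # empty box acts like color kappa+1
        # Assumed rule: release the largest held color strictly below `top`.
        for i in range(top - 1, 0, -1):
            if w[i - 1] > 0:
                w[i - 1] -= 1
                break
        if a != 0:
            w[a - 1] += 1  # pick up the incoming ball
        path.append(tuple(w))
    return path


n = 20000
path = simulate_carrier((2 / 11, 5 / 11, 4 / 11), n, seed=1)
# Linear scaling: compare W_n / n with mu = (1/11, 2/11) for this density.
print([c / n for c in path[-1]])
# Diffusive scaling: centered endpoint, (W_n - n * mu) / sqrt(n).
mu = (1 / 11, 2 / 11)
print([(c - n * m) / n ** 0.5 for c, m in zip(path[-1], mu)])
```

Plotting `path` after centering by $x\boldsymbol {\mu }$ and rescaling by $n^{-1/2}$ gives pictures of the kind described for the second row of Figure 3, under the stated assumption on the update rule.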

Using the linear and the diffusive scaling limit of the carrier process in Theorem 2.5, we obtain a sharp scaling limit of soliton lengths for the independence model in the critical and subcritical regimes. These results are stated in Theorems 2.6 and 2.7 below.

Theorem 2.6 (The independence model – Critical regime).

Suppose $p^{*}=p_{0}$. Then for each fixed $j\ge 1$, $\lambda _{j}(n)=\Theta (\sqrt {n})$. Furthermore, let $\Sigma $ be a $\kappa \times \kappa $ covariance matrix defined explicitly in (177) and $R=\mathrm {tridiag}_{\kappa \times \kappa }(0,1,-1)$. Let

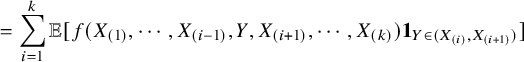

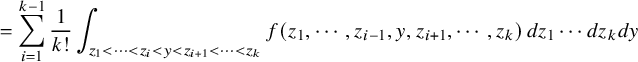

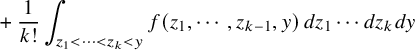

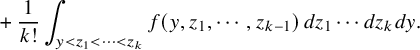

![]() $\mathcal {W}$