1. Introduction

In this paper, we continue the line of research from [Reference Benjamini, Cohen and Shinkar2, Reference Rao and Shinkar15, Reference Johnston and Scott9] studying geometric similarities between different subsets of the hypercube $\mathcal{H}_n = \{0,1\}^n$. Given a set $A \subseteq \mathcal{H}_n$ of size $\left |A\right | = 2^{n-1}$ and a bijection $\phi \,:\, \mathcal{H}_{n-1} \to A$, we define the average stretch of $\phi$ as

\begin{equation*}{\sf avgStretch}(\phi ) = {\mathbb E}_{x \sim x^{\prime}}\left[{\sf dist}(\phi (x),\phi (x^{\prime}))\right],\end{equation*}

where ${\sf dist}(x,y)$ is defined as the number of coordinates $i \in [n]$ such that $x_i \neq y_i$, and the expectation is taken over uniformly random $x,x^{\prime} \in \mathcal{H}_{n-1}$ that differ in exactly one coordinate. [Footnote 1]
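To make the definition concrete, the following is a minimal Python sketch (an illustration only, not part of the cited works) that computes ${\sf avgStretch}$ by brute force for small $n$. The helper names `dist` and `avg_stretch` and the two example maps are hypothetical and introduced only for this example; points of the hypercube are represented as tuples of bits.

```python
from itertools import product


def dist(x, y):
    """Hamming distance between two equal-length bit tuples."""
    return sum(a != b for a, b in zip(x, y))


def avg_stretch(phi, m):
    """Average of dist(phi(x), phi(x')) over all x in {0,1}^m and all
    x' obtained from x by flipping exactly one coordinate."""
    total = 0
    count = 0
    for x in product((0, 1), repeat=m):
        for i in range(m):
            x_flipped = x[:i] + (1 - x[i],) + x[i + 1:]
            total += dist(phi[x], phi[x_flipped])
            count += 1
    return total / count


m = 2  # domain is H_{n-1} with n = 3

# Example 1 (assumed for illustration): A is the subcube {y : y_n = 0}.
phi_subcube = {x: x + (0,) for x in product((0, 1), repeat=m)}

# Example 2 (assumed for illustration): A is the set of even-weight
# strings, and phi appends the parity bit of x.
phi_parity = {x: x + (sum(x) % 2,) for x in product((0, 1), repeat=m)}

print(avg_stretch(phi_subcube, m))  # 1.0
print(avg_stretch(phi_parity, m))   # 2.0
```

In the second example every edge of $\mathcal{H}_{n-1}$ is mapped to a pair at distance exactly 2, since flipping one input bit also flips the appended parity bit.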

This notion is motivated by the study of the complexity of distributions [Reference Goldreich, Goldwasser and Nussboim5, Reference Viola17, Reference Lovett and Viola12]. In this line of research, given a distribution $\mathcal{D}$ on $\mathcal{H}_n$, the goal is to find a mapping $h \,:\, \mathcal{H}_m \to \mathcal{H}_n$ such that if $U_m$ is the uniform distribution over $\mathcal{H}_m$, then $h(U_m)$ is (close to) the distribution $\mathcal{D}$, and each output bit $h_i$ of the function $h$ is computable efficiently (e.g., computable in ${AC}_0$, i.e., by polynomial size circuits of constant depth).

Motivated by the goal of proving lower bounds for sampling from the uniform distribution on some set $A \subseteq \mathcal{H}_n$, Lovett and Viola [Reference Lovett and Viola12] suggested the restricted problem of proving that no bijection from $\mathcal{H}_{n-1}$ to $A$ can be computed in ${AC}_0$. Toward this goal they noted that it suffices to prove that any such bijection requires large average stretch. Indeed, by the structural results of [Reference Håstad7, Reference Boppana4, Reference Linial, Mansour and Nisan11] it is known that any such mapping $\phi$ that is computable by a polynomial size circuit of depth $d$ has ${\sf avgStretch}(\phi ) \lt \log (n)^{O(d)}$, and hence proving that any bijection requires super-polylogarithmic average stretch implies that it cannot be computed in ${AC}_0$. Proving a lower bound for sampling using this approach remains an open problem.

Studying this problem, [Reference Benjamini, Cohen and Shinkar2] have shown that if $n$ is odd, and $A_{{\sf maj}} \subseteq \mathcal{H}_n$ is the Hamming ball of density $1/2$, that is, $A_{{\sf maj}} = \{x \in \mathcal{H}_n \,:\, \sum _i x_i \gt n/2\}$, then there is an $O(1)$-bi-Lipschitz mapping from $\mathcal{H}_{n-1}$ to $A_{{\sf maj}}$, thus suggesting that proving a lower bound for a bijection from $\mathcal{H}_{n-1}$ to $A_{{\sf maj}}$ requires new ideas beyond the sensitivity-based structural results of [Reference Håstad7, Reference Boppana4, Reference Linial, Mansour and Nisan11] mentioned above. In [Reference Rao and Shinkar15] it has been shown that if a subset $A_{{\sf rand}}$ of density $1/2$ is chosen uniformly at random, then with high probability there is a bijection $\phi \,:\, \mathcal{H}_{n-1} \to A_{{\sf rand}}$ with ${\sf avgStretch}(\phi ) = O(1)$. This result has recently been improved by Johnston and Scott [Reference Johnston and Scott9], who showed that for a random set $A_{{\sf rand}} \subseteq \mathcal{H}_n$ of density $1/2$ there exists an $O(1)$-Lipschitz bijection from $\mathcal{H}_{n-1}$ to $A_{{\sf rand}}$ with high probability.

The following problem was posed in [Reference Benjamini, Cohen and Shinkar2], and repeated in [Reference Rao and Shinkar15, Reference Johnston and Scott9].

Problem 1.1. Exhibit a subset $A \subseteq \mathcal{H}_n$ of density $1/2$ such that any bijection $\phi \,:\, \mathcal{H}_{n-1} \to A$ has ${\sf avgStretch}(\phi ) = \omega (1)$, or prove that no such subset exists. [Footnote 2]

Remark. Note that it is easy to construct a set of density $1/2$ such that any bijection $\phi \,:\, \mathcal{H}_{n-1} \to A$ must have a worst case stretch of at least $n/2$. For example, for odd $n$ consider the set $A = \{y \in \mathcal{H}_n \,:\, n/2 \lt \sum _i y_i \lt n\} \cup \{0^n\}$. Then any bijection $\phi \,:\, \mathcal{H}_{n-1} \to A$ must map some point $x \in \mathcal{H}_{n-1}$ to $0^n$, while all neighbours $x^{\prime}$ of $x$ are mapped to some $\phi (x^{\prime})$ with weight at least $n/2$. Hence, the worst case stretch of $\phi$ is at least $n/2$. In contrast, Problem 1.1 does not seem to have a non-trivial solution.

To rephrase Problem 1.1, we are interested in determining a tight upper bound on the $\sf avgStretch$ that holds uniformly for all sets $A \subseteq \mathcal{H}_n$ of density $1/2$. Note that since the diameter of $\mathcal{H}_n$ is $n$, for any set $A \subseteq \mathcal{H}_n$ of density $1/2$ and any bijection $\phi \,:\, \mathcal{H}_{n-1} \to A$ it holds that ${\sf avgStretch}(\phi ) \leq n$. It is natural to ask how tight this bound is, that is, whether there exists $A \subseteq \mathcal{H}_n$ of density $1/2$ such that any bijection $\phi \,:\, \mathcal{H}_{n-1} \to A$ requires linear average stretch.

It is consistent with our current knowledge (though hard to believe) that for any set $A$ of density $1/2$ there is a mapping $\phi \,:\, \mathcal{H}_{n-1} \to A$ with ${\sf avgStretch}(\phi ) \leq 2$. The strongest lower bound we are aware of is for the set $A_{\oplus } = \left\{x \in \mathcal{H}_n \,:\, \sum _{i}x_i \equiv 0 \pmod 2\right\}$. Note that the distance between any two distinct points in $A_{\oplus }$ is at least 2, and hence ${\sf avgStretch}(\phi ) \geq 2$ for any bijection $\phi \,:\, \mathcal{H}_{n-1} \to A_{\oplus }$. Proving a lower bound strictly greater than 2 for any set $A$ is an open problem, and prior to this work we were not aware of any sublinear upper bounds that apply uniformly to all sets.

Most of the research on metric embeddings that we are aware of focuses on worst case stretch. For a survey on metric embeddings of finite spaces see [Reference Linial10]. There has been a lot of research on the question of embedding into the Boolean cube. For example, see [Reference Angel and Benjamini1, Reference Håstad, Leighton and Newman8] for work on embeddings between random subsets of the Boolean cube, and [Reference Graham6] for isometric embeddings of arbitrary graphs into the Boolean cube.

1.1. A uniform upper bound on the average stretch

We prove a non-trivial uniform upper bound on the average stretch of a mapping $\phi \,:\, \mathcal{H}_{n-1} \to A$ that applies to all sets $A \subseteq \mathcal{H}_n$ of density $1/2$.

Theorem 1.2. For any set $A \subseteq \mathcal{H}_n$ of density $\mu _n(A) = 1/2$, there exists a bijection $\phi \,:\, \mathcal{H}_{n-1} \to A$ such that ${\sf avgStretch}(\phi ) = O\left(\sqrt{n}\right)$.

Toward this goal we prove a stronger result bounding the average transportation distance between two arbitrary sets of density $1/2$. Specifically, we prove the following theorem.

Theorem 1.3. For any two sets $A,B \subseteq \mathcal{H}_n$ of density $\mu _n(A) = \mu _n(B) = 1/2$, there exists a bijection $\phi \,:\, A \to B$ such that ${\mathbb E}\left[{\sf dist}\left(x,\phi (x)\right)\right] \leq \sqrt{2n}$.

Note that Theorem 1.2 follows immediately from Theorem 1.3 by the following simple argument.

Proposition 1.4. Fix a bijection $\phi \,:\, \mathcal{H}_{n-1} \to A$. Then ${\sf avgStretch}(\phi ) \leq 2{\mathbb E}_{x \in \mathcal{H}_{n-1}}[{\sf dist}(x,\phi (x))] + 1$.

Proof. Using the triangle inequality we have

\begin{eqnarray*}
{\sf avgStretch}(\phi ) & = & {\mathbb E}_{\substack{x \in \mathcal{H}_{n-1} \\ i \in [n-1]}}[{\sf dist}(\phi (x),\phi (x+e_i))] \\
& \leq & {\mathbb E}[{\sf dist}(x, \phi (x)) + {\sf dist}(x, x+e_i) + {\sf dist}(x+e_i,\phi (x+e_i))] \\
& = & {\mathbb E}[{\sf dist}(x,\phi (x))] + 1 + {\mathbb E}[{\sf dist}(x+e_i,\phi (x+e_i))] \\
& = & 2{\mathbb E}[{\sf dist}(x,\phi (x))] + 1,
\end{eqnarray*}

as required.
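As a sanity check of Proposition 1.4, the following Python sketch (an illustration only) samples a random set $A$ of density $1/2$ together with a random bijection and compares both sides of the inequality using exact rational arithmetic. It assumes that a point $x \in \mathcal{H}_{n-1}$ is identified with $x \circ 0 \in \mathcal{H}_n$ when measuring ${\sf dist}(x,\phi (x))$; this identification, like the helper name `stretch_and_transport`, is an assumption made for the example.

```python
import random
from fractions import Fraction
from itertools import product


def dist(x, y):
    """Hamming distance between two equal-length bit tuples."""
    return sum(a != b for a, b in zip(x, y))


def stretch_and_transport(n, seed=0):
    """Return (avgStretch(phi), E[dist(x, phi(x))]) for a random
    bijection phi from H_{n-1} onto a random set of density 1/2."""
    rng = random.Random(seed)
    domain = list(product((0, 1), repeat=n - 1))
    cube = list(product((0, 1), repeat=n))
    image = rng.sample(cube, len(domain))  # random A, in random order
    phi = dict(zip(domain, image))

    total = 0
    for x in domain:
        for i in range(n - 1):
            y = x[:i] + (1 - x[i],) + x[i + 1:]
            total += dist(phi[x], phi[y])
    avg_stretch = Fraction(total, len(domain) * (n - 1))

    # Assumption: x in H_{n-1} is embedded in H_n by appending a 0.
    transport = Fraction(
        sum(dist(x + (0,), phi[x]) for x in domain), len(domain)
    )
    return avg_stretch, transport


for n in range(2, 7):
    s, t = stretch_and_transport(n)
    assert s <= 2 * t + 1
```

The inequality holds for every bijection, so the assertion does not depend on the random seed.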

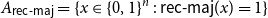

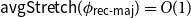

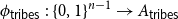

1.2. Bounds on the average stretch for specific sets

Next, we study two specific subsets of $\mathcal{H}_n$ defined by Boolean functions commonly studied in the field “Analysis of Boolean functions” [Reference O’Donnell13]. Specifically, we study two monotone noise-sensitive functions: the recursive majority of 3’s, and the tribes function.

It was suggested in [Reference Benjamini, Cohen and Shinkar2] that the set of ones of these functions $A_f = f^{-1}(1)$ may be such that any mapping $\phi \,:\, \mathcal{H}_{n-1} \to A_f$ requires large $\sf avgStretch$. We show that for the recursive majority function there is a mapping $\phi _{{\textsf{rec-maj}}} \,:\, \mathcal{H}_{n-1} \to{\textsf{rec-maj}}^{-1}(1)$ with ${\sf avgStretch}(\phi _{{\textsf{rec-maj}}}) = O(1)$. For the tribes function we show a mapping $\phi _{{\sf tribes}} \,:\, \mathcal{H}_{n-1} \to{\sf tribes}^{-1}(1)$ with ${\sf avgStretch}\left(\phi _{{\sf tribes}}\right) = O(\log (n))$. Below we formally define the functions, and discuss our results.

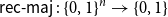

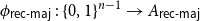

1.2.1. Recursive majority of 3’s

The recursive majority of 3’s function is defined as follows.

Definition 1.5. Let $k \in{\mathbb N}$ be a positive integer. Define the function recursive majority of 3’s ${\textsf{rec-maj}}_{k} \,:\, \mathcal{H}_{3^k} \to \{0,1\}$ as follows.

For $k = 1$ the function ${\textsf{rec-maj}}_{1}$ is the majority function on the $3$ input bits.

For $k \gt 1$ the function ${\textsf{rec-maj}}_{k} \,:\, \mathcal{H}_{3^k} \to \{0,1\}$ is defined recursively as follows. For each $x \in \mathcal{H}_{3^k}$ write $x = x^{(1)} \circ x^{(2)} \circ x^{(3)}$, where $x^{({r})} \in \mathcal{H}_{3^{k-1}}$ for each ${r} \in [3]$. Then, ${\textsf{rec-maj}}_{k}(x) ={\sf maj}\left({\textsf{rec-maj}}_{k-1}\big(x^{(1)}\big),{\textsf{rec-maj}}_{k-1}\big(x^{(2)}\big),{\textsf{rec-maj}}_{k-1}\big(x^{(3)}\big)\right)$.

Note that ${\textsf{rec-maj}}_{k}(x) = 1-{\textsf{rec-maj}}_{k}({\textbf{1}}-x)$ for all $x \in \mathcal{H}_n$, where $n = 3^k$, and hence the density of the set ${A_{\textsf{rec-maj}_{k}}} = \left\{x \in \mathcal{H}_{n} \,:\,{\textsf{rec-maj}}_{k}(x) = 1\right\}$ is $\mu _n\left({A_{\textsf{rec-maj}_{k}}}\right) = 1/2$. We prove the following result regarding the set $A_{\textsf{rec-maj}_{k}}$.
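The recursive definition and the self-duality claim can be checked by brute force for small $k$. The Python sketch below is an illustration only; the function names `maj3`, `rec_maj`, and `density` are hypothetical helpers, and inputs are bit tuples of length $3^k$.

```python
from itertools import product


def maj3(a, b, c):
    """Majority of three bits."""
    return 1 if a + b + c >= 2 else 0


def rec_maj(x):
    """Recursive majority of 3's on a bit tuple of length 3^k, k >= 1."""
    if len(x) == 3:
        return maj3(*x)
    third = len(x) // 3
    return maj3(
        rec_maj(x[:third]),
        rec_maj(x[third:2 * third]),
        rec_maj(x[2 * third:]),
    )


def density(k):
    """Fraction of inputs in H_{3^k} on which rec_maj equals 1."""
    n = 3 ** k
    ones = sum(rec_maj(x) for x in product((0, 1), repeat=n))
    return ones / 2 ** n


# Self-duality: rec_maj(x) = 1 - rec_maj(1 - x), checked for k = 2.
for x in product((0, 1), repeat=9):
    complement = tuple(1 - bit for bit in x)
    assert rec_maj(x) == 1 - rec_maj(complement)

print(density(1), density(2))  # 0.5 0.5
```

The enumeration is exponential in $n = 3^k$, so it is only practical for $k \leq 2$.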

Theorem 1.6. Let $k$ be a positive integer, let $n = 3^k$, and let ${A_{\textsf{rec-maj}_{k}}} = \left\{x \in \mathcal{H}_{n} \,:\,{\textsf{rec-maj}}_{k}(x) = 1\right\}$. There exists a mapping $\phi _{{\textsf{rec-maj}}_{k}} \,:\, \mathcal{H}_{n-1} \to{A_{\textsf{rec-maj}_{k}}}$ such that ${\sf avgStretch}\left(\phi _{{\textsf{rec-maj}}_{k}}\right) \leq 20$.

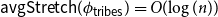

1.2.2. The tribes function

The tribes function is defined as follows.

Definition 1.7. Let $s, w \in{\mathbb N}$ be two positive integers, and let $n = s \cdot w$. The function ${\sf tribes} \,:\, \mathcal{H}_n \to \{0,1\}$ is defined as a DNF consisting of $s$ disjoint clauses of width $w$.

That is, the function $\sf tribes$ partitions $n = sw$ inputs into $s$ disjoint ‘tribes’ each of size $w$, and returns 1 if and only if at least one of the tribes ‘votes’ 1 unanimously.

It is clear that $\mathbb{P}_{x \in \mathcal{H}_n}[{\sf tribes}(x) = 1] = 1 - (1-2^{-w})^s$. The interesting settings of parameters $w$ and $s$ are such that the function is close to balanced, that is, this probability is close to $1/2$. Given $w \in{\mathbb N}$, let $s = s_w = \ln (2) 2^w \pm \Theta (1)$ be the largest integer such that $1 - (1-2^{-w})^s \leq 1/2$. For such a choice of the parameters we have $\mathbb{P}_{x \in \mathcal{H}_n}[{\sf tribes}(x) = 1] = \frac{1}{2} - O\left (\frac{\log (n)}{n}\right )$ (see, e.g., [Reference O’Donnell13, Section 4.2]).
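The choice of $s_w$ can be illustrated with a short Python sketch (an illustration only; the names `tribes`, `acceptance_probability`, and `largest_s` are hypothetical helpers). It computes the largest $s$ with $1 - (1-2^{-w})^s \leq 1/2$ using exact rationals, and checks the closed-form probability against a brute-force count for a small instance.

```python
from fractions import Fraction
from itertools import product


def tribes(x, s, w):
    """DNF with s disjoint clauses of width w on a bit tuple of length s*w."""
    return int(any(all(x[j * w:(j + 1) * w]) for j in range(s)))


def acceptance_probability(s, w):
    """Exact value of P[tribes(x) = 1] = 1 - (1 - 2^-w)^s."""
    return 1 - (1 - Fraction(1, 2 ** w)) ** s


def largest_s(w):
    """Largest integer s with 1 - (1 - 2^-w)^s <= 1/2."""
    s = 0
    while acceptance_probability(s + 1, w) <= Fraction(1, 2):
        s += 1
    return s


w = 2
s = largest_s(w)  # s = 2, so n = 4
n = s * w
count = sum(tribes(x, s, w) for x in product((0, 1), repeat=n))

# The brute-force count agrees with the closed form: 7/16 for w = 2.
assert Fraction(count, 2 ** n) == acceptance_probability(s, w)
print(s, acceptance_probability(s, w))  # 2 7/16
```

For $w = 3$ the same routine gives $s = 5$, compared with $\ln (2) \cdot 2^3 \approx 5.55$.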

Consider the set ${A_{{\sf tribes}}} = \left\{x \in \mathcal{H}_n \,:\,{\sf tribes}(x) = 1\right\}$. Since the density of $A_{{\sf tribes}}$ is not necessarily equal to $1/2$, we cannot talk about a bijection from $\mathcal{H}_{n-1}$ to $A_{{\sf tribes}}$. In order to overcome this technical issue, let $A^*_{{\sf tribes}}$ be an arbitrary superset of $A_{{\sf tribes}}$ of density $1/2$. We prove that there is a mapping $\phi _{\sf tribes}$ from $\mathcal{H}_{n-1}$ to $A^*_{{\sf tribes}}$ with average stretch ${\sf avgStretch}(\phi _{\sf tribes}) = O(\log (n))$. In fact, we prove a stronger result, namely that the average transportation distance of $\phi _{\sf tribes}$ is $O(\log (n))$.

Theorem 1.8. Let $w$ be a positive integer, and let $s$ be the largest integer such that $1 - (1-2^{-w})^s \leq 1/2$. For $n=s \cdot w$ let ${\sf tribes} \,:\, \mathcal{H}_n \to \{0,1\}$ be defined as a DNF consisting of $s$ disjoint clauses of width $w$. Let ${A_{{\sf tribes}}} = \left\{x \in \mathcal{H}_n \,:\,{\sf tribes}(x) = 1\right\}$, and let ${A^*_{{\sf tribes}}} \subseteq \mathcal{H}_n$ be an arbitrary superset of $A_{{\sf tribes}}$ of density $\mu _n\left({A^*_{{\sf tribes}}}\right) = 1/2$. Then, there exists a bijection $\phi _{{\sf tribes}} \,:\, \mathcal{H}_{n-1} \to{A^*_{{\sf tribes}}}$ such that ${\mathbb E}[{\sf dist}(x,\phi _{{\sf tribes}}(x))] = O(\log (n))$. In particular, ${\sf avgStretch}(\phi _{\sf tribes}) = O(\log (n))$.

2. Proof of Theorem 1.3

We provide two different proofs of Theorem 1.3. The first proof, in subsection 2.1, shows a slightly weaker bound of $O\left (\sqrt{n \ln (n)}\right )$ on the average stretch using the Gale-Shapley result on the stable marriage problem. The idea of using the stable marriage problem was suggested in [Reference Benjamini, Cohen and Shinkar2], and we implement this approach. Then, in subsection 2.2, we show the bound of $O\left(\sqrt{n}\right)$ by relating the average stretch of a mapping between two sets to known estimates on the Wasserstein distance on the hypercube.

2.1. Upper bound on the average transportation distance using stable marriage

Recall the Gale-Shapley theorem on the stable marriage problem. In the stable marriage problem we are given two sets of elements $A$ and $B$, each of size $N$. For each element $a \in A$ (resp. $b \in B$) we have a ranking of the elements of $B$ (resp. $A$) given as a bijection $rk_a \,:\, B \to [N]$ (resp. $rk_b \,:\, A \to [N]$) representing the preferences of $a$ (resp. $b$). A matching (or a bijection) $\phi \,:\, A \to B$ is said to be unstable if there are $a,a^{\prime} \in A$ and $b,b^{\prime} \in B$ such that $\phi (a) = b^{\prime}$, $\phi (a^{\prime}) = b$, but $rk_a(b) \lt rk_a(b^{\prime})$ and $rk_b(a) \lt rk_b(a^{\prime})$; that is, both $a$ and $b$ would prefer to be mapped to each other over their mappings given by $\phi$. We say that a matching $\phi \,:\, A \to B$ is stable otherwise.

Theorem 2.1. (Gale-Shapley theorem) For any two sets $A$ and $B$ of equal size and any choice of rankings for each $a \in A$ and $b \in B$ there exists a stable matching $m \,:\, A \to B$.

For the proof of Theorem 1.3 the sets $A$ and $B$ are subsets of $\mathcal{H}_n$ of density $1/2$. We define the preferences between points based on the distances between them in $\mathcal{H}_n$. That is, for each $a \in A$ we have $rk_a(b) \lt rk_a(b^{\prime})$ if and only if ${\sf dist}(a,b) \lt{\sf dist}(a,b^{\prime})$ with ties broken arbitrarily. Similarly, for each $b \in B$ we have $rk_b(a) \lt rk_b(a^{\prime})$ if and only if ${\sf dist}(a,b) \lt{\sf dist}(a^{\prime},b)$ with ties broken arbitrarily.
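The distance-based preferences can be fed to the standard deferred-acceptance procedure of Gale and Shapley. The Python sketch below is an illustration only and is not taken from the paper: the names `stable_matching` and `is_stable` are hypothetical, and ties are broken by position in the input lists, which is one arbitrary choice among many.

```python
import random
from itertools import product


def dist(x, y):
    """Hamming distance between two equal-length bit tuples."""
    return sum(a != b for a, b in zip(x, y))


def stable_matching(A, B):
    """Deferred acceptance with A proposing; everyone prefers closer
    points, ties broken by index in the input lists (an assumption)."""
    idx_a = {a: i for i, a in enumerate(A)}
    idx_b = {b: i for i, b in enumerate(B)}

    def rank_of_b_for(a, b):
        return (dist(a, b), idx_b[b])

    def rank_of_a_for(b, a):
        return (dist(a, b), idx_a[a])

    prefs = {a: sorted(B, key=lambda b, a=a: rank_of_b_for(a, b)) for a in A}
    next_choice = {a: 0 for a in A}
    holder = {}  # b -> the a currently matched to b
    free = list(A)
    while free:
        a = free.pop()
        b = prefs[a][next_choice[a]]
        next_choice[a] += 1
        current = holder.get(b)
        if current is None:
            holder[b] = a
        elif rank_of_a_for(b, a) < rank_of_a_for(b, current):
            holder[b] = a
            free.append(current)
        else:
            free.append(a)
    return {a: b for b, a in holder.items()}


def is_stable(phi, A, B):
    """True if no pair (a, b) is strictly closer to each other than
    each of them is to its partner under phi."""
    inverse = {b: a for a, b in phi.items()}
    for a in A:
        for b in B:
            if dist(a, b) < dist(a, phi[a]) and dist(a, b) < dist(inverse[b], b):
                return False
    return True


rng = random.Random(1)
cube = list(product((0, 1), repeat=4))
A = rng.sample(cube, 8)  # two random sets of density 1/2 in H_4
B = rng.sample(cube, 8)
phi = stable_matching(A, B)
assert is_stable(phi, A, B)
```

The procedure makes at most $N^2$ proposals, so it is polynomial in the sizes of $A$ and $B$, although these sizes are themselves exponential in $n$.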

Let $\phi \,:\, A \to B$ be a bijection. We show that if ${\mathbb E}_{x \in A}[{\sf dist}(x, \phi (x))]\gt 3k$ for $k=\left \lceil \sqrt{n \ln (n)}\right \rceil$, then $\phi$ is not a stable matching. Consider the set

\begin{equation*}F = \left\{x \in A \,:\, {\sf dist}(x,\phi (x)) \geq k\right\}.\end{equation*}

Note that since the diameter of $\mathcal{H}_n$ is $n$, and ${\mathbb E}_{x \in A}[{\sf dist}(x, \phi (x))] \gt 3k$, it follows that $\mu _n(F) \gt \frac{k}{n}$. Indeed, we have $3k \lt{\mathbb E}_{x \in A}[{\sf dist}(x, \phi (x))] \leq n \cdot \frac{\mu _n(F)}{\mu _n(A)} + k \cdot \left(1-\frac{\mu _n(F)}{\mu _n(A)}\right) \leq n \cdot \frac{\mu _n(F)}{\mu _n(A)} + k$, and thus $\mu _n(F) \gt \frac{2k}{n} \cdot \mu _n(A) = \frac{k}{n}$, since $\mu _n(A) = 1/2$. Next, we use Talagrand’s concentration inequality.

Theorem 2.2. ([Reference Talagrand16, Proposition 2.1.1]) Let $k \leq n$ be two positive integers, and let $F \subseteq \mathcal{H}_n$. Let $F_{\geq k} = \left\{x \in \mathcal{H}_n \,:\,{\sf dist}(x,y) \geq k \ \forall y \in F \right\}$ be the set of all $x \in \mathcal{H}_n$ whose distance from $F$ is at least $k$. Then $\mu _n\left(F_{\geq k}\right) \leq e^{-k^2/n}/ \mu _n(F)$.

By Theorem 2.2 we have $\mu _n\left(F_{\geq k}\right) \leq e^{-k^2/n}/ \mu _n(F)$, and hence, for $k = \left \lceil \sqrt{n \ln (n)}\right \rceil$ it holds that

\begin{equation*} \mu _n\left(F_{\geq k}\right) \leq \frac{e^{-k^2/n}}{\mu _n(F)} < \frac{1/n}{k/n} = \frac{1}{k}. \end{equation*}

In particular, since $\mu _n(\phi (F)) =\mu _n(F) > k/n > 1/k \geq \mu _n\left(F_{\geq k}\right)$, there is some $b \in \phi (F)$ that does not belong to $F_{\geq k}$. That is, there are some $a \in F$ and $b \in \phi (F)$ such that ${{\sf dist}}(a,b) < k$. On the other hand, for $a^{\prime} = \phi ^{-1}(b)$, by the definition of $F$ we have ${{\sf dist}}(a,\phi (a)) \geq k$ and ${{\sf dist}}(a^{\prime},b=\phi (a^{\prime})) \geq k$, and hence $\phi$ is not stable, as $a$ and $b$ prefer to be mapped to each other over their current matching. Therefore, in a stable matching ${\mathbb E}_{x \in A}[{{\sf dist}}(x, \phi (x))] \leq 3\left \lceil \sqrt{n \ln (n)}\right \rceil$, and by the Gale-Shapley theorem such a matching does, indeed, exist.
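The notion of stability used in this argument can be checked on a small instance. The following is a minimal sketch, not the Gale-Shapley procedure itself: it assumes a greedy stand-in that scans all pairs in order of increasing distance, which also produces a matching with no blocking pair when preferences are given by distance. The names `greedy_stable_matching` and `blocking_pairs`, and the choice of $A$ and $B$ as the heavy and light halves of $\mathcal{H}_5$, are illustrative assumptions.

```python
from itertools import product

def dist(x, y):
    """Hamming distance between two equal-length 0/1 tuples."""
    return sum(a != b for a, b in zip(x, y))

def greedy_stable_matching(A, B):
    """Match pairs in order of increasing distance (assumed stand-in for Gale-Shapley)."""
    phi, used = {}, set()
    for d, a, b in sorted((dist(a, b), a, b) for a in A for b in B):
        if a not in phi and b not in used:
            phi[a] = b
            used.add(b)
    return phi

def blocking_pairs(phi):
    """Pairs (a, b) that are strictly closer to each other than to their partners."""
    inv = {b: a for a, b in phi.items()}
    return [(a, b) for a in phi for b in inv
            if dist(a, b) < dist(a, phi[a]) and dist(a, b) < dist(inv[b], b)]

n = 5
cube = list(product((0, 1), repeat=n))
A = [x for x in cube if sum(x) >= 3]   # illustrative choice of A
B = [x for x in cube if sum(x) <= 2]   # illustrative choice of B
phi = greedy_stable_matching(A, B)
avg = sum(dist(a, b) for a, b in phi.items()) / len(A)
print("blocking pairs:", len(blocking_pairs(phi)), "average distance:", avg)
```

A pair is skipped by the greedy scan only if one of its endpoints was already matched at a distance no larger than the pair's own, so no pair can be blocking in the strict sense.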

2.2. Proof of Theorem 1.3 using transportation theory

Next we prove Theorem 1.3, by relating our problem to a known estimate on the Wasserstein distance between two measures on the hypercube. Recall that the $\ell _1$-Wasserstein distance between two measures $\mu$ and $\nu$ on $\mathcal{H}_n$ is defined as

\begin{equation*} W_1(\mu, \nu ) = \inf _{q} \sum _{x,y \in \mathcal{H}_n}{{\sf dist}}(x,y) \, q(x,y), \end{equation*}

where the infimum is taken over all couplings $q$ of $\mu$ and $\nu$, that is, $\sum _{y^{\prime}} q(x,y^{\prime}) = \mu (x)$ and $\sum _{x^{\prime}} q(x^{\prime},y) = \nu (y)$ for all $x,y \in \mathcal{H}_n$. That is, we consider an optimal coupling $q$ of $\mu$ and $\nu$ minimizing ${\mathbb E}_{(x,y) \sim q}[{{\sf dist}}(x,y)]$, the expected distance between $x$ and $y$, where $x$ is distributed according to $\mu$ and $y$ is distributed according to $\nu$.

We prove the theorem using the following two claims.

Claim 2.3. Let $\mu _A$ and $\mu _B$ be uniform measures over the sets $A$ and $B$ respectively. Then, there exists a bijection $\phi$ from $A$ to $B$ such that ${\mathbb E}[{{\sf dist}}(x,\phi (x))] = W_1(\mu _A,\mu _B)$.

Claim 2.4. Let $\mu _A$ and $\mu _B$ be uniform measures over the sets $A$ and $B$ respectively. Then $W_1(\mu _A, \mu _B) \leq \sqrt{2n}$.

Proof of Claim 2.3. Observe that any bijection $\phi$ from $A$ to $B$ naturally defines a coupling $q$ of $\mu _A$ and $\mu _B$, defined as

\begin{equation*} q(x, y) = \begin {cases} \frac {1}{|A|} & \mbox {if $x \in A$ and $y=\phi (x)$}, \\[4pt] 0 & \mbox {otherwise.} \end {cases} \end{equation*}

Therefore, $W_1(\mu _A,\mu _B) \leq{\mathbb E}_{x \in A}[{{\sf dist}}(x,\phi (x))]$.

For the other direction note that in the definition of $W_1$ we are looking for the infimum of the linear function $L(q) = \sum _{(x,y) \in A \times B}{{\sf dist}}(x,y) q(x,y)$, where the infimum is taken over the set of couplings of $\mu _A$ and $\mu _B$, which, after scaling by $|A|$, is the Birkhoff polytope of all $|A| \times |A|$ doubly stochastic matrices. By the Birkhoff-von Neumann theorem [Reference Birkhoff3, Reference von Neumann18] this polytope is the convex hull of the permutation matrices, which are precisely its extremal points. The optimum is attained at such an extremal point, and hence there exists a bijection $\phi$ from $A$ to $B$ such that $W_1(\mu _A,\mu _B) ={\mathbb E}[{{\sf dist}}(x,\phi (x))]$.
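To make Claim 2.3 concrete, here is a small brute-force sketch that searches over all bijections between two halves of $\mathcal{H}_3$ and reports the smallest average distance. The sets $A$ and $B$ (strings of weight at least 2 and at most 1) and the helper name `best_bijection` are assumptions chosen for illustration; exhaustive search is only feasible for tiny sets and is not the method used in the proof.

```python
from itertools import permutations, product
from math import sqrt

def dist(x, y):
    """Hamming distance between two equal-length 0/1 tuples."""
    return sum(a != b for a, b in zip(x, y))

def best_bijection(A, B):
    """Exhaustively find a bijection A -> B minimising the total distance."""
    best = min(permutations(B),
               key=lambda p: sum(dist(a, b) for a, b in zip(A, p)))
    return dict(zip(A, best))

n = 3
cube = list(product((0, 1), repeat=n))
A = [x for x in cube if sum(x) >= 2]   # illustrative choice of A
B = [x for x in cube if sum(x) <= 1]   # illustrative choice of B

phi = best_bijection(A, B)
avg = sum(dist(a, b) for a, b in phi.items()) / len(A)
print(avg, "<=", sqrt(2 * n))
```

By Claim 2.3 the value `avg` coincides with $W_1(\mu _A,\mu _B)$ for this pair of sets, and it sits below the $\sqrt{2n}$ bound of Claim 2.4.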

Proof of Claim 2.4. The proof of the claim follows rather directly from the techniques in transportation theory (see [Reference Lovett and Viola12, Section 3.4]). Specifically, using Definition 3.4.2 and combining Proposition 3.4.1, equation 3.4.42, and Proposition 3.4.3, where $\mathcal{X} = \{0,1\}$, and $\mu$ is the uniform distribution on $\mathcal{X}$, we have the following theorem.

Theorem 2.5. Let $\nu$ be an arbitrary distribution on the discrete hypercube $\mathcal{H}_n$, and let $\mu _n$ be the uniform distribution on $\mathcal{H}_n$. Then

\begin{equation*} W_1(\nu, \mu _n) \leq \sqrt{\frac{1}{2} n \cdot D(\nu \mid \mid \mu _n)}, \end{equation*}

where $D(\nu \mid \mid \mu )$ is the Kullback-Leibler divergence defined as $D(\nu \mid \mid \mu ) = \sum _{x} \nu (x) \log\! \left(\frac{\nu (x)}{\mu (x)}\right)$.

In particular, by letting $\nu = \mu _A$ be the uniform distribution over the set $A$ of cardinality $2^{n-1}$, we have $D(\mu _A \mid \mid \mu _n) = \sum _{x \in A} \mu _A(x)\log \left (\frac{\mu _A(x)}{\mu _n(x)}\right ) = \sum _{x \in A} \frac{1}{|A|}\log (2) = 1$, and hence $W_1(\mu _n,\mu _A) \leq \sqrt{\frac{1}{2} n \cdot D(\mu _A \mid \mid \mu _n)} = \sqrt{n/2}$. Analogously, we have $W_1(\mu _n,\mu _B) \leq \sqrt{n/2}$. Therefore, by the triangle inequality, we conclude that $W_1(\mu _A,\mu _B) \leq W_1(\mu _A,\mu _n) + W_1(\mu _n,\mu _B) \leq \sqrt{2n}$, as required.
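The arithmetic in this step can be replayed numerically. The sketch below assumes logarithms to base 2, as the identity $\log (2) = 1$ in the text suggests, and uses a hypothetical helper `kl_uniform_subset_bits`; the value $n = 10$ is an arbitrary illustration.

```python
from math import log2, sqrt

def kl_uniform_subset_bits(n, size):
    """D(mu_A || mu_n) in bits, for mu_A uniform on a set of `size` points of H_n."""
    mu_a = 1.0 / size        # mass of each point of A under mu_A
    mu_n = 2.0 ** -n         # mass of each point under the uniform measure on H_n
    return size * mu_a * log2(mu_a / mu_n)

n = 10
D = kl_uniform_subset_bits(n, 2 ** (n - 1))
half_bound = sqrt(0.5 * n * D)     # bound on W_1(mu_n, mu_A) from Theorem 2.5
full_bound = 2 * half_bound        # triangle inequality through mu_n
print(D, half_bound, full_bound, sqrt(2 * n))
```

The two applications of Theorem 2.5 each contribute $\sqrt{n/2}$, and their sum equals $\sqrt{2n}$.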

This completes the proof of Theorem 1.3.

3. Average stretch for recursive majority of 3’s

In this section we prove Theorem 1.6, showing a mapping from $\mathcal{H}_n$ to $A_{\textsf{rec-maj}_{k}}$ with constant average stretch. The key step in the proof is the following lemma.

Lemma 3.1. Let $k$ be a positive integer, and let $n = 3^k$. There exists $f_k \,:\, \mathcal{H}_n \to{A_{\textsf{rec-maj}_{k}}}$ satisfying the following properties.

1. $f_k(x) =x$ for all $x \in{A_{\textsf{rec-maj}_{k}}}$.

2. For each $x \in{A_{\textsf{rec-maj}_{k}}}$ there is a unique $z \in{Z_{\textsf{rec-maj}_{k}}}{\,:\!=\,} \mathcal{H}_n \setminus{A_{\textsf{rec-maj}_{k}}}$ such that $f_k(z) = x$.

3. For every $i \in [n]$ we have ${\mathbb E}_{x \in \mathcal{H}_n}\left[{{\sf dist}}\left(f_k(x),f_k\left(x+e_i\right)\right) \right] \leq 10$.

We postpone the proof of Lemma 3.1 for now, and show how it implies Theorem 1.6.

Proof of Theorem 1.6. Let $f_k$ be the mapping from Lemma 3.1. Define $\psi _0,\psi _1 \,:\, \mathcal{H}_{n-1} \to{A_{\textsf{rec-maj}_{k}}}$ as $\psi _b(x) = f_k(x \circ b)$, where $x \circ b \in \mathcal{H}_n$ is the string obtained from $x$ by appending to it $b$ as the $n$’th coordinate.

The mappings $\psi _0,\psi _1$ naturally induce a bipartite graph $G = (V,E)$, where $V = \mathcal{H}_{n-1} \cup{A_{\textsf{rec-maj}_{k}}}$ and $E = \left\{(x,\psi _b(x)) \,:\, x \in \mathcal{H}_{n-1}, b \in \{0,1\} \right\}$, possibly containing parallel edges. Note that by the first two items of Lemma 3.1 the graph $G$ is 2-regular. Indeed, for each $x \in \mathcal{H}_{n-1}$ the neighbours of $x$ are $N(x) = \{ \psi _0(x) = f_k(x \circ 0), \psi _1(x) = f_k(x \circ 1) \}$, and for each $y \in{A_{\textsf{rec-maj}_{k}}}$ there is a unique $x \in{A_{\textsf{rec-maj}_{k}}}$ and a unique $z \in{Z_{\textsf{rec-maj}_{k}}}$ such that $f_k(x) = f_k(z) = y$, and hence $N(y) = \left\{ x_{[1,\dots,n-1]}, z_{[1,\dots,n-1]} \right\}$.

Since the bipartite graph $G$ is 2-regular, it has a perfect matching. Let $\phi$ be the bijection from $\mathcal{H}_{n-1}$ to $A_{\textsf{rec-maj}_{k}}$ induced by a perfect matching in $G$, and for each $x \in \mathcal{H}_{n-1}$ let $b_x \in \{0,1\}$ be such that $\phi (x) = \psi _{b_x}(x)$. We claim that ${\sf avgStretch}(\phi ) = O(1)$. Let $x \sim x^{\prime}$ be uniformly random vertices in $\mathcal{H}_{n-1}$ that differ in exactly one coordinate, and let $r \in \{0,1\}$ be uniformly random. Then

\begin{eqnarray*}{\mathbb E}\left[{{\sf dist}}\left(\phi (x), \phi \left(x^{\prime}\right)\right)\right] & = &{\mathbb E}\left[{{\sf dist}}\left(f_k\left(x \circ b_x\right), f_k\left(x^{\prime} \circ b_{x^{\prime}}\right)\right)\right] \\ & \leq &{\mathbb E}\left[{{\sf dist}}\left(f_k\left(x \circ b_x\right), f_k\left(x \circ r\right)\right)\right] +{\mathbb E}\left[{{\sf dist}}\left(f_k\left(x \circ r\right), f_k\left(x^{\prime} \circ r\right)\right)\right] \\ & & +\,{\mathbb E}\left[{{\sf dist}}\left(f_k\left(x^{\prime} \circ r\right), f_k\left(x^{\prime} \circ b_{x^{\prime}}\right)\right)\right]. \end{eqnarray*}

For the first term, since $r$ is equal to $b_x$ with probability $1/2$, by Lemma 3.1 Item 3 we get that ${\mathbb E}\left[{{\sf dist}}\left(f_k\left(x \circ b_x\right), f_k(x \circ r)\right)\right]\leq 5$. Analogously, the third term is bounded by $5$. In the second term we consider the expected distance between $f_k({\cdot})$ applied on inputs that differ in a random coordinate $i \in [n-1]$, which is at most $10$, again, by Lemma 3.1 Item 3. Therefore, ${\mathbb E}\left[{{\sf dist}}\left(\phi (x), \phi \left(x^{\prime}\right)\right)\right] \leq 20$.
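The step from a 2-regular bipartite graph to a perfect matching can be carried out explicitly by walking each cycle and keeping every other edge. The sketch below is one possible way to do this, under the assumption that $\psi _0$ and $\psi _1$ are given as dictionaries; the function name `perfect_matching` and the toy data are hypothetical and are not taken from the paper.

```python
def perfect_matching(psi0, psi1):
    """Given psi0, psi1 : L -> R forming a 2-regular bipartite multigraph,
    return b_x for every x in L so that x -> psi_{b_x}(x) is a bijection."""
    # Edges are identified by (x, b), so parallel edges stay distinct.
    incident = {}
    for x in psi0:
        incident.setdefault(psi0[x], []).append((x, 0))
        incident.setdefault(psi1[x], []).append((x, 1))
    assert all(len(edges) == 2 for edges in incident.values()), "not 2-regular"

    choice = {}
    for start in psi0:
        x, b = start, 0
        while x not in choice:
            choice[x] = b                      # x uses its edge number b
            y = (psi0, psi1)[b][x]
            e1, e2 = incident[y]
            x2, b2 = e2 if e1 == (x, b) else e1
            x, b = x2, 1 - b2                  # the next vertex must use its other edge
    return choice

# Toy instance: one 4-cycle on {0, 1} x {'a', 'b'} and one pair of parallel edges.
psi0 = {0: 'a', 1: 'b', 2: 'c'}
psi1 = {0: 'b', 1: 'a', 2: 'c'}
b = perfect_matching(psi0, psi1)
phi = {x: (psi0, psi1)[b[x]][x] for x in psi0}
print(phi)
```

Each connected component of such a graph is an even cycle (possibly a pair of parallel edges), so alternating along the cycle never assigns two left vertices to the same right vertex.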

We return to the proof of Lemma 3.1.

Proof of Lemma 3.1. Define $f_k \,:\, \mathcal{H}_n \to{A_{\textsf{rec-maj}_{k}}}$ by induction on $k$. For $k = 1$ define $f_1$ as

\begin{align*} 000 &\mapsto 110 \\ 100 &\mapsto 101 \\ 010 &\mapsto 011 \\ 001 &\mapsto 111 \\ x &\mapsto x \quad \textrm{for all } x \in \{110,101,011,111\}. \end{align*}

That is, $f_1$ acts as the identity map for all $x \in{A_{\textsf{rec-maj}_{1}}}$, and maps all inputs in $Z_{\textsf{rec-maj}_{1}}$ to $A_{\textsf{rec-maj}_{1}}$ in a one-to-one way. Note that $f_1$ is a non-decreasing mapping, that is, $(f_1(x))_i \geq x_i$ for all $x \in \mathcal{H}_3$ and $i \in [3]$.

For $k > 1$ define $f_k$ recursively using $f_{k-1}$ as follows. For each ${r} \in [3]$, let $T_{r} = \left[({r}-1) \cdot 3^{k-1}+1, \ldots,{r} \cdot 3^{k-1}\right]$ be the $r$’th third of the interval $\big[3^k\big]$. For $x \in \mathcal{H}_{3^k}$, write $x = x^{(1)} \circ x^{(2)} \circ x^{(3)}$, where $x^{({r})} = x_{T_{r}} \in \mathcal{H}_{3^{k-1}}$ is the $r$’th third of $x$. Let $y = (y_1,y_2,y_3) \in \{0,1\}^3$ be defined as $y_{r} ={\textsf{rec-maj}}_{k-1}\left(x^{({r})}\right)$, and let $w =(w_1,w_2,w_3) = f_1(y) \in \{0,1\}^3$. Define

\begin{equation*} f_{k-1}^{(r)}\big(x^{(r)}\big) = \begin {cases} f_{k-1}\left(x^{({r})}\right) & \mbox {if $w_{r} \neq y_{r}$}, \\[5pt] x^{({r})} & \mbox {otherwise}. \end {cases} \end{equation*}

Finally, the mapping $f_k$ is defined as

\begin{equation*} f_k(x) = f_{k-1}^{(1)}\big(x^{(1)}\big) \circ f_{k-1}^{(2)}\big(x^{(2)}\big) \circ f_{k-1}^{(3)}\big(x^{(3)}\big). \end{equation*}

That is, if ${\textsf{rec-maj}}_{k}(x) = 1$ then $w = y$, and hence $f_k(x) = x$, and otherwise, $f_{k-1}^{(r)}\big(x^{(r)}\big) \neq x^{(r)}$ for all $r \in [3]$ where $y_r = 0$ and $w_r = 1$.
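The recursive definition can be transcribed almost line by line. The following is a minimal sketch, assuming inputs are represented as 0/1 tuples of length $3^k$ and that ${\textsf{rec-maj}}_0$ is the identity on a single bit; the names `rec_maj`, `f` and `F1` are illustrative. It checks the first two items of Lemma 3.1 exhaustively for $k = 2$ and computes the average displacement ${{\sf dist}}(z, f_k(z))$ over $z \in{Z_{\textsf{rec-maj}_{k}}}$.

```python
from itertools import product

# f_1 on the four inputs of majority 0; every other input is a fixed point.
F1 = {(0, 0, 0): (1, 1, 0), (1, 0, 0): (1, 0, 1),
      (0, 1, 0): (0, 1, 1), (0, 0, 1): (1, 1, 1)}

def dist(x, y):
    """Hamming distance between two equal-length 0/1 tuples."""
    return sum(a != b for a, b in zip(x, y))

def rec_maj(x):
    """Recursive majority of 3's on a tuple of length 3^k (identity for length 1)."""
    if len(x) == 1:
        return x[0]
    t = len(x) // 3
    return int(sum(rec_maj(x[r * t:(r + 1) * t]) for r in range(3)) >= 2)

def f(x):
    """The map f_k for a tuple x of length 3^k, k >= 1."""
    if len(x) == 3:
        return F1.get(x, x)
    t = len(x) // 3
    parts = [x[r * t:(r + 1) * t] for r in range(3)]
    y = tuple(rec_maj(p) for p in parts)     # votes of the three thirds
    w = F1.get(y, y)                         # target votes f_1(y)
    # Apply f_{k-1} only to the thirds whose vote has to change.
    return sum((f(p) if w[r] != y[r] else p for r, p in enumerate(parts)), ())

k = 2
cube = list(product((0, 1), repeat=3 ** k))
A = [x for x in cube if rec_maj(x) == 1]
Z = [x for x in cube if rec_maj(x) == 0]
mean_shift = sum(dist(z, f(z)) for z in Z) / len(Z)
print(len(A), len(Z), mean_shift)
```

Exhaustive enumeration is only practical for small $k$ (here $2^9$ inputs); the check is a sanity test of the construction, not a substitute for the proof.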

Next we prove that $f_k$ satisfies the properties stated in Lemma 3.1.

1. It is clear from the definition that if ${\textsf{rec-maj}}_{k}(x) = 1$, then $w = y$, and hence $f_k(x) = x$.

2. Next, we prove by induction on $k$ that the restriction of $f_k$ to $Z_{\textsf{rec-maj}_{k}}$ induces a bijection. For $k = 1$ the statement clearly holds. For $k > 1$ suppose that the restriction of $f_{k-1}$ to $Z_{\textsf{rec-maj}_{k-1}}$ induces a bijection. We show that for every $x \in{A_{\textsf{rec-maj}_{k}}}$ the mapping $f_k$ has a preimage of $x$ in $Z_{\textsf{rec-maj}_{k}}$; since $\left |{Z_{\textsf{rec-maj}_{k}}}\right | = \left |{A_{\textsf{rec-maj}_{k}}}\right |$, this implies that the restriction is a bijection. Write $x = x^{(1)} \circ x^{(2)} \circ x^{(3)}$, where $x^{({r})} = x_{T_{r}} \in \mathcal{H}_{3^{k-1}}$ is the $r$’th third of $x$. Let $w = (w_1,w_2,w_3)$ be defined as $w_{r} ={\textsf{rec-maj}}_{k-1}\left(x^{({r})}\right)$. Since $x \in{A_{\textsf{rec-maj}_{k}}}$ it follows that $w \in \{110,101,011,111\}$. Let $y =(y_1,y_2,y_3) \in{Z_{\textsf{rec-maj}_{1}}}$ be such that $f_1(y) = w$. For each ${r} \in [3]$ such that $w_{r} = 1$ and $y_{r} = 0$ it must be the case that $x^{({r})} \in{A_{\textsf{rec-maj}_{k-1}}}$, and hence, by the induction hypothesis, there is some $z^{({r})} \in{Z_{\textsf{rec-maj}_{k-1}}}$ such that $f_{k-1}\left(z^{({r})}\right) = x^{({r})}$. For each ${r} \in [3]$ such that $y_{r} = w_{r}$ define $z^{({r})} = x^{({r})}$. Since $y =(y_1,y_2,y_3) \in{Z_{\textsf{rec-maj}_{1}}}$, it follows that $z = z^{(1)} \circ z^{(2)} \circ z^{(3)} \in{Z_{\textsf{rec-maj}_{k}}}$. It is immediate by the construction that, indeed, $f_k(z) = x$.

3. Fix $i \in \big[3^k\big]$. In order to prove ${\mathbb E}[{{\sf dist}}(f_k(x),f_k(x+e_i)) ] = O(1)$ consider the following events.

\begin{align*} E_1 & = \left\{{\textsf{rec-maj}}_{k}(x) = 1 ={\textsf{rec-maj}}_{k}(x+e_i)\right\}, \\[3pt] E_2 & = \left\{{\textsf{rec-maj}}_{k}(x) = 0,{\textsf{rec-maj}}_{k}(x+e_i) = 1\right\}, \\[3pt] E_3 & = \left\{{\textsf{rec-maj}}_{k}(x) = 1,{\textsf{rec-maj}}_{k}(x+e_i) = 0\right\}, \\[3pt] E_4 & = \left\{{\textsf{rec-maj}}_{k}(x) = 0 ={\textsf{rec-maj}}_{k}(x+e_i)\right\}. \end{align*}

Then ${\mathbb E}\!\left[{{\sf dist}}\!\left(f_k(x), f_k(x+e_i)\right)\right] = \sum _{j=1,2,3,4}{\mathbb E}\left[{{\sf dist}}\left(f_k(x), f_k(x+e_i)\right) | E_j\right] \cdot \mathbb{P}\big[E_j\big]$. The following three claims prove an upper bound on ${\mathbb E}\left[{{\sf dist}}\left(f_k(x), f_k(x+e_i)\right)\right]$.

Claim 3.2. ${\mathbb E}\left[{{\sf dist}}\left(f_k(x), f_k(x+e_i)\right) | E_1\right] = 1$.

Claim 3.3. ${\mathbb E}\left[{{\sf dist}}\left(f_k(x), f_k(x+e_i)\right) | E_2\right] \leq 2 \cdot 1.5^k$.

Claim 3.4. ${\mathbb E}\left[{{\sf dist}}\left(f_k(x), f_k(x+e_i)\right) | E_4\right] \cdot \mathbb{P}[E_4] \leq 8$.

By symmetry, it is clear that ${\mathbb E}\left[{{\sf dist}}\left(f_k(x), f_k(x+e_i)\right) | E_2\right] ={\mathbb E}\left[{{\sf dist}}\left(f_k(x), f_k(x+e_i)\right) | E_3\right]$. Note also that $\mathbb{P}\big[E_1\big] < 0.5$ and $\mathbb{P}\left[E_2 \cup E_3\right] = 2^{-k}$ (see Footnote 3). Therefore, the claims above imply that

\begin{align*} {\mathbb E}\left[{{\sf dist}}\left(f_k(x), f_k(x+e_i)\right)\right] & = \sum _{j=1,2,3,4}{\mathbb E}\left[{{\sf dist}}\left(f_k(x), f_k(x+e_i)\right) | E_j\right] \cdot \mathbb {P}[E_j] \\ & \leq 1 \cdot 0.5 + 2 \cdot 1.5^k \cdot 2^{-k} + 8 \leq 10, \end{align*}

which completes the proof of Lemma 3.1.

Next we prove the above claims.

Proof of Claim 3.2. If $E_1$ holds then ${{\sf dist}}\left(f_k(x), f_k(x+e_i)\right) ={{\sf dist}}(x,x+e_i) = 1$.

Proof of Claim 3.3. We prove first that

\begin{equation} {\mathbb E}\left[{{\sf dist}}\left(x, f_k(x)\right) |{\textsf{rec-maj}}_{k}(x) = 0\right] = 1.5^k. \end{equation}

The proof is by induction on $k$. For $k = 1$ we have ${\mathbb E}\left[{{\sf dist}}\left(x,f_1(x)\right) |{\textsf{rec-maj}}_{1}(x) = 0\right] = 1.5$ as there are two inputs $x \in{Z_{\textsf{rec-maj}_{1}}}$ with ${{\sf dist}}\left(x,f_1(x)\right) = 1$ and two $x$’s in $Z_{\textsf{rec-maj}_{1}}$ with ${{\sf dist}}\left(x,f_1(x)\right) = 2$. For $k > 1$ suppose that ${\mathbb E}\left[{{\sf dist}}(x,f_{k-1}(x)) |{\textsf{rec-maj}}_{k-1}(x) = 0\right] = 1.5^{k-1}$. Write each $x \in \mathcal{H}_{3^k}$ as $x = x^{(1)} \circ x^{(2)} \circ x^{(3)}$, where $x^{({r})} = x_{T_{r}} \in \mathcal{H}_{3^{k-1}}$ is the $r$’th third of $x$, and let $y = (y_1,y_2,y_3)$ be defined as $y_{r} ={\textsf{rec-maj}}_{k-1}\left(x^{({r})}\right)$. Since ${\mathbb E}_{x \in \mathcal{H}_{3^{k-1}}}\left[{\textsf{rec-maj}}_{k-1}(x)\right] = 0.5$, it follows that for a random $x \in{Z_{\textsf{rec-maj}_{k}}}$ each $y \in \{000, 100, 010, 001\}$ happens with the same probability $1/4$, and hence, using the induction hypothesis we get

\begin{eqnarray*}{\mathbb E}\left[{{\sf dist}}\left(x,f_{k}(x)\right) |{\textsf{rec-maj}}_{k}(x) = 0\right] &=& \mathbb{P}\left[y \in \{100, 010\} |{\textsf{rec-maj}}_{k}(x) = 0\right] \times 1.5^{k-1} \\ && +\, \mathbb{P}\left[y \in \{000, 001\} |{\textsf{rec-maj}}_{k}(x) = 0\right] \times 2 \cdot 1.5^{k-1} \\ &=& 1.5^k, \end{eqnarray*}

which proves equation (1).

Next we prove that (see Footnote 4)

\begin{equation} {\mathbb E}\left[{{\sf dist}}\left(x, f_k(x)\right) | E_2\right] \leq \sum _{j=0}^{k-1} 1.5^j = 2 \cdot \left(1.5^k - 1\right). \end{equation}

Note that equation (2) proves Claim 3.3. Indeed, if $E_2$ holds then using the triangle inequality we have ${{\sf dist}}\left(f_k(x), f_k(x+e_i)\right) \leq{{\sf dist}}(f_k(x), x) +{{\sf dist}}(x,x+e_i) +{{\sf dist}}(x+e_i, f_k(x+e_i)) ={{\sf dist}}(f_k(x), x) + 1$, and hence

\begin{equation*} {\mathbb E}\left[{{\sf dist}}\left(f_k(x), f_k(x+e_i)\right) | E_2\right] \leq{\mathbb E}\left[{{\sf dist}}\left(x, f_k(x)\right) | E_2\right] + 1 \leq 2 \cdot \left(1.5^k - 1\right) + 1 \leq 2 \cdot 1.5^k, \end{equation*}

as required.

We prove equation (2) by induction on $k$. For $k = 1$ equation (2) clearly holds. For the induction step let $k > 1$. As in the definition of $f_k$ write each $x \in \mathcal{H}_{3^k}$ as $x = x^{(1)} \circ x^{(2)} \circ x^{(3)}$, where $x^{({r})} = x_{T_{r}}$ is the $r$’th third of $x$, and let $y = (y_1,y_2,y_3)$ be defined as $y_{r} ={\textsf{rec-maj}}_{k-1}\left(x^{({r})}\right)$.

Let us suppose for concreteness that $i \in T_1$. (The cases of $i \in T_2$ and $i \in T_3$ are handled similarly.) Note that if ${\textsf{rec-maj}}_{k}(x) = 0$, ${\textsf{rec-maj}}_{k}(x+e_i) = 1$, and $i \in T_1$, then $y \in \{010,001\}$. We consider each case separately.
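The restriction $y \in \{010,001\}$ can be checked by brute force for small $k$. The following is a minimal sketch, assuming that ${\textsf{rec-maj}}_k$ is the standard recursive majority of threes on $3^k$ bits (with the single-bit case taken as the identity) and that $T_1$ is the first third of the coordinates; the function names `rec_maj` and `pivotal_patterns` are hypothetical and chosen only for this illustration.

```python
from itertools import product

def rec_maj(bits):
    """Recursive majority of threes on a tuple of 3**k bits (assumed definition)."""
    if len(bits) == 1:
        return bits[0]
    third = len(bits) // 3
    votes = [rec_maj(bits[r * third:(r + 1) * third]) for r in range(3)]
    return 1 if sum(votes) >= 2 else 0

def pivotal_patterns(k):
    """Collect y = (y_1, y_2, y_3) over all x and all i in T_1 for which
    rec_maj(x) = 0 and rec_maj(x + e_i) = 1 (the event E_2)."""
    n = 3 ** k
    third = n // 3
    patterns = set()
    for x in product((0, 1), repeat=n):
        if rec_maj(x) != 0:
            continue
        for i in range(third):  # i ranges over T_1, assumed to be the first third
            flipped = x[:i] + (1 - x[i],) + x[i + 1:]
            if rec_maj(flipped) == 1:
                # y_r is the recursive majority of the r-th third of x
                y = tuple(rec_maj(x[r * third:(r + 1) * third]) for r in range(3))
                patterns.add(y)
    return patterns

print(pivotal_patterns(2))
```

The enumeration is exponential in $3^k$, so it is only meant as a sanity check for $k \leq 2$.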

1. Suppose that $y = 010$. Then $w = f_1(y) = 011$, and hence $f(x)$ differs from $x$ only in $T_3$. Taking the expectation over $x$ such that ${\textsf{rec-maj}}_{k}(x) = 0$ and ${\textsf{rec-maj}}_{k}(x+e_i) = 1$, by equation (1) we get ${\mathbb E}\left[{{\sf dist}}(x,f(x)) | E_2, y = 010\right] ={\mathbb E}\left[{{\sf dist}}\left(f_{k-1}\big(x^{(3)}\big), x^{(3)}\right) |{\textsf{rec-maj}}_{k-1}\big(x^{(3)}\big)=0\right] = 1.5^{k-1}$.

2. If $y = 001$, then $w = f_1(y) = 111$, and $f(x)$ differs from $x$ only in $T_1 \cup T_2$. Then

\begin{eqnarray*}{\mathbb E}\left[{{\sf dist}}(x,f(x)) | E_2, y = 001\right] &=&{\mathbb E}\left[{{\sf dist}}\big(f_{k-1}\big(x^{(1)}\big), x^{(1)}\big) | E_2, y = 001\right] \\ && +\,{\mathbb E}\left[{{\sf dist}}\big(f_{k-1}\big(x^{(2)}\big), x^{(2)}\big) | E_2, y = 001\right]. \end{eqnarray*}

Denoting by $E^{\prime}_2$ the event that ${\textsf{rec-maj}}_{k-1}\big(x^{(1)}\big) = 0,{\textsf{rec-maj}}_{k-1}(x^{(1)}+e_i) = 1$ (i.e., the analogue of the event $E_2$ applied on ${\textsf{rec-maj}}_{k-1}$), we note that

\begin{equation*} {\mathbb E}\left[{{\sf dist}}\big(f_{k-1}\big(x^{(1)}\big), x^{(1)}\big) | E_2, y = 001\right] = {\mathbb E}\left[ {{\sf dist}}\big(f_{k-1}\big(x^{(1)}\big), x^{(1)}\big) | E^{\prime}_2\right], \end{equation*}

which is upper bounded by $\sum _{j=0}^{k-2} 1.5^j$ using the induction hypothesis. For the second term we have

\begin{equation*} {\mathbb E}\left[{{\sf dist}}\big(f_{k-1}\big(x^{(2)}\big), x^{(2)}\big) | E_2, y = 001\right] = {\mathbb E}\left[ {{\sf dist}}\big(f_{k-1}\big(x^{(2)}\big), x^{(2)}\big) | {\textsf {rec-maj}}_{k-1}\big(x^{(2)}\big) = 0\right], \end{equation*}

which is at most $1.5^{k-1}$ using equation (1). Therefore, for $y = 001$ we have

\begin{equation*} {\mathbb E}[{{\sf dist}}(x,f(x)) | E_2, y = 001] \leq \sum _{j=0}^{k-2} 1.5^j + 1.5^{k-1}. \end{equation*}

Using the two cases for