Impact Statement

Waves similar to those observed at the beach exist throughout the ocean interior and are induced by tides, winds, currents, eddies, and other processes. Similar to beach waves, internal waves can also roll up and break. Widespread internal-wave breaking helps drive the ocean circulation by upwelling the densest waters that form in polar regions and sink to the ocean abyss. They also play an important role in transport and storage of heat, carbon and nutrients. In this work, we show how well-understood physical understanding can be used in conjunction with statistics of observed ocean turbulence to improve our understanding of the impact of small-scale mixing on the global ocean, and thereby on the climate system.

1. Introduction

Turbulence induced by breaking density overturns in the ocean interior, such as those induced by internal waves or boundary layer turbulence, plays a key role in regulating the global ocean circulation and budgets of climatically important tracers such as heat, carbon and nutrients (Reference Garabato and MeredithGarabato & Meredith, 2022; Reference Talley, Feely, Sloyan, Wanninkhof, Baringer, Bullister and ZhangTalley et al., 2016; Reference Whalen, De Lavergne, Naveira Garabato, Klymak, Mackinnon and SheenWhalen et al., 2020). Such turbulence is primarily excited at the bottom of the ocean through interaction of tides, currents and eddies with bottom topography and at the surface through the wind stress acting on the sea-surface (Reference AlfordAlford, 2020; Reference Garabato and MeredithGarabato & Meredith, 2022; Reference Garrett and KunzeGarrett & Kunze, 2007; Reference LeggLegg, 2021; Reference Nikurashin and FerrariNikurashin & Ferrari, 2013). As an example, Figure 1 shows an observationally sampled abyssal high mixing zone, the Samoan Passage, where northward flow of Antarctic Bottom Waters through a constriction generates strong turbulence through a generation of wave-induced and hydraulically controlled density overturns (Reference Alford, Girton, Voet, Carter, Mickett and KlymakAlford et al., 2013; Reference Carter, Voet, Alford, Girton, Mickett, Klymak and TanCarter et al., 2019). Figure 1b highlights the intermittency of turbulence, which renders it difficult to sample sufficiently to allow for accurate quantification of properties of interest such as turbulent mixing. Thus, sampling poses a monumental challenge to connecting our understanding of the physics of turbulence, which occur on scales ![]() $\mathcal {O}(10^{-3}\unicode{x2013}10^{-1})$ m, to the larger scale regional and global implications, which are relevant on scales

$\mathcal {O}(10^{-3}\unicode{x2013}10^{-1})$ m, to the larger scale regional and global implications, which are relevant on scales ![]() $\mathcal {O}(10^6\unicode{x2013}10^7)$ m. In this sense, this problem is analogous to cloud physics: both involve micro-physics with leading order global impacts, both occur on scales much smaller than typical climate model resolutions and thus need to be parametrized, and both have been notoriously difficult to parametrize and contribute significantly to inaccuracies in model solutions. In this paper, we provide a novel methodology, based on recent progress in our understanding of the microphysics of mixing (Reference Mashayek, Caulfield and AlfordMashayek, Caulfield, & Alford, 2021, hereafter Reference Mashayek, Caulfield and AlfordMCA21) and statistics of wave-induced turbulence (Reference Cael and MashayekCael & Mashayek, 2021, hereafter Reference Cael and MashayekCM21), to connect the physics of small-scale turbulent mixing to large-scale dynamics. While we make specific choices with regard to the physical parametrization of mixing and statistical distributions of ocean turbulence, our choices may reasonably be thought of as placeholders, based on the best we have today. The machinery to which we refer as a ‘recipe’, however, is a broader framework, the components of which can improve over time, for linking physics and statistics to represent small-scale mixing in ocean/climate models.

$\mathcal {O}(10^6\unicode{x2013}10^7)$ m. In this sense, this problem is analogous to cloud physics: both involve micro-physics with leading order global impacts, both occur on scales much smaller than typical climate model resolutions and thus need to be parametrized, and both have been notoriously difficult to parametrize and contribute significantly to inaccuracies in model solutions. In this paper, we provide a novel methodology, based on recent progress in our understanding of the microphysics of mixing (Reference Mashayek, Caulfield and AlfordMashayek, Caulfield, & Alford, 2021, hereafter Reference Mashayek, Caulfield and AlfordMCA21) and statistics of wave-induced turbulence (Reference Cael and MashayekCael & Mashayek, 2021, hereafter Reference Cael and MashayekCM21), to connect the physics of small-scale turbulent mixing to large-scale dynamics. While we make specific choices with regard to the physical parametrization of mixing and statistical distributions of ocean turbulence, our choices may reasonably be thought of as placeholders, based on the best we have today. The machinery to which we refer as a ‘recipe’, however, is a broader framework, the components of which can improve over time, for linking physics and statistics to represent small-scale mixing in ocean/climate models.

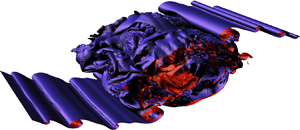

Figure 1. (a) Bottom water temperature in the Samoan Passage, a chokepoint of abyssal ocean circulation. (b) Turbulence in the passage: northward velocity (colours), potential temperature (black contours), and dissipation rate measured by a turbulence microstructure profiler (shaded black profiles) and inferred from Thorpe scales (blue profiles). Panels (a,b) are from Reference Alford, Girton, Voet, Carter, Mickett and KlymakAlford et al. (2013). (c) Evolution of the overturn scale, ![]() $L_T$, and upper turbulence bound,

$L_T$, and upper turbulence bound, ![]() $L_O$, as well as the viscous dissipation scale,

$L_O$, as well as the viscous dissipation scale, ![]() $L_K$, for an archetypal mixing process in the form of a shear instability of Kelvin Helmholtz type (Reference Mashayek, Caulfield and PeltierMashayek, Caulfield, & Peltier, 2013). The insets show the turbulence breakdown of the wave by showing the spanwise (out of the page) vorticity in grey and counter-rotating streamwise (along flow) vorticity iso-surfaces in green and purple, illustrating the hydrodynamic instabilities that facilitate a wave breakdown (Reference Mashayek and PeltierMashayek & Peltier, 2012a, Reference Mashayek and Peltier2012b). Such instabilities create a turbulence cascade that transfers energy from the energy-containing scale (

$L_K$, for an archetypal mixing process in the form of a shear instability of Kelvin Helmholtz type (Reference Mashayek, Caulfield and PeltierMashayek, Caulfield, & Peltier, 2013). The insets show the turbulence breakdown of the wave by showing the spanwise (out of the page) vorticity in grey and counter-rotating streamwise (along flow) vorticity iso-surfaces in green and purple, illustrating the hydrodynamic instabilities that facilitate a wave breakdown (Reference Mashayek and PeltierMashayek & Peltier, 2012a, Reference Mashayek and Peltier2012b). Such instabilities create a turbulence cascade that transfers energy from the energy-containing scale (![]() $L_T$) to the scales where molecular dissipation erodes momentum (

$L_T$) to the scales where molecular dissipation erodes momentum (![]() $L_K$).

$L_K$).

The paper is organized as follows. In § 2, we review the physics of small-scale turbulent mixing. In § 3, we provide an overview of the statistics of the observed ocean turbulence and argue that the chronic undersampling of turbulence highlights the inevitability of a statistical approach. In § 4.1, we introduce a recipe that can put the physics and statistics together to infer ![]() $\varGamma _B$. In § 5, we showcase the application of the methodology to an ocean basin. We finish by discussions of the results and their implications in § 7.

$\varGamma _B$. In § 5, we showcase the application of the methodology to an ocean basin. We finish by discussions of the results and their implications in § 7.

2. Physics of wave-induced turbulence

Our understanding of the physics of wave-induced density stratified turbulent mixing has progressed significantly over the past few decades (Reference CaulfieldCaulfield, 2021; Reference Ivey, Winters and KoseffIvey, Winters, & Koseff, 2008; Reference Peltier and CaulfieldPeltier & Caulfield, 2003). A key question concerns how the total power available to turbulence, ![]() $\mathcal {P}$, from winds, tides and other sources, is partitioned into (I) mixing,

$\mathcal {P}$, from winds, tides and other sources, is partitioned into (I) mixing, ![]() $\mathcal {M}$, defined as a net vertical irreversible buoyancy flux, and (II) dissipation into heat (due to the seawater viscosity), the rate of which is referred to as

$\mathcal {M}$, defined as a net vertical irreversible buoyancy flux, and (II) dissipation into heat (due to the seawater viscosity), the rate of which is referred to as ![]() $\varepsilon$. The turbulent mixing may be approximated by

$\varepsilon$. The turbulent mixing may be approximated by

where ![]() $N^2=-({g}/{\rho _0})\partial _z\rho$ represents the density stratification,

$N^2=-({g}/{\rho _0})\partial _z\rho$ represents the density stratification, ![]() $g$ is the gravitational constant and

$g$ is the gravitational constant and ![]() $\rho _0$ is a reference density (Reference OsbornOsborn, 1980) (note that while the caveats associated with this approximation are important (Reference Mashayek, Caulfield and PeltierMashayek et al., 2013; Reference Mashayek and PeltierMashayek & Peltier, 2013), they do not affect the premise or conclusions of this work in any major way). In physical terms,

$\rho _0$ is a reference density (Reference OsbornOsborn, 1980) (note that while the caveats associated with this approximation are important (Reference Mashayek, Caulfield and PeltierMashayek et al., 2013; Reference Mashayek and PeltierMashayek & Peltier, 2013), they do not affect the premise or conclusions of this work in any major way). In physical terms, ![]() $\mathcal {M}$ represents a net vertical mass flux as turbulence works against gravity to lift denser waters upward. Such turbulence feeds upon the available potential energy (APE) stored in overturns. Upon mixing, a fraction of the APE gets converted into the background potential energy (i.e. the potential energy that the system would acquire if it were allowed to come to rest adiabatically), with the rest dissipating to heat and increasing the internal energy of the system (which leads to an insignificant temperature rise due to the high heat capacity of seawater; Reference Peltier and CaulfieldPeltier & Caulfield, 2003). The turbulent diffusivity,

$\mathcal {M}$ represents a net vertical mass flux as turbulence works against gravity to lift denser waters upward. Such turbulence feeds upon the available potential energy (APE) stored in overturns. Upon mixing, a fraction of the APE gets converted into the background potential energy (i.e. the potential energy that the system would acquire if it were allowed to come to rest adiabatically), with the rest dissipating to heat and increasing the internal energy of the system (which leads to an insignificant temperature rise due to the high heat capacity of seawater; Reference Peltier and CaulfieldPeltier & Caulfield, 2003). The turbulent diffusivity, ![]() $\kappa$, is an input parameter in climate models to account for the subgrid-scale unresolved turbulent mixing. The turbulent flux coefficient,

$\kappa$, is an input parameter in climate models to account for the subgrid-scale unresolved turbulent mixing. The turbulent flux coefficient, ![]() $\varGamma =\mathcal {M}/\varepsilon$, is often taken to be a constant value of 0.2 for historical reasons and arguably for the lack of a universally accepted parametrization for it. However, it is well known to be highly variable (Reference CaulfieldCaulfield, 2021; Reference Gregg, D'Asaro, Riley and KunzeGregg, D'Asaro, Riley, & Kunze, 2018; Reference Mashayek and PeltierMashayek & Peltier, 2013), with appreciable consequences for ocean circulation (Reference Cimoli, Caulfield, Johnson, Marshall, Mashayek, Naveira Garabato and VicCimoli et al., 2019; Reference de Lavergne, Madec, Le Sommer, Nurser and Naveira Garabatode Lavergne, Madec, Le Sommer, Nurser, & Naveira Garabato, 2015; Reference Mashayek, Salehipour, Bouffard, Caulfield, Ferrari, Nikurashin and SmythMashayek et al., 2017). While

$\varGamma =\mathcal {M}/\varepsilon$, is often taken to be a constant value of 0.2 for historical reasons and arguably for the lack of a universally accepted parametrization for it. However, it is well known to be highly variable (Reference CaulfieldCaulfield, 2021; Reference Gregg, D'Asaro, Riley and KunzeGregg, D'Asaro, Riley, & Kunze, 2018; Reference Mashayek and PeltierMashayek & Peltier, 2013), with appreciable consequences for ocean circulation (Reference Cimoli, Caulfield, Johnson, Marshall, Mashayek, Naveira Garabato and VicCimoli et al., 2019; Reference de Lavergne, Madec, Le Sommer, Nurser and Naveira Garabatode Lavergne, Madec, Le Sommer, Nurser, & Naveira Garabato, 2015; Reference Mashayek, Salehipour, Bouffard, Caulfield, Ferrari, Nikurashin and SmythMashayek et al., 2017). While ![]() $\mathcal {M}$ is often the quantity of interest from a physical perspective, it is difficult to observe directly and is often inferred from

$\mathcal {M}$ is often the quantity of interest from a physical perspective, it is difficult to observe directly and is often inferred from ![]() $\varepsilon$ (via (2.1)) which itself is inferred from microscale shear (i.e. spatial gradients of velocity) measured by microstructure probes on profiling instruments, gliders or moorings. Thus, accurate quantification of

$\varepsilon$ (via (2.1)) which itself is inferred from microscale shear (i.e. spatial gradients of velocity) measured by microstructure probes on profiling instruments, gliders or moorings. Thus, accurate quantification of ![]() $\varGamma$ is key to inferring ocean mixing from direct observations.

$\varGamma$ is key to inferring ocean mixing from direct observations.

Three basic turbulence scales have proven useful for parametrization of ![]() $\varGamma$ based on observable quantities. To illustrate their relevance, in Figure 1c, we show their evolution over the life cycle of turbulence breakdown of a canonical overturn. The Kolmogorov scale,

$\varGamma$ based on observable quantities. To illustrate their relevance, in Figure 1c, we show their evolution over the life cycle of turbulence breakdown of a canonical overturn. The Kolmogorov scale, ![]() $L_K=(\nu ^3/\varepsilon )^{1/4}$, represents the scale below which viscous dissipation takes kinetic energy out of the system, the Ozmidov scale,

$L_K=(\nu ^3/\varepsilon )^{1/4}$, represents the scale below which viscous dissipation takes kinetic energy out of the system, the Ozmidov scale, ![]() $L_O=(\varepsilon /N^3)^{1/2}$, is the largest (vertical) scale that is not strongly affected by stratification, and the Thorpe scale,

$L_O=(\varepsilon /N^3)^{1/2}$, is the largest (vertical) scale that is not strongly affected by stratification, and the Thorpe scale, ![]() $L_T$, is a geometrical scale characteristic of vertical displacement of notional fluid parcels within an overturning turbulent patch (Reference DillonDillon, 1982; Reference Mashayek, Caulfield and PeltierMashayek, Caulfield, & Peltier, 2017; Reference Smyth and MoumSmyth & Moum, 2000) –

$L_T$, is a geometrical scale characteristic of vertical displacement of notional fluid parcels within an overturning turbulent patch (Reference DillonDillon, 1982; Reference Mashayek, Caulfield and PeltierMashayek, Caulfield, & Peltier, 2017; Reference Smyth and MoumSmyth & Moum, 2000) – ![]() $\nu$ is the kinematic viscosity of seawater. The figure shows the initial accumulation of available potential energy (APE) from background shear into the primary billow (large

$\nu$ is the kinematic viscosity of seawater. The figure shows the initial accumulation of available potential energy (APE) from background shear into the primary billow (large ![]() $L_T$) followed by the growth of smaller eddies within the main billow upon feeding on its APE source (increase in

$L_T$) followed by the growth of smaller eddies within the main billow upon feeding on its APE source (increase in ![]() $L_O$). As turbulence grows, the scale at which energy is taken out of the system,

$L_O$). As turbulence grows, the scale at which energy is taken out of the system, ![]() $L_K$, decreases. The phase where

$L_K$, decreases. The phase where ![]() $L_O{\sim }L_T$ is the most efficient transfer of energy from the overturn to the smaller scales, and thus is the richest dynamic range, marked by the largest gap between

$L_O{\sim }L_T$ is the most efficient transfer of energy from the overturn to the smaller scales, and thus is the richest dynamic range, marked by the largest gap between ![]() $L_O$ and

$L_O$ and ![]() $L_K$, and thus the most efficient phase of the flow where (an instantaneous) mixing efficiency is defined as

$L_K$, and thus the most efficient phase of the flow where (an instantaneous) mixing efficiency is defined as ![]() $\mathcal {M}/(\mathcal {M}+\varepsilon )=\varGamma /(1+\varGamma )$ (Reference Peltier and CaulfieldPeltier & Caulfield, 2003). Beyond this efficient phase, both

$\mathcal {M}/(\mathcal {M}+\varepsilon )=\varGamma /(1+\varGamma )$ (Reference Peltier and CaulfieldPeltier & Caulfield, 2003). Beyond this efficient phase, both ![]() $L_T$ and

$L_T$ and ![]() $L_O$ decay, as does the turbulence.

$L_O$ decay, as does the turbulence.

The ratio of ![]() $L_O$ and

$L_O$ and ![]() $L_K$, often expressed as the buoyancy Reynolds number

$L_K$, often expressed as the buoyancy Reynolds number ![]() $Re_b=(L_O/L_K)^{4/3}$, has been widely used to quantify

$Re_b=(L_O/L_K)^{4/3}$, has been widely used to quantify ![]() $\varGamma$ (Reference Bouffard and BoegmanBouffard & Boegman, 2013; Reference Gregg, D'Asaro, Riley and KunzeGregg et al., 2018; Reference Mashayek, Salehipour, Bouffard, Caulfield, Ferrari, Nikurashin and SmythMashayek et al., 2017; Reference Monismith, Koseff and WhiteMonismith, Koseff, & White, 2018) and also to establish the global scale impacts of variations in

$\varGamma$ (Reference Bouffard and BoegmanBouffard & Boegman, 2013; Reference Gregg, D'Asaro, Riley and KunzeGregg et al., 2018; Reference Mashayek, Salehipour, Bouffard, Caulfield, Ferrari, Nikurashin and SmythMashayek et al., 2017; Reference Monismith, Koseff and WhiteMonismith, Koseff, & White, 2018) and also to establish the global scale impacts of variations in ![]() $\varGamma$ (Reference Cimoli, Caulfield, Johnson, Marshall, Mashayek, Naveira Garabato and VicCimoli et al., 2019; Reference de Lavergne, Madec, Le Sommer, Nurser and Naveira Garabatode Lavergne et al., 2015; Reference Mashayek, Salehipour, Bouffard, Caulfield, Ferrari, Nikurashin and SmythMashayek et al., 2017). However, some of these efforts, as well as others, have also highlighted the inherent deficiency of

$\varGamma$ (Reference Cimoli, Caulfield, Johnson, Marshall, Mashayek, Naveira Garabato and VicCimoli et al., 2019; Reference de Lavergne, Madec, Le Sommer, Nurser and Naveira Garabatode Lavergne et al., 2015; Reference Mashayek, Salehipour, Bouffard, Caulfield, Ferrari, Nikurashin and SmythMashayek et al., 2017). However, some of these efforts, as well as others, have also highlighted the inherent deficiency of ![]() $Re_b$ since it only includes instantaneous information on the turbulence scales

$Re_b$ since it only includes instantaneous information on the turbulence scales ![]() $L_O$ and

$L_O$ and ![]() $L_K$ while not ‘knowing’ anything about either the energy containing scale

$L_K$ while not ‘knowing’ anything about either the energy containing scale ![]() $L_T$ (Reference Gargett and MoumGargett & Moum, 1995; Reference GarrettGarrett, 2001; Reference Mashayek, Caulfield and AlfordMashayek et al., 2021; Reference Mashayek and PeltierMashayek & Peltier, 2011; Reference Mater and VenayagamoorthyMater & Venayagamoorthy, 2014) or any time dependence of the flow, although evidence is accumulating that ‘history matters’ in stratified mixing (Reference CaulfieldCaulfield, 2021). An alternate method for inferring mixing (or more accurately inferring

$L_T$ (Reference Gargett and MoumGargett & Moum, 1995; Reference GarrettGarrett, 2001; Reference Mashayek, Caulfield and AlfordMashayek et al., 2021; Reference Mashayek and PeltierMashayek & Peltier, 2011; Reference Mater and VenayagamoorthyMater & Venayagamoorthy, 2014) or any time dependence of the flow, although evidence is accumulating that ‘history matters’ in stratified mixing (Reference CaulfieldCaulfield, 2021). An alternate method for inferring mixing (or more accurately inferring ![]() $\varepsilon$ from which diffusivity or flux may be inferred), employed when direct inference of

$\varepsilon$ from which diffusivity or flux may be inferred), employed when direct inference of ![]() $\varepsilon$ is not available, is to assume that

$\varepsilon$ is not available, is to assume that ![]() $R_{OT}=L_O/L_T$ is a constant (taken to be between 0.6 and 1; Reference DillonDillon, 1982; Reference Ferron, Mercier, Speer, Gargett and PolzinFerron, Mercier, Speer, Gargett, & Polzin, 1998; Reference ThorpeThorpe, 2005) and

$R_{OT}=L_O/L_T$ is a constant (taken to be between 0.6 and 1; Reference DillonDillon, 1982; Reference Ferron, Mercier, Speer, Gargett and PolzinFerron, Mercier, Speer, Gargett, & Polzin, 1998; Reference ThorpeThorpe, 2005) and ![]() $\varGamma =0.2$. This method, too, is inherently deficient (although in practice might be the only available option) since it only ‘knows’ about the energy containing scale and not the dissipation scale, and so does not ‘feel’ the width of the dynamic range of the turbulence (the so-called inertial subrange).

$\varGamma =0.2$. This method, too, is inherently deficient (although in practice might be the only available option) since it only ‘knows’ about the energy containing scale and not the dissipation scale, and so does not ‘feel’ the width of the dynamic range of the turbulence (the so-called inertial subrange).

Building on an extensive relevant literature, recently, Reference Mashayek, Caulfield and AlfordMCA21 extended earlier scaling relations relating ![]() $R_{OT}$ to

$R_{OT}$ to ![]() $\varGamma$ and proposed a simple parametrization for

$\varGamma$ and proposed a simple parametrization for ![]() $\Gamma$, on basic physical grounds, that agreed well with observational data:

$\Gamma$, on basic physical grounds, that agreed well with observational data:

\begin{equation} \varGamma_i = A\frac{R_{OT}^{{-}1}}{1 + R_{OT}^{{1}/{3}}}, \end{equation}

\begin{equation} \varGamma_i = A\frac{R_{OT}^{{-}1}}{1 + R_{OT}^{{1}/{3}}}, \end{equation}

where ![]() $A$ is a constant ranging between

$A$ is a constant ranging between ![]() $1/2$ and

$1/2$ and ![]() $2/3$. The subscript

$2/3$. The subscript ![]() $i$ in (2.2) emphasizes the applicability of it to individual turbulent patches as opposed to a bulk region, which typically comprises a multitude of turbulence events separated (in time and space) by ‘quiet’ zones. Crucially, this parametrization captures the fundamental time-dependent nature of overturning mixing events, allowing for variation in

$i$ in (2.2) emphasizes the applicability of it to individual turbulent patches as opposed to a bulk region, which typically comprises a multitude of turbulence events separated (in time and space) by ‘quiet’ zones. Crucially, this parametrization captures the fundamental time-dependent nature of overturning mixing events, allowing for variation in ![]() $R_{OT}$ and hence

$R_{OT}$ and hence ![]() $\varGamma$ at different stages in the life cycle of a mixing event. Figure 2a, reproduced from Reference Mashayek, Caulfield and AlfordMCA21, shows the agreement with a combination of data from

$\varGamma$ at different stages in the life cycle of a mixing event. Figure 2a, reproduced from Reference Mashayek, Caulfield and AlfordMCA21, shows the agreement with a combination of data from ![]() $\sim$50 000 turbulent patches gathered from six different field campaigns that sampled turbulence in different geographical locations around the globe, at different depths and turbulence regimes, and from turbulence induced by different processes (see supplementary material available at https://doi.org/10.1017/flo.2022.16 for a brief description of data). Equation (2.2) reduces to

$\sim$50 000 turbulent patches gathered from six different field campaigns that sampled turbulence in different geographical locations around the globe, at different depths and turbulence regimes, and from turbulence induced by different processes (see supplementary material available at https://doi.org/10.1017/flo.2022.16 for a brief description of data). Equation (2.2) reduces to ![]() $A/R_{OT}$ in the limit of young turbulence (

$A/R_{OT}$ in the limit of young turbulence (![]() $R_{OT}\ll 1)$) and to

$R_{OT}\ll 1)$) and to ![]() $A/R_{OT}^{4/3}$ in the limit of (older) decaying turbulence (

$A/R_{OT}^{4/3}$ in the limit of (older) decaying turbulence (![]() $R_{OT}\gg 1)$). While the latter has been suggested by others in the past, as reviewed in Reference Mashayek, Caulfield and AlfordMCA21, the former limit and the transition between the two limits were formulated in Reference Mashayek, Caulfield and AlfordMCA21.

$R_{OT}\gg 1)$). While the latter has been suggested by others in the past, as reviewed in Reference Mashayek, Caulfield and AlfordMCA21, the former limit and the transition between the two limits were formulated in Reference Mashayek, Caulfield and AlfordMCA21.

Figure 2. (a) Agreement of (2.2) with ![]() $\sim$50 000 turbulent patches from six different datasets covering a variety of turbulent regimes and processes. The bar plot insets show the histogram of

$\sim$50 000 turbulent patches from six different datasets covering a variety of turbulent regimes and processes. The bar plot insets show the histogram of ![]() $R_{OT}$ (top axis) and

$R_{OT}$ (top axis) and ![]() $\varGamma$ for the parametrization (in red) and data (in blue) along the right vertical axis. The value of

$\varGamma$ for the parametrization (in red) and data (in blue) along the right vertical axis. The value of ![]() $A$ is obtained through regression of data to (2.2). Reproduced from Reference Mashayek, Caulfield and AlfordMashayek et al. (2021) – see supplementary materials for a brief description of data. Note that the data plotted here include observations in the Samoan Passage (shown in Figure 1b) and in the Brazil Basin (of relevance to Figures 5–7). (b) Probability density function (PDF) of

$A$ is obtained through regression of data to (2.2). Reproduced from Reference Mashayek, Caulfield and AlfordMashayek et al. (2021) – see supplementary materials for a brief description of data. Note that the data plotted here include observations in the Samoan Passage (shown in Figure 1b) and in the Brazil Basin (of relevance to Figures 5–7). (b) Probability density function (PDF) of ![]() $R_{OT}=L_O/L_T$ for the same data as in panel (a), compartmentalized in terms of the rate of dissipation of kinetic energy,

$R_{OT}=L_O/L_T$ for the same data as in panel (a), compartmentalized in terms of the rate of dissipation of kinetic energy, ![]() $\varepsilon$. The bottom/second/third/top quartiles have increasing modes of 0.66/0.78/0.82/0.88, respectively. Note the negative skewness of the log-transformed PDF. (c,d) Temporal fraction of turbulence lifecycle, as well as the ratio of

$\varepsilon$. The bottom/second/third/top quartiles have increasing modes of 0.66/0.78/0.82/0.88, respectively. Note the negative skewness of the log-transformed PDF. (c,d) Temporal fraction of turbulence lifecycle, as well as the ratio of ![]() $R_{OT}$ during the turbulent phase of the flow to its mean value over the whole life cycle. Each symbol represents a life-cycle-averaged quantity from a direct numerical simulation (such as the one in Figure 1d) for the corresponding Reynolds and Richardson numbers. All cases in panel (c) are for

$R_{OT}$ during the turbulent phase of the flow to its mean value over the whole life cycle. Each symbol represents a life-cycle-averaged quantity from a direct numerical simulation (such as the one in Figure 1d) for the corresponding Reynolds and Richardson numbers. All cases in panel (c) are for ![]() $Ri=0.12$ while all cases in panel (d) are for

$Ri=0.12$ while all cases in panel (d) are for ![]() $Re=6000$. The turbulent phases of the life cycles are defined as the times when

$Re=6000$. The turbulent phases of the life cycles are defined as the times when ![]() $Re_b>20$ (Reference GibsonGibson, 1991; Reference Smyth and MoumSmyth & Moum, 2000).

$Re_b>20$ (Reference GibsonGibson, 1991; Reference Smyth and MoumSmyth & Moum, 2000).

A nice feature of (2.2) is that it allows for the two ranges to merge smoothly at ![]() $R_{OT}{\sim }1$. This limit corresponds to efficient mixing when there exists an optimal balance between the stratification and energy available to turbulence. In the young turbulence range, stratification is relatively high and sufficient energy has not yet transferred to turbulence to work against the stratification and mix. So, while

$R_{OT}{\sim }1$. This limit corresponds to efficient mixing when there exists an optimal balance between the stratification and energy available to turbulence. In the young turbulence range, stratification is relatively high and sufficient energy has not yet transferred to turbulence to work against the stratification and mix. So, while ![]() $\varGamma _i$ can be very large, it does not imply much mixing: it is large since

$\varGamma _i$ can be very large, it does not imply much mixing: it is large since ![]() $\varepsilon$ is very small, not because

$\varepsilon$ is very small, not because ![]() $\mathcal {M}$ is large. In fact, in the limit of laminar flow,

$\mathcal {M}$ is large. In fact, in the limit of laminar flow, ![]() $\varGamma _i \rightarrow \infty$. However, in the decaying phase of turbulence, stratification is somewhat eroded and so

$\varGamma _i \rightarrow \infty$. However, in the decaying phase of turbulence, stratification is somewhat eroded and so ![]() $\mathcal {M}$ is weaker as there is less to mix, while

$\mathcal {M}$ is weaker as there is less to mix, while ![]() $\varepsilon$ is still finite as even an unstratified flow can have significant

$\varepsilon$ is still finite as even an unstratified flow can have significant ![]() $\varepsilon$. Thus, in this limit,

$\varepsilon$. Thus, in this limit, ![]() $\varGamma _0 \rightarrow 0$. It is the

$\varGamma _0 \rightarrow 0$. It is the ![]() $R_{OT}{\sim }1$ limit in which the right balance of stratification and power exists and optimal mixing occurs. Since this intermediate phase appears to lead to ‘optimal’ mixing, neither too hot nor too cold but ‘just right’, Reference Mashayek, Caulfield and AlfordMCA21 referred to this as ‘Goldilocks mixing’.

$R_{OT}{\sim }1$ limit in which the right balance of stratification and power exists and optimal mixing occurs. Since this intermediate phase appears to lead to ‘optimal’ mixing, neither too hot nor too cold but ‘just right’, Reference Mashayek, Caulfield and AlfordMCA21 referred to this as ‘Goldilocks mixing’.

Here we argue that all three (time-dependent) phases of the turbulence life cycle, importantly including the young turbulence limit, are key to connecting the small-scale physics of mixing to the large-scale ocean dynamics. While the Goldilocks mixing phase is when most of the effective turbulent flux occurs, the young and weakly turbulent patches are important since most of the ocean interior is relatively ‘quiet’ with intermittent bursts of turbulence. We will argue that it is essential to account for the less/non-turbulent regions, whereas historically, parametrizations have primarily focused on energetic turbulence. This will necessitate careful analysis of the statistics of turbulent patches.

We note that while we will employ the Goldilocks paradigm of Reference Mashayek, Caulfield and AlfordMCA21 in the forthcoming recipe, in principle, it can be replaced by alternative parametrizations for ![]() $\varGamma _i$.

$\varGamma _i$.

3. Statistics of turbulence

3.1 Statistics of patches

Figure 2b shows the histogram of the data used in Figure 2a separated into four quartiles in terms of ![]() $\varepsilon$. The distribution shows that most turbulent patches lie within a factor of 3 of

$\varepsilon$. The distribution shows that most turbulent patches lie within a factor of 3 of ![]() $R_{OT}=1$ and the larger the

$R_{OT}=1$ and the larger the ![]() $\varepsilon$, the closer to 1 the peak of

$\varepsilon$, the closer to 1 the peak of ![]() $R_{OT}$ lies. This suggests the majority of turbulent patches are in this phase of optimal or Goldilocks mixing. Such a clustering of data has been widely reported in the past (Reference DillonDillon, 1982; Reference Mashayek, Caulfield and PeltierMashayek et al., 2017; Reference Mater, Venayagamoorthy, Laurent and MoumMater, Venayagamoorthy, Laurent, & Moum, 2015; Reference ThorpeThorpe, 2005) and suggests that out of the three phases of energetic ocean turbulence events, namely ‘young’ growth, intermediate Goldilocks mixing and ‘old’ decay, the intermediate phase spans the larger fraction of the turbulence life cycle. This is shown quantitatively in Figures 2c and 2d where life-cycle-averaged properties are plotted from turbulence life cycle simulations of shear instabilities by Reference Mashayek and PeltierMashayek and Peltier (2013), Reference Mashayek, Caulfield and PeltierMashayek et al. (2013). Figures 2c and 2d, together, show that for sufficiently energetic turbulence, a larger proportion of the turbulence life cycle corresponds to the Goldilocks phase rather than to the growth and decay phases.

$R_{OT}$ lies. This suggests the majority of turbulent patches are in this phase of optimal or Goldilocks mixing. Such a clustering of data has been widely reported in the past (Reference DillonDillon, 1982; Reference Mashayek, Caulfield and PeltierMashayek et al., 2017; Reference Mater, Venayagamoorthy, Laurent and MoumMater, Venayagamoorthy, Laurent, & Moum, 2015; Reference ThorpeThorpe, 2005) and suggests that out of the three phases of energetic ocean turbulence events, namely ‘young’ growth, intermediate Goldilocks mixing and ‘old’ decay, the intermediate phase spans the larger fraction of the turbulence life cycle. This is shown quantitatively in Figures 2c and 2d where life-cycle-averaged properties are plotted from turbulence life cycle simulations of shear instabilities by Reference Mashayek and PeltierMashayek and Peltier (2013), Reference Mashayek, Caulfield and PeltierMashayek et al. (2013). Figures 2c and 2d, together, show that for sufficiently energetic turbulence, a larger proportion of the turbulence life cycle corresponds to the Goldilocks phase rather than to the growth and decay phases.

The parametrization (2.2) describes individual turbulent patches. A turbulent region within the ocean, such as that shown in Figure 1b, hosts many patches in different stages of their evolution. Reference Mashayek, Caulfield and AlfordMCA21 argued that an appropriate value for a bulk ![]() $\varGamma$ may be constructed for such a region through

$\varGamma$ may be constructed for such a region through

\begin{equation} \varGamma_{B} = \frac{\mathcal{M}_B}{\varepsilon_B}\approx \frac{\displaystyle \sum_{i = 1}^n \varGamma_i \times \varepsilon_i}{\displaystyle \sum_{i = 1}^n \varepsilon_i}, \end{equation}

\begin{equation} \varGamma_{B} = \frac{\mathcal{M}_B}{\varepsilon_B}\approx \frac{\displaystyle \sum_{i = 1}^n \varGamma_i \times \varepsilon_i}{\displaystyle \sum_{i = 1}^n \varepsilon_i}, \end{equation}

where ![]() $n$ represents the number of patches in a region of interest and the subscript

$n$ represents the number of patches in a region of interest and the subscript ![]() $B$ denotes ‘Bulk’. For example, typical resolution of climate models is

$B$ denotes ‘Bulk’. For example, typical resolution of climate models is ![]() $\mathcal {O}(100\ {\rm km})$ in the horizontal and

$\mathcal {O}(100\ {\rm km})$ in the horizontal and ![]() $\mathcal {O}(100\ {\rm m})$ in the vertical direction. Models that employ a temporally evolving mixing parametrization, employ

$\mathcal {O}(100\ {\rm m})$ in the vertical direction. Models that employ a temporally evolving mixing parametrization, employ ![]() $\varGamma _B=0.2$ to construct a diffusivity for each grid cell. Of course there is no obvious reason why such a constant should universally hold, and as we shall show,

$\varGamma _B=0.2$ to construct a diffusivity for each grid cell. Of course there is no obvious reason why such a constant should universally hold, and as we shall show, ![]() $\varGamma _{B}$ relies on the statistical distribution of patches within the grid cell and their associated

$\varGamma _{B}$ relies on the statistical distribution of patches within the grid cell and their associated ![]() $\varGamma _i$ values. By applying (3.1) to four datasets, Reference Mashayek, Caulfield and AlfordMCA21 showed that

$\varGamma _i$ values. By applying (3.1) to four datasets, Reference Mashayek, Caulfield and AlfordMCA21 showed that ![]() $\varGamma _B$ can be close to the Goldilocks mixing (i.e.

$\varGamma _B$ can be close to the Goldilocks mixing (i.e. ![]() $A/2\approx 1/3$ in (2.2)) when the region of study has the right balance of power and stratification (where

$A/2\approx 1/3$ in (2.2)) when the region of study has the right balance of power and stratification (where ![]() $R_{OT}{\sim }1$), thereby comprising mostly Goldilocks mixing patches. However, regions that host a higher percentage of young or weak turbulent patches can have

$R_{OT}{\sim }1$), thereby comprising mostly Goldilocks mixing patches. However, regions that host a higher percentage of young or weak turbulent patches can have ![]() $\varGamma _B {\sim } \mathcal {O}(1)$ since young patches have large

$\varGamma _B {\sim } \mathcal {O}(1)$ since young patches have large ![]() $\varGamma _i$ values, as shown in Figure 3 in the limit of small

$\varGamma _i$ values, as shown in Figure 3 in the limit of small ![]() $R_{OT}$ (Young patches have large

$R_{OT}$ (Young patches have large ![]() $\varGamma$ because of small

$\varGamma$ because of small ![]() $\varepsilon$ NOT due to large

$\varepsilon$ NOT due to large ![]() $\mathcal {M}$. Thus, while they do not contribute much to the net turbulent flux

$\mathcal {M}$. Thus, while they do not contribute much to the net turbulent flux ![]() $\sum \varGamma _i \varepsilon _i$, they bias

$\sum \varGamma _i \varepsilon _i$, they bias ![]() $\varGamma _B$ high).

$\varGamma _B$ high).

Figure 3. (a) Sampling bias and uncertainty for buoyancy flux (dashed lines), ![]() $\varGamma \times \varepsilon$ (solid lines) and

$\varGamma \times \varepsilon$ (solid lines) and ![]() $\varepsilon$ (dotted lines) for a single turbulent event (e.g. Figure 1c). Purple lines indicate the normalized standard deviation and yellow lines indicate the median relative underestimation, each as a function of sample size, estimated from bootstrap resampling a direct numerical simulation (shown in Figure 1c, from Reference Mashayek, Caulfield and PeltierMashayek et al., 2013). For example, relative uncertainty is

$\varepsilon$ (dotted lines) for a single turbulent event (e.g. Figure 1c). Purple lines indicate the normalized standard deviation and yellow lines indicate the median relative underestimation, each as a function of sample size, estimated from bootstrap resampling a direct numerical simulation (shown in Figure 1c, from Reference Mashayek, Caulfield and PeltierMashayek et al., 2013). For example, relative uncertainty is ![]() ${>}100$ % for the time-averaged buoyancy flux of this event with fewer than

${>}100$ % for the time-averaged buoyancy flux of this event with fewer than ![]() $\sim$16 samples, and the median time-averaged buoyancy flux estimate with four or fewer samples underestimates the buoyancy flux by a factor of two or more. (b) Sampling bias and uncertainty for the mean

$\sim$16 samples, and the median time-averaged buoyancy flux estimate with four or fewer samples underestimates the buoyancy flux by a factor of two or more. (b) Sampling bias and uncertainty for the mean ![]() $\varepsilon$ sampled from a log-skew-normal distribution with the parameters estimated from the dataset described in the text, discarding

$\varepsilon$ sampled from a log-skew-normal distribution with the parameters estimated from the dataset described in the text, discarding ![]() $\varepsilon$ values >10

$\varepsilon$ values >10![]() $^{-5}$ m

$^{-5}$ m![]() $^2$ s

$^2$ s![]() $^{-3}$. The green line indicates the normalized standard deviation and the orange line indicates the median relative underestimation, each as a function of sample size, estimated from bootstrap resampling.

$^{-3}$. The green line indicates the normalized standard deviation and the orange line indicates the median relative underestimation, each as a function of sample size, estimated from bootstrap resampling.

3.2 Statistics of continuous profiles

Bulk mixing therefore depends on the statistics of turbulent patches. However, weakly/non-turbulent waters reside in between intermittent turbulent patches. Recently, Reference Cael and MashayekCM21 showed that ![]() $\varepsilon$ data from over 750 full depth microstructure profiles from 14 field experiments (covering a wide range of depths, geographical locations and turbulence-inducing processes; see Reference Cael and MashayekCM21 for details), are well described by a log-skew-normal distribution (Figure 4a), which has the form

$\varepsilon$ data from over 750 full depth microstructure profiles from 14 field experiments (covering a wide range of depths, geographical locations and turbulence-inducing processes; see Reference Cael and MashayekCM21 for details), are well described by a log-skew-normal distribution (Figure 4a), which has the form

where ![]() $f$ is a probability density function and

$f$ is a probability density function and ![]() $\phi$ and

$\phi$ and ![]() $\varphi$ respectively are the probability and cumulative density functions (PDF and CDF) of a standard Gaussian random variable. We refer the reader to Reference Cael and MashayekCM21 for details, but here discuss the essential aspects of that paper for the problem at hand. As discussed in Reference Cael and MashayekCM21, the relationship between the log-skewness (

$\varphi$ respectively are the probability and cumulative density functions (PDF and CDF) of a standard Gaussian random variable. We refer the reader to Reference Cael and MashayekCM21 for details, but here discuss the essential aspects of that paper for the problem at hand. As discussed in Reference Cael and MashayekCM21, the relationship between the log-skewness (![]() $\theta$) of the distribution and the additional shape parameter

$\theta$) of the distribution and the additional shape parameter ![]() $\alpha$ is one-to-one. The parameters (

$\alpha$ is one-to-one. The parameters (![]() $\xi,\omega,\alpha$) are related to the log-mean, log-standard deviation and log-skewness (

$\xi,\omega,\alpha$) are related to the log-mean, log-standard deviation and log-skewness (![]() $\mu,\sigma,\theta$) of a log-skew-normal random variable according to the following equations:

$\mu,\sigma,\theta$) of a log-skew-normal random variable according to the following equations:

where

\begin{equation} \delta = \frac{\alpha}{\sqrt{1 + \alpha^2}}, \quad \sigma = \omega \sqrt{1-\frac2{\rm \pi} \delta^2}, \quad \left.\theta = (4-{\rm \pi} )\left(\sqrt{\frac2{\rm \pi} }\delta\right)^3 \right/ \left(2\left(1-\frac2{\rm \pi} \delta^2\right)^{3/2}\right). \end{equation}

\begin{equation} \delta = \frac{\alpha}{\sqrt{1 + \alpha^2}}, \quad \sigma = \omega \sqrt{1-\frac2{\rm \pi} \delta^2}, \quad \left.\theta = (4-{\rm \pi} )\left(\sqrt{\frac2{\rm \pi} }\delta\right)^3 \right/ \left(2\left(1-\frac2{\rm \pi} \delta^2\right)^{3/2}\right). \end{equation}

Figure 4. (a) Reproduced from Reference Cael and MashayekCM21, ![]() $\varepsilon$ data from over 750 full depth microstructure profiles from 14 field experiments (see Reference Cael and MashayekCael and Mashayek (2021) for more details) are excellently characterized by a log-skew-normal distribution. Cumulative distribution functions (CDFs) are shown in the main plot; the upper inset shows the corresponding probability density functions and that of a log-normal distribution (purple line) for comparison; the lower inset shows the difference between the empirical and hypothesized log-skew-normal CDFs. (b) Regression of log

$\varepsilon$ data from over 750 full depth microstructure profiles from 14 field experiments (see Reference Cael and MashayekCael and Mashayek (2021) for more details) are excellently characterized by a log-skew-normal distribution. Cumulative distribution functions (CDFs) are shown in the main plot; the upper inset shows the corresponding probability density functions and that of a log-normal distribution (purple line) for comparison; the lower inset shows the difference between the empirical and hypothesized log-skew-normal CDFs. (b) Regression of log![]() $_{10}(L_T)$ against log

$_{10}(L_T)$ against log![]() $_{10}(L_O)$. The middle line captures the central scaling relationship and is estimated via model II regression as described in the text; the outside lines capture the heteroscedasticity of the residuals and is estimated via quartile regression as described in the text. (c) Mean quantities from the dataset in panel (b) (with error bars representing standard deviation), grouped into three categories: (I) energetic turbulence in weak stratification, (II) weak turbulence in strong stratification, and (III) energetic stratified turbulence; see main text for a discussion. (d) Sensitivity of the bulk flux coefficient to the different

$_{10}(L_O)$. The middle line captures the central scaling relationship and is estimated via model II regression as described in the text; the outside lines capture the heteroscedasticity of the residuals and is estimated via quartile regression as described in the text. (c) Mean quantities from the dataset in panel (b) (with error bars representing standard deviation), grouped into three categories: (I) energetic turbulence in weak stratification, (II) weak turbulence in strong stratification, and (III) energetic stratified turbulence; see main text for a discussion. (d) Sensitivity of the bulk flux coefficient to the different ![]() $L_T$–

$L_T$–![]() $L_O$ scaling parameters, perturbed from a baseline

$L_O$ scaling parameters, perturbed from a baseline ![]() $\varGamma _g$ estimated from the combined global dataset described in the text. Larger fluctuations in

$\varGamma _g$ estimated from the combined global dataset described in the text. Larger fluctuations in ![]() $L_T$ when

$L_T$ when ![]() $L_O$ is large can increase the bulk flux coefficient, but it asymptotes to a constant value with decreasing fluctuations. Increasing either the scaling exponent or coefficient increases

$L_O$ is large can increase the bulk flux coefficient, but it asymptotes to a constant value with decreasing fluctuations. Increasing either the scaling exponent or coefficient increases ![]() $L_T$ values for large

$L_T$ values for large ![]() $L_O$, thus increasing the bulk flux coefficient for large

$L_O$, thus increasing the bulk flux coefficient for large ![]() $\varepsilon$; decreasing either the scaling exponent or coefficient drives bulk mixing to zero as

$\varepsilon$; decreasing either the scaling exponent or coefficient drives bulk mixing to zero as ![]() $R_{OT}\gg 1$ when

$R_{OT}\gg 1$ when ![]() $\varepsilon$ is large. See supplementary materials for information on data sources.

$\varepsilon$ is large. See supplementary materials for information on data sources.

The log-skew-normal distribution arises from the analogue of the Central Limit Theorem for lognormal variables: that is, the sum of log-normal variables converges to a log-skew-normal distribution (Reference Wu, Li, Husnay, Chakravarthy, Wang and WuWu et al., 2009). For turbulence, the log-skew-normal distribution is thought to arise because the total, measured ![]() $\varepsilon$ results from a combination of multiple and/or not statistically steady turbulence-generating processes (Reference Caldwell and MoumCaldwell & Moum, 1995), which individually and/or instantaneously have log-normally distributed dissipation rates.

$\varepsilon$ results from a combination of multiple and/or not statistically steady turbulence-generating processes (Reference Caldwell and MoumCaldwell & Moum, 1995), which individually and/or instantaneously have log-normally distributed dissipation rates.

3.3 Chronic undersampling: inevitability of a statistical approach to  $\varGamma _B$

$\varGamma _B$

The difficulty of sampling intermittent turbulence has long been recognized (Reference Gregg, Seim and PercivalGregg, Seim, & Percival, 1993), but the problem is perhaps even worse than conventionally thought, owing to the even more heavy-tailed nature of a log-skew-normal ![]() $\varepsilon$ distribution than a lognormal one. This presents a severe challenge for constraining the average

$\varepsilon$ distribution than a lognormal one. This presents a severe challenge for constraining the average ![]() $\varepsilon$ value for an ocean volume from a limited sample set, since many samples are required to resolve the disproportionately consequential tail. Direct numerical simulations have established that turbulent quantities, such as

$\varepsilon$ value for an ocean volume from a limited sample set, since many samples are required to resolve the disproportionately consequential tail. Direct numerical simulations have established that turbulent quantities, such as ![]() $\varepsilon$ and

$\varepsilon$ and ![]() $\Gamma$, vary substantially over the lifetime of an overturning event (Reference CaulfieldCaulfield, 2021). This implies that a single measurement, or even a handful of measurements, of such an event can be a poor estimate of its time-integrated properties since they most likely miss the most energetic phase of turbulence for each event. This underscores the need for large measurement sets and a statistical approach to fill in the gaps and accurately estimate bulk turbulent properties. To highlight the sampling issue, in Figure 3, we consider two examples: one based on sampling of an individual turbulence event; and one based on sampling of the collective global dataset discussed in Figure 4a.

$\Gamma$, vary substantially over the lifetime of an overturning event (Reference CaulfieldCaulfield, 2021). This implies that a single measurement, or even a handful of measurements, of such an event can be a poor estimate of its time-integrated properties since they most likely miss the most energetic phase of turbulence for each event. This underscores the need for large measurement sets and a statistical approach to fill in the gaps and accurately estimate bulk turbulent properties. To highlight the sampling issue, in Figure 3, we consider two examples: one based on sampling of an individual turbulence event; and one based on sampling of the collective global dataset discussed in Figure 4a.

Direct numerical simulations, such as that shown in Figure 1c, clearly demonstrate that turbulent quantities such as ![]() $\mathcal {M}$,

$\mathcal {M}$, ![]() $\varepsilon$ and

$\varepsilon$ and ![]() $\varGamma$ vary substantially over the lifetime of an overturning event. This implies that a single measurement, or even a handful of measurements, of such an event are highly likely to be a poor estimate of its time-integrated properties. Figure 3a shows the uncertainty and bias associated with random samples of one (other simulations yielded similar results) simulation's history

$\varGamma$ vary substantially over the lifetime of an overturning event. This implies that a single measurement, or even a handful of measurements, of such an event are highly likely to be a poor estimate of its time-integrated properties. Figure 3a shows the uncertainty and bias associated with random samples of one (other simulations yielded similar results) simulation's history ![]() $(Re = 6000, Ri = 0.16)$ for different sample sizes. Uncertainty is quantified by the normalized standard deviation of the sample mean for collections of samples taken at random times along the temporal history of the overturning event. Bias is quantified by the ratio of the median sample mean from these collections of randomly timed samples to the true time-averaged mean of each property. Because all the properties shown are positively skewed quantities, the sample means tend to miss the peak values and thus underestimate the time-averaged value; because these skews are so large, means of different collections of random samples vary by e.g. more than a factor of two for fewer than

$(Re = 6000, Ri = 0.16)$ for different sample sizes. Uncertainty is quantified by the normalized standard deviation of the sample mean for collections of samples taken at random times along the temporal history of the overturning event. Bias is quantified by the ratio of the median sample mean from these collections of randomly timed samples to the true time-averaged mean of each property. Because all the properties shown are positively skewed quantities, the sample means tend to miss the peak values and thus underestimate the time-averaged value; because these skews are so large, means of different collections of random samples vary by e.g. more than a factor of two for fewer than ![]() $\sim$16 measurements. Turbulent measurements are snapshots of events; it is not possible to make tens of measurements of the same turbulent event. These appreciable biases and uncertainties for characterizing individual events thus underscore the importance of the statistics of turbulent properties.

$\sim$16 measurements. Turbulent measurements are snapshots of events; it is not possible to make tens of measurements of the same turbulent event. These appreciable biases and uncertainties for characterizing individual events thus underscore the importance of the statistics of turbulent properties.

The heavy-tailed nature of the ![]() $\varepsilon$ distribution in Figure 4a presents a similar challenge for constraining the average

$\varepsilon$ distribution in Figure 4a presents a similar challenge for constraining the average ![]() $\varepsilon$ value for an ocean volume from a limited sample set. Figure 3b shows the same as Figure 3a, but for random samples from a log-skew-normal distribution whose parameters are fit to match the

$\varepsilon$ value for an ocean volume from a limited sample set. Figure 3b shows the same as Figure 3a, but for random samples from a log-skew-normal distribution whose parameters are fit to match the ![]() $\varepsilon$ data from the experiments described above (

$\varepsilon$ data from the experiments described above (![]() $\xi = -24.8$,

$\xi = -24.8$, ![]() $\omega = 3.91$,

$\omega = 3.91$, ![]() $\alpha = 5.89$) using the same procedure as in Reference Cael and MashayekCM21. We discard values above a chosen

$\alpha = 5.89$) using the same procedure as in Reference Cael and MashayekCM21. We discard values above a chosen ![]() $\varepsilon _{max}$ threshold of

$\varepsilon _{max}$ threshold of ![]() $10^{-5}$ m

$10^{-5}$ m![]() $^2$ s

$^2$ s![]() $^{-3}$; as with any parametrization for the PDF of

$^{-3}$; as with any parametrization for the PDF of ![]() $\varepsilon$ supported on

$\varepsilon$ supported on ![]() $(0,\infty )$, the log-skew-normal allows for a non-zero probability density for unmeasurably and/or unphysically large

$(0,\infty )$, the log-skew-normal allows for a non-zero probability density for unmeasurably and/or unphysically large ![]() $\varepsilon$ values, which are either too large to be measured by sampling probes and/or yield impossibly large

$\varepsilon$ values, which are either too large to be measured by sampling probes and/or yield impossibly large ![]() $L_O$ values. Here,

$L_O$ values. Here, ![]() $\mathcal {O}(1000)$ samples are needed to make the underestimation bias less than

$\mathcal {O}(1000)$ samples are needed to make the underestimation bias less than ![]() $\sim$10 %, and

$\sim$10 %, and ![]() $\mathcal {O}(100)$ samples are needed to make the standard deviation of sample means less than the true mean (i.e. <100 % relative uncertainty). (Larger/smaller values of

$\mathcal {O}(100)$ samples are needed to make the standard deviation of sample means less than the true mean (i.e. <100 % relative uncertainty). (Larger/smaller values of ![]() $\varepsilon _{max}$ increase/reduce these sample size numbers.) This further underscores the need for large measurement sets and a statistical approach to estimate bulk turbulent properties accurately.

$\varepsilon _{max}$ increase/reduce these sample size numbers.) This further underscores the need for large measurement sets and a statistical approach to estimate bulk turbulent properties accurately.

4. A recipe for  $\varGamma _B$

$\varGamma _B$

Ingredients: ![]() $\varGamma (R_{OT})$ for individual patches + statistics of

$\varGamma (R_{OT})$ for individual patches + statistics of ![]() $L_O$,

$L_O$, ![]() $L_T$, and

$L_T$, and ![]() $R_{OT}$.

$R_{OT}$.

Here, we aim to exploit the log-skew-normality of ![]() $\varepsilon$ and the statistics of

$\varepsilon$ and the statistics of ![]() $R_{OT}$ to construct a parametrization for

$R_{OT}$ to construct a parametrization for ![]() $\varGamma _B$ based on the total power and the mean stratification for the region (or the grid cell) for which

$\varGamma _B$ based on the total power and the mean stratification for the region (or the grid cell) for which ![]() $\varGamma _B$ is sought. To this end, first we note that

$\varGamma _B$ is sought. To this end, first we note that ![]() $L_O \propto \varepsilon ^{1/2}$ by definition; the former lies within the

$L_O \propto \varepsilon ^{1/2}$ by definition; the former lies within the ![]() $R_{OT}$-based

$R_{OT}$-based ![]() $\varGamma$ parametrization in (2.2) and the latter is captured by (3.2) (historically,

$\varGamma$ parametrization in (2.2) and the latter is captured by (3.2) (historically, ![]() $\varepsilon$ has often been described as log-normally distributed based on a simple argument from multi-stage subdivisions of an initial flux for three-dimensional homogeneous isotropic turbulence (Reference Gurvich and YaglomGurvich & Yaglom, 1967), but the log-normal distribution has also been long recognized as a quantitatively inaccurate description of measured distributions (Reference Yamazaki and LueckYamazaki & Lueck, 1990)). Because

$\varepsilon$ has often been described as log-normally distributed based on a simple argument from multi-stage subdivisions of an initial flux for three-dimensional homogeneous isotropic turbulence (Reference Gurvich and YaglomGurvich & Yaglom, 1967), but the log-normal distribution has also been long recognized as a quantitatively inaccurate description of measured distributions (Reference Yamazaki and LueckYamazaki & Lueck, 1990)). Because ![]() $L_O$ divides

$L_O$ divides ![]() $\varepsilon$ by another random variable and then takes a square root of their quotient, this distribution is also preserved for

$\varepsilon$ by another random variable and then takes a square root of their quotient, this distribution is also preserved for ![]() $L_O$ (supplementary material, Figure S1, Table S1; also see below). Here, we pair the parametrizations for the statistical distribution of

$L_O$ (supplementary material, Figure S1, Table S1; also see below). Here, we pair the parametrizations for the statistical distribution of ![]() $\varepsilon$ in (3.2) and

$\varepsilon$ in (3.2) and ![]() $\varGamma _i=f(R_{OT})$ in (2.2) to obtain a parametrization for

$\varGamma _i=f(R_{OT})$ in (2.2) to obtain a parametrization for ![]() $\varGamma _B$.

$\varGamma _B$.

If, as described above, ![]() $\varepsilon _B$ is log-skew-normally distributed because it is the sum of log-normal random variables (call these

$\varepsilon _B$ is log-skew-normally distributed because it is the sum of log-normal random variables (call these ![]() $\varepsilon _i$), then

$\varepsilon _i$), then ![]() $L_O$ must also be so distributed. If we pass the

$L_O$ must also be so distributed. If we pass the ![]() $N^3$ to the summed-over

$N^3$ to the summed-over ![]() $\varepsilon _i$, such that

$\varepsilon _i$, such that ![]() $\varepsilon _B/N^3 = \sum _i (\varepsilon _i/N^3)$, this will introduce a correlation into the

$\varepsilon _B/N^3 = \sum _i (\varepsilon _i/N^3)$, this will introduce a correlation into the ![]() $\varepsilon _i/N^3$ being summed over, which does not affect the log-skew-normality of their sum (Reference Hcine and BouallegueHcine & Bouallegue, 2015). For the

$\varepsilon _i/N^3$ being summed over, which does not affect the log-skew-normality of their sum (Reference Hcine and BouallegueHcine & Bouallegue, 2015). For the ![]() $\varepsilon _i$, this introduces another variable multiplicative factor and these each should still then be log-normal, so altogether

$\varepsilon _i$, this introduces another variable multiplicative factor and these each should still then be log-normal, so altogether ![]() $\sum _i (\varepsilon _i/N^3)$ is still the sum of log-normal random variables. As the skew-normal distribution is insensitive to multiplicative transformations – we may write any skew-normal random variable

$\sum _i (\varepsilon _i/N^3)$ is still the sum of log-normal random variables. As the skew-normal distribution is insensitive to multiplicative transformations – we may write any skew-normal random variable ![]() $s$ as

$s$ as ![]() $s = \mu + \sigma (\delta |n_1| + n_2 \sqrt {1 - \delta ^2})$ (Reference PourahmadiPourahmadi, 2007) so multiplying by some factor

$s = \mu + \sigma (\delta |n_1| + n_2 \sqrt {1 - \delta ^2})$ (Reference PourahmadiPourahmadi, 2007) so multiplying by some factor ![]() $m$ just changes

$m$ just changes ![]() $\mu \to m\mu$ and

$\mu \to m\mu$ and ![]() $\sigma \to m\sigma$ – the log-skew-normal must be equivalently insensitive to exponentiation. Thus, if

$\sigma \to m\sigma$ – the log-skew-normal must be equivalently insensitive to exponentiation. Thus, if ![]() $\varepsilon _B$ is log-skew-normally distributed, then so is

$\varepsilon _B$ is log-skew-normally distributed, then so is ![]() $L_O$. We indeed find that all the datasets used here, as well as their aggregate, are well described by a log-skew-normal distribution (Kuiper's statistic

$L_O$. We indeed find that all the datasets used here, as well as their aggregate, are well described by a log-skew-normal distribution (Kuiper's statistic ![]() $V = 0.021$ for the combined dataset and as well as the median across the individual datasets; supplementary material, Figure S1, Table S1).

$V = 0.021$ for the combined dataset and as well as the median across the individual datasets; supplementary material, Figure S1, Table S1).

In contrast, there is not a clear description of the probability distribution of ![]() $L_T$. While calculating

$L_T$. While calculating ![]() $L_T$ is straightforward conceptually, it involves subjective choices that bias its distribution (Reference Mater, Venayagamoorthy, Laurent and MoumMater et al., 2015). Thus, it is less practical to identify the ‘true’ distribution for

$L_T$ is straightforward conceptually, it involves subjective choices that bias its distribution (Reference Mater, Venayagamoorthy, Laurent and MoumMater et al., 2015). Thus, it is less practical to identify the ‘true’ distribution for ![]() $L_T$ from collections of

$L_T$ from collections of ![]() $L_T$ measurements, as is the equivalent identification of an underlying distribution for

$L_T$ measurements, as is the equivalent identification of an underlying distribution for ![]() $\varepsilon$. It has recently been argued that the size of turbulent overturns, which are thought to have a correspondence with

$\varepsilon$. It has recently been argued that the size of turbulent overturns, which are thought to have a correspondence with ![]() $L_T$, should be power-law distributed (Reference Smyth, Nash and MoumSmyth, Nash, & Moum, 2019), but we find little to no evidence of this in the datasets we use here (supplementary material, Table S2). Either

$L_T$, should be power-law distributed (Reference Smyth, Nash and MoumSmyth, Nash, & Moum, 2019), but we find little to no evidence of this in the datasets we use here (supplementary material, Table S2). Either ![]() $L_T$ is not proportional to patch size in the datasets examined here or the patch sizes in these datasets are not power-law distributed (Reference Clauset, Shalizi and NewmanClauset, Shalizi, & Newman, 2009). The

$L_T$ is not proportional to patch size in the datasets examined here or the patch sizes in these datasets are not power-law distributed (Reference Clauset, Shalizi and NewmanClauset, Shalizi, & Newman, 2009). The ![]() $L_T$ probability distribution for all the datasets used here is unimodal in log-space, such that at least the bulk of the distributions are qualitatively much closer to log-normally distributed than power-law distributed (supplementary material, Figure S1).

$L_T$ probability distribution for all the datasets used here is unimodal in log-space, such that at least the bulk of the distributions are qualitatively much closer to log-normally distributed than power-law distributed (supplementary material, Figure S1).

Regardless, it is the probability distribution of ![]() $R_{OT} = L_O/L_T$ that is of interest here, for which a parametrization of the probability of

$R_{OT} = L_O/L_T$ that is of interest here, for which a parametrization of the probability of ![]() $L_T$ is not necessary. Instead, one needs a suitable description of the conditional distribution of

$L_T$ is not necessary. Instead, one needs a suitable description of the conditional distribution of ![]() $L_T$ for a given

$L_T$ for a given ![]() $L_O$ value, i.e.

$L_O$ value, i.e. ![]() $P(L_T | L_O)$. Somewhat surprisingly, we find that the log-skew-normal distribution is again an excellent description of the probability distribution of

$P(L_T | L_O)$. Somewhat surprisingly, we find that the log-skew-normal distribution is again an excellent description of the probability distribution of ![]() $R_{OT}$ (

$R_{OT}$ (![]() $V = 0.010$ for the combined dataset and the median

$V = 0.010$ for the combined dataset and the median ![]() $V = 0.027$ across the eight individual datasets; supplementary material, Table S1), but that in this case, the log-skewness is negative. The log-skewness of

$V = 0.027$ across the eight individual datasets; supplementary material, Table S1), but that in this case, the log-skewness is negative. The log-skewness of ![]() $R_{OT}$ is in fact only positive for the IH18 dataset (skewness

$R_{OT}$ is in fact only positive for the IH18 dataset (skewness ![]() $\tilde {\mu }_3 = 0.48$). Thus, the log-skew-normal distribution is, similar to

$\tilde {\mu }_3 = 0.48$). Thus, the log-skew-normal distribution is, similar to ![]() $\varepsilon$ and

$\varepsilon$ and ![]() $L_O$, a satisfactory parametrization of the probability distribution of

$L_O$, a satisfactory parametrization of the probability distribution of ![]() $R_{OT}$, but by and large with a different sign in log-skewness. The question then becomes how

$R_{OT}$, but by and large with a different sign in log-skewness. The question then becomes how ![]() $L_O$ and

$L_O$ and ![]() $L_T$ are related such that the distribution of

$L_T$ are related such that the distribution of ![]() $R_{OT}$: (i) is log-skew-normal; (ii) has a negative log-skewness and (iii) is peaked at

$R_{OT}$: (i) is log-skew-normal; (ii) has a negative log-skewness and (iii) is peaked at ![]() $\mathcal {O}(1)$. In the absence of a theory for the probability distribution of

$\mathcal {O}(1)$. In the absence of a theory for the probability distribution of ![]() $L_T$, we derive an empirical construction of

$L_T$, we derive an empirical construction of ![]() $P(L_T|L_O)$ that recapitulates the probability distribution of

$P(L_T|L_O)$ that recapitulates the probability distribution of ![]() $R_{OT}$ with simulated

$R_{OT}$ with simulated ![]() $L_T$ values. As

$L_T$ values. As ![]() $L_T$ appears to be approximately log-normally distributed (supplementary material, Figure S1), it is justifiable to base such a construction on a statistical scaling relationship, with multiplicative fluctuations.

$L_T$ appears to be approximately log-normally distributed (supplementary material, Figure S1), it is justifiable to base such a construction on a statistical scaling relationship, with multiplicative fluctuations.

The conditions (i–iii) above can occur for ![]() $R_{OT}$ if

$R_{OT}$ if ![]() $L_T$ scales heteroscedastically, i.e. the fluctuations around how

$L_T$ scales heteroscedastically, i.e. the fluctuations around how ![]() $L_T$ scales with

$L_T$ scales with ![]() $L_O$ are themselves also a function of

$L_O$ are themselves also a function of ![]() $L_O$, and the coefficients of the scaling relationship are close to unity. If

$L_O$, and the coefficients of the scaling relationship are close to unity. If ![]() $L_T {\sim } \zeta L_O^\beta$ with

$L_T {\sim } \zeta L_O^\beta$ with ![]() $\zeta \approx 1$ and

$\zeta \approx 1$ and ![]() $\beta \approx 1$, then most values of

$\beta \approx 1$, then most values of ![]() $L_O/L_T$ will be

$L_O/L_T$ will be ![]() $\mathcal {O}(1)$. If this scaling relationship is tight when

$\mathcal {O}(1)$. If this scaling relationship is tight when ![]() $L_O$ is large (i.e. small fluctuations and low probability of small

$L_O$ is large (i.e. small fluctuations and low probability of small ![]() $L_T$), this will make cases where

$L_T$), this will make cases where ![]() $L_O/L_T$ is very large unlikely. Conversely, if this scaling relationship is weaker when

$L_O/L_T$ is very large unlikely. Conversely, if this scaling relationship is weaker when ![]() $L_O$ is small (i.e. larger fluctuations and comparatively higher probability of larger

$L_O$ is small (i.e. larger fluctuations and comparatively higher probability of larger ![]() $L_T$), this will make cases where

$L_T$), this will make cases where ![]() $L_O/L_T$ is very small less unlikely. Together, these heteroscedastic effects can change the sign of the log-skewness.

$L_O/L_T$ is very small less unlikely. Together, these heteroscedastic effects can change the sign of the log-skewness.

As both ![]() $L_O$ and

$L_O$ and ![]() $L_T$ are random variables, their scaling relationship can be captured by model II regression; the heteroscedasticity of this relationship can then be captured by quantile regression, which estimates the conditional quantiles of the response variable (Reference Koenker and HallockKoenker & Hallock, 2001), applied to the residuals. First, applying model II regression to the combined and log-transformed

$L_T$ are random variables, their scaling relationship can be captured by model II regression; the heteroscedasticity of this relationship can then be captured by quantile regression, which estimates the conditional quantiles of the response variable (Reference Koenker and HallockKoenker & Hallock, 2001), applied to the residuals. First, applying model II regression to the combined and log-transformed ![]() $(L_O,L_T)$ dataset (i.e. that used in Figure 2a,b), we find the best-fit scaling for these data to be

$(L_O,L_T)$ dataset (i.e. that used in Figure 2a,b), we find the best-fit scaling for these data to be ![]() $L_T = 1.24 L_O^{1.01}$ (see Figure 4b; we use least-squares cubic regression (Reference YorkYork, 1966) but other standard methods such as bisector or major axis yield similar results). Then applying quantile regression to the residuals, we indeed find that the fluctuations in

$L_T = 1.24 L_O^{1.01}$ (see Figure 4b; we use least-squares cubic regression (Reference YorkYork, 1966) but other standard methods such as bisector or major axis yield similar results). Then applying quantile regression to the residuals, we indeed find that the fluctuations in ![]() $L_T$ around this scaling relationship increase as

$L_T$ around this scaling relationship increase as ![]() $L_O$ decreases. This decrease is characterized by the relationship

$L_O$ decreases. This decrease is characterized by the relationship ![]() $r = r_o + r_1 \log _{10} (L_O)$, where

$r = r_o + r_1 \log _{10} (L_O)$, where ![]() $r$ is the amplitude of the residuals around the best-fit scaling,

$r$ is the amplitude of the residuals around the best-fit scaling, ![]() $r_o$ is the residual amplitude when

$r_o$ is the residual amplitude when ![]() $L_0 = 1$ m and

$L_0 = 1$ m and ![]() $r_1$ captures how the residuals decrease as

$r_1$ captures how the residuals decrease as ![]() $L_O$ increases. We calculate separate relationships for positive and negative residuals, via quantile regression. (We use the 10th and 90th percentiles to compute

$L_O$ increases. We calculate separate relationships for positive and negative residuals, via quantile regression. (We use the 10th and 90th percentiles to compute ![]() $r_o$ and

$r_o$ and ![]() $r_1$ but our results are not sensitive to this choice.) We can then recover the joint

$r_1$ but our results are not sensitive to this choice.) We can then recover the joint ![]() $(L_O,L_T)$ distribution and hence the

$(L_O,L_T)$ distribution and hence the ![]() $R_{OT}$ distribution by simulating the

$R_{OT}$ distribution by simulating the ![]() $L_T$ accordingly for a given

$L_T$ accordingly for a given ![]() $L_O$. The simulated

$L_O$. The simulated ![]() $L_T$ values yield good agreement with the empirical

$L_T$ values yield good agreement with the empirical ![]() $R_{OT}$ distribution (

$R_{OT}$ distribution (![]() $V = 0.028$). We define

$V = 0.028$). We define ![]() $L_T = \eta \times 1.24 L_O^{1.01}$, where

$L_T = \eta \times 1.24 L_O^{1.01}$, where ![]() $\eta$ is a log-normal random variable with

$\eta$ is a log-normal random variable with ![]() $\mu = 0$ and

$\mu = 0$ and ![]() $\sigma \propto L_O$. This results in a conditional distribution for

$\sigma \propto L_O$. This results in a conditional distribution for ![]() $L_T$ that matches the estimated scaling relationships, and is thus an approximation of the joint distribution of the data shown in Figure 4b.

$L_T$ that matches the estimated scaling relationships, and is thus an approximation of the joint distribution of the data shown in Figure 4b.

Physically, this scaling behaviour has an intuitive interpretation (as illustrated in Figure 4c): ![]() $L_T$ scaling with

$L_T$ scaling with ![]() $L_O$ with an exponent close to unity, such that the peak in the probability density function for

$L_O$ with an exponent close to unity, such that the peak in the probability density function for ![]() $R_{OT}$ is

$R_{OT}$ is ![]() $\mathcal {O}(1)$, occurs because for most turbulent overturns, the displacement of fluid parcels in that patch (

$\mathcal {O}(1)$, occurs because for most turbulent overturns, the displacement of fluid parcels in that patch (![]() $L_T$) will be of the same order of magnitude as the vertical scale that they can be displaced given the background stratification (

$L_T$) will be of the same order of magnitude as the vertical scale that they can be displaced given the background stratification (![]() $L_O$), as discussed above. If an overturn has a small

$L_O$), as discussed above. If an overturn has a small ![]() $L_O$, this could either be because it is a small overturn, meaning it has a similarly small

$L_O$, this could either be because it is a small overturn, meaning it has a similarly small ![]() $L_T$, or a young overturn with a larger

$L_T$, or a young overturn with a larger ![]() $L_T$ but still low turbulent dissipation. If

$L_T$ but still low turbulent dissipation. If ![]() $L_O$ is large, however, it likely corresponds to the energetic phase of the flow (i.e. the optimal mixing phase in Figure 1c) and so

$L_O$ is large, however, it likely corresponds to the energetic phase of the flow (i.e. the optimal mixing phase in Figure 1c) and so ![]() $L_T$ could be reasonably expected to be similarly large. This asymmetry in the life cycle of turbulent overturns produces this skewed, heteroscedastic scaling in Figure 4b. Figure 4c groups the data in Figure 4b (same as that in Figures 2a and 2b) into the three categories discussed above, and shows that (a) weak stratification cannot yield a high flux coefficient even at high energy levels as there is barely enough to mix, (b) low energy turbulence in the presence of strong stratification can result in a high flux coefficient, but importantly that does not imply a high flux (since flux