1. Introduction

1.1. Setting and motivation

The class of tempered stable processes is very popular in the financial modelling of asset prices of risky assets; see e.g. [Reference Cont and Tankov10]. A tempered stable process

![]() $X=(X_t)_{t\geq0}$

naturally addresses the shortcomings of diffusion models by allowing for the large (often heavy-tailed and asymmetric) sudden movements of the asset price observed in the markets, while preserving the exponential moments required in exponential Lévy models

$X=(X_t)_{t\geq0}$

naturally addresses the shortcomings of diffusion models by allowing for the large (often heavy-tailed and asymmetric) sudden movements of the asset price observed in the markets, while preserving the exponential moments required in exponential Lévy models

![]() $S_0 e^X$

of asset prices [Reference Carr, Geman, Madan and Yor7, Reference Cont and Tankov10, Reference Kou26, Reference Schoutens36]. Of particular interest in this context are the expected drawdown (the current decline from a historical peak) and its duration (the elapsed time since the historical peak) (see e.g. [Reference Baurdoux, Palmowski and Pistorius3, Reference Carr, Zhang and Hadjiliadis8, Reference Landriault, Li and Zhang28, Reference Sornette38, Reference Vecer39]), as well as barrier option prices [Reference Avram, Chan and Usabel2, Reference Giles and Xia14, Reference Kudryavtsev and Levendorski27, Reference Schoutens37] and the estimation of ruin probabilities in insurance [Reference Klüppelberg, Kyprianou and Maller25, Reference Li, Zhao and Zhou29, Reference Mordecki31]. In these application areas, the key quantities are the historic maximum

$S_0 e^X$

of asset prices [Reference Carr, Geman, Madan and Yor7, Reference Cont and Tankov10, Reference Kou26, Reference Schoutens36]. Of particular interest in this context are the expected drawdown (the current decline from a historical peak) and its duration (the elapsed time since the historical peak) (see e.g. [Reference Baurdoux, Palmowski and Pistorius3, Reference Carr, Zhang and Hadjiliadis8, Reference Landriault, Li and Zhang28, Reference Sornette38, Reference Vecer39]), as well as barrier option prices [Reference Avram, Chan and Usabel2, Reference Giles and Xia14, Reference Kudryavtsev and Levendorski27, Reference Schoutens37] and the estimation of ruin probabilities in insurance [Reference Klüppelberg, Kyprianou and Maller25, Reference Li, Zhao and Zhou29, Reference Mordecki31]. In these application areas, the key quantities are the historic maximum

![]() $\overline{X}_T$

at time T, the time

$\overline{X}_T$

at time T, the time

![]() $\tau_T(X)$

at which this maximum was attained during the time interval [0,T], and the value

$\tau_T(X)$

at which this maximum was attained during the time interval [0,T], and the value

![]() $X_T$

of the process X at time T.

$X_T$

of the process X at time T.

In this paper we focus on the Monte Carlo (MC) estimation of

![]() $\mathbb{E} [g(X_T,\overline{X}_T,\tau_T(X))]$

, where the payoff g is (locally) Lipschitz or of barrier type (cf. Proposition 3.1 below), covering the aforementioned applications. We construct a novel tempered stick-breaking algorithm (TSB-Alg), applicable to a tempered Lévy process, if the increments of the process without tempering can be simulated, which clearly holds if X is a tempered stable process. We show that the bias of TSB-Alg decays geometrically fast in its computational cost for functions g that are either locally Lipschitz or of barrier type (see Subsection 3 for details). We prove that the corresponding multilevel Monte Carlo (MLMC) estimator has optimal computational complexity (i.e. of order

$\mathbb{E} [g(X_T,\overline{X}_T,\tau_T(X))]$

, where the payoff g is (locally) Lipschitz or of barrier type (cf. Proposition 3.1 below), covering the aforementioned applications. We construct a novel tempered stick-breaking algorithm (TSB-Alg), applicable to a tempered Lévy process, if the increments of the process without tempering can be simulated, which clearly holds if X is a tempered stable process. We show that the bias of TSB-Alg decays geometrically fast in its computational cost for functions g that are either locally Lipschitz or of barrier type (see Subsection 3 for details). We prove that the corresponding multilevel Monte Carlo (MLMC) estimator has optimal computational complexity (i.e. of order

![]() $\varepsilon^{-2}$

if the mean squared error is at most

$\varepsilon^{-2}$

if the mean squared error is at most

![]() $\varepsilon^2$

) and establish the central limit theorem (CLT) for the MLMC estimator. Using the CLT we construct confidence intervals for barrier option prices and various risk measures based on drawdown under the tempered stable (CGMY) model. TSB-Alg combines the stick-breaking algorithm in [Reference González Cázares, Mijatović and Uribe Bravo18] with the exponential change of measure for Lévy processes, also applied in [Reference Poirot and Tankov33] for the MC pricing of European options. A short YouTube video [Reference González Cázares and Mijatović17] describes TSB-Alg and the results of this paper.

$\varepsilon^2$

) and establish the central limit theorem (CLT) for the MLMC estimator. Using the CLT we construct confidence intervals for barrier option prices and various risk measures based on drawdown under the tempered stable (CGMY) model. TSB-Alg combines the stick-breaking algorithm in [Reference González Cázares, Mijatović and Uribe Bravo18] with the exponential change of measure for Lévy processes, also applied in [Reference Poirot and Tankov33] for the MC pricing of European options. A short YouTube video [Reference González Cázares and Mijatović17] describes TSB-Alg and the results of this paper.

1.2. Comparison with the literature

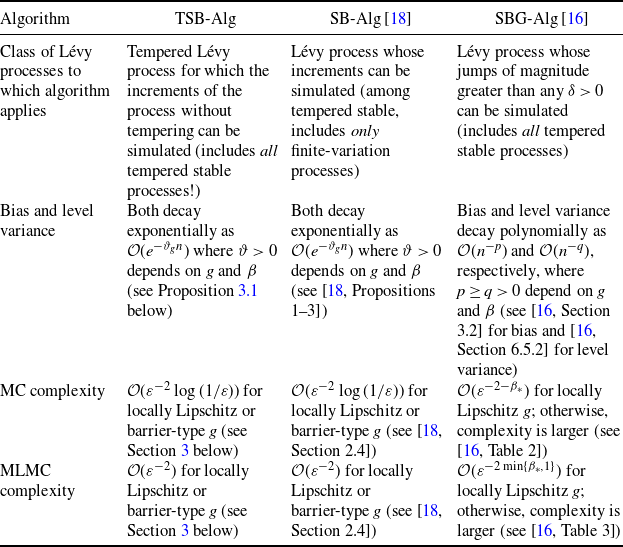

Exact simulation of the drawdown is currently out of reach. Under the assumption that the increments of the Lévy process X can be simulated (an assumption not satisfied by tempered stable models of infinite variation), the algorithm SB-Alg in [Reference González Cázares, Mijatović and Uribe Bravo18] has a geometrically small bias, significantly outperforming other algorithms for which the computational complexity analysis has been carried out. For instance, the computational complexity analysis for the procedures presented in [Reference Figueroa-López and Tankov12, Reference Kim and Kim24], applicable to tempered stable processes of finite variation, has not been carried out, so that a direct quantitative comparison with SB-Alg [Reference González Cázares, Mijatović and Uribe Bravo18] is not possible at present. If the increments cannot be sampled, a general approach utilises the Gaussian approximation of small jumps, in which case the algorithm SBG-Alg [Reference González Cázares and Mijatović16] outperforms its competitors (e.g. random walk approximation; see [Reference González Cázares and Mijatović16] for details), while retaining polynomially small bias. Thus it suffices to compare TSB-Alg below with SB-Alg [Reference González Cázares, Mijatović and Uribe Bravo18] and SBG-Alg [Reference González Cázares and Mijatović16]. Table 1 below provides a summary of the properties of TSB-Alg, SB-Alg, and SBG-Alg as well as the asymptotic computational complexities of the corresponding MC and MLMC estimators based on these algorithms (see also Subsection 3.4 below for a detailed comparison).

The stick-breaking (SB) representation in (2) plays a central role in the algorithms TSB-Alg, SB-Alg, and SBG-Alg. The SB representation was used in [Reference González Cázares, Mijatović and Uribe Bravo18] to obtain an approximation

![]() $\chi_n$

of

$\chi_n$

of

![]() $\chi\,:\!=\,(X_T,\overline{X}_T,\tau_T(X))$

that converges geometrically fast in the computational cost when the increments of X can be simulated exactly. In [Reference González Cázares and Mijatović16], the same representation was used in conjunction with a small-jump Gaussian approximation for arbitrary Lévy processes. In the present work we address a situation in between those of the two aforementioned papers, using TSB-Alg below. TSB-Alg preserves the geometric convergence in the computational cost of SB-Alg, while being applicable to general tempered stable processes (unlike SB-Alg [Reference González Cázares, Mijatović and Uribe Bravo18] in the infinite-variation case) and asymptotically outperforming SBG-Alg [Reference González Cázares and Mijatović16]; see Table 1 for an overview.

$\chi\,:\!=\,(X_T,\overline{X}_T,\tau_T(X))$

that converges geometrically fast in the computational cost when the increments of X can be simulated exactly. In [Reference González Cázares and Mijatović16], the same representation was used in conjunction with a small-jump Gaussian approximation for arbitrary Lévy processes. In the present work we address a situation in between those of the two aforementioned papers, using TSB-Alg below. TSB-Alg preserves the geometric convergence in the computational cost of SB-Alg, while being applicable to general tempered stable processes (unlike SB-Alg [Reference González Cázares, Mijatović and Uribe Bravo18] in the infinite-variation case) and asymptotically outperforming SBG-Alg [Reference González Cázares and Mijatović16]; see Table 1 for an overview.

Table 1. Summary of the properties of TSB-Alg, SB-Alg [Reference González Cázares, Mijatović and Uribe Bravo18], and SBG-Alg [Reference González Cázares and Mijatović16]. The index

![]() $\beta_*$

, defined in (21) below, is slightly larger than the Blumenthal–Getoor index

$\beta_*$

, defined in (21) below, is slightly larger than the Blumenthal–Getoor index

![]() $\beta$

; see Section 5 below for details. The bias and level variance are parametrised by computational effort n as

$\beta$

; see Section 5 below for details. The bias and level variance are parametrised by computational effort n as

![]() $n\to\infty$

, while the MC and MLMC complexities are parametrised by the accuracy

$n\to\infty$

, while the MC and MLMC complexities are parametrised by the accuracy

![]() $\varepsilon$

(i.e., the mean squared error is at most

$\varepsilon$

(i.e., the mean squared error is at most

![]() $\varepsilon^2$

) as

$\varepsilon^2$

) as

![]() $\varepsilon\to 0$

.

$\varepsilon\to 0$

.

1.3. Organisation

The remainder of the paper is structured as follows. In Section 2 we recall the SB representation and construct TSB-Alg. In Section 3 we describe the geometric decay of the bias and the strong error in

![]() $L^p$

and explain what the computational complexities of the MC and MLMC estimators are. We discuss briefly in Subsection 3.3 the construction and properties of unbiased estimators based on TSB-Alg. In Subsection 3.4 we provide an in-depth comparison of TSB-Alg with the SB and SBG algorithms, identifying where each algorithm outperforms the others. In Section 4 we consider the case of tempered stable processes and illustrate the previously described results with numerical examples. The proofs of all the results except Theorem 2.1, which is stated and proved in Section 2, are given in Section 5 below.

$L^p$

and explain what the computational complexities of the MC and MLMC estimators are. We discuss briefly in Subsection 3.3 the construction and properties of unbiased estimators based on TSB-Alg. In Subsection 3.4 we provide an in-depth comparison of TSB-Alg with the SB and SBG algorithms, identifying where each algorithm outperforms the others. In Section 4 we consider the case of tempered stable processes and illustrate the previously described results with numerical examples. The proofs of all the results except Theorem 2.1, which is stated and proved in Section 2, are given in Section 5 below.

2. The tempered stick-breaking algorithm

Let

![]() $T>0$

be a time horizon and

$T>0$

be a time horizon and

![]() ${\boldsymbol\lambda}=(\lambda_+,\lambda_-)\in\mathbb{R}_+^2$

a vector with non-negative coordinates, different from the origin

${\boldsymbol\lambda}=(\lambda_+,\lambda_-)\in\mathbb{R}_+^2$

a vector with non-negative coordinates, different from the origin

![]() ${\boldsymbol0}=(0,0)$

. A stochastic process

${\boldsymbol0}=(0,0)$

. A stochastic process

![]() $X=(X_t)_{t\in[0,T]}$

is said to be a tempered Lévy process under the probability measure

$X=(X_t)_{t\in[0,T]}$

is said to be a tempered Lévy process under the probability measure

![]() $\mathbb{P}_{\boldsymbol\lambda}$

if its characteristic function satisfies

$\mathbb{P}_{\boldsymbol\lambda}$

if its characteristic function satisfies

\begin{align} t^{-1}\log\mathbb{E}_{\boldsymbol\lambda}\big[e^{iuX_t}\big]= & iub_{\boldsymbol\lambda}-\frac{1}{2}\sigma^2u^2+\int_{\mathbb{R}\setminus\{0\}}\big(e^{iux}-1-iux\cdot\mathbb{1}_{({-}1,1)}(x)\big)\nu_{\boldsymbol\lambda}(\textrm{d} x),\\&\qquad\qquad\qquad\qquad\qquad\qquad\qquad \text{for all }u\in\mathbb{R},\,t>0,\end{align}

\begin{align} t^{-1}\log\mathbb{E}_{\boldsymbol\lambda}\big[e^{iuX_t}\big]= & iub_{\boldsymbol\lambda}-\frac{1}{2}\sigma^2u^2+\int_{\mathbb{R}\setminus\{0\}}\big(e^{iux}-1-iux\cdot\mathbb{1}_{({-}1,1)}(x)\big)\nu_{\boldsymbol\lambda}(\textrm{d} x),\\&\qquad\qquad\qquad\qquad\qquad\qquad\qquad \text{for all }u\in\mathbb{R},\,t>0,\end{align}

where

![]() $\mathbb{E}_{\boldsymbol\lambda}$

denotes the expectation under the probability measure

$\mathbb{E}_{\boldsymbol\lambda}$

denotes the expectation under the probability measure

![]() $\mathbb{P}_{\boldsymbol\lambda}$

, and the generating (or Lévy) triplet

$\mathbb{P}_{\boldsymbol\lambda}$

, and the generating (or Lévy) triplet

![]() $(\sigma^2,\nu_{\boldsymbol\lambda},b_{\boldsymbol\lambda})$

is given by

$(\sigma^2,\nu_{\boldsymbol\lambda},b_{\boldsymbol\lambda})$

is given by

where

![]() $\sigma^2\in\mathbb{R}_+$

,

$\sigma^2\in\mathbb{R}_+$

,

![]() $b\in\mathbb{R}$

, and

$b\in\mathbb{R}$

, and

![]() $\nu$

is a Lévy measure on

$\nu$

is a Lévy measure on

![]() $\mathbb{R}\setminus\{0\}$

, i.e.

$\mathbb{R}\setminus\{0\}$

, i.e.

![]() $\nu$

satisfies

$\nu$

satisfies

![]() $\int_{(-1,1)}x^2\nu(\textrm{d} x)<\infty$

and

$\int_{(-1,1)}x^2\nu(\textrm{d} x)<\infty$

and

![]() $\nu(\mathbb{R}\setminus({-}1,1))<\infty$

. (All Lévy triplets in this paper are given with respect to the cutoff function

$\nu(\mathbb{R}\setminus({-}1,1))<\infty$

. (All Lévy triplets in this paper are given with respect to the cutoff function

![]() $x\mapsto\mathbb{1}_{(-1,1)}(x)$

, and the sign function in (1) is defined as

$x\mapsto\mathbb{1}_{(-1,1)}(x)$

, and the sign function in (1) is defined as

![]() ${\textrm{sgn}}(x)\,:\!=\,\mathbb{1}_{\{x>0\}}-\mathbb{1}_{\{x<0\}}$

.) The triplet

${\textrm{sgn}}(x)\,:\!=\,\mathbb{1}_{\{x>0\}}-\mathbb{1}_{\{x<0\}}$

.) The triplet

![]() $\big(\sigma^2,\nu_{\boldsymbol\lambda},b_{\boldsymbol\lambda}\big)$

uniquely determines the law of X via the Lévy–Khintchine formula for the characteristic function of

$\big(\sigma^2,\nu_{\boldsymbol\lambda},b_{\boldsymbol\lambda}\big)$

uniquely determines the law of X via the Lévy–Khintchine formula for the characteristic function of

![]() $X_t$

for

$X_t$

for

![]() $t>0$

given in the displays above (for details, see [Reference Sato35, Theorems 7.10 and 8.1, Definition 8.2]).

$t>0$

given in the displays above (for details, see [Reference Sato35, Theorems 7.10 and 8.1, Definition 8.2]).

Our aim is to sample from the law of the statistic

![]() $(X_T,\overline{X}_T,\tau_T)$

consisting of the position

$(X_T,\overline{X}_T,\tau_T)$

consisting of the position

![]() $X_T$

of the process X at T, the supremum

$X_T$

of the process X at T, the supremum

![]() $\overline{X}_T\,:\!=\,\sup\{X_s:s\in[0,T]\}$

of X on the time interval [0,T], and the time

$\overline{X}_T\,:\!=\,\sup\{X_s:s\in[0,T]\}$

of X on the time interval [0,T], and the time

![]() $\tau_T\,:\!=\,\inf\{s\in[0,T]:\overline{X}_s=\overline{X}_T\}$

at which the supremum was attained in [0,T]. By [Reference González Cázares, Mijatović and Uribe Bravo18, Theorem 1] there exists a coupling

$\tau_T\,:\!=\,\inf\{s\in[0,T]:\overline{X}_s=\overline{X}_T\}$

at which the supremum was attained in [0,T]. By [Reference González Cázares, Mijatović and Uribe Bravo18, Theorem 1] there exists a coupling

![]() $(X,Y,\ell)$

under a probability measure

$(X,Y,\ell)$

under a probability measure

![]() $\mathbb{P}_{\boldsymbol\lambda}$

, such that

$\mathbb{P}_{\boldsymbol\lambda}$

, such that

![]() $\ell=(\ell_n)_{n\in\mathbb{N}}$

is a stick-breaking process on [0,T] based on the uniform law

$\ell=(\ell_n)_{n\in\mathbb{N}}$

is a stick-breaking process on [0,T] based on the uniform law

![]() $\textrm{U}(0,1)$

(i.e.

$\textrm{U}(0,1)$

(i.e.

![]() $L_0\,:\!=\,T$

,

$L_0\,:\!=\,T$

,

![]() $L_n\,:\!=\,L_{n-1}U_n$

, and

$L_n\,:\!=\,L_{n-1}U_n$

, and

![]() $\ell_n\,:\!=\,L_{n-1}-L_n$

for

$\ell_n\,:\!=\,L_{n-1}-L_n$

for

![]() $n\in\mathbb{N}$

, where the

$n\in\mathbb{N}$

, where the

![]() $U_n$

are independent and identically distributed (i.i.d.) as

$U_n$

are independent and identically distributed (i.i.d.) as

![]() $\textrm{U}(0,1)$

), independent of the Lévy process Y with law equal to that of X, and the SB representation holds

$\textrm{U}(0,1)$

), independent of the Lévy process Y with law equal to that of X, and the SB representation holds

![]() $\mathbb{P}_{\boldsymbol\lambda}$

-almost surely (a.s.):

$\mathbb{P}_{\boldsymbol\lambda}$

-almost surely (a.s.):

We stress that

![]() $\ell$

is not independent of X. In fact

$\ell$

is not independent of X. In fact

![]() $(\ell,Y)$

can be expressed naturally through the geometry of the path of X (see [Reference Pitman and Uribe Bravo32, Theorem 1] and the coupling in [Reference González Cázares, Mijatović and Uribe Bravo18]), but further details of the coupling are not important for our purposes. The key step in the construction of our algorithm is given by the following theorem. Its proof is based on the coupling described above and the change-of-measure theorem for Lévy processes [Reference Sato35, Theorems 33.1 and 33.2].

$(\ell,Y)$

can be expressed naturally through the geometry of the path of X (see [Reference Pitman and Uribe Bravo32, Theorem 1] and the coupling in [Reference González Cázares, Mijatović and Uribe Bravo18]), but further details of the coupling are not important for our purposes. The key step in the construction of our algorithm is given by the following theorem. Its proof is based on the coupling described above and the change-of-measure theorem for Lévy processes [Reference Sato35, Theorems 33.1 and 33.2].

Theorem 2.1.

Denote by

![]() $\sigma B$

,

$\sigma B$

,

![]() $Y^{{(+)}}$

,

$Y^{{(+)}}$

,

![]() $Y^{{(-)}}$

the independent Lévy processes with generating triplets

$Y^{{(-)}}$

the independent Lévy processes with generating triplets

![]() $\big(\sigma^2,0,0\big)$

,

$\big(\sigma^2,0,0\big)$

,

![]() $(0,\nu_{\boldsymbol\lambda}|_{(0,\infty)},0)$

,

$(0,\nu_{\boldsymbol\lambda}|_{(0,\infty)},0)$

,

![]() $(0,\nu_{\boldsymbol\lambda}|_{(-\infty,0)},0)$

, respectively, satisfying

$(0,\nu_{\boldsymbol\lambda}|_{(-\infty,0)},0)$

, respectively, satisfying

![]() $Y_t=\sigma B_t+Y_t^{{(+)}}+Y_t^{{(-)}}+b_{\boldsymbol\lambda} t$

for all

$Y_t=\sigma B_t+Y_t^{{(+)}}+Y_t^{{(-)}}+b_{\boldsymbol\lambda} t$

for all

![]() $t\in[0,T]$

,

$t\in[0,T]$

,

![]() $\mathbb{P}_{\boldsymbol\lambda}$

-a.s. Let

$\mathbb{P}_{\boldsymbol\lambda}$

-a.s. Let

![]() $\mathbb{E}_{\boldsymbol\lambda}$

(resp.

$\mathbb{E}_{\boldsymbol\lambda}$

(resp.

![]() $\mathbb{E}_{\boldsymbol0}$

) be the expectation under

$\mathbb{E}_{\boldsymbol0}$

) be the expectation under

![]() $\mathbb{P}_{\boldsymbol\lambda}$

(resp.

$\mathbb{P}_{\boldsymbol\lambda}$

(resp.

![]() $\mathbb{P}_{\boldsymbol0}$

) and define

$\mathbb{P}_{\boldsymbol0}$

) and define

Then for any

![]() $\sigma(\ell,\xi)$

-measurable random variable

$\sigma(\ell,\xi)$

-measurable random variable

![]() $\zeta$

with

$\zeta$

with

![]() $\mathbb{E}_{\boldsymbol\lambda}|\zeta|<\infty$

we have

$\mathbb{E}_{\boldsymbol\lambda}|\zeta|<\infty$

we have

![]() $\mathbb{E}_{\boldsymbol\lambda}[\zeta]= \mathbb{E}_{\boldsymbol0}[\zeta\Upsilon_{\boldsymbol\lambda}]$

.

$\mathbb{E}_{\boldsymbol\lambda}[\zeta]= \mathbb{E}_{\boldsymbol0}[\zeta\Upsilon_{\boldsymbol\lambda}]$

.

Proof. The exponential change-of-measure theorem for Lévy processes (see [Reference Sato35, Theorems 33.1 and 33.2]) implies that for any measurable function F with

![]() $\mathbb{E}_{\boldsymbol\lambda} |F((Y_t)_{t\in[0,T]})|<\infty$

, we have the identity

$\mathbb{E}_{\boldsymbol\lambda} |F((Y_t)_{t\in[0,T]})|<\infty$

, we have the identity

![]() $\mathbb{E}_{\boldsymbol\lambda}[F((Y_t)_{t\in[0,T]})]=\mathbb{E}_{\boldsymbol0}[F((Y_t)_{t\in[0,T]})\Upsilon_{\boldsymbol\lambda}]$

, where

$\mathbb{E}_{\boldsymbol\lambda}[F((Y_t)_{t\in[0,T]})]=\mathbb{E}_{\boldsymbol0}[F((Y_t)_{t\in[0,T]})\Upsilon_{\boldsymbol\lambda}]$

, where

![]() $\Upsilon_{\boldsymbol\lambda}$

is defined in (3) in the statement of Theorem 2.1. Since the stick-breaking process

$\Upsilon_{\boldsymbol\lambda}$

is defined in (3) in the statement of Theorem 2.1. Since the stick-breaking process

![]() $\ell$

is independent of Y under both

$\ell$

is independent of Y under both

![]() $\mathbb{P}_{\boldsymbol\lambda}$

and

$\mathbb{P}_{\boldsymbol\lambda}$

and

![]() $\mathbb{P}_{\boldsymbol0}$

, this property extends to measurable functions of

$\mathbb{P}_{\boldsymbol0}$

, this property extends to measurable functions of

![]() $(\ell, (Y_t)_{t\in[0,T]})$

and thus to measurable functions of

$(\ell, (Y_t)_{t\in[0,T]})$

and thus to measurable functions of

![]() $(\ell,\xi)$

, as claimed.

$(\ell,\xi)$

, as claimed.

By the equality in (2), the measurable function

![]() $\zeta$

of the sequences

$\zeta$

of the sequences

![]() $\ell$

and

$\ell$

and

![]() $\xi$

in Theorem 2.1 may be equal either to

$\xi$

in Theorem 2.1 may be equal either to

![]() $g(\chi)$

(for any integrable function g of the statistic

$g(\chi)$

(for any integrable function g of the statistic

![]() $\chi$

) or to its approximation

$\chi$

) or to its approximation

![]() $g(\chi_n)$

, where

$g(\chi_n)$

, where

![]() $\chi_n$

is as introduced in [Reference González Cázares, Mijatović and Uribe Bravo18]:

$\chi_n$

is as introduced in [Reference González Cázares, Mijatović and Uribe Bravo18]:

Theorem 2.1 enables us to sample

![]() $\chi_n$

under the probability measure

$\chi_n$

under the probability measure

![]() $\mathbb{P}_{\boldsymbol0}$

, which for any tempered stable process X makes the increments of Y stable and thus easy to simulate. Under

$\mathbb{P}_{\boldsymbol0}$

, which for any tempered stable process X makes the increments of Y stable and thus easy to simulate. Under

![]() $\mathbb{P}_{\boldsymbol0}$

, the law of

$\mathbb{P}_{\boldsymbol0}$

, the law of

![]() $Y_t$

equals that of

$Y_t$

equals that of

![]() $Y_t^{{(+)}}+Y_t^{{(-)}}+\sigma B_t+b t$

, where

$Y_t^{{(+)}}+Y_t^{{(-)}}+\sigma B_t+b t$

, where

![]() $\sigma B_t+b t$

is normal

$\sigma B_t+b t$

is normal

![]() $N\big(bt,\sigma^2 t\big)$

with mean bt and variance

$N\big(bt,\sigma^2 t\big)$

with mean bt and variance

![]() $\sigma^2 t$

and the Lévy processes

$\sigma^2 t$

and the Lévy processes

![]() $Y^{{(+)}}$

and

$Y^{{(+)}}$

and

![]() $Y^{{(-)}}$

have triplets

$Y^{{(-)}}$

have triplets

![]() $(0,\nu|_{(0,\infty)},0)$

and

$(0,\nu|_{(0,\infty)},0)$

and

![]() $(0,\nu|_{(-\infty,0)},0)$

, respectively. Denote their distribution functions by

$(0,\nu|_{(-\infty,0)},0)$

, respectively. Denote their distribution functions by

![]() $F^{{(\pm)}}(t,x)\,:\!=\,\mathbb{P}_{\boldsymbol0}\big(Y_t^{{(\pm)}}\le x\big)$

,

$F^{{(\pm)}}(t,x)\,:\!=\,\mathbb{P}_{\boldsymbol0}\big(Y_t^{{(\pm)}}\le x\big)$

,

![]() $x\in\mathbb{R}$

,

$x\in\mathbb{R}$

,

![]() $t>0$

.

$t>0$

.

TSB-Alg

Note that the output

![]() $g(\chi_n)\Upsilon_{\boldsymbol\lambda}$

of TSB-Alg is sampled under

$g(\chi_n)\Upsilon_{\boldsymbol\lambda}$

of TSB-Alg is sampled under

![]() $\mathbb{P}_{\boldsymbol0}$

and, by Theorem 2.1 above, is unbiased since

$\mathbb{P}_{\boldsymbol0}$

and, by Theorem 2.1 above, is unbiased since

![]() $\mathbb{E}_{\boldsymbol0}[g(\chi_n)\Upsilon_{\boldsymbol\lambda}]=\mathbb{E}_{\boldsymbol\lambda}[g(\chi_n)]$

. As our aim is to obtain MC and MLMC estimators for

$\mathbb{E}_{\boldsymbol0}[g(\chi_n)\Upsilon_{\boldsymbol\lambda}]=\mathbb{E}_{\boldsymbol\lambda}[g(\chi_n)]$

. As our aim is to obtain MC and MLMC estimators for

![]() $\mathbb{E}_{\boldsymbol\lambda}[g(\chi)]$

, our next task is to understand the expected error of TSB-Alg; see (6) in Subsection 3.1 below. In [Reference González Cázares, Mijatović and Uribe Bravo18] it was proved that the approximation

$\mathbb{E}_{\boldsymbol\lambda}[g(\chi)]$

, our next task is to understand the expected error of TSB-Alg; see (6) in Subsection 3.1 below. In [Reference González Cázares, Mijatović and Uribe Bravo18] it was proved that the approximation

![]() $\chi_n$

converges geometrically fast in computational effort (or equivalently as

$\chi_n$

converges geometrically fast in computational effort (or equivalently as

![]() $n\to\infty$

) to

$n\to\infty$

) to

![]() $\chi$

if the increments of Y can be sampled under

$\chi$

if the increments of Y can be sampled under

![]() $\mathbb{P}_{\boldsymbol\lambda}$

(see [Reference González Cázares, Mijatović and Uribe Bravo18] for more details and a discussion of the benefits of the ‘correction term’

$\mathbb{P}_{\boldsymbol\lambda}$

(see [Reference González Cázares, Mijatović and Uribe Bravo18] for more details and a discussion of the benefits of the ‘correction term’

![]() $(Y_{L_n},\max\{Y_{L_n},0\},L_n\cdot\mathbb{1}_{\{Y_{L_n}>0\}})$

in (5)). Theorem 2.1 allows us to weaken this requirement in the context of tempered Lévy processes, by requiring that we be able to sample the increments of Y under

$(Y_{L_n},\max\{Y_{L_n},0\},L_n\cdot\mathbb{1}_{\{Y_{L_n}>0\}})$

in (5)). Theorem 2.1 allows us to weaken this requirement in the context of tempered Lévy processes, by requiring that we be able to sample the increments of Y under

![]() $\mathbb{P}_{\boldsymbol0}$

. The main application of TSB-Alg is to general tempered stable processes, as the simulation of their increments is currently out of reach for many cases of interest (see Section 3.4 below for comparison with existing methods when it is not), making the main algorithm in [Reference González Cázares, Mijatović and Uribe Bravo18] not applicable. Moreover, Theorem 2.1 allows us to retain the geometric convergence of

$\mathbb{P}_{\boldsymbol0}$

. The main application of TSB-Alg is to general tempered stable processes, as the simulation of their increments is currently out of reach for many cases of interest (see Section 3.4 below for comparison with existing methods when it is not), making the main algorithm in [Reference González Cázares, Mijatović and Uribe Bravo18] not applicable. Moreover, Theorem 2.1 allows us to retain the geometric convergence of

![]() $\chi_n$

established in [Reference González Cázares, Mijatović and Uribe Bravo18]; see Section 3 below for details.

$\chi_n$

established in [Reference González Cázares, Mijatović and Uribe Bravo18]; see Section 3 below for details.

3. Monte Carlo and multilevel Monte Carlo estimators based on TSB-Alg

3.1. Bias of TSB-Alg

An application of Theorem 2.1 implies that the bias of TSB-Alg equals

The natural coupling

![]() $\big(\chi,\chi_n, Y_T^{{(+)}}, Y_T^{{(-)}}\big)$

in (6) is defined by

$\big(\chi,\chi_n, Y_T^{{(+)}}, Y_T^{{(-)}}\big)$

in (6) is defined by

![]() $Y_T^{{(\pm)}}\,:\!=\,\sum_{k=1}^{\infty}\xi_k^{{(\pm)}}$

,

$Y_T^{{(\pm)}}\,:\!=\,\sum_{k=1}^{\infty}\xi_k^{{(\pm)}}$

,

![]() $\xi_k\,:\!=\,\xi_k^{{(+)}}+\xi_k^{{(-)}}+ \eta_k$

for all

$\xi_k\,:\!=\,\xi_k^{{(+)}}+\xi_k^{{(-)}}+ \eta_k$

for all

![]() $k\in\mathbb{N}$

,

$k\in\mathbb{N}$

,

![]() $\chi$

in (2), and

$\chi$

in (2), and

![]() $\chi_n$

in (5) with

$\chi_n$

in (5) with

![]() $Y_{L_n}\,:\!=\,\sum_{k=n+1}^\infty \xi_k$

, where, conditional on the stick-breaking process

$Y_{L_n}\,:\!=\,\sum_{k=n+1}^\infty \xi_k$

, where, conditional on the stick-breaking process

![]() $\ell=(\ell_k)_{k\in\mathbb{N}}$

, the random variables

$\ell=(\ell_k)_{k\in\mathbb{N}}$

, the random variables

![]() $\{\xi_k^{{(\pm)}},\eta_k:k\in\mathbb{N}\}$

are independent and distributed as

$\{\xi_k^{{(\pm)}},\eta_k:k\in\mathbb{N}\}$

are independent and distributed as

![]() $\xi_k^{{(\pm)}}\sim F^{{(\pm)}}(\ell_k,\cdot)$

and

$\xi_k^{{(\pm)}}\sim F^{{(\pm)}}(\ell_k,\cdot)$

and

![]() $\eta_k\sim N\big(\ell_k b,\sigma^2 \ell_k\big)$

for

$\eta_k\sim N\big(\ell_k b,\sigma^2 \ell_k\big)$

for

![]() $k\in\mathbb{N}$

.

$k\in\mathbb{N}$

.

The following result presents the decay of the strong error

![]() $\Delta_n^g$

for Lipschitz, locally Lipschitz, and two classes of barrier-type discontinuous payoffs that arise frequently in applications. Observe that, in all cases and under the corresponding mild assumptions, the pth moment of the strong error

$\Delta_n^g$

for Lipschitz, locally Lipschitz, and two classes of barrier-type discontinuous payoffs that arise frequently in applications. Observe that, in all cases and under the corresponding mild assumptions, the pth moment of the strong error

![]() $\Delta_n^g$

decays exponentially fast in n with a rate

$\Delta_n^g$

decays exponentially fast in n with a rate

![]() $\vartheta>0$

that depends on the payoff g, the index

$\vartheta>0$

that depends on the payoff g, the index

![]() $\beta_*$

defined in (21) below, and the degree p of the considered moment. In Proposition 3.1 and throughout the paper, the notation

$\beta_*$

defined in (21) below, and the degree p of the considered moment. In Proposition 3.1 and throughout the paper, the notation

![]() $f(n)=\mathcal{O}(g(n))$

as

$f(n)=\mathcal{O}(g(n))$

as

![]() $n\to\infty$

for functions

$n\to\infty$

for functions

![]() $f,g:\mathbb{N}\to(0,\infty)$

means

$f,g:\mathbb{N}\to(0,\infty)$

means

![]() $\limsup_{n\to\infty}f(n)/g(n)<\infty$

. Put differently,

$\limsup_{n\to\infty}f(n)/g(n)<\infty$

. Put differently,

![]() $f(n)=\mathcal{O}(g(n))$

is equivalent to f(n) being bounded above by

$f(n)=\mathcal{O}(g(n))$

is equivalent to f(n) being bounded above by

![]() $C_0 g(n)$

for some constant

$C_0 g(n)$

for some constant

![]() $C_0>0$

and all

$C_0>0$

and all

![]() $n\in\mathbb{N}$

.

$n\in\mathbb{N}$

.

Proposition 3.1.

Let

![]() ${\boldsymbol\lambda}=(\lambda_+,\lambda_-)$

,

${\boldsymbol\lambda}=(\lambda_+,\lambda_-)$

,

![]() $\nu$

, and

$\nu$

, and

![]() $\sigma^2$

be as in Section 2, and fix

$\sigma^2$

be as in Section 2, and fix

![]() $p\ge 1$

. Then, for the classes of payoffs

$p\ge 1$

. Then, for the classes of payoffs

![]() $g(\chi)=g(X_T,\overline{X}_T, \tau_T)$

below, the strong error of TSB-Alg decays as follows (as

$g(\chi)=g(X_T,\overline{X}_T, \tau_T)$

below, the strong error of TSB-Alg decays as follows (as

![]() $n\to\infty$

).

$n\to\infty$

).

(Lipschitz.) Suppose

![]() $|g(x,y,t)-g(x,y',t')|\le K(|y-y'|+|t-t'|)$

for some K and all

$|g(x,y,t)-g(x,y',t')|\le K(|y-y'|+|t-t'|)$

for some K and all

![]() $(x,y,y',t,t')\in\mathbb{R}\times\mathbb{R}_+^2\times[0,T]^2$

. Then

$(x,y,y',t,t')\in\mathbb{R}\times\mathbb{R}_+^2\times[0,T]^2$

. Then

![]() $\mathbb{E}_{{\boldsymbol0}}\big[\big|\Delta_n^g\big|^p\big]=\mathcal{O}\big(e^{-\vartheta_p n}\big)$

, where

$\mathbb{E}_{{\boldsymbol0}}\big[\big|\Delta_n^g\big|^p\big]=\mathcal{O}\big(e^{-\vartheta_p n}\big)$

, where

![]() $\vartheta_p\in[\log(3/2),\log2]$

is in (23) below.

$\vartheta_p\in[\log(3/2),\log2]$

is in (23) below.

(Locally Lipschitz.) Let

![]() $|g(x,y,t)-g(x,y',t')|\le K(|y-y'|+|t-t'|)e^{\max\{y,y'\}}$

for some constant

$|g(x,y,t)-g(x,y',t')|\le K(|y-y'|+|t-t'|)e^{\max\{y,y'\}}$

for some constant

![]() $K>0$

and all

$K>0$

and all

![]() $(x,y,y',t,t')\in\mathbb{R}\times\mathbb{R}_+^2\times[0,T]^2$

. If

$(x,y,y',t,t')\in\mathbb{R}\times\mathbb{R}_+^2\times[0,T]^2$

. If

![]() $\lambda_+\geq q > 1$

and

$\lambda_+\geq q > 1$

and

![]() $\int_{[1,\infty)}e^{p(q-\lambda_+)x}\nu(\textrm{d} x)<\infty$

, then

$\int_{[1,\infty)}e^{p(q-\lambda_+)x}\nu(\textrm{d} x)<\infty$

, then

![]() $\mathbb{E}_{{\boldsymbol0}}\big[\big|\Delta_n^g\big|^p\big]=\mathcal{O}\big(e^{-( \vartheta_{pr}/r)n}\big)$

, where

$\mathbb{E}_{{\boldsymbol0}}\big[\big|\Delta_n^g\big|^p\big]=\mathcal{O}\big(e^{-( \vartheta_{pr}/r)n}\big)$

, where

![]() $r\,:\!=\,(1-1/q)^{-1}>1$

and

$r\,:\!=\,(1-1/q)^{-1}>1$

and

![]() $\vartheta_{pr}\in[\log(3/2),\log2]$

is as in (23).

$\vartheta_{pr}\in[\log(3/2),\log2]$

is as in (23).

(Barrier type 1.) Suppose

![]() $g(\chi)=h(X_T)\cdot\mathbb{1}\{\overline{X}_T\le M\}$

for some

$g(\chi)=h(X_T)\cdot\mathbb{1}\{\overline{X}_T\le M\}$

for some

![]() $M>0$

and a measurable bounded function

$M>0$

and a measurable bounded function

![]() $h:\mathbb{R}\to\mathbb{R}$

. If

$h:\mathbb{R}\to\mathbb{R}$

. If

![]() $\mathbb{P}_{{\boldsymbol0}}(M<\overline{X}_T\le M+x)\le Kx$

for some

$\mathbb{P}_{{\boldsymbol0}}(M<\overline{X}_T\le M+x)\le Kx$

for some

![]() $K>0$

and all

$K>0$

and all

![]() $x\ge0$

, then for

$x\ge0$

, then for

![]() $\alpha_*\in (1,2]$

in (22) and any

$\alpha_*\in (1,2]$

in (22) and any

![]() $\gamma\in(0,1)$

we have

$\gamma\in(0,1)$

we have

![]() $\mathbb{E}_{{\boldsymbol0}}\big[\big|\Delta_n^g\big|^p\big]=\mathcal{O}\big(e^{-[\gamma\log(2)/(\gamma+\alpha_*)]n}\big)$

. Moreover, we may take

$\mathbb{E}_{{\boldsymbol0}}\big[\big|\Delta_n^g\big|^p\big]=\mathcal{O}\big(e^{-[\gamma\log(2)/(\gamma+\alpha_*)]n}\big)$

. Moreover, we may take

![]() $\gamma=1$

if any of the following hold:

$\gamma=1$

if any of the following hold:

![]() $\sigma^2>0$

or

$\sigma^2>0$

or

![]() $\int_{(-1,1)}|x|\nu(\textrm{d} x)<\infty$

or Assumption 5.1 below.

$\int_{(-1,1)}|x|\nu(\textrm{d} x)<\infty$

or Assumption 5.1 below.

(Barrier type 2.) Suppose

![]() $g(\chi)=h(X_T,\overline{X}_T)\cdot\mathbb{1}\{\tau_T\le s\}$

, where

$g(\chi)=h(X_T,\overline{X}_T)\cdot\mathbb{1}\{\tau_T\le s\}$

, where

![]() $s\in(0,T)$

, and h is measurable and bounded with

$s\in(0,T)$

, and h is measurable and bounded with

![]() $|h(x,y)-h(x,y')|\le K|y-y'|$

for some

$|h(x,y)-h(x,y')|\le K|y-y'|$

for some

![]() $K>0$

and all

$K>0$

and all

![]() $(x,y,y')\in\mathbb{R}\times\mathbb{R}_+^2$

. If

$(x,y,y')\in\mathbb{R}\times\mathbb{R}_+^2$

. If

![]() $\sigma^2>0$

or

$\sigma^2>0$

or

![]() $\nu(\mathbb{R}\setminus\{0\})=\infty$

, then

$\nu(\mathbb{R}\setminus\{0\})=\infty$

, then

![]() $\mathbb{E}_{{\boldsymbol0}}\big[\big|\Delta_n^g\big|^p\big]=\mathcal{O}\big(e^{-n/e}\big)$

.

$\mathbb{E}_{{\boldsymbol0}}\big[\big|\Delta_n^g\big|^p\big]=\mathcal{O}\big(e^{-n/e}\big)$

.

Remark 3.1. (i) The proof of Proposition 3.1, given in Section 5 below, is based on Theorem 2.1 and analogous bounds in [Reference González Cázares, Mijatović and Uribe Bravo18] (for Lipschitz, locally Lipschitz, and barrier-type-1 payoffs) and [Reference González Cázares and Mijatović16] (for barrier-type-2 payoffs). In particular, in the proof of Proposition 3.1 below, we need not assume

![]() $\lambda_+>0$

to apply [Reference González Cázares, Mijatović and Uribe Bravo18, Proposition 1], which works under our standing assumption

$\lambda_+>0$

to apply [Reference González Cázares, Mijatović and Uribe Bravo18, Proposition 1], which works under our standing assumption

![]() ${\boldsymbol\lambda}\neq {\boldsymbol0}$

.

${\boldsymbol\lambda}\neq {\boldsymbol0}$

.

(ii) For barrier option payoffs under a tempered stable process X (i.e. the barrier-type-1 class in Proposition 3.1), we may take

![]() $\gamma=1$

since X satisfies either

$\gamma=1$

since X satisfies either

![]() $\int_{(-1,1)}|x|\nu(\textrm{d} x)<\infty$

or Assumption 5.1.

$\int_{(-1,1)}|x|\nu(\textrm{d} x)<\infty$

or Assumption 5.1.

(iii) The restriction

![]() $p\ge 1$

is not essential, as we may consider any

$p\ge 1$

is not essential, as we may consider any

![]() $p>0$

at the cost of a smaller (but still geometric) convergence rate. In particular, our standing assumption

$p>0$

at the cost of a smaller (but still geometric) convergence rate. In particular, our standing assumption

![]() ${\boldsymbol\lambda}\ne{\boldsymbol0}$

(and

${\boldsymbol\lambda}\ne{\boldsymbol0}$

(and

![]() $\lambda_+>1$

in the locally Lipschitz case) guarantees the finiteness of the p-moment of the strong error

$\lambda_+>1$

in the locally Lipschitz case) guarantees the finiteness of the p-moment of the strong error

![]() $\Delta_n^g$

for any

$\Delta_n^g$

for any

![]() $p>0$

. However, the restriction

$p>0$

. However, the restriction

![]() $p\ge 1$

covers the cases

$p\ge 1$

covers the cases

![]() $p\in\{1,2\}$

required for the MC and MLMC complexity analyses and ensures that the corresponding rate

$p\in\{1,2\}$

required for the MC and MLMC complexity analyses and ensures that the corresponding rate

![]() $\vartheta_s$

in (23) lies in

$\vartheta_s$

in (23) lies in

![]() $[\log(3/2),\log2]$

. In fact, for any payoff g in Proposition 3.1 we have

$[\log(3/2),\log2]$

. In fact, for any payoff g in Proposition 3.1 we have

![]() $\mathbb{E}_{\boldsymbol0}\big[\big|\Delta_{k}^g\big|^p\big]=\mathcal{O}\big(e^{-\vartheta_gk}\big)$

for

$\mathbb{E}_{\boldsymbol0}\big[\big|\Delta_{k}^g\big|^p\big]=\mathcal{O}\big(e^{-\vartheta_gk}\big)$

for

![]() $p\in\{1,2\}$

and a positive rate

$p\in\{1,2\}$

and a positive rate

![]() $\vartheta_g>0$

bounded away from zero:

$\vartheta_g>0$

bounded away from zero:

![]() $\vartheta_g\geq 0.23$

(resp.

$\vartheta_g\geq 0.23$

(resp.

![]() $\log(3/2)$

,

$\log(3/2)$

,

![]() $(1-1/\lambda_+)\log(3/2)$

) for barrier-type-1 and barrier-type-2 (resp. Lipschitz, locally Lipschitz) payoffs.

$(1-1/\lambda_+)\log(3/2)$

) for barrier-type-1 and barrier-type-2 (resp. Lipschitz, locally Lipschitz) payoffs.

3.2. Computational complexity and the central limit theorem for the Monte Carlo and multilevel Monte Carlo estimators

Consider the MC estimator

\begin{equation}\hat\theta^{g,n}_{\textrm{MC}} \,:\!=\, \frac{1}{N}\sum_{i=1}^N \theta_i^{g,n},\end{equation}

\begin{equation}\hat\theta^{g,n}_{\textrm{MC}} \,:\!=\, \frac{1}{N}\sum_{i=1}^N \theta_i^{g,n},\end{equation}

where

![]() $\{\theta_i^{g,n}\}_{i\in\mathbb{N}}$

is the i.i.d. output of TSB-Alg with

$\{\theta_i^{g,n}\}_{i\in\mathbb{N}}$

is the i.i.d. output of TSB-Alg with

![]() $\theta_1^{g,n}\overset{d}{=} g(\chi_n)\Upsilon_{\boldsymbol\lambda}$

(under

$\theta_1^{g,n}\overset{d}{=} g(\chi_n)\Upsilon_{\boldsymbol\lambda}$

(under

![]() $\mathbb{P}_{\boldsymbol0}$

) and

$\mathbb{P}_{\boldsymbol0}$

) and

![]() $n,N\in\mathbb{N}$

. The corresponding MLMC estimator is given by

$n,N\in\mathbb{N}$

. The corresponding MLMC estimator is given by

\begin{equation}\hat\theta^{g,n}_{\textrm{ML}}: = \sum_{k=1}^n\frac{1}{N_k}\sum_{i=1}^{N_k}D^g_{k,i},\end{equation}

\begin{equation}\hat\theta^{g,n}_{\textrm{ML}}: = \sum_{k=1}^n\frac{1}{N_k}\sum_{i=1}^{N_k}D^g_{k,i},\end{equation}

where

![]() $\{D^g_{k,i}\}_{k,i\in\mathbb{N}}$

is an array of independent variables satisfying

$\{D^g_{k,i}\}_{k,i\in\mathbb{N}}$

is an array of independent variables satisfying

![]() $D^g_{k,i}\overset{d}{=} (g(\chi_{k})-g(\chi_{k-1}))\Upsilon_{\boldsymbol\lambda}$

and

$D^g_{k,i}\overset{d}{=} (g(\chi_{k})-g(\chi_{k-1}))\Upsilon_{\boldsymbol\lambda}$

and

![]() $D^g_{1,i}\overset{d}{=} g(\chi_1)\Upsilon_{\boldsymbol\lambda}$

(under

$D^g_{1,i}\overset{d}{=} g(\chi_1)\Upsilon_{\boldsymbol\lambda}$

(under

![]() $\mathbb{P}_{\boldsymbol0}$

), for

$\mathbb{P}_{\boldsymbol0}$

), for

![]() $i\in\mathbb{N}$

,

$i\in\mathbb{N}$

,

![]() $k\ge 2$

, and

$k\ge 2$

, and

![]() $n,N_1,\ldots,N_n\in\mathbb{N}$

. Note that TSB-Alg can easily be adapted to sample the variable

$n,N_1,\ldots,N_n\in\mathbb{N}$

. Note that TSB-Alg can easily be adapted to sample the variable

![]() $D^g_{k,i}$

by drawing the ingredients for

$D^g_{k,i}$

by drawing the ingredients for

![]() $(\chi_k,\Upsilon_{\boldsymbol\lambda})$

and computing

$(\chi_k,\Upsilon_{\boldsymbol\lambda})$

and computing

![]() $(\chi_{k-1},\chi_k,\Upsilon_{\boldsymbol\lambda})$

deterministically from the output; see [Reference González Cázares, Mijatović and Uribe Bravo18, Subsection 2.4] for further details. In the following, we refer to

$(\chi_{k-1},\chi_k,\Upsilon_{\boldsymbol\lambda})$

deterministically from the output; see [Reference González Cázares, Mijatović and Uribe Bravo18, Subsection 2.4] for further details. In the following, we refer to

![]() $\mathbb{V}_{\boldsymbol0}\big[D_{k,1}^g\big]$

as the level variance of the MLMC estimator.

$\mathbb{V}_{\boldsymbol0}\big[D_{k,1}^g\big]$

as the level variance of the MLMC estimator.

The computational complexity analysis of the MC and MLMC estimators is given in the next result (the usual notation

![]() $\lceil x\rceil\,:\!=\,\inf\{k\in\mathbb{N}:k\ge x\}$

,

$\lceil x\rceil\,:\!=\,\inf\{k\in\mathbb{N}:k\ge x\}$

,

![]() $x\in\mathbb{R}_+$

, is used for the ceiling function). In Proposition 3.2 and throughout the paper, the computational cost of an algorithm is measured as the total number of operations carried out by the algorithm. In particular, we assume that the following operations have computational costs uniformly bounded by some constant (measured, for instance, in units of time): simulation from the uniform law; simulation from the laws

$x\in\mathbb{R}_+$

, is used for the ceiling function). In Proposition 3.2 and throughout the paper, the computational cost of an algorithm is measured as the total number of operations carried out by the algorithm. In particular, we assume that the following operations have computational costs uniformly bounded by some constant (measured, for instance, in units of time): simulation from the uniform law; simulation from the laws

![]() $F^{(\pm)}(t,\cdot)$

,

$F^{(\pm)}(t,\cdot)$

,

![]() $t>0$

; evaluation of elementary mathematical operations such as addition, subtraction, multiplication, and division; and evaluation of elementary functions such as

$t>0$

; evaluation of elementary mathematical operations such as addition, subtraction, multiplication, and division; and evaluation of elementary functions such as

![]() $\exp$

,

$\exp$

,

![]() $\log$

,

$\log$

,

![]() $\sin$

,

$\sin$

,

![]() $\cos$

,

$\cos$

,

![]() $\tan$

, and

$\tan$

, and

![]() $\arctan$

.

$\arctan$

.

Proposition 3.2.

Let the payoff g from Proposition 3.1 also satisfy

![]() $\mathbb{E}_{\boldsymbol0}\big[g(\chi)^2\Upsilon_{\boldsymbol\lambda}^2\big]<\infty$

. For any

$\mathbb{E}_{\boldsymbol0}\big[g(\chi)^2\Upsilon_{\boldsymbol\lambda}^2\big]<\infty$

. For any

![]() $\varepsilon>0$

, let

$\varepsilon>0$

, let

![]() $n(\varepsilon) \,:\!=\,\inf\{k\in\mathbb{N}:|\mathbb{E}_{{\boldsymbol0}}[g(\chi_k)\Upsilon_{\boldsymbol\lambda}]-\mathbb{E}_{\boldsymbol\lambda}[g(\chi)]|\le\varepsilon/\sqrt{2}\}$

. Let c be an upper bound on the expected computational cost of line 2 in TSB-Alg for a time horizon bounded by T, and let

$n(\varepsilon) \,:\!=\,\inf\{k\in\mathbb{N}:|\mathbb{E}_{{\boldsymbol0}}[g(\chi_k)\Upsilon_{\boldsymbol\lambda}]-\mathbb{E}_{\boldsymbol\lambda}[g(\chi)]|\le\varepsilon/\sqrt{2}\}$

. Let c be an upper bound on the expected computational cost of line 2 in TSB-Alg for a time horizon bounded by T, and let

![]() $\mathbb{V}_{\boldsymbol0}[\cdot]$

denote the variance under the probability measure

$\mathbb{V}_{\boldsymbol0}[\cdot]$

denote the variance under the probability measure

![]() $\mathbb{P}_{\boldsymbol0}$

.

$\mathbb{P}_{\boldsymbol0}$

.

(

MC.) Suppose

![]() $n=n(\varepsilon)$

and

$n=n(\varepsilon)$

and

![]() $N=\lceil2\varepsilon^{-2} \mathbb{V}_{\boldsymbol0}[g(\chi_n)\Upsilon_{\boldsymbol\lambda}]\rceil$

. Then the MC estimator

$N=\lceil2\varepsilon^{-2} \mathbb{V}_{\boldsymbol0}[g(\chi_n)\Upsilon_{\boldsymbol\lambda}]\rceil$

. Then the MC estimator

![]() $\hat\theta^{g,n}_{\textrm{MC}}$

is

$\hat\theta^{g,n}_{\textrm{MC}}$

is

![]() $\varepsilon$

-accurate, i.e.

$\varepsilon$

-accurate, i.e.

![]() $\mathbb{E}_{\boldsymbol0}\big[\big|\hat\theta^{g,n}_{\textrm{MC}} -\mathbb{E}_{\boldsymbol\lambda}[g(\chi)]\big|^2\big]\le\varepsilon^2$

, with expected cost

$\mathbb{E}_{\boldsymbol0}\big[\big|\hat\theta^{g,n}_{\textrm{MC}} -\mathbb{E}_{\boldsymbol\lambda}[g(\chi)]\big|^2\big]\le\varepsilon^2$

, with expected cost

![]() $\mathcal{C}_{\textrm{MC}}(\varepsilon)\,:\!=\,c(n+1)N=\mathcal{O}\big(\varepsilon^{-2}\log(1/\varepsilon)\big)$

as

$\mathcal{C}_{\textrm{MC}}(\varepsilon)\,:\!=\,c(n+1)N=\mathcal{O}\big(\varepsilon^{-2}\log(1/\varepsilon)\big)$

as

![]() $\varepsilon\to0$

.

$\varepsilon\to0$

.

(MLMC.) Suppose

![]() $n=n(\varepsilon)$

and set

$n=n(\varepsilon)$

and set

\begin{equation} N_k\,:\!=\,\Bigg\lceil2\varepsilon^{-2} \sqrt{\mathbb{V}_{\boldsymbol0}\big[D_{k,1}^g\big]/k} \Bigg(\sum_{j=1}^{n}\sqrt{j\mathbb{V}_{\boldsymbol0}\big[D_{j,1}^g\big]}\Bigg) \Bigg\rceil, \quad k\in\{1,\ldots,n\}.\end{equation}

\begin{equation} N_k\,:\!=\,\Bigg\lceil2\varepsilon^{-2} \sqrt{\mathbb{V}_{\boldsymbol0}\big[D_{k,1}^g\big]/k} \Bigg(\sum_{j=1}^{n}\sqrt{j\mathbb{V}_{\boldsymbol0}\big[D_{j,1}^g\big]}\Bigg) \Bigg\rceil, \quad k\in\{1,\ldots,n\}.\end{equation}

Then the MLMC estimator

![]() $\hat\theta^{g,n}_{\textrm{ML}}$

is

$\hat\theta^{g,n}_{\textrm{ML}}$

is

![]() $\varepsilon$

-accurate, and the corresponding expected cost equals

$\varepsilon$

-accurate, and the corresponding expected cost equals

\begin{equation}\mathcal{C}_{\textrm{ML}}(\varepsilon)\,:\!=\,2c\varepsilon^{-2} \Bigg(\sum_{k=1}^{n} \sqrt{k\mathbb{V}_{\boldsymbol0}\big[D_{k,1}^g\big]}\Bigg)^2= \mathcal{O}\big(\varepsilon^{-2}\big)\quad \text{as $\varepsilon\to0$.}\end{equation}

\begin{equation}\mathcal{C}_{\textrm{ML}}(\varepsilon)\,:\!=\,2c\varepsilon^{-2} \Bigg(\sum_{k=1}^{n} \sqrt{k\mathbb{V}_{\boldsymbol0}\big[D_{k,1}^g\big]}\Bigg)^2= \mathcal{O}\big(\varepsilon^{-2}\big)\quad \text{as $\varepsilon\to0$.}\end{equation}

Proposition 3.1 (with

![]() $p=1$

) implies that the bias in (6) equals

$p=1$

) implies that the bias in (6) equals

![]() $\mathbb{E}_{\boldsymbol0}\big[\Delta_n^g\big]=\mathcal{O}\big(e^{-\vartheta_g n}\big)$

as

$\mathbb{E}_{\boldsymbol0}\big[\Delta_n^g\big]=\mathcal{O}\big(e^{-\vartheta_g n}\big)$

as

![]() $n\to\infty$

for some

$n\to\infty$

for some

![]() $\vartheta_g>0$

. Thus, the integer

$\vartheta_g>0$

. Thus, the integer

![]() $n(\varepsilon)$

in Proposition 3.2 is finite for all payoffs g considered in Proposition 3.1, and moreover,

$n(\varepsilon)$

in Proposition 3.2 is finite for all payoffs g considered in Proposition 3.1, and moreover,

![]() $n(\varepsilon)=\mathcal{O}(\log(1/\varepsilon))$

as

$n(\varepsilon)=\mathcal{O}(\log(1/\varepsilon))$

as

![]() $\varepsilon\to0$

in all cases. In addition, by Remark 3.1(i) above, the variance of

$\varepsilon\to0$

in all cases. In addition, by Remark 3.1(i) above, the variance of

![]() $\theta^{g,k}_1$

is bounded in

$\theta^{g,k}_1$

is bounded in

![]() $k\in\mathbb{N}$

:

$k\in\mathbb{N}$

:

Note that barrier-type payoffs g considered in Proposition 3.1 satisfy the second moment assumption, while in the Lipschitz or locally Lipschitz cases it is sufficient to assume additionally that

![]() $\lambda_+$

is either positive or strictly greater than one, respectively. Moreover,

$\lambda_+$

is either positive or strictly greater than one, respectively. Moreover,

![]() $\mathbb{V}_{\boldsymbol0}\big[D_{k,1}^g\big]\le 2\mathbb{E}_{\boldsymbol0}\big[\big(\Delta_{k}^g\big)^2+\big(\Delta_{k-1}^g\big)^2\big]=\mathcal{O}\big(e^{-\vartheta_gk}\big)$

for

$\mathbb{V}_{\boldsymbol0}\big[D_{k,1}^g\big]\le 2\mathbb{E}_{\boldsymbol0}\big[\big(\Delta_{k}^g\big)^2+\big(\Delta_{k-1}^g\big)^2\big]=\mathcal{O}\big(e^{-\vartheta_gk}\big)$

for

![]() $\vartheta_g>0$

bounded away from zero (see Remark 3.1(iii) above). These facts and the standard complexity analysis for MLMC (see e.g. [Reference González Cázares and Mijatović16, Appendix A] and the references therein) imply that the estimators

$\vartheta_g>0$

bounded away from zero (see Remark 3.1(iii) above). These facts and the standard complexity analysis for MLMC (see e.g. [Reference González Cázares and Mijatović16, Appendix A] and the references therein) imply that the estimators

![]() $\hat\theta^{g,n}_{\textrm{MC}}$

and

$\hat\theta^{g,n}_{\textrm{MC}}$

and

![]() $\hat\theta^{g,n}_{\textrm{ML}}$

are

$\hat\theta^{g,n}_{\textrm{ML}}$

are

![]() $\varepsilon$

-accurate with the stated computational costs, proving Proposition 3.2.

$\varepsilon$

-accurate with the stated computational costs, proving Proposition 3.2.

We stress that the payoffs g in Proposition 3.2 include discontinuous payoffs in the supremum

![]() $\overline{X}_T$

(barrier type 1) or the time

$\overline{X}_T$

(barrier type 1) or the time

![]() $\tau_T$

when this supremum is attained (barrier type 2), with the corresponding computational complexities of the MC and MLMC estimators given by

$\tau_T$

when this supremum is attained (barrier type 2), with the corresponding computational complexities of the MC and MLMC estimators given by

![]() $\mathcal{O}\big(\varepsilon^{-2}\log(1/\varepsilon)\big)$

and

$\mathcal{O}\big(\varepsilon^{-2}\log(1/\varepsilon)\big)$

and

![]() $\mathcal{O}\big(\varepsilon^{-2}\big)$

, respectively. This theoretical prediction matches the numerical performance of TSB-Alg for barrier options and the modified ulcer index; see Section 4.2 below.

$\mathcal{O}\big(\varepsilon^{-2}\big)$

, respectively. This theoretical prediction matches the numerical performance of TSB-Alg for barrier options and the modified ulcer index; see Section 4.2 below.

For obtaining confidence intervals (CIs) for the MC and MLMC estimators

![]() $\hat\theta^{g,n}_{\textrm{MC}}$

and

$\hat\theta^{g,n}_{\textrm{MC}}$

and

![]() $\hat\theta^{g,n}_{\textrm{ML}}$

, a CLT can be very helpful. (The CIs derived in this paper do not account for model uncertainty or the uncertainty in the estimation or calibration of the parameters.) In fact, the CLT is necessary to construct a CI if the constants in the bounds on the bias in Proposition 3.1 are not explicitly known (e.g. for barrier-type-1 payoffs, the constant depends on the unknown value of the density of the supremum

$\hat\theta^{g,n}_{\textrm{ML}}$

, a CLT can be very helpful. (The CIs derived in this paper do not account for model uncertainty or the uncertainty in the estimation or calibration of the parameters.) In fact, the CLT is necessary to construct a CI if the constants in the bounds on the bias in Proposition 3.1 are not explicitly known (e.g. for barrier-type-1 payoffs, the constant depends on the unknown value of the density of the supremum

![]() $\overline{X}_T$

at the barrier); see the discussion in [Reference González Cázares, Mijatović and Uribe Bravo18, Section 2.3]. Moreover, even if the bias can be controlled explicitly, the concentration inequalities typically lead to wider CIs than those based on the CLT; see [Reference Ben Alaya and Kebaier4, Reference Hoel and Krumscheid20]. The following result establishes the CLT for the MC and MLMC estimators valid for payoffs considered in Proposition 3.1.

$\overline{X}_T$

at the barrier); see the discussion in [Reference González Cázares, Mijatović and Uribe Bravo18, Section 2.3]. Moreover, even if the bias can be controlled explicitly, the concentration inequalities typically lead to wider CIs than those based on the CLT; see [Reference Ben Alaya and Kebaier4, Reference Hoel and Krumscheid20]. The following result establishes the CLT for the MC and MLMC estimators valid for payoffs considered in Proposition 3.1.

Theorem 3.1. [CLT for TSB-Alg] Let g be any of the payoffs in Proposition 3.1, satisfying in addition

![]() $\mathbb{E}_{\boldsymbol0}\big[g(\chi)^2\Upsilon_{\boldsymbol\lambda}^2\big]<\infty$

. Let

$\mathbb{E}_{\boldsymbol0}\big[g(\chi)^2\Upsilon_{\boldsymbol\lambda}^2\big]<\infty$

. Let

![]() $\vartheta_g\in(0,\log2]$

be the rate satisfying

$\vartheta_g\in(0,\log2]$

be the rate satisfying

![]() $\mathbb{E}_{\boldsymbol0}\big[\big|\Delta_n^g\big|\big]=\mathcal{O}\big(e^{-\vartheta_gn}\big)$

, given in Proposition 3.1 and Remark 3.1(iii) above (with

$\mathbb{E}_{\boldsymbol0}\big[\big|\Delta_n^g\big|\big]=\mathcal{O}\big(e^{-\vartheta_gn}\big)$

, given in Proposition 3.1 and Remark 3.1(iii) above (with

![]() $p=1$

). Fix

$p=1$

). Fix

![]() $c_0>1/\vartheta_g$

, let

$c_0>1/\vartheta_g$

, let

![]() $n=n(\varepsilon)\,:\!=\,\lceil c_0\log(1/\varepsilon)\rceil$

, and suppose N and

$n=n(\varepsilon)\,:\!=\,\lceil c_0\log(1/\varepsilon)\rceil$

, and suppose N and

![]() $N_1,\ldots,N_n$

are as in Proposition 3.2. Then the MC and MLMC estimators

$N_1,\ldots,N_n$

are as in Proposition 3.2. Then the MC and MLMC estimators

![]() $\hat\theta_{\textrm{MC}}^{g,n}$

and

$\hat\theta_{\textrm{MC}}^{g,n}$

and

![]() $\hat\theta_{\textrm{ML}}^{g,n}$

respectively satisfy the following CLTs (Z is a standard normal random variable):

$\hat\theta_{\textrm{ML}}^{g,n}$

respectively satisfy the following CLTs (Z is a standard normal random variable):

Theorem 3.1 works well in practice: in Figure 7 of Section 4.2 below we construct CIs (as a function of decreasing accuracy

![]() $\varepsilon$

) for an MLMC estimator of a barrier option price under a tempered stable model. The rate

$\varepsilon$

) for an MLMC estimator of a barrier option price under a tempered stable model. The rate

![]() $c_0$

can be taken arbitrarily close to

$c_0$

can be taken arbitrarily close to

![]() $1/\vartheta_g$

, where

$1/\vartheta_g$

, where

![]() $\vartheta_g$

is the corresponding geometric rate of decay of the error for the payoff g in Proposition 3.1 (

$\vartheta_g$

is the corresponding geometric rate of decay of the error for the payoff g in Proposition 3.1 (

![]() $\vartheta_g$

is bounded away from zero by Remark 3.1(iii) above), ensuring that the bias of the estimators vanishes in the limit.

$\vartheta_g$

is bounded away from zero by Remark 3.1(iii) above), ensuring that the bias of the estimators vanishes in the limit.

By Lemma 5.2 below, the definition of the sample sizes N and

![]() $N_1,\ldots,N_n$

in Proposition 3.2 implies that the variances of the estimators

$N_1,\ldots,N_n$

in Proposition 3.2 implies that the variances of the estimators

![]() $\hat\theta_{\textrm{MC}}^{g,n(\varepsilon)}$

and

$\hat\theta_{\textrm{MC}}^{g,n(\varepsilon)}$

and

![]() $\hat\theta_{\textrm{ML}}^{g,n(\varepsilon)}$

(under

$\hat\theta_{\textrm{ML}}^{g,n(\varepsilon)}$

(under

![]() $\mathbb{P}_{\boldsymbol0}$

) satisfy

$\mathbb{P}_{\boldsymbol0}$

) satisfy

\[\frac{\mathbb{V}_{\boldsymbol0}\big[\hat\theta_{\textrm{MC}}^{g,n(\varepsilon)}\big]}{\varepsilon^{2}/2}=\frac{\mathbb{V}_{\boldsymbol0}\big[\theta_1^{g,n(\varepsilon)}\big]}{\varepsilon^2 N/2}\to 1\quad \text{and} \quad \frac{\mathbb{V}_{\boldsymbol0}\big[\hat\theta_{\textrm{ML}}^{g,n(\varepsilon)}\big]}{\varepsilon^{2}/2}=\sum_{k=1}^{n(\varepsilon)}\frac{\mathbb{V}_{\boldsymbol0}\big[D_{k,1}^g\big]}{\varepsilon^2 N_k/2}\to 1\quad \text{ as $\varepsilon\to0$}.\]

\[\frac{\mathbb{V}_{\boldsymbol0}\big[\hat\theta_{\textrm{MC}}^{g,n(\varepsilon)}\big]}{\varepsilon^{2}/2}=\frac{\mathbb{V}_{\boldsymbol0}\big[\theta_1^{g,n(\varepsilon)}\big]}{\varepsilon^2 N/2}\to 1\quad \text{and} \quad \frac{\mathbb{V}_{\boldsymbol0}\big[\hat\theta_{\textrm{ML}}^{g,n(\varepsilon)}\big]}{\varepsilon^{2}/2}=\sum_{k=1}^{n(\varepsilon)}\frac{\mathbb{V}_{\boldsymbol0}\big[D_{k,1}^g\big]}{\varepsilon^2 N_k/2}\to 1\quad \text{ as $\varepsilon\to0$}.\]

Hence, the CLT in (11) can also be expressed as follows:

\begin{align*}&\big(\hat\theta_{\textrm{MC}}^{g,n(\varepsilon)}-\mathbb{E}_{\boldsymbol\lambda}[g(\chi)]\big)/\mathbb{V}_{\boldsymbol0}\big[\hat\theta_{\textrm{MC}}^{g,n(\varepsilon)}\big]^{1/2} \overset{d}{\to}Z \\\quad \text{and}\quad &\big(\hat\theta_{\textrm{ML}}^{g,n(\varepsilon)}-\mathbb{E}_{\boldsymbol\lambda}[g(\chi)]\big)/\mathbb{V}_{\boldsymbol0}\big[\hat\theta_{\textrm{ML}}^{g,n(\varepsilon)}\big]^{1/2} \overset{d}{\to}Z,\quad \text{as }\varepsilon\to 0.\end{align*}

\begin{align*}&\big(\hat\theta_{\textrm{MC}}^{g,n(\varepsilon)}-\mathbb{E}_{\boldsymbol\lambda}[g(\chi)]\big)/\mathbb{V}_{\boldsymbol0}\big[\hat\theta_{\textrm{MC}}^{g,n(\varepsilon)}\big]^{1/2} \overset{d}{\to}Z \\\quad \text{and}\quad &\big(\hat\theta_{\textrm{ML}}^{g,n(\varepsilon)}-\mathbb{E}_{\boldsymbol\lambda}[g(\chi)]\big)/\mathbb{V}_{\boldsymbol0}\big[\hat\theta_{\textrm{ML}}^{g,n(\varepsilon)}\big]^{1/2} \overset{d}{\to}Z,\quad \text{as }\varepsilon\to 0.\end{align*}

Since the variances

![]() $\mathbb{V}_{\boldsymbol0}\big[\hat\theta_{\textrm{MC}}^{g,n(\varepsilon)}\big]$

and

$\mathbb{V}_{\boldsymbol0}\big[\hat\theta_{\textrm{MC}}^{g,n(\varepsilon)}\big]$

and

![]() $\mathbb{V}_{\boldsymbol0}\big[\hat\theta_{\textrm{ML}}^{g,n(\varepsilon)}\big]$

can be estimated from the sample, this is in fact how the CLT is often applied in practice. The proof of Theorem 3.1 is based on the CLT for triangular arrays and amounts to verifying Lindeberg’s condition for the estimators

$\mathbb{V}_{\boldsymbol0}\big[\hat\theta_{\textrm{ML}}^{g,n(\varepsilon)}\big]$

can be estimated from the sample, this is in fact how the CLT is often applied in practice. The proof of Theorem 3.1 is based on the CLT for triangular arrays and amounts to verifying Lindeberg’s condition for the estimators

![]() $\hat\theta_{\textrm{MC}}^{g,n}$

and

$\hat\theta_{\textrm{MC}}^{g,n}$

and

![]() $\hat\theta_{\textrm{ML}}^{g,n}$

; see Section 5 below.

$\hat\theta_{\textrm{ML}}^{g,n}$

; see Section 5 below.

3.3. Unbiased estimators

It is known that when the MLMC complexity is optimal, it is possible to construct unbiased estimators without altering the optimal computational complexity

![]() $\mathcal{O}\big(\varepsilon^{-2}\big)$

as

$\mathcal{O}\big(\varepsilon^{-2}\big)$

as

![]() $\varepsilon\to 0$

. Such debiasing techniques are based around randomising the number of levels

$\varepsilon\to 0$

. Such debiasing techniques are based around randomising the number of levels

![]() $\sup\{k\in\mathbb{N}:N_k>0\}$

and number of samples

$\sup\{k\in\mathbb{N}:N_k>0\}$

and number of samples

![]() $(N_k)_{k\in\mathbb{N}}$

at each level of the variables

$(N_k)_{k\in\mathbb{N}}$

at each level of the variables

![]() $\{D^g_{k,i}\}_{k,i\in\mathbb{N}}$

in the MLMC estimator in (8); see e.g. [Reference McLeish30, Reference Rhee and Glynn34, Reference Vihola40]. More precisely, following [Reference Vihola40, Theorem 7], for any g in Proposition 3.1 and a parameter

$\{D^g_{k,i}\}_{k,i\in\mathbb{N}}$

in the MLMC estimator in (8); see e.g. [Reference McLeish30, Reference Rhee and Glynn34, Reference Vihola40]. More precisely, following [Reference Vihola40, Theorem 7], for any g in Proposition 3.1 and a parameter

![]() $N\in\mathbb{N}$

, we may construct an almost surely finite sequence of random integers

$N\in\mathbb{N}$

, we may construct an almost surely finite sequence of random integers

![]() $(N_k)_{k\in\mathbb{N}}$

(i.e.

$(N_k)_{k\in\mathbb{N}}$

(i.e.

![]() $\sup\{k\in\mathbb{N}:N_k>0\}<\infty$

a.s.) with explicit means

$\sup\{k\in\mathbb{N}:N_k>0\}<\infty$

a.s.) with explicit means

![]() $\mathbb{E}_{\boldsymbol0}[N_k]>0$

, such that the estimator

$\mathbb{E}_{\boldsymbol0}[N_k]>0$

, such that the estimator

\begin{equation}\hat{P}_N=:\sum_{k=1}^\infty\frac{1}{\mathbb{E}_{\boldsymbol0} [N_k]}\sum_{i=1}^{N_k}D_{k,i}^g\end{equation}

\begin{equation}\hat{P}_N=:\sum_{k=1}^\infty\frac{1}{\mathbb{E}_{\boldsymbol0} [N_k]}\sum_{i=1}^{N_k}D_{k,i}^g\end{equation}

is unbiased,

![]() $\mathbb{E}_{\boldsymbol0}[\hat{P}_N]=\mathbb{E}_{\boldsymbol\lambda}[g(\chi)]$

, and its variance

$\mathbb{E}_{\boldsymbol0}[\hat{P}_N]=\mathbb{E}_{\boldsymbol\lambda}[g(\chi)]$

, and its variance

![]() $\mathbb{V}_{\boldsymbol0}[\hat{P}_N]$

and expected computational cost (under

$\mathbb{V}_{\boldsymbol0}[\hat{P}_N]$

and expected computational cost (under

![]() $\mathbb{P}_{\boldsymbol0}$

) are proportional to

$\mathbb{P}_{\boldsymbol0}$

) are proportional to

![]() $1/N$

and N, respectively, as

$1/N$

and N, respectively, as

![]() $N\to\infty$

. The MC complexity analysis of the estimator

$N\to\infty$

. The MC complexity analysis of the estimator

![]() $\hat{P}_N$

is then nearly identical to that of the classical MC estimator for exact simulation algorithms.

$\hat{P}_N$

is then nearly identical to that of the classical MC estimator for exact simulation algorithms.

There are several parametric ways of constructing the random sequence

![]() $(N_k)_{k\in\mathbb{N}}$

(see e.g. [Reference Vihola40]), and it is also possible to optimise for the parameters appearing in these constructions as a function of the considered payoff g and other characteristics of X (see e.g. [Reference González Cázares, Mijatović and Uribe Bravo18, Section 2.5]). The details of such optimisations have been omitted in the present work in the interest of brevity, since they coincide with those found in [Reference González Cázares, Mijatović and Uribe Bravo18, Section 2.5 and Appendix A.3]. We comment that, as pointed out by the referee, in addition to achieving the optimal complexity, unbiased estimators (referred to as ‘single-term estimators’ in [Reference Rhee and Glynn34, Reference Vihola40]) also achieve the same asymptotic variance as the MLMC estimators (see [Reference Vihola40, Theorem 19]). Moreover, there are cases where unbiased estimators of a different form (referred to as ‘coupled-sum estimators’ in [Reference Rhee and Glynn34, Reference Vihola40]) achieve much smaller asymptotic variance than the MLMC (see Table 17 in the online supplement of [Reference Rhee and Glynn34]). We are grateful to the referee for this observation.

$(N_k)_{k\in\mathbb{N}}$

(see e.g. [Reference Vihola40]), and it is also possible to optimise for the parameters appearing in these constructions as a function of the considered payoff g and other characteristics of X (see e.g. [Reference González Cázares, Mijatović and Uribe Bravo18, Section 2.5]). The details of such optimisations have been omitted in the present work in the interest of brevity, since they coincide with those found in [Reference González Cázares, Mijatović and Uribe Bravo18, Section 2.5 and Appendix A.3]. We comment that, as pointed out by the referee, in addition to achieving the optimal complexity, unbiased estimators (referred to as ‘single-term estimators’ in [Reference Rhee and Glynn34, Reference Vihola40]) also achieve the same asymptotic variance as the MLMC estimators (see [Reference Vihola40, Theorem 19]). Moreover, there are cases where unbiased estimators of a different form (referred to as ‘coupled-sum estimators’ in [Reference Rhee and Glynn34, Reference Vihola40]) achieve much smaller asymptotic variance than the MLMC (see Table 17 in the online supplement of [Reference Rhee and Glynn34]). We are grateful to the referee for this observation.

3.4. Comparisons

This subsection provides non-asymptotic and asymptotic performance comparisons of estimators based on TSB-Alg. The main aim is to develop rule-of-thumb principles guiding the user to the most effective estimator. In Subsection 3.4.1, for a given value of the accuracy

![]() $\varepsilon$

, we compare the computational complexity of the MC and MLMC estimators based on TSB-Alg. The MLMC estimator based on TSB-Alg is compared with the ones based on SB-Alg [Reference González Cázares, Mijatović and Uribe Bravo18] with rejection sampling (available only when the jumps of X are of finite variation) and SBG-Alg [Reference González Cázares and Mijatović16] in Subsections 3.4.2 and 3.4.3, respectively. In both cases we analyse the behaviour of the computational complexity in two regimes: (I)

$\varepsilon$

, we compare the computational complexity of the MC and MLMC estimators based on TSB-Alg. The MLMC estimator based on TSB-Alg is compared with the ones based on SB-Alg [Reference González Cázares, Mijatović and Uribe Bravo18] with rejection sampling (available only when the jumps of X are of finite variation) and SBG-Alg [Reference González Cázares and Mijatović16] in Subsections 3.4.2 and 3.4.3, respectively. In both cases we analyse the behaviour of the computational complexity in two regimes: (I)

![]() $\varepsilon$

tending to 0 and fixed time horizon T; (II) fixed

$\varepsilon$

tending to 0 and fixed time horizon T; (II) fixed

![]() $\varepsilon$

and time horizon T tending to 0 or

$\varepsilon$

and time horizon T tending to 0 or

![]() $\infty$

.

$\infty$

.

Regime (II) is useful when there is a limited benefit to arbitrary accuracy in

![]() $\varepsilon$

but the constants may vary greatly with the time horizon T. For example, in option pricing, estimators with accuracy smaller than a basis point are of limited interest. For simplicity, in the remainder of this subsection the payoff g is assumed to be Lipschitz. However, analogous comparisons can be made for other payoffs under appropriate assumptions.

$\varepsilon$

but the constants may vary greatly with the time horizon T. For example, in option pricing, estimators with accuracy smaller than a basis point are of limited interest. For simplicity, in the remainder of this subsection the payoff g is assumed to be Lipschitz. However, analogous comparisons can be made for other payoffs under appropriate assumptions.

3.4.1. Comparison between the Monte Carlo and multilevel Monte Carlo estimators based on TSB-Alg

Recall first that both MC and MLMC estimators have the same bias, since the latter estimator is a telescoping sum of a sequence of the former estimators, controlled by

![]() $n(\varepsilon)$

given in Theorem 3.1 above.

$n(\varepsilon)$

given in Theorem 3.1 above.

Regime (I). Propositions 3.1 and 3.2 imply that the MLMC estimator outperforms the MC estimator as

![]() $\varepsilon\to 0$

. Moreover, since

$\varepsilon\to 0$

. Moreover, since

![]() $\mathbb{V}_{\boldsymbol0}[g(\chi_n)\Upsilon_{\boldsymbol\lambda}]\to\mathbb{V}_{\boldsymbol0}[g(\chi)\Upsilon_{\boldsymbol\lambda}]$

and

$\mathbb{V}_{\boldsymbol0}[g(\chi_n)\Upsilon_{\boldsymbol\lambda}]\to\mathbb{V}_{\boldsymbol0}[g(\chi)\Upsilon_{\boldsymbol\lambda}]$

and

![]() $\varepsilon^2\mathcal{C}_{\textrm{ML}}(\varepsilon)\to 2c\big(\sum_{k=1}^\infty(k\mathbb{V}_{\boldsymbol0}\big[D_{k,1}^g\big])^{1/2}\big)^2<\infty$

as

$\varepsilon^2\mathcal{C}_{\textrm{ML}}(\varepsilon)\to 2c\big(\sum_{k=1}^\infty(k\mathbb{V}_{\boldsymbol0}\big[D_{k,1}^g\big])^{1/2}\big)^2<\infty$

as

![]() $\varepsilon\to0$

, the MLMC estimator is preferable to the MC estimator for

$\varepsilon\to0$

, the MLMC estimator is preferable to the MC estimator for

![]() $\varepsilon > 0$

satisfying

$\varepsilon > 0$

satisfying

\[n(\varepsilon)>\Bigg(\sum_{k=1}^\infty\sqrt{k\mathbb{V}_{\boldsymbol0}\big[D_{k,1}^g\big]}\Bigg)^2/\mathbb{V}_{\boldsymbol0}\big[g(\chi)\Upsilon_{\boldsymbol\lambda}\big].\]

\[n(\varepsilon)>\Bigg(\sum_{k=1}^\infty\sqrt{k\mathbb{V}_{\boldsymbol0}\big[D_{k,1}^g\big]}\Bigg)^2/\mathbb{V}_{\boldsymbol0}\big[g(\chi)\Upsilon_{\boldsymbol\lambda}\big].\]

Since the payoff g is Lipschitz, Proposition 3.1 implies that this condition is close to

\[\log(1/\varepsilon)>\vartheta_1\Bigg(\sum_{k=1}^\infty\sqrt{k\big(2^{-k}-e^{-2\vartheta_1k}\big)}\Bigg)^2,\]

\[\log(1/\varepsilon)>\vartheta_1\Bigg(\sum_{k=1}^\infty\sqrt{k\big(2^{-k}-e^{-2\vartheta_1k}\big)}\Bigg)^2,\]

where we recall that

![]() $\vartheta_1\in[\log(3/2),\log2]$

is defined in (23) below. In particular, the latter condition is equivalent to

$\vartheta_1\in[\log(3/2),\log2]$

is defined in (23) below. In particular, the latter condition is equivalent to

![]() $\varepsilon<0.0915$

if

$\varepsilon<0.0915$

if

![]() $\vartheta_1=\log(3/2)$

, or

$\vartheta_1=\log(3/2)$

, or

![]() $\varepsilon<5.06\times 10^{-5}$

if

$\varepsilon<5.06\times 10^{-5}$

if

![]() $\vartheta_1=\log2$

.

$\vartheta_1=\log2$

.

Regime (II). Assume

![]() $\varepsilon>0$

is fixed. In this case, the MC and MLMC estimators share the value of

$\varepsilon>0$

is fixed. In this case, the MC and MLMC estimators share the value of

![]() $n=n(\varepsilon)$

, which is

$n=n(\varepsilon)$

, which is

![]() $\mathcal{O}(\max\{1,\log T\})$

as either

$\mathcal{O}(\max\{1,\log T\})$

as either

![]() $T\to 0$

or

$T\to 0$

or

![]() $T\to\infty$

. Moreover, the variances

$T\to\infty$

. Moreover, the variances

![]() $\mathbb{V}_{\boldsymbol0}[g(\chi_n)\Upsilon_{\boldsymbol\lambda}]$

(appearing in

$\mathbb{V}_{\boldsymbol0}[g(\chi_n)\Upsilon_{\boldsymbol\lambda}]$

(appearing in

![]() $\mathcal{C}_{\textrm{MC}}$

) and

$\mathcal{C}_{\textrm{MC}}$

) and

![]() $\mathbb{V}_{\boldsymbol0}\big[D^g_{k,1}\big]$

,

$\mathbb{V}_{\boldsymbol0}\big[D^g_{k,1}\big]$

,

![]() $k\in\mathbb{N}$

(appearing in

$k\in\mathbb{N}$

(appearing in

![]() $\mathcal{C}_{\textrm{ML}}$

; see Proposition 3.2 above) are all proportional to

$\mathcal{C}_{\textrm{ML}}$

; see Proposition 3.2 above) are all proportional to

![]() $\mathcal{O}\big(\big(T+T^2\big)e^{(\mu_{2{\boldsymbol\lambda}}-2\mu_{\boldsymbol\lambda})T}\big)$

as either

$\mathcal{O}\big(\big(T+T^2\big)e^{(\mu_{2{\boldsymbol\lambda}}-2\mu_{\boldsymbol\lambda})T}\big)$

as either

![]() $T\to0$

or

$T\to0$

or

![]() $T\to\infty$

. Therefore, by Proposition 3.2, the quotient

$T\to\infty$

. Therefore, by Proposition 3.2, the quotient

![]() $\mathcal{C}_{\textrm{MC}}/\mathcal{C}_{\textrm{ML}}$

is proportional to a constant as

$\mathcal{C}_{\textrm{MC}}/\mathcal{C}_{\textrm{ML}}$

is proportional to a constant as

![]() $T\to 0$

and a multiple of

$T\to 0$

and a multiple of

![]() $\log T$

as

$\log T$

as

![]() $T\to\infty$

.

$T\to\infty$

.

In conclusion, the MLMC estimator is preferable to the MC estimator when either

![]() $\varepsilon$

is small or T is large. Otherwise, when

$\varepsilon$

is small or T is large. Otherwise, when

![]() $\varepsilon$

is not small and T is small, the two estimators have similar performance.

$\varepsilon$

is not small and T is small, the two estimators have similar performance.

3.4.2. Comparison with SB-Alg

In the special case when the jumps of X have finite variation (or equivalently,

![]() $\int_{(-1,1)}|x|\nu(\textrm{d} x)<\infty$

), the increments

$\int_{(-1,1)}|x|\nu(\textrm{d} x)<\infty$

), the increments

![]() $X_t$

can be simulated under

$X_t$

can be simulated under

![]() $\mathbb{P}_{\boldsymbol\lambda}$

using rejection sampling (see [Reference Grabchak19, Reference Kawai and Masuda23]), making SB-Alg [Reference González Cázares, Mijatović and Uribe Bravo18] applicable to sample

$\mathbb{P}_{\boldsymbol\lambda}$

using rejection sampling (see [Reference Grabchak19, Reference Kawai and Masuda23]), making SB-Alg [Reference González Cázares, Mijatović and Uribe Bravo18] applicable to sample

![]() $\chi_n$

(see (5) for its definition) under

$\chi_n$

(see (5) for its definition) under

![]() $\mathbb{P}_{\boldsymbol\lambda}$

. The rejection sampling is performed for each of the increments of the subordinators

$\mathbb{P}_{\boldsymbol\lambda}$

. The rejection sampling is performed for each of the increments of the subordinators

and the processes

![]() $Y^{{(\pm)}}$

are as in Theorem 2.1. The algorithm proposes samples under

$Y^{{(\pm)}}$

are as in Theorem 2.1. The algorithm proposes samples under

![]() $\mathbb{P}_{\boldsymbol0}$

and rejects independently with probability

$\mathbb{P}_{\boldsymbol0}$

and rejects independently with probability

![]() $\exp\big({-}\lambda_\pm\widetilde Y_t^{{(\pm)}}\big)$

. Let

$\exp\big({-}\lambda_\pm\widetilde Y_t^{{(\pm)}}\big)$