1. Introduction

While statistical approaches dominated the first half of the 20th century in understanding the dynamics of turbulence, the increasing availability of experimental and direct numerical simulation (DNS) data has enabled structure-based approaches to yield useful insights. For example, in the viscous sublayer of wall-bounded flows, turbulent structures coherent in space and time were discovered and characterized using hydrogen bubble visualization (Kline et al. 1967). These structures consisted of streaks, which are spanwise-alternating regions of high-speed and low-speed fluid, and quasi-streamwise vortices. From this characterization of the sublayer structures, the self-sustaining process (SSP) was developed and is now a widely accepted model for how these structures sustain themselves (Hamilton, Kim & Waleffe 1995; Waleffe 1997; Jiménez & Pinelli 1999). It is evident that the detailed characterization of turbulent structures is a necessary first step toward a mechanistic understanding and modelling of wall-bounded turbulence. Structures in wall-bounded turbulence are reviewed by Robinson (1991), Adrian (2007), Jiménez (2012), and McKeon (2017).

With an eye toward a structural model of wall-bounded turbulence, Townsend proposed the attached eddy hypothesis (AEH) for asymptotically high-Reynolds number flows (Townsend 1976). The AEH supposes that the main, energy-containing motion is made up of contributions from attached eddies. Attached eddies are persistent flow patterns in the log layer whose size scales linearly with their distance from the wall; they span the entire range of scales in the log layer and – critically – are presumed to be geometrically self-similar. The AEH makes specific predictions for how the normal components of the Reynolds stress vary with distance from the wall, which are borne out by experimental (Hultmark et al. 2012; Hutchins et al. 2012; Kulandaivelu 2012; Winkel et al. 2012; Marusic et al. 2013) and numerical data (Lee & Moser 2015). Townsend did not commit himself to a particular form for the attached eddies in his statistical AEH model. Inspired by Kline et al. (1967), Townsend considered a physical, double-cone model; unfortunately, it was inconsistent with the AEH, and Townsend's modelling efforts ended there.

Inspired by the flow visualization results of Head & Bandyopadhyay (1981), Perry & Chong (1982) specified a shape for the attached eddies, leading to their attached eddy model (AEM). In addition to making the same predictions as the AEH for the normal components of the Reynolds stress, their AEM also yields the log law for the mean flow and predictions for streamwise spectra. They found that all eddy shapes yield similar first- and second-order statistics, but predictions beyond basic statistics are dependent on the shape of the eddy. A key point is that attached eddies, however they look, are spatially localized. More details on the AEM and subsequent refinements are reviewed by Marusic & Monty (2019).

Partly spurred by the success of the AEH, a growing number of investigations have explored whether self-similar coherent motions are present in wall-bounded turbulence. Tomkins & Adrian (2003) found that the spanwise length scales of conditionally averaged low-speed structures grew linearly with distance from the wall, as attached eddies are supposed to. del Álamo et al. (2006) found that, on average, the spanwise and streamwise length scales of vortical structures grow roughly linearly with distance from the wall. Lozano-Durán & Jiménez (2014) concluded the same for sweep and ejection structures, and Hwang & Sung (2018) concluded the same for structures of positive or negative velocity fluctuations. These studies show that the length scales of various structures grow, on average, with distance from the wall.

Evocative results were published by Dennis & Nickels (2011). By conditionally averaging their three-dimensional (3-D) particle image velocimetry measurements based on spanwise swirl strength at a chosen distance from the wall, they extracted a structure with the shape of a horseshoe vortex. Repeating the analysis at several distances from the wall, they found a similar shape at each distance whose size grew with distance from the wall. The authors suggested that the shape would make a sensible choice of a ‘representative eddy’. Although the analysis was qualitative and performed in the outer layer, it provided exciting hints of the presence of self-similar coherent structures.

Recalling that Townsend's eddies constitute the main energy-containing motions, Hellström, Marusic & Smits (2016) used proper orthogonal decomposition (POD) to explore the existence of self-similar eddies in turbulent pipe flow. These results were also highly evocative as the leading (most energetic) POD modes collapsed on top of each other when scaled by their size. The self-similar POD modes spanned a decade in wall-normal length scales, and the largest structures extended into the outer region.

Although POD provides an approach to extracting energetic structures from data, it has the drawback that in homogeneous directions, the POD modes are constrained to be Fourier modes, precluding direct identification of structure or localization (Holmes et al. 2012). Lumley has espoused the view that such unlocalized structures should not be considered eddies (Lumley 1970; Tennekes & Lumley 1972; Lumley 1981). A well-known formalism incorporating localization is that of wavelets. A wavelet basis consists of elements localized in both space and scale. Traditionally, the basis elements are formed by translations and dilations of a pre-specified vector called the mother wavelet; this construction makes wavelets perfectly self-similar across scales. Due to their localization and the space-scale unfolding they produce, wavelets have found much use in turbulence (Argoul et al. 1989; Everson, Sirovich & Sreenivasan 1990; Meneveau 1991a,b; Yamada & Ohkitani 1991; Farge 1992; Katul, Parlange & Chu 1994; Katul & Vidakovic 1998; Farge, Pellegrino & Schneider 2001; Farge et al. 2003; Okamoto et al. 2007; Ruppert-Felsot, Farge & Petitjeans 2009). More information about wavelets is available in the brief review article by Strang (1989) and in the books by Daubechies (1992), Meyer (1993), Mallat (1999), and Frazier (2006).

In applications, a drawback of wavelets is that there are many possible choices for the mother wavelet. Naturally, one may wonder which wavelet is best suited for a particular application. Katul & Vidakovic (1996) considered this question in the context of atmospheric turbulence. Motivated by the AEH, they sought to decompose flow variables into contributions from attached (energy-containing) eddies and detached (negligible-energy) eddies. Katul & Vidakovic used an entropy-minimizing scheme developed by Coifman & Wickerhauser (1992) to select the optimal wavelet from a library of known wavelets. We emphasize that the shapes of the wavelets are still pre-specified and that they are perfectly self-similar across different scales. These two features – common in any traditional wavelet analysis – are undesirable if one's goal is to extract structure from data and to determine the degree of self-similarity of the data.

Motivated by the preceding discussion, Floryan & Graham (2021) developed a technique called data-driven wavelet decomposition (DDWD). DDWD integrates the data- and energy-driven nature of POD with the space and scale localization properties of wavelets without imposing self-similarity across scales. Floryan & Graham (2021) applied DDWD to homogeneous isotropic turbulence and found that the structures in the data (represented by the data-driven wavelets) were self-similar for scales corresponding to the inertial subrange, reminiscent of the Richardson cascade. Our goal in this work is to leverage DDWD as a data-driven basis of energetic, spatially localized, multiscale vectors for characterizing the shape and self-similarity of structures in wall-bounded turbulence. We extend and apply the technique to experimental measurements in high-Reynolds number pipe flow. As we will show, we identify strongly self-similar, spatially localized, energetic structures across a range of scales – candidate eddies. We describe the experimental setup and measurements in § 2. We then briefly review DDWD in § 3 and describe extensions to accommodate the presently analysed experimental measurements. Section 4 describes the structures that we identify, and we end by placing our results in the context of the literature in § 5.

2. Experimental setup

The analysis in this work is based on data acquired in the Princeton Superpipe facility, a facility capable of creating fully developed pipe flow at high Reynolds numbers. The data were acquired at a Reynolds number of $Re_D = U_b D / \nu = 608\,000$, with a corresponding friction Reynolds number of $Re_\tau = u_\tau R / \nu = 12\,400$, using a cross-wire thermal anemometry probe. Here, $U_b$ is the bulk velocity, $D = 2R$ is the pipe diameter, $\nu$ is the kinematic viscosity of the fluid, and $u_\tau = \sqrt {\tau _w / \rho }$ is the friction velocity, where $\tau _w$ is the wall shear stress, and $\rho$ is the density of the fluid. The pipe is hydraulically smooth for this operating condition (McKeon et al. 2004b). Details of the facility can be found in Zagarola & Smits (1998), and details of the data we use and the measurement probe can be found in Fu, Fan & Hultmark (2019); we recapitulate below.

The data consist of long time series of the streamwise velocity component measured at 40 distances from the pipe wall, with two time series taken at each distance. The measurements at different distances from the wall were not simultaneous. The positions $y$ of the measurement probe are shown in figure 1, with a superscript $+$ denoting wall units (non-dimensionalized by $\nu / u_\tau$). The measurements were acquired in three regions of the flow: (I) the power-law region, (II) the log-law region, and (III) the wake region. Although the log-law region is classically taken to start at $y^+ = 30$ (Pope 2000), experiments in the Superpipe suggest that the mean streamwise velocity follows a power law for $50 < y^+ < 600$, with the log law not taking hold until $y^+ = 600$ (McKeon et al. 2004a).

Figure 1. Wall-normal positions of the measurement probe.

Each time series consists of roughly $1.67 \times 10^7$ samples, sampled at $F_s=300$ kHz, and filtered using an analog 8-pole Butterworth filter at 150 kHz. We convert the temporal signals to spatial signals using Taylor's frozen field hypothesis, in which case distances between consecutive samples range from 2.6 wall units at the measurement position closest to the wall to 4.0 wall units at the measurement position furthest from the wall. The measurement probe had a volume of $42\,\mathrm {\mu } {\rm m}\times 42\,\mathrm {\mu }{\rm m}\times 50\,\mathrm {\mu } {\rm m}$, or $8.0\times 8.0\times 9.5$ wall units$^3$. The length of the wires in the measurement probe was 60 $\mathrm {\mu }$m, or 11.4 wall units.
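The conversion from sample rate to streamwise spacing can be checked with a few lines of arithmetic. The sketch below is illustrative only and rests on two assumptions that are not stated in the text: that the convection velocity in Taylor's hypothesis is the local mean velocity (taken here as the rounded range of 4.2 to 6.4 m s$^{-1}$ quoted in this section), and that the viscous length $\nu/u_\tau$ can be inferred from the probe dimensions (42 $\mathrm{\mu}$m corresponding to 8.0 wall units). The names `viscous_length` and `sample_spacing_plus` are hypothetical.

```python
# Values quoted in the text.
F_S = 300e3               # sampling frequency F_s, Hz
PROBE_WIDTH_M = 42e-6     # probe width, m
PROBE_WIDTH_PLUS = 8.0    # the same width expressed in wall units

# Viscous length nu / u_tau inferred from the probe dimensions.
# This is an inference for illustration, not a value given in the text.
viscous_length = PROBE_WIDTH_M / PROBE_WIDTH_PLUS  # m


def sample_spacing_plus(mean_velocity):
    """Spacing between consecutive samples, in wall units.

    Taylor's frozen field hypothesis maps a time step 1 / F_s to a
    streamwise distance U / F_s; the local mean velocity is assumed
    to be the convection velocity.
    """
    return mean_velocity / F_S / viscous_length


spacing_near_wall = sample_spacing_plus(4.2)   # lowest quoted mean velocity, m/s
spacing_far_from_wall = sample_spacing_plus(6.4)  # highest quoted mean velocity, m/s
print(f"near wall: {spacing_near_wall:.2f} wall units")
print(f"far from wall: {spacing_far_from_wall:.2f} wall units")
```

With these rounded inputs the spacings come out at roughly 2.7 and 4.1 wall units, close to the quoted 2.6 and 4.0; the small difference is presumably due to rounding of the inputs.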

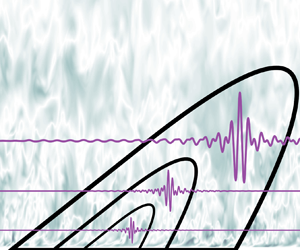

Since the time series were filtered at the Nyquist frequency of 150 kHz during collection, the energy at the largest wavenumbers $k$ corresponding to the measurement noise remains unattenuated. We filter each time series again with a two-pole Butterworth filter at 25 kHz. Figure 2 shows the effect of this filter on the premultiplied power spectral density (PSD) of the signal measured closest to the wall at $y^+=350$ (the rest of the time series are affected similarly by the same filter). The PSD, $\phi _{uu}$, was estimated using segments that contained $2^{19}$ elements, overlapped by 50 %, and windowed with the Hamming window.

Figure 2. Premultiplied PSD of $u$, measured at $y^+=350$, without and with filtering at 25 kHz.

Before describing the method we will use to analyse the structure of the Superpipe data, we estimate the largest coherent structure – or eddy – we can expect to find. The largest eddies in a flow are usually referred to as the very-large-scale motions (VLSMs), and their sizes can be obtained from the PSD of the streamwise velocity. Specifically, the initial peak in the premultiplied PSD is taken to correspond to the VLSM (Kim & Adrian 1999). Selecting this peak can be difficult, however, since producing a good estimate of the PSD involves a tradeoff between using longer data segments (yielding greater resolution in $k$) and more segments (yielding less variance in the PSD estimate). Even though our time series are rather long, the PSD in figure 2 is the best we can produce without relying on using a moving-average filter in $k$-space. Estimating the location of the initial peak in the premultiplied PSD can be fraught with uncertainty. To avoid the tradeoff involved in estimating PSDs, we opted to use the time correlation function to estimate the largest expected coherent structure. The heatmap of the time correlation function at all 40 wall-normal positions is shown in figure 3, and it suggests that all 40 time series are correlated up until 10 eddy turnover times. Since $U_b=5.4$ m s$^{-1}$ and the mean velocity $\bar U$ only ranges from 4.2 to 6.4 m s$^{-1}$ over the range of wall-normal positions considered here, one can effectively say that the structures at all wall-normal positions are correlated in the streamwise direction up until $O(1 R)$ to $O(10 R)$. VLSMs have previously been estimated to be $O(10 R)$ in length (Kim & Adrian 1999).
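As an illustration of how a time correlation function of this kind might be estimated, the following sketch computes a normalized autocorrelation of the velocity fluctuations with an FFT and converts a lag in samples to the dimensionless time $\tau U_b / R$. It is a generic estimator under stated assumptions, not necessarily the procedure used for the Superpipe data: the unbiased normalization, the zero-padding, and the function names `time_correlation` and `lag_to_turnover_time` are all choices made here for illustration, and the demonstration signal is synthetic.

```python
import numpy as np


def time_correlation(u, max_lag):
    """Normalized autocorrelation of the fluctuations of u for lags 0..max_lag (in samples)."""
    u = np.asarray(u, dtype=float)
    fluct = u - u.mean()
    n = fluct.size
    # Zero-pad to a power of two of at least 2n - 1 points so that the
    # FFT-based product gives a linear (not circular) correlation.
    nfft = 1 << (2 * n - 1).bit_length()
    spectrum = np.fft.rfft(fluct, nfft)
    acov = np.fft.irfft(spectrum * np.conj(spectrum), nfft)[: max_lag + 1]
    # Unbiased estimate: divide each lag by the number of overlapping samples.
    acov = acov / (n - np.arange(max_lag + 1))
    return acov / acov[0]


def lag_to_turnover_time(lag_samples, f_s, u_b, radius):
    """Convert a lag in samples to tau * U_b / R, with tau = lag / F_s."""
    return lag_samples / f_s * u_b / radius


# Synthetic demonstration signal: a sinusoid with a period of 100 samples.
t = np.arange(10_000)
signal = np.sin(2 * np.pi * t / 100)
corr = time_correlation(signal, max_lag=200)
print(corr[50], corr[100])
```

For real data, the lag at which the correlation decays to a chosen threshold would be converted with `lag_to_turnover_time`, using the facility's pipe radius, which is not quoted in this section.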

Figure 3. Heat map of the time correlation function at all wall-normal positions. $N_\tau = \tau F_s$ is the number of points needed to capture a time lag of $\tau$ (s), and $\tau U_b/R$ is the dimensionless eddy turnover time.

3. Data-driven wavelet decomposition

Data-driven wavelet decomposition was introduced by Floryan & Graham (2021), and has its roots in POD and wavelet analysis. First, we provide a conceptual overview of DDWD and its connections to POD and wavelet analysis – for full details, we refer the reader to Floryan & Graham (2021) and to Appendix A. We then describe the pre-processing steps and modifications needed to analyse the long time series described in § 2.

3.1. Overview and connections to POD and wavelet analysis

We consider periodic vectors $\boldsymbol{z}\in \mathbb {R}^N$, with $N$ even, and a dataset $\{\boldsymbol{z}_i \in \mathbb {R}^N\}_{i=1}^{M}$. Wavelets, POD, and DDWD construct different orthonormal bases for $\mathbb {R}^N$. We interpret the basis elements as structures. Wavelet basis elements are localized structures that are independent of the data being analysed. POD basis elements, on the other hand, tend to be global structures, and they derive from the data under consideration. Specifically, POD basis elements are constructed via an energy maximization problem and are thus interpreted as energetic structures present in the data. DDWD combines the main features of wavelets and POD, producing basis elements representing energetic, localized structures present in the data.

For our purposes, the most useful way to think about wavelets is by the associated subspaces. A wavelet basis splits $\mathbb {R}^N$ into two orthogonal subspaces of dimension $N/2$: the approximation subspace $V_{-1}$, and the detail subspace $W_{-1}$. Subspace $V_{-1}$ is spanned by $N/2$ mutually orthonormal even translates of the father wavelet, $\boldsymbol{\phi}_{-1}$, and subspace $W_{-1}$ is spanned by $N/2$ mutually orthonormal even translates of the mother wavelet, $\boldsymbol{\psi}_{-1}$. (By ‘even’, we mean that the translates are shifted by an even number of mesh points.) The mother wavelet can be constructed from the father wavelet, and vice versa. Traditionally, projecting a vector in $\mathbb {R}^N$ onto the approximation subspace $V_{-1}$ yields a low-pass filtered version of the vector, and projecting the vector onto the detail subspace $W_{-1}$ yields a high-pass filtered version of the vector (hence the nomenclature of ‘approximation’ and ‘detail’ subspaces). Since $V_{-1}$ and $W_{-1}$ are orthogonal complements, the vector is equal to the superposition of these two projections. The splitting of subspaces is performed recursively on the approximation subspace, ultimately yielding a decomposition $\mathbb {R}^N = V_{-p} \oplus W_{-p} \oplus \cdots \oplus W_{-1}$ for $N = 2^p$, illustrated in figure 4. One may think of this decomposition as the direct sum of a very coarse approximation subspace ($V_{-p}$) and progressively finer detail subspaces ($W_{-p}$ to $W_{-1}$). At each stage $l$, $V_{-l}$ and $W_{-l}$ are respectively spanned by $N/2^l$ mutually orthonormal translates of $\boldsymbol{\phi} _{-l}$ and $\boldsymbol{\psi} _{-l}$. In a traditional wavelet basis, one specifies the mother wavelet, $\boldsymbol{\psi} _{-1}$, and all other basis elements derive from the mother wavelet. An example – the Haar wavelet basis – is shown in figure 5. Note two salient features of wavelet bases: spatial localization of the basis elements, and self-similarity across stages (the wavelets at coarser stages are essentially simple dilations of the mother wavelet).

Figure 4. Subspaces formed by wavelets periodic in $\mathbb {R}^N$. The full space $\mathbb {R}^N$ is composed of the highlighted subspaces.

Figure 5. Haar wavelets on $\mathbb {R}^8$, following the style of figure 4. The Haar basis for each subspace is shown.
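The first-stage splitting described above can be made concrete with the Haar wavelet on $\mathbb{R}^8$. The following is a minimal sketch, assuming the standard normalized Haar father and mother wavelets and using circular shifts for the periodic translates; the helper names `haar_stage` and `even_translates` and the test vector `z` are illustrative choices, not taken from the source.

```python
import numpy as np


def haar_stage(n):
    """Return the first-stage Haar father and mother wavelets as vectors of length n."""
    phi = np.zeros(n)
    psi = np.zeros(n)
    phi[:2] = 1.0 / np.sqrt(2.0)
    psi[0] = 1.0 / np.sqrt(2.0)
    psi[1] = -1.0 / np.sqrt(2.0)
    return phi, psi


def even_translates(v):
    """Matrix whose columns are the circular shifts of v by 0, 2, 4, ... points."""
    n = v.size
    return np.column_stack([np.roll(v, 2 * m) for m in range(n // 2)])


N = 8
phi, psi = haar_stage(N)
V1 = even_translates(phi)  # orthonormal basis of the approximation subspace V_{-1}
W1 = even_translates(psi)  # orthonormal basis of the detail subspace W_{-1}

z = np.arange(N, dtype=float) ** 2  # arbitrary test vector
z_approx = V1 @ (V1.T @ z)  # projection onto V_{-1} (low-pass)
z_detail = W1 @ (W1.T @ z)  # projection onto W_{-1} (high-pass)

# The two projections superpose to the original vector, and the energy splits.
print(np.allclose(z_approx + z_detail, z))
print(np.isclose(z @ z, z_approx @ z_approx + z_detail @ z_detail))
```

Repeating the same construction on the coefficients `V1.T @ z` would give the next stage of the recursion.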

POD can be considered in a similar light as wavelet analysis. Letting $\{\boldsymbol{u}_j\}_{j=1}^N$ denote the ordered set of POD basis elements, we have the following decomposition: ${\mathbb {R}^N = \text {span}(\boldsymbol{u}_1) \oplus \cdots \oplus \text {span}(\boldsymbol{u}_N)}$. That is, POD decomposes $\mathbb {R}^N$ into a direct sum of 1-D subspaces. Whereas wavelet subspaces are ordered by scale, POD subspaces are ordered by energy content; whereas wavelet subspaces are determined by the choice of the mother wavelet, POD subspaces are determined from data.
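The energy ordering of the POD subspaces can be illustrated with a singular value decomposition of a data matrix whose columns are the data vectors. This is a minimal sketch on synthetic random data; whether the mean is subtracted beforehand is not specified here and is left out, and the function name `pod_basis` is hypothetical.

```python
import numpy as np


def pod_basis(Z):
    """POD basis of the columns of Z (shape N x M) via the SVD.

    Returns the N x N orthonormal matrix of POD modes (columns) and the
    energy of the dataset captured by each mode, in non-increasing order.
    """
    U, s, _ = np.linalg.svd(Z, full_matrices=True)
    energies = np.zeros(Z.shape[0])
    energies[: s.size] = s**2  # modes beyond the rank capture no energy
    return U, energies


rng = np.random.default_rng(0)
Z = rng.standard_normal((8, 50))  # synthetic dataset: N = 8, M = 50
U, energies = pod_basis(Z)

# Each column of U spans one 1-D subspace; together they span R^N.
print(np.allclose(U.T @ U, np.eye(8)))
# The per-mode energies add up to the total energy of the dataset.
print(np.isclose(energies.sum(), np.sum(Z**2)))
```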

DDWD combines POD and wavelet analysis. Namely, it maintains the subspace structure of a wavelet basis (shown in figure 4, where each subspace is spanned by mutually orthonormal translates of a single wavelet), but the wavelet at each stage is determined from data by an energy maximization principle. Letting $E({\cdot })$ denote the energy of a dataset contained in a subspace, orthogonality of the subspaces implies that $E(V_{-l}) = E(V_{-l-1}) + E(W_{-l-1})$. That is, whenever a subspace is split, the energy contained in it is also split. Motivated by the observation that large-scale (or coarse) structures tend to be the most energetic ones in fluid flows, each time we split a subspace, we design the subsequent subspaces so that the approximation subspace contains as much of the dataset's energy as possible. An analogous approach is to progressively minimize the energy in the detail subspaces. The design of the subspaces can be framed as an optimization problem. Consider an ensemble of $M$ data vectors forming the dataset $\boldsymbol{\mathsf{Z}} = [\boldsymbol{z}_1\ \boldsymbol{z}_2\ \cdots \ \boldsymbol{z}_M] \in \mathbb {R}^{N \times M}$. The mathematical formulation for finding the first-stage DDWD wavelet is

\begin{equation} \left.\begin{gathered} \min _{\boldsymbol{\psi}_{-1}} \quad \frac{1}{\| \boldsymbol{\mathsf{Z}} \|_F^2} \underbrace{\sum _{m=0}^{N/2-1} \| \boldsymbol{\mathsf{Z}}^{\rm T} \boldsymbol{\mathsf{R}}^{2m} (\boldsymbol{\psi}_{-1}) \|^2 }_{M E_1} + \lambda^2 \text{Var}(\boldsymbol{\psi}_{-1}) \\ \text{s.t.} \quad \boldsymbol{\psi}_{-1}^{\rm T} \boldsymbol{\mathsf{R}}^{2m}(\boldsymbol{\psi}_{-1}) = \delta_{m 0},\quad m = 0, \ldots, N/2-1, \end{gathered}\right\} \tag{3.1} \end{equation}

where $\boldsymbol{\mathsf{R}}^q$ is the linear operator that circularly shifts its input by $q$ (e.g. for $\boldsymbol{\psi} _{-1} = [a,b,c,d]^\textrm {T}$, $\boldsymbol{\mathsf{R}}^1(\boldsymbol{\psi} _{-1}) = [d,a,b,c]^\textrm {T}$). Above, $E_1$ denotes the mean energy of the data vectors projected onto $W_{-1}$, and $F$ denotes the Frobenius norm. The first term in (3.1) is the fraction of the dataset's energy in the finest detail subspace (which typically contains the smallest scale). The circular variance of the wavelet, which is bounded between 0 and 1, is penalized in the second term to encourage the wavelet to be spatially localized. The results are robust to the strength of the penalty parameter $\lambda ^2$, with the penalty term hardly affecting the wavelets’ ability to capture energy (Floryan & Graham 2021). The reason is that the penalty term manipulates the phases of each Fourier component of a wavelet to achieve spatial localization while essentially leaving the magnitudes untouched. Moreover, we find that the shape of the wavelet found by DDWD is generally robust to the choice of $\lambda ^2$ across several orders of magnitude (see Appendix A.2). For the results in this work, we have used $\lambda ^2 = 10^{-4}$.
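
As an illustration of the quantities in (3.1), the following minimal sketch evaluates the objective and the constraint for a candidate wavelet. It is not the DDWD solver. The function names are hypothetical, the data matrix is assumed to hold one data vector per column, and the circular variance is assumed to take the standard directional-statistics form $1 - |\sum_n \psi_n^2 {\rm e}^{2{\rm \pi} {\rm i} n/N}|$; the exact definition used by DDWD is given in Floryan & Graham (2021) and may differ.

```python
import numpy as np

def circ_shift(v, q):
    """R^q: circularly shift v by q entries ([a,b,c,d] -> [d,a,b,c] for q=1)."""
    return np.roll(v, q)

def even_shifts(psi):
    """Matrix whose columns are R^{2m}(psi) for m = 0, ..., N/2-1."""
    N = psi.size
    return np.stack([circ_shift(psi, 2 * m) for m in range(N // 2)], axis=1)

def energy_fraction(Z, psi):
    """First term of (3.1); Z holds one data vector per column (N x M)."""
    return np.linalg.norm(Z.T @ even_shifts(psi)) ** 2 / np.linalg.norm(Z) ** 2

def constraint_residual(psi):
    """Largest violation of psi^T R^{2m}(psi) = delta_{m0}."""
    gram_row = even_shifts(psi).T @ psi
    gram_row[0] -= 1.0
    return np.max(np.abs(gram_row))

def circular_variance(psi):
    """ASSUMED definition: 1 - |sum_n psi_n^2 exp(2*pi*i*n/N)| for unit-norm psi."""
    theta = 2 * np.pi * np.arange(psi.size) / psi.size
    return 1.0 - np.abs(np.sum(psi**2 * np.exp(1j * theta)))

def objective(Z, psi, lam2=1e-4):
    """Objective of (3.1) for a candidate wavelet psi."""
    return energy_fraction(Z, psi) + lam2 * circular_variance(psi)

# Illustration with a Haar wavelet (a stand-in, not a data-driven wavelet)
# and random data (a stand-in for velocity segments).
N, M = 8, 50
rng = np.random.default_rng(0)
Z = rng.standard_normal((N, M))
haar = np.zeros(N)
haar[:2] = [1 / np.sqrt(2), -1 / np.sqrt(2)]
print(constraint_residual(haar), objective(Z, haar))
```

Under this assumed definition, a wavelet concentrated on a few adjacent entries has a circular variance near 0, whereas a vector spread uniformly over the domain has a circular variance of 1, consistent with the bounds stated above.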

The wavelets at later stages are found recursively. To find $\boldsymbol{\psi} _{-2}$, we project the dataset onto $V_{-1}$, replace $N$ by $N/2$ and $\boldsymbol{\mathsf{R}}^{2m}$ by $\boldsymbol{\mathsf{R}}^{4 m}$ in (3.1), and decrease $\lambda ^2$ by a factor of 4 (Floryan & Graham 2021). In the end, DDWD produces an energetic hierarchy of spatially localized orthonormal structures.

We emphasize two differences between DDWD and traditional wavelet analysis. The first is that the concept of energy is built into DDWD. Whereas the stage of the decomposition induced by a traditional wavelet basis is synonymous with the scale of the wavelets, this is not necessarily the case for a basis produced by DDWD since energy is also taken into account. Scale and energy are often related in fluid flows, however, in which case the energetic hierarchy of structures found by DDWD will also be a hierarchy of scales.

The second difference is that self-similarity across stages is not built into DDWD. It bears repeating that only energy is taken into consideration when finding structures at each stage of the decomposition, and a separate optimization problem is solved at each stage. For data that are not self-similar, neither are the basis elements produced by DDWD. The converse is also true: self-similarity in the data manifests itself in self-similar basis elements. In Floryan & Graham (2021), DDWD applied to data from homogeneous isotropic turbulence produced self-similar basis elements in the range of scales corresponding to the inertial subrange, while when it was applied to white noise and data generated by the Kuramoto–Sivashinsky equation, the basis elements were not self-similar. Determining the degree of self-similarity of structures in the Superpipe data will feature prominently later in this work.

3.2. Pre-processing and modifications for long time series

Like POD, DDWD uses an ensemble of vectors (the data). Here, however, we have two long time series of data (for each wall-normal position, which will be analysed separately). We form an ensemble by breaking the two time series into many shorter segments, as is done when estimating the PSD. Doing so introduces a number of issues that must be addressed: how long should the shorter segments be; how can border effects be minimized; and how can we efficiently capture very small and very large structures?

3.2.1. Data segmentation

We first address how to segment a long time series into an ensemble of shorter segments of length $N=2^p$. The segments need to be long enough to capture the length of the largest coherent structure – or eddy – in the flow. Figure 3 suggests we need $p \ge 15$ to capture the largest correlated structure; however, the last 2–3 stages of any wavelet basis contain wavelets that are not spatially localized. We set $p=18$ to ensure the largest spatially localized wavelet represents the largest correlated/coherent structure.

3.2.2. Border effects

Following the classical discrete wavelet transform, DDWD implicitly assumes that signals are periodic. Of course, this is not true for our segmented data. If a segment of data is treated as periodic, there will generally be a discontinuity when the end of the segment is wrapped back around to the beginning. A wavelet decomposition of a signal with a discontinuity generally has high energy in the small-scale wavelets localized around the discontinuity. This border effect could greatly distort the data-driven wavelets found by DDWD.

To circumvent distortions due to border effects, we turn to PSD estimators. PSD estimators based on the discrete Fourier transform also implicitly assume periodic signals, so they are also subject to border effects. To minimize border effects, estimators window the data vectors before taking discrete Fourier transforms of them (Press et al. 2007). Windowing data consists of multiplying the data by a function $w$ that rises from zero to a peak of one and then falls back to zero. We do the same, windowing our data vectors with a 50 % Tukey window before performing DDWD on them. Meneveau (1991a) similarly windowed his non-periodic data before performing a wavelet analysis. We have tested this procedure on windowed and unwindowed periodic data, finding that windowing does not affect the shapes of the data-driven wavelets. The type of window used also does not have a significant impact. We double the number of data vectors by overlapping the segments by 50 %; this is a common practice to decrease variance when estimating spectra (Press et al. 2007), and doing so allows almost every part of the signal to appear in our dataset unattenuated by the 50 % Tukey window.
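
The segmentation and windowing steps can be sketched as follows. This is a minimal illustration with hypothetical function names, a toy signal and toy segment length (the actual segments have length $2^{18}$), and a Tukey window written out from its standard tapered-cosine definition rather than taken from the authors' code.

```python
import numpy as np

def tukey_window(N, alpha=0.5):
    """Tukey (tapered cosine) window; alpha is the fraction of the window
    occupied by the two cosine tapers (alpha = 0.5 for a 50 % Tukey window)."""
    n = np.arange(N)
    width = alpha * (N - 1) / 2.0            # length of each taper, in samples
    t = np.minimum(n, N - 1 - n) / width     # distance to nearest edge, in taper widths
    return np.where(t < 1.0, 0.5 * (1.0 - np.cos(np.pi * t)), 1.0)

def segment_with_overlap(u, N, overlap=0.5):
    """Split the 1-D series u into length-N segments (one per column)."""
    hop = int(N * (1.0 - overlap))
    starts = range(0, u.size - N + 1, hop)
    return np.stack([u[s:s + N] for s in starts], axis=1)

# Toy signal standing in for a long velocity time series.
rng = np.random.default_rng(0)
u = rng.standard_normal(32)
N = 8
segments = segment_with_overlap(u, N)        # shape (8, 7): 50 % overlap
w = tukey_window(N)
Z = segments * w[:, None]                    # windowed data matrix
print(segments.shape, w.round(3))
```

With 50 % overlap, the second half of each segment reappears as the first half of the next one, so a sample that falls in the taper of one segment generally falls in the flat part of a neighbouring segment.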

Since windowing data attenuates the signal, PSD estimators compensate for this attenuation in order to calculate accurate estimates of the energy contained within each Fourier mode. For a window $\boldsymbol{w} \in \mathbb {R}^N$, the attenuation caused by the windowing is counteracted by dividing the energy in each Fourier mode by $\boldsymbol{w}^\textrm {T} \boldsymbol{w}/N$, which is bounded between zero and one (Press et al. 2007). Each DDWD subspace contains basis elements that (as a whole) span the entire spatial domain, just like individual Fourier modes. Consequently, we can account for the attenuation from the windowing by dividing the energy in $\boldsymbol{\mathsf{Z}}$ and each DDWD subspace by $\boldsymbol{w}^\textrm {T} \boldsymbol{w}/N$. We account for the segment overlapping, which doubles our number of data vectors, by further dividing the energy by 2. The additional factor arises for the following reason. The energy of the long time series $\boldsymbol{u}$ (before segmenting it) is $\boldsymbol{u}^\textrm {T}\boldsymbol{u}$. After we segment it with 50 % overlap, we store the resulting segments in a data matrix $\boldsymbol{\mathsf{Z}}$. Each entry of $\boldsymbol{u}$ appears twice in $\boldsymbol{\mathsf{Z}}$ due to the 50 % overlap (except entries near the beginning and end of $\boldsymbol{u}$). Then the energy in $\boldsymbol{\mathsf{Z}}$ is $\Vert \boldsymbol{\mathsf{Z}} \Vert _F^2 \approx 2 \boldsymbol{u}^\textrm {T} \boldsymbol{u}$. Note that multiplying the data by a constant does not affect the optimization problem in (3.1) because of how the first term in the objective function is normalized. Hereafter, $l$ will denote the stage, and $E_l$ will denote the stage-$l$ energy with the factor of $2 \boldsymbol{w}^\textrm {T} \boldsymbol{w}/N$ accounted for.
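
The two correction factors can be checked numerically. The sketch below is an illustration under stated assumptions, not the authors' code: white noise stands in for the velocity signal, the segment length is a toy value, and the variable names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
N, n_blocks = 256, 64
u = rng.standard_normal(N * n_blocks)        # stand-in for the long time series

# Segments of length N with 50 % overlap, one per column.
hop = N // 2
starts = np.arange(0, u.size - N + 1, hop)
Z = np.stack([u[s:s + N] for s in starts], axis=1)

# Overlap: each interior sample appears twice, so ||Z||_F^2 is close to 2 u^T u.
ratio_overlap = np.linalg.norm(Z) ** 2 / (u @ u)

# 50 % Tukey window (tapered cosine), written out explicitly.
n = np.arange(N)
t = np.minimum(n, N - 1 - n) / (0.25 * (N - 1))
w = np.where(t < 1.0, 0.5 * (1.0 - np.cos(np.pi * t)), 1.0)

# Windowing attenuates the energy; dividing by 2 w^T w / N compensates for
# both the window and the overlap (on average, for a stationary signal).
factor = 2.0 * (w @ w) / N
ratio_corrected = np.linalg.norm(Z * w[:, None]) ** 2 / factor / (u @ u)

print(ratio_overlap, ratio_corrected)
```

For this stationary toy signal, `ratio_overlap` should be close to 2 and `ratio_corrected` close to 1, with the small deficit coming from the samples near the two ends of the series that appear in only one segment.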

3.2.3. Capturing disparate scales

An important issue is how to efficiently capture very small and very large scales. The very smallest scales in the data will be of a size on the order of one element (2.6 to 4.0 viscous lengths, depending on the wall-normal position) in our data segments, and the very largest scales will be of a size on the order of $2^{18}$ elements ($55R$ to $85R$). The constrained optimization problem in (3.1) is converted to an unconstrained optimization problem (see Floryan & Graham 2021) and solved using the BFGS algorithm. The optimization problem scales poorly since evaluating the converted objective function once has computational complexity $O(N^2 \log _2 N + N^2 M)$, and the number of iterations it takes the BFGS algorithm to converge grows with $N$ (although the rate of growth is unclear). This makes the problem intractable for data vectors of length $2^{18}$.

We solve this problem by exploiting the iterative nature of a wavelet decomposition and the spatial localization of data-driven wavelets. In the first stage of DDWD, we find the lowest-energy (typically finest-scale) structures. Since these first-stage structures tend to be highly localized, we do not need long data vectors to capture them. Thus, we can perform DDWD on an ensemble of short vectors of length $N_s \ll N$ to capture the smallest-scale structures.

The result of the first stage of DDWD will be wavelets of length $N_s$, which form part of a basis for $\mathbb {R}^{N_s}$. Ultimately, we need to work our way up to full-length vectors of length $N$ since we seek a basis for $\mathbb {R}^N$. Taking advantage of the spatial localization of the wavelets, we simply pad them with zeros until their length is $N$. With the full-length first-stage wavelets in hand, we use them to coarsen the full-length data vectors by projecting the data onto the approximation subspace $V_{-1}$ (see figure 4). The projected data have length $N/2$ and are simply coarsened versions of the original data. We proceed iteratively, performing one stage of DDWD at a time on shortened segments of the coarsened data. The technical details are in Appendix A.1.
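
The zero-padding and coarsening steps can be illustrated with a known compactly supported wavelet. In the sketch below, the Daubechies 2 (db2) filters stand in for a first-stage data-driven wavelet and its companion scaling vector, the lengths $N_s = 16$ and $N = 64$ are toy values, and the function names are hypothetical; the procedure actually used is described in Appendix A.1.

```python
import numpy as np

# db2 scaling (h) and wavelet (g) filters, standing in for the first-stage
# vectors that DDWD would find on short data vectors.
s3 = np.sqrt(3.0)
h = np.array([1 + s3, 3 + s3, 3 - s3, 1 - s3]) / (4 * np.sqrt(2.0))
g = np.array([h[3], -h[2], h[1], -h[0]])

def zero_pad(v, N):
    """Pad v with trailing zeros up to length N."""
    out = np.zeros(N)
    out[:v.size] = v
    return out

def even_shifts(v):
    """Matrix whose columns are the circular shifts of v by 0, 2, 4, ..."""
    return np.stack([np.roll(v, 2 * m) for m in range(v.size // 2)], axis=1)

N_s, N = 16, 64
psi_short = zero_pad(g, N_s)       # "short" wavelet, length N_s
phi_short = zero_pad(h, N_s)       # "short" scaling vector, length N_s
psi = zero_pad(psi_short, N)       # padded to full length N
phi = zero_pad(phi_short, N)

W = even_shifts(psi)               # basis for the detail subspace W_{-1}
V = even_shifts(phi)               # basis for the approximation subspace V_{-1}

rng = np.random.default_rng(1)
Z = rng.standard_normal((N, 10))   # toy full-length data, one vector per column
Z_coarse = V.T @ Z                 # coarsened data, length N/2 per vector
Z_detail = W.T @ Z                 # stage-1 detail coefficients

print(Z_coarse.shape)
```

Because the padded vectors remain orthonormal to their even circular shifts, the energy of the data splits exactly between `Z_coarse` and `Z_detail`. For a data-driven wavelet that is only approximately localized, orthonormality after padding would hold approximately rather than exactly.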

Note that once we have our data-driven wavelets, performing a wavelet decomposition with them scales well with the length of the data vectors. It is only the algorithm used to find the data-driven wavelets that scales poorly.

3.2.4. Enforcing zero mean and validating against synthetic data

In traditional wavelet bases, $\boldsymbol{\phi} _{-18}$ from stage $p=18$ is always a vector of ones and represents the mean component of all elements in $\mathbb {R}^N$. Due to orthogonality, all wavelets $\boldsymbol{\psi} _{-l}$ and their translates must have zero mean. When using DDWD to generate a wavelet basis for a given dataset, one can introduce a hard constraint to enforce zero mean on $\boldsymbol{\psi} _{-l}$ (Floryan & Graham 2021); however, this hard constraint is generally not needed since the energetically minimal $\boldsymbol{\psi} _{-l}$ that DDWD finds also tends to have nearly zero mean. Nonetheless, we choose to enforce zero mean on $\boldsymbol{\psi} _{-l}$ in this work to mitigate a numerical issue that causes the wavelets in stages 13 and up to have artificially high wavenumber content. This numerical issue was found by validating DDWD against synthetic signals composed of known wavelets (see Appendix A.2 for details). Synthetic data were also used to demonstrate DDWD's ability to uncover many types of wavelets, its robustness to the variance penalty parameter $\lambda ^2$ across multiple orders of magnitude, the necessity of windowing and the validity of the modification to DDWD described in § 3.2.3.

4. Results

We apply DDWD to the Superpipe data described in § 2. Starting with the measurements made nearest to the wall ($y^+ = 350$), in §§ 4.1 and 4.2 we respectively examine the streamwise shape and degree of self-similarity of the localized structures we compute. Extending the analysis to all wall-normal positions, in § 4.3 we discuss the computed structures’ streamwise self-similarity and the similarity across different wall-normal positions. In § 4.4 we examine projections of the data onto the computed wavelet subspaces. Lastly, in § 4.5 we derive how spectral power law scalings translate to wavelet space and search for the presence of such scaling laws.

4.1. Shape of localized structures at $y^+ = 350$

Figure 6(a) shows the wavelets contained in the 18-stage DDWD basis. For comparison, we have also plotted Meyer wavelets. Each plot corresponds to a different stage of the wavelet decomposition, showing the corresponding wavelet. The plot corresponding to ‘Stage 18a’ shows the basis element for the coarsest approximation subspace, $V_{-18}$. For the first 15 stages, the range of the horizontal axis is progressively dilated by a factor of two. For ease of comparison, we have shifted the wavelets and, in some cases, reflected them about their horizontal axes (equivalent to multiplying the wavelet coefficients by $-1$). Although a stage-$l$ wavelet only has translational invariance with respect to shifts by $2^l$ (i.e. the spacing between its translates), shifting it by any integer is justifiable here since the translational invariance of the data causes the energy contained in the subspace $W_{-l}$ to remain the same no matter the shift value. We observe that the scale and stage of the DDWD wavelets are synonymous. Note that for stages 15 to 18, the wavelets (from all bases) are not spatially localized or self-similar because their scale approaches the length of the data segments.

Figure 6. Comparing the 18-stage DDWD basis at $y^+=350$ to three known wavelet bases. (a) Superimposed wavelets (only DDWD and Meyer shown for clarity). (b) Stage-8 wavelets. (c) Similarity between DDWD basis and known wavelet bases.

Figure 6(b) shows the shapes of the stage-8 DDWD wavelet and the Meyer, Fejér–Korovkin 6 (fk6), and Daubechies 2 (db2) wavelets; we chose these wavelets for comparison since they have varying length scales (which we will quantify later) even within the same stage. Figure 6(c) quantifies the similarity of these three known wavelets to the DDWD wavelet with the inner product

\begin{equation} \alpha _l^{(b_1,b_2)} = \langle \boldsymbol{\psi} _{-l}^{(b_1)}, \boldsymbol{\psi} _{-l}^{(b_2)} \rangle, \end{equation}

where $\boldsymbol{\psi} _{-l}^{(b_1)}$ is the stage-$l$ wavelet belonging to basis $b_1$ and similarly for $\boldsymbol{\psi} _{-l}^{(b_2)}$ (in figure 6c, $b_1$ is always the DDWD basis, while $b_2$ changes between the three known wavelet bases). The absolute value of the inner product is bounded between 0 and 1. The DDWD wavelets obtained from the Superpipe data are quantitatively most similar to Meyer wavelets. This similarity was also noted when wavelets were computed from data from homogeneous isotropic turbulence (Floryan & Graham 2021). Two potential reasons that the most energetic wavelets in these two datasets are similar to Meyer wavelets, as opposed to fk6 or db2, are that Meyer wavelets are smoother and have a larger length scale at any given stage. Velocity fields are smooth, so smooth basis functions should give a better representation. As for the larger length scale, that may be a consequence of the combination of smoothness and the orthogonality condition, or it may be connected to the fact that there is always a non-local aspect to incompressible flows because of the global pressure constraint.

Note that at a given stage, wavelets across different bases can appear very different in shape and width but still have an inner product close to 1 due to having similar Fourier content. In what follows, we consider shapes to be similar when $\alpha _l^{(b_1,b_2)} \gtrsim 0.95$.
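
A similarity measure of this kind can be sketched as follows. The function name is hypothetical, and maximizing the absolute inner product over all circular shifts is an assumption made here to mimic the shifting and sign reflection described in § 4.1; the source does not state how the shift is chosen.

```python
import numpy as np

def similarity(a, b):
    """Largest absolute inner product between unit-normalized a and any
    circular shift of unit-normalized b (ASSUMED alignment rule)."""
    a = a / np.linalg.norm(a)
    b = b / np.linalg.norm(b)
    # Circular cross-correlation via the FFT: entry k is sum_n a[n+k] b[n].
    xcorr = np.fft.ifft(np.fft.fft(a) * np.conj(np.fft.fft(b))).real
    return np.max(np.abs(xcorr))

# Toy wavelet-like vectors (not the wavelets from the paper).
x = np.arange(64) - 32.0
a = (1.0 - (x / 4.0) ** 2) * np.exp(-0.5 * (x / 4.0) ** 2)   # Mexican-hat shape
b = -np.roll(a, 5)                                           # shifted and reflected copy
c = x * np.exp(-0.5 * (x / 4.0) ** 2)                        # odd shape about the centre

print(similarity(a, b), similarity(a, c))
```

A shifted and sign-flipped copy of a wavelet has similarity 1 under this measure, while wavelets with different shapes score below 1; the threshold of 0.95 used in the text would then be applied to this value.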

4.2. Self-similarity of localized structures at $y^+ = 350$

We analyse the similarity of DDWD wavelets across stages, which reflects the streamwise self-similarity of the velocity signal. To do so, we must introduce the scaling operator $S$. The scaling operator is used when constructing a traditional wavelet basis, with $\boldsymbol{\psi} _{-l} = S\boldsymbol{\psi} _{-(l-1)}$ for all stages. The scaling operator defines our notion of self-similarity: we say that wavelets across a stage are exactly self-similar when $\boldsymbol{\psi} _{-l} = S\boldsymbol{\psi} _{-(l-1)}$, as is the case for traditional wavelets. This operator essentially dilates its input wavelet by a factor of two, as is evident by comparing the Meyer wavelets from one stage to the next in figure 6(a) (a precise mathematical definition of $S$ is given in Floryan & Graham 2021). In figure 7(a), the stage-$l$ DDWD wavelets $\boldsymbol{\psi} _{-l}$ are plotted with solid red curves and the dilated, previous-stage wavelets $S\boldsymbol{\psi} _{-(l-1)}$ are plotted with dashed blue curves. The wavelets are apparently strongly self-similar across adjacent stages.

Figure 7. (a) 18-stage DDWD basis at $y^+=350$; solid red curves are $\boldsymbol{\psi} _{-l}$ (or $\boldsymbol{\phi} _{-18}$ for stage $18a$), and dashed blue curves are $S \boldsymbol{\psi} _{-(l-1)}$ (or $S \boldsymbol{\phi} _{-17}$ for stage $18a$), where $S$ essentially dilates its input wavelet by a factor of 2. (b) Self-similarity across adjacent stages (i.e. inner product between the normal and dilated wavelets), where the grey region covers the range of values encountered across 10 DDWD initializations. (c) Self-similarity across stages 1 through 15, where the dashed triangle indicates the region of high self-similarity ($\alpha _{l_a,l_b} \gtrsim 0.95$).

To quantify the degree of self-similarity, we compute the inner product

\begin{equation} \alpha _{l_a,l_b} = \langle \boldsymbol{\psi} _{-l_a}, S^{l_a-l_b} \boldsymbol{\psi} _{-l_b} \rangle, \end{equation}

analogous to (4.1). Figure 7(b) shows $\alpha _{l_a,l_b}$ for $l_a = l$ and $l_b = l-1$, which is the inner product between the solid and dashed wavelets appearing in each subplot in figure 7(a). A high degree of self-similarity ($\alpha _{l,l-1} \gtrsim 0.95$) across adjacent stages occurs in stages 2 through 11 and then in stages 14 and 15. The drop in self-similarity between stages 11 and 14 is physical – spatially localized structures are no longer self-similar at these stages. However, since the wavelets in the last 2–3 stages (stages 16 and beyond here) of any basis span the whole spatial domain, we disregard them hereafter. Finally, we note (but do not show here) that the drop in self-similarity at stage 11 is robust to decreasing the total number of stages from $p=18$ to $p=14$.

Figure 7(c) shows $\alpha _{l_a,l_b}$ for $l_a > l_b, l_a \in [2,15]$, quantifying the degree of self-similarity across multiple stages. The results in figure 7(b) appear along the main diagonal in figure 7(c). One can see that high self-similarity of $\boldsymbol{\psi} _{-l}$ occurs in stages 2 through 11 across all stages, not just adjacent stages.

Altogether, DDWD reveals that coherent velocity structures (induced by eddies) are highly self-similar in stages 2 through 11. Defining a length scale $\lambda _x$ for a wavelet as the width of the region centred about the origin that contains $90\,\%$ of its energy, this range of stages corresponds to $\lambda _x^+ = 20$ through $\lambda _x/R = 1.26$. (Note that our definition of $\lambda _x$ is justified in Appendix A.3 and is unrelated to the Fourier wavenumber $k$.) Not only does DDWD give direct evidence of streamwise self-similarity of localized structures in wall-bounded turbulence, but it also reveals a surprisingly large range of self-similarity compared with many statistical and structural approaches. Section 5 includes a more detailed discussion comparing the results obtained using DDWD to those in the literature.
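The 90 % energy definition of $\lambda _x$ can be illustrated with a minimal sketch. It assumes the wavelet is stored as a non-periodic array centred at its middle sample; the function name `energy_width` and the parameters `fraction` and `dx` are hypothetical and are not taken from the DDWD implementation.

```python
import numpy as np

def energy_width(psi, fraction=0.9, dx=1.0):
    """Width of the smallest window, centred on the middle sample,
    that contains `fraction` of the energy of `psi`.

    Assumption: the wavelet is centred at index n // 2 and is not
    treated as periodic.
    """
    psi = np.asarray(psi, dtype=float)
    n = psi.size
    centre = n // 2
    energy = psi**2
    total = energy.sum()
    # Grow a symmetric window until it holds the requested energy fraction.
    for half in range(n // 2 + 1):
        lo = max(centre - half, 0)
        hi = min(centre + half, n - 1)
        if energy[lo:hi + 1].sum() >= fraction * total:
            return (hi - lo + 1) * dx
    return n * dx
```

For a wavelet sampled with spacing `dx`, `energy_width(psi, 0.9, dx)` would return a length that can be normalized by the viscous length or by $R$ in the same way as $\lambda _x^+$ and $\lambda _x/R$ above.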

4.3. Self-similarity of localized structures in wall-normal direction

Figure 8(a) shows DDWD bases for three wall-normal positions (one for each region defined in figure 1): $y^+=350$, $y/R=0.10$ and $y/R=0.59$. The wavelets are shown as functions of time scaled by the eddy turnover time (using the mean streamwise velocity at $y^+=350$). We plot in time instead of space to avoid the slight dilation of the wavelets that would occur from using Taylor's hypothesis. For all wall-normal positions, stage is synonymous with scale for the DDWD wavelets. For each wall-normal position, figure 8(b) quantifies the self-similarity of the wavelets across adjacent stages. Our observation of strong streamwise self-similarity at $y^+ = 350$ extends to the other wall-normal positions in the range of $l \in [2, 11]$. Figure 8(c) quantifies the self-similarity of the DDWD wavelets across wall-normal positions by plotting the inner product in (4.1) computed between the wavelets at $y^+ = 350$ and at the other wall-normal positions. A high degree of wall-normal self-similarity is present for stages $l \in [1, 11]$.

Figure 8. (a) DDWD bases at $y^+=350$, $y/R=0.10$, and $y/R=0.59$. (b) Similarity across adjacent stages for each basis. (c) Similarity across bases, where $b_1$ is for the signal closest to the wall and $b_2$ is for the signal either in region II or III (regions defined in figure 1).

Finally, we quantify the simultaneous self-similarity of the DDWD wavelets in the streamwise and wall-normal directions. To do so, we first choose a reference wavelet at stage $l_{ref} = 8$ and wall-normal position $y_{ref}^+=350$. Then, we calculate the inner product between this reference wavelet and all spatially localized wavelets ($l \leq 15$) at all wall-normal positions. We denote this inner product as $\alpha _{ref}(l,y)$. Note that either the reference wavelet or the wavelet at $(l,y)$ is scaled up to stage $\max (l,l_{ref})$ using the scaling operator $S$ before computing $\alpha _{ref}(l,y)$ (which is done in time, not space, to avoid slight dilations resulting from Taylor's hypothesis). Figure 9 plots $\alpha _{ref}(l,y)$. In § 4.2, the DDWD wavelets at $y^+=350$ exhibited strong self-similarity for $l \in [2,11]$; however, based on the criterion $\alpha _{ref}\gtrsim 0.95$, figure 9 suggests a range of $l \in [3,10]$ for all wall-normal positions. The slight discrepancy in the range of $l$ is caused by the slight difference between $\alpha _{l_a,l_b}$ and $\alpha _{ref}(l,y)$; we will use the latter range of $l \in [3,10]$ henceforth. Figure 9 suggests that DDWD wavelets, which form spatially localized, energy-containing motions, are self-similar between streamwise length scales of $\lambda _x^+ = 40$ to $\lambda _x/R = 1$ and between wall-normal length scales of $y^+=350$ to $y/R = 1$. Here, the self-similarity of the data has been assessed by the self-similarity of the DDWD basis. In the next section, a (perhaps) more direct method is explored where we assess the self-similarity of the data projected onto DDWD subspaces of different scales.

Figure 9. Self-similarity between the reference wavelet at $l_{ref}=8$ and $y_{ref}^+=350$ and all other wavelets. Dashed horizontal lines separate the wall-normal regions defined in figure 1. Solid white lines are isolines of the streamwise length scale $\lambda _x$ of the wavelets.

4.4. Self-similarity of wavelet projections

The stage-$l$ wavelet projection $\boldsymbol{\mathsf{P}}_l \boldsymbol{z}_i$ denotes the projection of $\boldsymbol{z}_i \in \mathbb {R}^N$ onto the detail subspace $W_{-l}$ and is given by

\begin{equation}
\boldsymbol{\mathsf{P}}_l \boldsymbol{z}_i = \sum_{m=0}^{N/2^l-1} \left\langle \boldsymbol{\mathsf{R}}^{2^l m}(\boldsymbol{\psi}_{-l}), \boldsymbol{z}_i \right\rangle \boldsymbol{\mathsf{R}}^{2^l m}(\boldsymbol{\psi}_{-l}).
\end{equation}

Henceforth, $\boldsymbol{\mathsf{P}}_l \boldsymbol{u}$ denotes the stage-$l$ wavelet projection of the velocity signal $\boldsymbol{u}$ (or rather its segments) onto the DDWD subspaces.
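As an illustration of the projection formula, the following minimal sketch accumulates the sum over $m$ directly. It assumes that $\boldsymbol{\mathsf{R}}$ acts as a circular shift by one sample (implemented here with `np.roll`) and that the shifted copies of $\boldsymbol{\psi}_{-l}$ are orthonormal; the function name `wavelet_projection` is hypothetical.

```python
import numpy as np

def wavelet_projection(z, psi, l):
    """Project z onto the span of the 2**l-strided circular shifts of psi.

    Assumptions: R is a circular shift by one sample, len(z) is
    divisible by 2**l, and the shifted wavelets are orthonormal.
    """
    z = np.asarray(z, dtype=float)
    psi = np.asarray(psi, dtype=float)
    n = z.size
    step = 2**l
    proj = np.zeros(n)
    for m in range(n // step):
        shifted = np.roll(psi, step * m)        # R^{2^l m} psi_{-l}
        proj += np.dot(shifted, z) * shifted    # <R^{2^l m} psi, z> R^{2^l m} psi
    return proj
```

With a stage-1 Haar wavelet as a stand-in for a DDWD wavelet, e.g. `psi = np.array([1, -1, 0, 0, 0, 0, 0, 0]) / np.sqrt(2)`, applying the function twice returns the same vector as applying it once, as expected of an orthogonal projection.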

Wavelet projections, just like wavelets themselves, are multiscale. Larger-scale wavelet projections occupy the smaller-$k$ range, and vice versa. For $y^+=350$, figure 10 shows the PSDs of $\boldsymbol{u}$, $\boldsymbol{\mathsf{P}}_l \boldsymbol{u}$, and the wavelets themselves, $\boldsymbol{\psi} _{-l}$. As an aside, figure 10 provides another potential reason why DDWD uncovers Meyer-like wavelets: the lower spatial localization (and thus higher spectral localization) of Meyer-like wavelets allows a narrower range of wavenumbers to be removed from the broadband velocity signal during the energy optimization.

Figure 10. PSDs of $\boldsymbol{u}$, its stage-$l$ DDWD wavelet projections $\boldsymbol{\mathsf{P}}_l \boldsymbol{u}$, and the DDWD wavelets $\boldsymbol{\psi} _{-l}$ at $y^+ = 350$. Note that the PSDs of the wavelets are manually shifted to be at the same height.

We assess the self-similarity of the wavelet projections in figure 10 by comparing their PSDs. Figure 11(a) shows the PSDs when scaled in $k$ by $2^l$ in order to account for the dyadic progression of scales; they are also normalized to have unit norm. The self-similarity of $\boldsymbol{\mathsf{P}}_l \boldsymbol{u}$ is quantified by $\gamma _{l_a,l_b}$, which is the inner product between the scaled and normalized PSDs of scale $l_a$ and $l_b$; figure 11(b) plots this inner product. Just as with $\alpha$, we take $\gamma \gtrsim 0.95$ to indicate a high degree of self-similarity. Unlike DDWD wavelets, the projections are not highly self-similar until a much later stage of $l=6$.
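One possible way to evaluate an inner product of this kind is sketched below. The sketch assumes that the PSD is estimated from a single real FFT, that the rescaling of $k$ by $2^l$ is applied as a shift of $l$ on a $\log_2 k$ axis, and that both PSDs are interpolated onto a common grid before normalization. These discretization choices, and the names `scaled_psd` and `gamma`, are assumptions for illustration and may differ from the procedure used to produce figure 11.

```python
import numpy as np

def scaled_psd(signal, l, grid):
    """PSD of `signal`, with k rescaled by 2**l, sampled on `grid`
    (values of log2(k * 2**l)) and normalized to unit Euclidean norm."""
    signal = np.asarray(signal, dtype=float)
    psd = np.abs(np.fft.rfft(signal))**2
    k = np.arange(1, psd.size)              # skip the mean (k = 0)
    log_k = np.log2(k) + l                  # log2 of the rescaled wavenumber
    vals = np.interp(grid, log_k, psd[1:], left=0.0, right=0.0)
    norm = np.linalg.norm(vals)
    return vals / norm if norm > 0 else vals

def gamma(sig_a, l_a, sig_b, l_b, grid):
    """Inner product between two scaled, normalized PSDs."""
    return float(np.dot(scaled_psd(sig_a, l_a, grid),
                        scaled_psd(sig_b, l_b, grid)))
```

Because both PSDs are non-negative and have unit norm, the value returned by `gamma` lies between 0 and 1, with 1 obtained when the two rescaled PSDs coincide on the grid.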

Figure 11. (a) Scaled and normalized PSDs of the DDWD wavelet projections in figure 10 for $l \in [3,13]$. (b) Inner product between the curves in (a), where the dashed triangle indicates the region of high self-similarity ($\gamma _{l_a,l_b} \gtrsim 0.95$). Highly self-similar projections of scales $l \in [6,11]$ in (a) are coloured red based on the triangular region in (b).

Similar to the approach used in § 4.3, we calculate the self-similarity of wavelet projections simultaneously in stage and wall-normal position by choosing a reference projection. We choose $l_{ref} = 8$ at the wall-normal position $y_{ref}^+=350$ again, and denote this inner product as $\gamma _{ref}(l,y)$; note that this inner product is done in frequency, not wavenumber, to avoid slight dilations resulting from Taylor's hypothesis. Figure 12 plots $\gamma _{ref}(l,y)$ for the DDWD, Meyer and db2 bases. DDWD wavelet projections are self-similar in $y$ from $y^+=350$ to $y/R = 1$ and self-similar in $x$ (across stages) up until wavelets of wavelength $\lambda _x/R = 1$, exactly like DDWD wavelets (see § 4.3). The only difference is the lower bound of self-similarity in $x$: DDWD wavelets are self-similar starting from $\lambda _x^+ = 40$, while the projections are self-similar starting from