What is audit and feedback? Why is it needed?

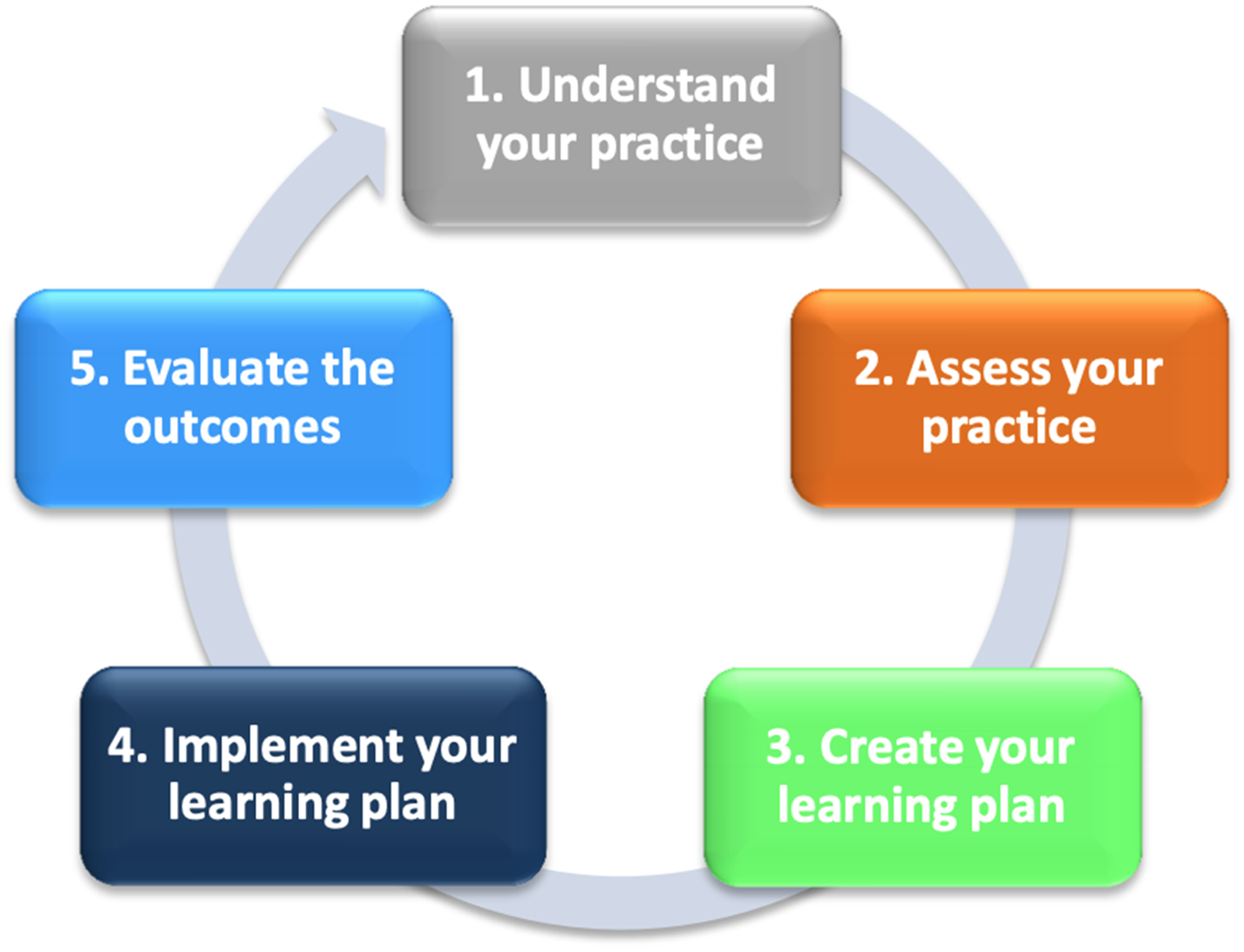

Audit and feedback is a practice improvement strategy. By summarizing an individual's clinical performance over a specific time period, it allows for the identification of areas for improvement. The rationale is that, by demonstrating a gap between a clinician's actual and desired practice for a specified measure, clinicians will be made aware of an unperceived learning need and will be motivated to make a change in their practice.Reference Meyer and Singh1 While there are other commonly used terms for audit and feedback, it is our experience that some of these terms (e.g., report cards) can elicit negative reactions from clinicians. Audit and feedback may be given in various formats.Reference Jamtvedt, Young, Kristoffersen, O'Brien and Oxman2 Physicians may be provided with information about their performance relative to their peers, targets and/or benchmarks. Audit and feedback may include an action plan that can lead to improvement.Reference Ivers, Sales and Colquhoun3 Some may wonder why audit and feedback is necessary; physicians are highly trained, motivated individuals seeking to provide the best care to their patients. Yet without receiving timely and specific practice feedback, it is difficult for physicians to identify unperceived learning needs or practice gaps. Furthermore, the Competency by Design Framework4 presented by the Royal College encourages physicians to understand and assess their practice, create and implement a learning plan and evaluate the outcomes. When done properly, audit and feedback is both a professional development and quality improvement (QI) strategy. However, this strategy has to be designed and delivered in an effective manner and in line with the priorities of physicians and patients (Box 1).Reference MacLean, Kerr and Qaseem5

Box 1. Key elements of audit and feedback.

1. Defining performance metrics

a. Evidence-informed

b. Align data with goals and priorities of department/physiciansReference Brehaut, Colquhoun and Eva7

c. Co-designed /selected by physicians/providers

d. When possible provide individual (rather than aggregate) dataReference Brehaut, Colquhoun and Eva7

e. Metric should be within individual physician control

2. Data report

a. Include peer comparator or explicit goal/targetReference Brehaut, Colquhoun and Eva7

b. Provide timely feedback over multiple instancesReference Brehaut, Colquhoun and Eva7

c. Minimize extraneous cognitive loadReference Brehaut, Colquhoun and Eva7

3. Facilitation

a. Facilitation (group or individual) can help

i. Address reactions to data and feedback processReference Brehaut, Colquhoun and Eva7

ii. Identify barriers and enablers to practice change

iii. Guide physicians in creating action plans

iv. Ensure the data are delivered both written and verballyReference Brehaut, Colquhoun and Eva7

v. Facilitation by a respected colleague not serving in a power position

4. Remeasurement to assess whether a change has occurred

At the next departmental meeting, Dr. Smith presented the idea of providing individual physicians with their practice data. Overall, there seemed to be a lot of interest around the concept, although a few of her colleagues questioned the utility of getting a “report card.” Have other EDs done this and what is the evidence?

What is the evidence to support audit and feedback?

While several individual physician groups and healthcare regulatory bodies have embraced the use of audit and feedback for QI purposes, the effectiveness of audit and feedback can be variable. In the most recent Cochrane review, Ivers et al. analyzed 140 studies and found that audit and feedback leads to a median 4.3% absolute improvement in practice (interquartile range, 0.5% to 16%).Reference Ivers, Jamtvedt and Flottorp6 However, the effect size was determined by the type of clinical behavior the audit and feedback aimed to address. Audit and feedback was more effective if baseline performance was low and feedback was: (1) from a supervisor or colleague, (2) provided more than once, (3) delivered in both written and verbal format, or and (4) included specific targets and an action plan.Reference Ivers, Jamtvedt and Flottorp6

This suggests that audit and feedback is not a homogenous intervention, and the nature of the feedback and method of delivery influences its effectiveness. The best practices are further defined by Brehaut et al.,Reference Brehaut, Colquhoun and Eva7 who proposed 15 suggestions for optimizing feedback. This list includes recommendations on how to most effectively deliver practice feedback with respect to nature of the desired action, data available for feedback, how to display the data and deliver the feedback.Reference Brehaut, Colquhoun and Eva7 How these concepts apply to the emergency medicine (EM) context will be explored in more detail throughout this study.

After reviewing the literature, Dr. Smith decided to use audit and feedback to improve the quality of care provided by the group. With the assistance of the hospital's information technology department, Dr. Smith was able to obtain access to several physician practice metrics, including number of patients seen per hour, imaging ordering rates, etc. How will she determine which data elements to use?

What are common approaches to identifying audit and feedback indicators?

Identifying which clinical indicators to provide physicians is a difficult yet critical step. A common starting point for selecting audit and feedback metrics for the ED is to use published clinical practice guidelines or achievable benchmarks of care (ABCs).Reference Kiefe, Allison, Williams, Person, Weaver and Weissman8,Reference Reyes, Paulus and Hronek9 Many audit and feedback interventions have used these guidelines and demonstrated clinical improvement.Reference Kiefe, Allison, Williams, Person, Weaver and Weissman8 Another potential source of clinical indicators are the Choosing Wisely recommendationsReference Reyes, Paulus and Hronek9 supported in a recent commentary by Ivers and Desveaux, outlining why audit and feedback is a fundamental element in reducing low-value care.Reference Ivers and Desveaux10

An additional source of audit and feedback indicators may include making use of mandatory ED performance metrics. Although these may be viewed as “easy wins,” there are important limitations to this approach. Metrics that are of interest to regulatory bodies may reflect departmental rather than individual physicians’ performance.Reference Brehaut, Colquhoun and Eva7 Examples of provincial performance metrics include Physician Initial Assessment (PIA) times and Ambulance Offload Times. An alternative would be to use these metrics to derive surrogate measures of individual clinician practice (i.e., report ED PIA to disposition times for clinicians).

Often, audit and feedback indicators with the greatest potential for improvement come from examination of local issues that are known to have significant variation in practice. One can also draw inspiration from variations identified in other similar departments. ED leaders should engage with broader EM communities to learn from those with audit and feedback expertise.

Co-designing metrics with physicians

Although departments should use the above processes to derive some metrics to be used locally, we also advocate for a consensus process for the feedback on the final metrics to be used in the group. This can be done with a simple survey or a more robust Delphi process.Reference Dalkey11 This constitutes an important element of change management and provides each practitioner with the opportunity to opine on each metric. This collaborative approach is critical for engagement from frontline clinicians.

Physician groups looking to implement audit and feedback mechanisms could also establish objective evaluation criteria for current and newly proposed metrics according to the American College of Physicians criteria, ensuring only valuable metrics continue to be included (online Appendix A). Lastly, it is important to remember that improvement is a balance of the user's perception of value, capacity, and workload associated with the improvement task.Reference Hayes and Goldmann12

Dr. Smith decided to postpone dissemination of physician performance metrics, and instead formed a departmental working group to help define their group's priorities. Together, they achieved consensus on areas of clinical care to focus on and began to define the data elements that would help them monitor those areas of care.

Aligning data with goals and priorities

In the development of metrics, ED groups should consider the inclusion of measures that reflect current departmental goals.Reference Brehaut, Colquhoun and Eva7 This may also include the modification of metrics to more closely align with these priorities and allows groups to quantify their individual and collective practice in areas that enable improvement. These specific metrics can often be found in hospital QI plans, or governing documents that set the improvement agenda for the organization.

Using available data, the working group decided on three areas of care to improve which each had a large variation in practice: patient flow, sepsis management, and computed tomography use. With these specific issues in mind, the working group developed the metrics for each area. The committee chose to review and report to individual physicians their data on: source of sepsis and time to initial lactate and antibiotics (see online Appendix A).

Development of data reports

The physician reports are a key element of audit and feedback, and thoughtful consideration should be given to the design and content. Although aggregate group data may be useful for system level measures, physician level feedback is generally more meaningful and is more likely to lead to change. Providing comparator data is beneficial as it helps support the desired behavior change.Reference Brehaut, Colquhoun and Eva7 For metrics where an accepted ABC exists, providing this as a comparator can be more effective than peer data according to one study. In one study, including a behavior change message (i.e., message that supports desired action) resulted in fewer antibiotic prescriptions compared with control.Reference Elouafkaoui, Young and Newlands13

The optimal frequency of distributing reports is unknown and may depend on the data being provided. Providing feedback in a timely manner is important to ensure the data is relevant and actionable.Reference Brehaut, Colquhoun and Eva7 Exceedingly frequent data delivery may lead to data fatigue or dramatic practice changes based on a small number of cases.Reference Brehaut, Colquhoun and Eva7 By contrast, infrequent feedback may be discounted as no longer relevant due to a perceived change in personal practice that may or may not have occurred. Ideally, physicians would individually track their performance over time and see if their action plans resulted in noticeable improvements. Data reports can include a trend-over-time graphic to allow for comparison to prior performance.

When creating the reports, it is important to reduce extraneous cognitive load for feedback recipients.Reference Brehaut, Colquhoun and Eva7 This may mean providing fewer metrics or only data for the most relevant indicators. One should also simplify the data presented. If the data require significant effort to interpret, it may be misunderstood or entirely ignored. Reports should be presented both verbally and in writing, and figures should be consistent with the message being conveyed (e.g., poor performance at bottom of a graph v. good performance at top of a graph).

Ensuring reports are easily accessible and actively disseminated will increase engagement among physicians. Passive report dissemination (e.g., physicians needing to retrieve the report from an online dashboard) may be a barrier to physicians. Without context, physicians may question the validity of the data, thus acknowledging the limitations of the data at the outset is critical.

Finally, a stepwise approach in establishing the scope and progressive rollout of performance metrics is important. Careful consideration must be given to the initial set of measures that are proposed within a group so as to ensure a balanced approach. As an example, it could be dangerous to implement an initial set of measures which included time to consult, advanced imaging use rates, number of patients seen per hour, and average billings per patient. This suite would incentivize the rapid cycling of patients with fewer investigations and more procedures. This “directionality” of initial performance metrics must include balancing measures from the outset such as percent consults admitted and 72 hours ED return and admit rates.14

As the working group began to finalize a mock-up of a performance report card, Dr. Smith wondered about the logistics of presenting these metrics: Do I send them to the group by email or do I meet with each individual?

How to deliver and facilitate audit and feedback?

To maximize the impact of audit and feedback on individual clinicians, we must also consider emerging trends in medical education and professional development. Recently, the Royal College of Physicians and Surgeons of Canada has made audit and feedback an integral part of their Competency By Design Framework, emphasizing the importance of Physician Practice Improvement (Figure 1).4 To do this successfully, we need to provide clinicians with their practice data as well as provide them with the tools to implement action plans. This process requires more than just passive dissemination of physician data.

Figure 1. Physician practice improvement.

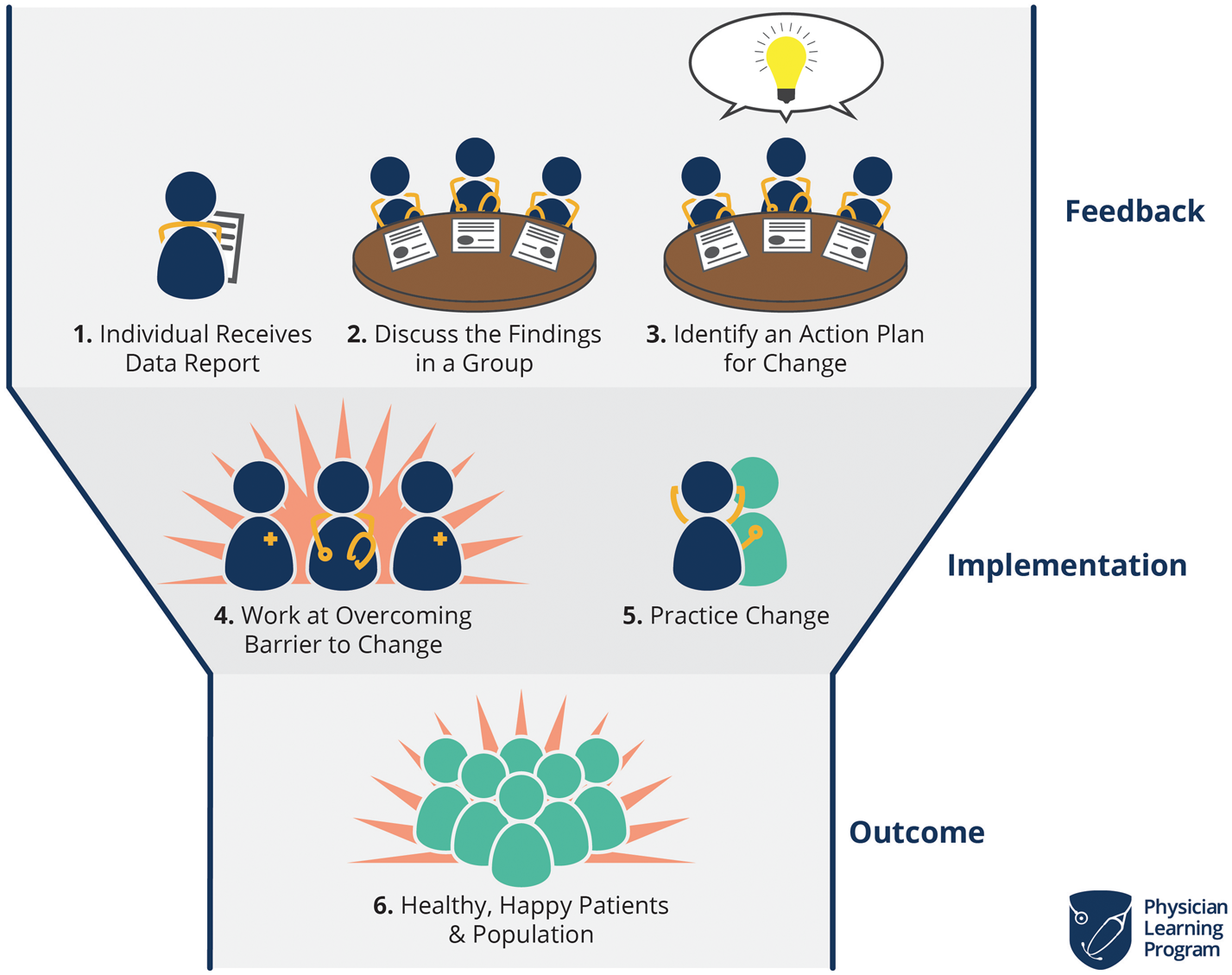

Group facilitated feedback sessions have been used in audit and feedback sessions to leverage social learning theory and promote discussion among cliniciansReference Cooke, Duncan, Rivera, Dowling, Symonds and Armson15 (Figure 2). In these sessions, participants go through a predictable pattern of reacting to and understanding the data, contextualizing, reflecting, and planning for change.Reference Cooke, Duncan, Rivera, Dowling, Symonds and Armson16 This process helps physicians convert their practice data into meaningful information that can be used for practice improvement. We have also seen a growing call for medical coaching in the mainstream media, with multiple articles published on the topic.Reference Watling, Driessen, van der Vleuten, Vanstone and Lingard17–Reference Watling and LaDonna19 This high intensity activity will require formal assessment to establish its role in physician practice improvement.

Figure 2. Calgary audit and feedback framework.

Additional considerations: privacy and controversies related to audit and feedback

A constant tension exists as to whether audit and feedback should be used as a performance management tool or as a self-reflective strategy. Although arguments can be made on both fronts, the current data available are unable to provide a holistic view of the “quality of care that one physician provides,” at best, we can provide snapshots of a physician practice with respect to the metrics provided. It is important to have real discussions about the permissibility of the data and the extent to which can be used within a group. Clear evidence of high-risk practice may require early intervention that should be discussed at the outset. As such, using audit and feedback for performance assessment, pay for performance, or employment decisions should be done with caution until there is evidence around which strategy is most effective. Yet, it is also easy to imagine the use of audit and feedback to establish minimum practice metrics or envision their use in a punitive sense. This is often dubbed “data for accountability.” Within this publication, we openly advocate for use of this audit and feedback as a self-reflective professional development tool.

As with any data, those individuals collecting the data are responsible for its privacy. Although there is often an interest in providing unblinded data reports (whereby physicians are openly compared with each other); this strategy has not been studied and could have detrimental impact on morale and gaming of metrics to improve ones’ rank within the department. How the de-identified physician data are disseminated should be discussed within the group, which includes specifics on data storage, how the data will be used, and which individuals within the department should have access to identifiable datasets. Furthermore, groups should explicitly state their policy toward dissemination of de-identified data outside of the clinical group (i.e., hospital leadership) to ensure the data are used for its intended purpose.

As a result of physician inclusion, a model for audit and feedback is developed. Based on this, Dr. Smith has designed a feedback plan that includes scheduling small group discussions with peers. She also began to develop coaching modules for her physicians. Within the first 3 months of implementing the audit and feedback program, she sees modest but encouraging results within the group. She will use feedback to further improve the process.

Dr. Smith is the Chief of the Emergency Department (ED) at the Royal Oak Hospital. This medium-sized community center is affiliated with the local medical school. Recently, the hospital has adopted the use of electronic health records and as a result, Dr. Smith now has potential access to large amounts of ED data. Having heard other ED leaders talking about “physician report cards,” Dr. Smith wonders if she should also provide data-driven feedback to her group.

What is audit and feedback? Why is it needed?

Audit and feedback is a practice improvement strategy. By summarizing an individual's clinical performance over a specific time period, it allows for the identification of areas for improvement. The rationale is that, by demonstrating a gap between a clinician's actual and desired practice for a specified measure, clinicians will be made aware of an unperceived learning need and will be motivated to make a change in their practice.Reference Meyer and Singh1 While there are other commonly used terms for audit and feedback, it is our experience that some of these terms (e.g., report cards) can elicit negative reactions from clinicians. Audit and feedback may be given in various formats.Reference Jamtvedt, Young, Kristoffersen, O'Brien and Oxman2 Physicians may be provided with information about their performance relative to their peers, targets and/or benchmarks. Audit and feedback may include an action plan that can lead to improvement.Reference Ivers, Sales and Colquhoun3 Some may wonder why audit and feedback is necessary; physicians are highly trained, motivated individuals seeking to provide the best care to their patients. Yet without receiving timely and specific practice feedback, it is difficult for physicians to identify unperceived learning needs or practice gaps. Furthermore, the Competency by Design Framework4 presented by the Royal College encourages physicians to understand and assess their practice, create and implement a learning plan and evaluate the outcomes. When done properly, audit and feedback is both a professional development and quality improvement (QI) strategy. However, this strategy has to be designed and delivered in an effective manner and in line with the priorities of physicians and patients (Box 1).Reference MacLean, Kerr and Qaseem5

Box 1. Key elements of audit and feedback.

1. Defining performance metrics

a. Evidence-informed

b. Align data with goals and priorities of department/physiciansReference Brehaut, Colquhoun and Eva7

c. Co-designed/selected by physicians/providers

d. When possible provide individual (rather than aggregate) dataReference Brehaut, Colquhoun and Eva7

e. Metric should be within individual physician control

2. Data report

a. Include peer comparator or explicit goal/targetReference Brehaut, Colquhoun and Eva7

b. Provide timely feedback over multiple instancesReference Brehaut, Colquhoun and Eva7

c. Minimize extraneous cognitive loadReference Brehaut, Colquhoun and Eva7

3. Facilitation

a. Facilitation (group or individual) can help

i. Address reactions to data and feedback processReference Brehaut, Colquhoun and Eva7

ii. Identify barriers and enablers to practice change

iii. Guide physicians in creating action plans

iv. Ensure the data are delivered both written and verballyReference Brehaut, Colquhoun and Eva7

v. Facilitation by a respected colleague not serving in a power position

4. Remeasurement to assess whether a change has occurred

At the next departmental meeting, Dr. Smith presented the idea of providing individual physicians with their practice data. Overall, there seemed to be a lot of interest around the concept, although a few of her colleagues questioned the utility of getting a “report card.” Have other EDs done this and what is the evidence?

What is the evidence to support audit and feedback?

While several individual physician groups and healthcare regulatory bodies have embraced the use of audit and feedback for QI purposes, the effectiveness of audit and feedback can be variable. In the most recent Cochrane review, Ivers et al. analyzed 140 studies and found that audit and feedback leads to a median 4.3% absolute improvement in practice (interquartile range, 0.5% to 16%).Reference Ivers, Jamtvedt and Flottorp6 However, the effect size was determined by the type of clinical behavior the audit and feedback aimed to address. Audit and feedback was more effective if baseline performance was low and the feedback: (1) came from a supervisor or colleague, (2) was provided more than once, (3) was delivered in both written and verbal format, and (4) included specific targets and an action plan.Reference Ivers, Jamtvedt and Flottorp6

This suggests that audit and feedback is not a homogeneous intervention, and that the nature of the feedback and the method of delivery influence its effectiveness. The best practices are further defined by Brehaut et al.,Reference Brehaut, Colquhoun and Eva7 who proposed 15 suggestions for optimizing feedback. This list includes recommendations on how to most effectively deliver practice feedback with respect to the nature of the desired action, the data available for feedback, how to display the data, and how to deliver the feedback.Reference Brehaut, Colquhoun and Eva7 How these concepts apply to the emergency medicine (EM) context will be explored in more detail throughout this study.

After reviewing the literature, Dr. Smith decided to use audit and feedback to improve the quality of care provided by the group. With the assistance of the hospital's information technology department, Dr. Smith was able to obtain access to several physician practice metrics, including number of patients seen per hour, imaging ordering rates, etc. How will she determine which data elements to use?

What are common approaches to identifying audit and feedback indicators?

Identifying which clinical indicators to provide physicians is a difficult yet critical step. A common starting point for selecting audit and feedback metrics for the ED is to use published clinical practice guidelines or achievable benchmarks of care (ABCs).Reference Kiefe, Allison, Williams, Person, Weaver and Weissman8,Reference Reyes, Paulus and Hronek9 Many audit and feedback interventions have used these guidelines and demonstrated clinical improvement.Reference Kiefe, Allison, Williams, Person, Weaver and Weissman8 Another potential source of clinical indicators is the Choosing Wisely recommendations,Reference Reyes, Paulus and Hronek9 an approach supported in a recent commentary by Ivers and Desveaux outlining why audit and feedback is a fundamental element in reducing low-value care.Reference Ivers and Desveaux10

An additional source of audit and feedback indicators is mandatory ED performance metrics. Although these may be viewed as “easy wins,” there are important limitations to this approach. Metrics that are of interest to regulatory bodies may reflect departmental rather than individual physicians’ performance.Reference Brehaut, Colquhoun and Eva7 Examples of provincial performance metrics include Physician Initial Assessment (PIA) times and Ambulance Offload Times. An alternative would be to use these metrics to derive surrogate measures of individual clinician practice (e.g., reporting ED PIA to disposition times for clinicians).
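As a minimal sketch of how such a surrogate measure might be derived, the example below computes a median PIA-to-disposition time per physician. The record layout, the physician identifiers, and the timestamps are hypothetical and chosen only for illustration; a real ED information system would have its own schema.

```python
from collections import defaultdict
from datetime import datetime
from statistics import median

FMT = "%Y-%m-%d %H:%M"

# Hypothetical visit records: (physician_id, PIA time, disposition time).
VISITS = [
    ("MD-A", "2020-01-06 08:15", "2020-01-06 10:45"),
    ("MD-A", "2020-01-06 09:00", "2020-01-06 12:00"),
    ("MD-B", "2020-01-06 08:30", "2020-01-06 09:30"),
    ("MD-B", "2020-01-06 11:00", "2020-01-06 13:00"),
    ("MD-B", "2020-01-06 14:00", "2020-01-06 15:30"),
]


def pia_to_disposition_minutes(visits):
    """Return {physician_id: median minutes from PIA to disposition}."""
    per_physician = defaultdict(list)
    for physician_id, pia, disposition in visits:
        delta = datetime.strptime(disposition, FMT) - datetime.strptime(pia, FMT)
        per_physician[physician_id].append(delta.total_seconds() / 60)
    return {md: median(times) for md, times in per_physician.items()}


medians = pia_to_disposition_minutes(VISITS)
print(medians)
```

The median is used here rather than the mean because a few very long stays can dominate an average; that choice is an assumption of this sketch, not a recommendation from the cited literature.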

Often, audit and feedback indicators with the greatest potential for improvement come from examination of local issues that are known to have significant variation in practice. One can also draw inspiration from variations identified in other similar departments. ED leaders should engage with broader EM communities to learn from those with audit and feedback expertise.

Co-designing metrics with physicians

Although departments should use the above processes to derive some metrics to be used locally, we also advocate for a consensus process to gather feedback on the final metrics to be used in the group. This can be done with a simple survey or a more robust Delphi process.Reference Dalkey11 This constitutes an important element of change management and provides each practitioner with the opportunity to opine on each metric. This collaborative approach is critical for engagement from frontline clinicians.
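For groups that opt for a simple survey, the tally could look something like the sketch below. It assumes a 1 to 5 rating scale and treats a metric as accepted when at least 70% of respondents rate it 4 or 5; both the scale and the threshold are illustrative assumptions that each group would set for itself, and the metric names and ratings are made up.

```python
def consensus(ratings_by_metric, agree_at=4, threshold=0.70):
    """Return {metric: (share of ratings >= agree_at, accepted?)}."""
    results = {}
    for metric, ratings in ratings_by_metric.items():
        share = sum(r >= agree_at for r in ratings) / len(ratings)
        results[metric] = (round(share, 2), share >= threshold)
    return results


# Hypothetical survey responses from ten physicians (1 = low value, 5 = high value).
RATINGS = {
    "time_to_antibiotics": [5, 4, 4, 5, 3, 4, 5, 4, 4, 5],
    "patients_per_hour": [2, 3, 4, 2, 5, 3, 1, 4, 3, 2],
}

results = consensus(RATINGS)
for metric, (share, accepted) in results.items():
    print(f"{metric}: {share:.0%} agreement, accepted={accepted}")
```

In a Delphi process, metrics that fall short of the threshold would typically be returned to the group with the summarized ratings for another round rather than dropped outright.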

Physician groups looking to implement audit and feedback mechanisms could also establish objective evaluation criteria for current and newly proposed metrics according to the American College of Physicians criteria, ensuring only valuable metrics continue to be included (online Appendix A). Lastly, it is important to remember that improvement is a balance of the user's perception of value, capacity, and workload associated with the improvement task.Reference Hayes and Goldmann12

Dr. Smith decided to postpone dissemination of physician performance metrics, and instead formed a departmental working group to help define their group's priorities. Together, they achieved consensus on areas of clinical care to focus on and began to define the data elements that would help them monitor those areas of care.

Aligning data with goals and priorities

In the development of metrics, ED groups should consider the inclusion of measures that reflect current departmental goals.Reference Brehaut, Colquhoun and Eva7 This may also include the modification of metrics to more closely align with these priorities, which allows groups to quantify their individual and collective practice in areas that enable improvement. These specific metrics can often be found in hospital QI plans, or governing documents that set the improvement agenda for the organization.

Using available data, the working group decided on three areas of care to improve, each of which had a large variation in practice: patient flow, sepsis management, and computed tomography use. With these specific issues in mind, the working group developed the metrics for each area. The committee chose to review and report to individual physicians their data on source of sepsis and time to initial lactate and antibiotics (see online Appendix A).

Development of data reports

The physician reports are a key element of audit and feedback, and thoughtful consideration should be given to the design and content. Although aggregate group data may be useful for system level measures, physician level feedback is generally more meaningful and is more likely to lead to change. Providing comparator data is beneficial as it helps support the desired behavior change.Reference Brehaut, Colquhoun and Eva7 For metrics where an accepted ABC exists, one study suggests that providing it as a comparator can be more effective than peer data. In one study, including a behavior change message (i.e., a message that supports the desired action) resulted in fewer antibiotic prescriptions compared with control.Reference Elouafkaoui, Young and Newlands13

The optimal frequency of distributing reports is unknown and may depend on the data being provided. Providing feedback in a timely manner is important to ensure the data are relevant and actionable.Reference Brehaut, Colquhoun and Eva7 Exceedingly frequent data delivery may lead to data fatigue or dramatic practice changes based on a small number of cases.Reference Brehaut, Colquhoun and Eva7 By contrast, infrequent feedback may be discounted as no longer relevant due to a perceived change in personal practice that may or may not have occurred. Ideally, physicians would individually track their performance over time and see if their action plans resulted in noticeable improvements. Data reports can include a trend-over-time graphic to allow for comparison to prior performance.

When creating the reports, it is important to reduce extraneous cognitive load for feedback recipients.Reference Brehaut, Colquhoun and Eva7 This may mean providing fewer metrics or only data for the most relevant indicators. One should also simplify the data presented. If the data require significant effort to interpret, they may be misunderstood or entirely ignored. Reports should be presented both verbally and in writing, and figures should be consistent with the message being conveyed (e.g., poor performance at the bottom of a graph vs. good performance at the top of a graph).

Ensuring reports are easily accessible and actively disseminated will increase engagement among physicians. Passive report dissemination (e.g., physicians needing to retrieve the report from an online dashboard) may be a barrier to physicians. Without context, physicians may question the validity of the data; acknowledging the limitations of the data at the outset is therefore critical.
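To make the idea of a comparator concrete, the following sketch builds one physician's report line for a single metric, showing their own rate next to the median of their peers and, where one exists, an explicit target. The ordering rates, physician identifiers, and the target value are hypothetical, and the peer median is only one of several reasonable comparators.

```python
from statistics import median


def comparator_report(rates, physician_id, target=None):
    """Compare one physician's rate with the peer median and an optional target."""
    own = rates[physician_id]
    peers = [rate for md, rate in rates.items() if md != physician_id]
    peer_median = median(peers)
    report = {
        "own": own,
        "peer_median": peer_median,
        "gap_to_peers": round(own - peer_median, 3),
    }
    if target is not None:
        report["gap_to_target"] = round(own - target, 3)
    return report


# Hypothetical CT ordering rates (proportion of visits with a CT ordered).
RATES = {"MD-A": 0.30, "MD-B": 0.22, "MD-C": 0.18, "MD-D": 0.26}

report = comparator_report(RATES, "MD-A", target=0.20)
print(report)
```

Excluding the recipient from their own peer group, as done here, is a design choice that keeps a single outlier from shifting the comparator they are measured against.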
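One way to guard against over-interpreting a small number of cases is to suppress the rate for any reporting period that falls below a minimum case count. The sketch below does this for a per-quarter trend; the minimum of 20 cases and the counts themselves are illustrative assumptions, not values drawn from the literature cited here.

```python
def trend(counts_by_period, min_cases=20):
    """Return [(period, cases, rate or None)]; rate is None when cases < min_cases."""
    rows = []
    for period, (events, cases) in counts_by_period.items():
        rate = round(events / cases, 3) if cases >= min_cases else None
        rows.append((period, cases, rate))
    return rows


# Hypothetical (events, eligible cases) per quarter for one physician.
QUARTERS = {
    "2019-Q3": (12, 40),
    "2019-Q4": (3, 8),  # too few cases to report a stable rate
    "2020-Q1": (9, 45),
}

rows = trend(QUARTERS)
for period, cases, rate in rows:
    shown = "suppressed (small sample)" if rate is None else f"{rate:.1%}"
    print(f"{period}: n={cases}, rate={shown}")
```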

Finally, a stepwise approach in establishing the scope and progressive rollout of performance metrics is important. Careful consideration must be given to the initial set of measures that are proposed within a group so as to ensure a balanced approach. As an example, it could be dangerous to implement an initial set of measures which included time to consult, advanced imaging use rates, number of patients seen per hour, and average billings per patient. This suite would incentivize the rapid cycling of patients with fewer investigations and more procedures. To offset this “directionality,” the initial performance metrics must include balancing measures from the outset, such as percent of consults admitted and 72-hour ED return and admit rates.14
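A balancing measure such as the 72-hour return and admit rate could be computed along the lines of the sketch below. It assumes a simplified definition: among a physician's discharged visits, the share in which the same patient arrives again within 72 hours of the index arrival and is admitted. The record layout, identifiers, and the choice to measure the window from arrival (rather than discharge) time are all assumptions made for illustration.

```python
from datetime import datetime, timedelta

FMT = "%Y-%m-%d %H:%M"

# Hypothetical records: (patient_id, physician_id, arrival, disposition).
VISITS = [
    ("P1", "MD-A", "2020-01-01 10:00", "discharged"),
    ("P1", "MD-C", "2020-01-03 09:00", "admitted"),  # 47 h later: counts for MD-A
    ("P2", "MD-A", "2020-01-01 12:00", "discharged"),
    ("P3", "MD-B", "2020-01-02 08:00", "discharged"),
    ("P3", "MD-A", "2020-01-06 08:00", "admitted"),  # 96 h later: outside window
    ("P4", "MD-B", "2020-01-02 09:00", "admitted"),  # not a discharged index visit
]


def return_and_admit_rates(visits, window=timedelta(hours=72)):
    """Return {physician_id: share of discharges followed by a return admission}."""
    parsed = [(p, md, datetime.strptime(t, FMT), d) for p, md, t, d in visits]
    discharged, bounced = {}, {}
    for patient, md, arrival, disposition in parsed:
        if disposition != "discharged":
            continue
        discharged[md] = discharged.get(md, 0) + 1
        came_back = any(
            p == patient and d == "admitted" and arrival < t <= arrival + window
            for p, _, t, d in parsed
        )
        bounced[md] = bounced.get(md, 0) + int(came_back)
    return {md: bounced[md] / n for md, n in discharged.items()}


rates = return_and_admit_rates(VISITS)
print(rates)
```

Note that the return visit is attributed to the physician who discharged the patient at the index visit, not to the physician who later admitted them.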

As the working group began to finalize a mock-up of a performance report card, Dr. Smith wondered about the logistics of presenting these metrics: Do I send them to the group by email or do I meet with each individual?

How to deliver and facilitate audit and feedback?

To maximize the impact of audit and feedback on individual clinicians, we must also consider emerging trends in medical education and professional development. Recently, the Royal College of Physicians and Surgeons of Canada has made audit and feedback an integral part of its Competency by Design Framework, emphasizing the importance of Physician Practice Improvement (Figure 1).4 To do this successfully, we need to provide clinicians with their practice data as well as the tools to implement action plans. This process requires more than just passive dissemination of physician data.

Figure 1. Physician practice improvement.

Group-facilitated feedback sessions have been used in audit and feedback programs to leverage social learning theory and promote discussion among cliniciansReference Cooke, Duncan, Rivera, Dowling, Symonds and Armson15 (Figure 2). In these sessions, participants go through a predictable pattern of reacting to and understanding the data, contextualizing, reflecting, and planning for change.Reference Cooke, Duncan, Rivera, Dowling, Symonds and Armson16 This process helps physicians convert their practice data into meaningful information that can be used for practice improvement. We have also seen a growing call for medical coaching in the mainstream media, with multiple articles published on the topic.Reference Watling, Driessen, van der Vleuten, Vanstone and Lingard17–Reference Watling and LaDonna19 This high-intensity activity will require formal assessment to establish its role in physician practice improvement.

Figure 2. Calgary audit and feedback framework.

Additional considerations: privacy and controversies related to audit and feedback

A constant tension exists as to whether audit and feedback should be used as a performance management tool or as a self-reflective strategy. Although arguments can be made on both fronts, the current data available are unable to provide a holistic view of the “quality of care that one physician provides”; at best, we can provide snapshots of a physician's practice with respect to the metrics provided. It is important to have real discussions about the permissibility of the data and the extent to which they can be used within a group. Clear evidence of high-risk practice may require early intervention, and how this will be handled should be discussed at the outset. As such, using audit and feedback for performance assessment, pay for performance, or employment decisions should be done with caution until there is evidence around which strategy is most effective. Yet, it is also easy to imagine the use of audit and feedback to establish minimum practice metrics or envision their use in a punitive sense. This is often dubbed “data for accountability.” Within this publication, we openly advocate for the use of audit and feedback as a self-reflective professional development tool.

As with any data, those individuals collecting the data are responsible for its privacy. Although there is often an interest in providing unblinded data reports (whereby physicians are openly compared with each other), this strategy has not been studied and could have a detrimental impact on morale and encourage gaming of metrics to improve one's rank within the department. How the de-identified physician data are disseminated should be discussed within the group, including specifics on data storage, how the data will be used, and which individuals within the department should have access to identifiable datasets. Furthermore, groups should explicitly state their policy toward dissemination of de-identified data outside of the clinical group (e.g., to hospital leadership) to ensure the data are used for their intended purpose.

As a result of physician inclusion, a model for audit and feedback was developed. Based on this, Dr. Smith designed a feedback plan that includes scheduling small group discussions with peers. She also began to develop coaching modules for her physicians. Within the first 3 months of implementing the audit and feedback program, she saw modest but encouraging results within the group. She will use feedback to further improve the process.
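A blinded comparison report could be produced roughly as in the sketch below, which replaces each physician's identity with a random code and flags only the recipient's own row. The function names, the physician names, and the rates are hypothetical; the key that maps identities to codes is the identifiable dataset and would need to be stored under whatever access policy the group has agreed on.

```python
import secrets


def assign_codes(physician_ids):
    """Return {physician_id: random code}; this mapping is the identifiable key."""
    codes, used = {}, set()
    for physician_id in physician_ids:
        code = secrets.token_hex(3).upper()
        while code in used:  # regenerate in the unlikely event of a collision
            code = secrets.token_hex(3).upper()
        used.add(code)
        codes[physician_id] = code
    return codes


def blind_report(rates, key, recipient):
    """Return [(code, rate, is_recipient)] sorted by code to hide the original order."""
    own_code = key[recipient]
    rows = sorted((key[md], rate) for md, rate in rates.items())
    return [(code, rate, code == own_code) for code, rate in rows]


# Hypothetical physicians and rates for one metric.
RATES = {"smith": 0.30, "lee": 0.20, "patel": 0.25}
key = assign_codes(RATES)
rows = blind_report(RATES, key, recipient="lee")
for code, rate, is_me in rows:
    print(f"{code}: {rate:.0%}{'  <- you' if is_me else ''}")
```

Random codes do not by themselves guarantee anonymity: in a small group, a distinctive value can still reveal who a row belongs to, which is one more reason to agree on dissemination rules in advance.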

CONCLUSION

Audit and feedback can be a powerful QI tool that should be considered by EM practitioners and EDs. To maximize its potential, careful design of its metrics and a deliberate implementation process should be undertaken. In particular, the processes should be physician-led, align with departmental and patient-centered priorities, and incorporate the best possible evidence on both clinical care and the tenets of audit and feedback. The individual data that result from audit and feedback activities should be used for data-driven practice improvement as part of continuous professional development activities. This process should also be mobilized as a tool to decrease low-value care and improve the quality of care provided in the ED.

Supplementary material

The supplementary material for this article can be found at https://doi.org/10.1017/cem.2020.28.