1. Introduction

Prediction and control of fluid flows to pursue a specific objective is a highly compelling research area (Gad-el-Hak 2000). Flow control offers wide-ranging practical applications in diverse fields, including vehicle dynamics, aircraft and marine transportation, meteorology, energy production from water and wind, combustion and chemical processes, and more (Duriez, Brunton & Noack 2017). The goals of fluid flow control generally encompass, among others, drag reduction, control of separation and transition, and lift or mixing enhancement. In recent years, drag reduction has received considerable attention due to its notable impact on the environmental footprint of transportation (Green 2003).

In the last decades, active flow control has garnered increasing attention. This technique can be implemented in open-loop and closed-loop configurations. The former involves predetermining the actuation law irrespective of the system state, thus simplifying its application. Notable examples include wake control of bluff bodies (Blackburn & Henderson 1999; Cetiner & Rockwell 2001; Thiria, Goujon-Durand & Wesfreid 2006; Parkin, Thompson & Sheridan 2014), open-cavity flows (Little et al. 2007; Sipp 2012; Nagarajan et al. 2018) and heat transfer (Castellanos et al. 2022b), among others. However, open-loop control is of limited effectiveness when stabilizing unstable flows, responding to changes in the controlled system parameters or dealing with external disturbances. In contrast, closed-loop implementations (also referred to as reactive control) feed the control law with knowledge of the state. This approach offers greater flexibility and adaptability (Brunton & Noack 2015). Experimental evidence demonstrates the superior performance of closed-loop over open-loop control; see e.g. Pinier et al. (2007) or Shimomura et al. (2020).

The identification of control laws requires adequate knowledge of the system dynamics and its response to control inputs. In fluid dynamics, model-based techniques have traditionally been utilized to obtain this information, proving successful in various scenarios (Kim & Bewley 2007). Examples of applications include transition delay in spatially evolving wall-bounded flows (Chevalier et al. 2007; Monokrousos et al. 2008; Tol, De Visser & Kotsonis 2019), cavity flow control (Rowley & Williams 2006; Illingworth, Morgans & Rowley 2011), separation control on a low-Reynolds-number airfoil (Ahuja et al. 2007), wake stabilization of cylinders (Schumm, Berger & Monkewitz 1994; Gerhard et al. 2003; Tadmor et al. 2011) and skin-friction drag reduction (Cortelezzi & Speyer 1998; Lee et al. 2001; Kim 2011). However, the identification of efficient analytical control laws faces an important challenge in the presence of complex nonlinear multiscale dynamics.

In recent years, model-free techniques have gained popularity, driven by advancements in hardware and the increasing efficiency of data-driven and machine-learning algorithms. Examples of model-free techniques include genetic algorithms in jet mixing optimization (Koumoutsakos, Freund & Parekh 2001; Wu et al. 2018), wake flows (Poncet, Cottet & Koumoutsakos 2005; Raibaudo et al. 2020), separation control (Gautier et al. 2015) and combustion noise (Buche et al. 2002). Reinforcement learning (RL) has also recently gained popularity, with successful applications in the control of bluff body wakes (Rabault et al. 2019; Fan et al. 2020; Castellanos et al. 2022a) and natural convection (Beintema et al. 2020). Despite the encouraging results of such model-free techniques, their effectiveness is limited by the need for large datasets.

Within model-based techniques, model predictive control (MPC) offers interesting features to deal with the challenges of fluid flow control. Model predictive control is based on the idea of receding horizon control. It has found application in industry since the 1980s (Qin & Badgwell 2003), in particular with extensive use in refineries and the petrochemical industry (Lee 2011). Model predictive control has demonstrated excellent performance in controlling complex systems with constraints, strong nonlinearities and time delays (Henson 1998; Allgöwer et al. 2004; Camacho & Alba 2013; Grüne & Pannek 2017). Therefore, it is particularly appropriate for complex systems that challenge traditional linear controllers (Corona & De Schutter 2008). The method requires the identification of a model of the system dynamics capable of predicting its behaviour under exogenous inputs. The optimal control is determined through the iterative solution of an optimization problem within a prediction window, aiming to minimize a user-defined cost function that considers the distance of the system state from the control target. Moreover, MPC allows for the straightforward implementation of hard constraints, such as hardware limitations, distinguishing it from classical control approaches. Model predictive control has been successfully applied in the control of complex fluid systems; see e.g. Collis et al. (2000), Bewley, Moin & Temam (2001), Bieker et al. (2020), Sasaki & Tsubakino (2020), Morton et al. (2018) and Peitz, Otto & Rowley (2020).

A crucial aspect of MPC implementation is achieving a proper balance among the terms of the loss function. The user needs to select weights (referred to as hyperparameters) for the loss, considering factors like closeness to the target, cost of the action and other application-tailored constraints. This choice has a clear impact on the final performance. In flow control applications this process traditionally relies on user experience, which poses the risk of suboptimal choices.

Bayesian optimization (BO) and RL techniques have demonstrated excellent results in hyperparameter tuning, particularly in the fields of autonomous driving and robotics (Edwards et al. 2021; Fröhlich et al. 2022; Bøhn et al. 2023). A comprehensive review in this area can be found in Hewing et al. (2020). However, in the application of nonlinear MPC to flow control, examples are scarce, and the choice of MPC parameters is often guided by trial and error and intuition. This approach risks falling into suboptimal configurations that may not adequately account for the different degrees of fidelity in the terms involved in the loss function. This issue is particularly relevant in fluid mechanics, where the uncertainty in predicting the plant behaviour and the measured state/control actions should play a role in the parameter selection process. Unfortunately, an analytical formulation is elusive in most cases.

Moreover, as a closed-loop strategy, the implementation of MPC requires feedback, consisting of time-sampled measurements of a feature of the system to be controlled. In real control scenarios, this sampling is often affected by measurement noise, which can compromise control decision making. Thus, suitable smoothing techniques are necessary to enhance noise robustness. In time series analysis, a non-parametric statistical technique called local polynomial regression (LPR) proves particularly effective in this task. Local polynomial regression estimates the regression function of sensor outputs and their time derivatives without assuming any prior information. Applications of LPR for control purposes are described in works such as Steffen, Oztop & Ritter (2010) or Ouyang et al. (2018).

In this paper we propose a fully automatic architecture that self-tunes control and optimization process parameters with minimal user input. Our MPC framework adapts to different levels of noise and/or limited state knowledge. The methodology builds upon offline black-box optimization via Bayesian methods for hyperparameter tuning. Furthermore, we discuss the robustness enhancement to noise using an online LPR. The effectiveness of the control algorithm is evaluated through its application to the control of the wake of the fluidic pinball (Deng et al. 2020) in the chaotic regime. Although not strictly needed, plant identification is also data driven. In this work, nonlinear system identification is performed using the sparse identification of nonlinear dynamics with control (SINDYc, Brunton, Proctor & Kutz 2016b).

The paper is organized as follows. Section 2 provides a description of the methodology, emphasizing the mathematical tools and the MPC framework employed. Additionally, this section includes specific details regarding the chosen test case for illustration purposes. The results of the control application, along with their interpretation, are provided in § 3. Finally, the conclusions are discussed in § 4.

2. Methodology

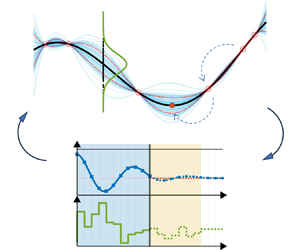

This section presents the backbone of the control algorithm, with a detailed description of the mathematical tools involved in it. Figure 1 includes a diagram illustrating all the required steps for its implementation. In addition, algorithm 1 is introduced to give more detail on the procedure.

Figure 1. The MPC-based control algorithm schematic: dataset generation, creation of the predictive model and parameter tuning. The main block displays the closed-loop MPC scheme, including also LPR to mitigate the effects of sensor measurement noise.

Algorithm 1 Control algorithm

The main block in the schematic represents the MPC algorithm, following the approach proposed by Kaiser, Kutz & Brunton (2018). The main novelty in this module is the robustness enhancement by online filtering with LPR. This is particularly useful when the plant dynamics is modelled with time-delay coordinates or their derivatives. Local polynomial regression is directly applied to past sensor data for online implementation.
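As a rough illustration of how LPR can filter past sensor data online, the sketch below fits a low-order polynomial to recent samples by weighted least squares, centred on the evaluation time, and reads the smoothed value and its time derivative from the first two coefficients. The Gaussian kernel, the bandwidth value, the polynomial degree and the function name `lpr_estimate` are assumptions made for this example; they are not taken from the implementation used in this work.

```python
import numpy as np

def lpr_estimate(t, y, t0, bandwidth, degree=2):
    """Local polynomial estimate of y(t0) and dy/dt(t0) from noisy samples.

    Assumed choices (illustrative only): Gaussian kernel, fixed bandwidth.
    """
    dt = np.asarray(t, dtype=float) - t0
    y = np.asarray(y, dtype=float)
    # Kernel weights: samples close to t0 count more than distant ones.
    w = np.exp(-0.5 * (dt / bandwidth) ** 2)
    # Design matrix with columns 1, dt, dt^2, ..., dt^degree.
    X = np.vander(dt, N=degree + 1, increasing=True)
    # Weighted least squares solved as an ordinary problem on sqrt-weighted rows.
    sw = np.sqrt(w)
    beta, *_ = np.linalg.lstsq(X * sw[:, None], y * sw, rcond=None)
    # beta[0] estimates the signal at t0, beta[1] its first time derivative.
    return beta[0], beta[1]

# Usage on a synthetic noisy sensor signal (hypothetical data).
rng = np.random.default_rng(0)
t_past = np.linspace(0.0, 1.0, 101)
s_noisy = np.sin(2.0 * np.pi * t_past) + 0.05 * rng.standard_normal(t_past.size)
# Evaluate at the most recent sample, as an online filter would.
s_hat, ds_hat = lpr_estimate(t_past, s_noisy, t0=t_past[-1], bandwidth=0.1)
```

Evaluating at the most recent sample uses only past data, which is the one-sided situation an online filter faces; the estimate there is typically less accurate than in the interior of the window.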

The roadmap suggests several necessary steps before implementing the control. First, a training dataset is generated. The dataset consists of the time series of the state dynamics under different exogenous inputs. This data can be collected using various methods, including simulations or experiments. The system state and exogenous inputs should be defined based on the specific system being controlled. Second, a plant model is defined to predict the system behaviour. In this work we use a data-driven nonlinear system identification. The final step, before the implementation of the control, focuses on tuning the parameters that define the MPC cost function. Control performance is significantly influenced by their selection. The self-tuning of the hyperparameters is the core of our framework. This tuning is carried out using a BO algorithm.

An important aspect of the proposed method is the need for minimal user interaction. Indeed, the two main inputs are the reference set point (i.e. the control target) and a cost function for the MPC algorithm. The weight of the different contributions in the cost function will be determined in the MPC tuning. Bayesian optimization automatically adjusts to different levels of noise and uncertainties. This greatly enhances the usability and adaptability of the framework to different systems.

2.1. Self-tuning MPC

2.1.1. Model predictive control

This section describes the MPC implementation. We consider a time-evolving process whose complete description relies on $N_a\geq 1$ parameters. These are included in a state vector, denoted at a given instant $t$ as $\boldsymbol {a} \equiv \boldsymbol {a}(t) = (a^1(t), \ldots, a^{N_a}(t))'$. The evolution in time of the process can be influenced by the choice of $N_b\geq 1$ exogenous parameters. These are included in an input vector $\boldsymbol {b} \equiv \boldsymbol {b}(t) = (b^1(t),\ldots, b^{N_b}(t))'$, $\boldsymbol {b} \in \mathcal {B} \subset \mathbb {R}^{N_b}$, where $\mathcal {B}$ is the set of allowable inputs. Denoting the time derivative of the state vector with $\dot {\boldsymbol {a}}$, the system dynamics is described by the following set of equations:

\begin{equation} \left. \begin{gathered} \boldsymbol{\dot{a}} = f(\boldsymbol{a},\boldsymbol{b}), \\ \boldsymbol{a}(0) = \boldsymbol{a}_0. \end{gathered} \right\} \tag{2.1} \end{equation}

Here $\boldsymbol {a}(t_j)=\boldsymbol {a}_j$ and $f$ is the function characterizing the system's temporal evolution. The process is considered as controlled. This means that for each time $t$, the input vector $\boldsymbol {b}(t)$ can be selected in order to manipulate the system dynamics according to a specific objective. More specifically, the aim is to control $N_c\geq 1$ features of the dynamical system, included in the vector $\boldsymbol {c} \equiv \boldsymbol {c}(t) = (c^1(t), \ldots, c^{N_c}(t))'$. Note that the vector $\boldsymbol {c}$ is dependent on the system state. In this context, it is assumed that the target features are part of the state vector itself, although this may not always be applicable. The objective is to achieve control over the vector $\boldsymbol {c}$ by ensuring that it closely tends to a desired reference $\boldsymbol {c}_{*} \in \mathbb {R}^{N_c}$ over time. Model predictive control can be used for set-point stabilization, trajectory tracking or path following (see Raković & Levine 2018, pp. 169–198), depending on the choice of $\boldsymbol {c}_{*}$.

For the purpose of a control application, $\boldsymbol {a}$, $\boldsymbol {b}$ and $\boldsymbol {c}$ are sampled over a discrete-time vector, equispaced with a fixed time interval $T_s$. This discrete-time representation is essential to determine how often the exogenous input is updated. The input is assumed to be constant between consecutive time steps of the control. The implementation of the MPC follows a series of sequential procedures, as can be seen in algorithm 1. An illustration of the process is provided in figure 2.
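The piecewise-constant actuation described above can be sketched as follows: the continuous dynamics $\boldsymbol{\dot{a}} = f(\boldsymbol{a},\boldsymbol{b})$ is advanced over one control interval $T_s$ while the input is held fixed. The classical fourth-order Runge-Kutta integrator, the number of substeps, the helper name `step_zoh` and the scalar toy plant are assumptions made for this example and are not the numerical scheme of this work.

```python
import numpy as np

def step_zoh(f, a, b, Ts, substeps=10):
    """Advance da/dt = f(a, b) over one control interval Ts with b held constant.

    Uses classical RK4 on `substeps` equal sub-intervals (an assumed choice).
    """
    a = np.asarray(a, dtype=float)
    h = Ts / substeps
    for _ in range(substeps):
        k1 = f(a, b)
        k2 = f(a + 0.5 * h * k1, b)
        k3 = f(a + 0.5 * h * k2, b)
        k4 = f(a + h * k3, b)
        a = a + (h / 6.0) * (k1 + 2.0 * k2 + 2.0 * k3 + k4)
    return a

def f_toy(a, b):
    """Hypothetical scalar plant da/dt = -a + b, used only for illustration."""
    return -a + b

# One control step of length Ts = 0.1 from a(0) = 1 with zero input.
a_next = step_zoh(f_toy, np.array([1.0]), np.array([0.0]), Ts=0.1)
```

For this linear toy plant with zero input the exact solution is $a(T_s) = a_0 e^{-T_s}$, which gives a simple check on the integrator.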

Figure 2. Graphical representation of the MPC strategy for stabilizing around a set point (horizontal dashed line). Past measurements (light-blue-shaded region) depict system state (blue lines with squares) and actuation (green line). The control window $w_c$ is shown in orange. Dashed lines indicate future state and actuation predictions. Blue circles represent a discrete sampling of the system state prediction. In a continuous formulation, discrete sampling is not mandatory and the step-like actuation can be relaxed.

Firstly, the control process starts from a time instant $t_j$, where a measurement $\boldsymbol {s}_j$ of the state vector is available. It is assumed that the entire vector of target features is observed. A conditional prediction of the state vector is obtained by a model of the dynamics. This prediction under a given input sequence, referred to as $\boldsymbol {\hat {a}}_{j+k|\,j}$, is generated within a prediction window $t_{j+k}, k=1,\ldots,w_p$. Consequently, a prediction of the target features vector $\boldsymbol {\hat {c}}_{j+k|\,j}$ is obtained.

The optimal input sequence $\{\boldsymbol {b}_{k}^{opt}\}_{k = 1}^{w_c}$ can be determined in a control window $t_{j+k}, k = 1,\ldots,w_c$, by minimizing a cost function $\mathcal {J}_{MPC} : \mathbb {R}^{N_b} \rightarrow \mathbb {R}^+$. A common choice for the cost function is

\begin{align} \mathcal{J}_{MPC}(\boldsymbol{b}) &= \sum_{k=0}^{w_p} \lVert \boldsymbol{\hat{c}}_{j+k|\,j} - \boldsymbol{c}_* \rVert^2_{\boldsymbol{\mathsf{Q}}} \nonumber\\ &\quad + \sum_{k=1}^{w_c}(\lVert \boldsymbol{b}_{j+k|\,j} \rVert^2_{\boldsymbol{\mathsf{R}}_b} + \lVert \Delta \boldsymbol{b}_{j+k|\,j} \rVert^2_{\boldsymbol{\mathsf{R}}_{\Delta b}}), \tag{2.2} \end{align}

where $\boldsymbol{\mathsf{Q}} \in \mathbb {R}^{{N_c} \times {N_c}}$ and $\boldsymbol{\mathsf{R}}_b,\boldsymbol{\mathsf{R}}_{\Delta b} \in \mathbb {R}^{{N_b} \times {N_b}}$ are positive definite and positive semidefinite weight matrices, respectively. In addition, $\lVert \boldsymbol {d} \rVert _{\boldsymbol{\mathsf{M}}}^2 = \boldsymbol {d}'\boldsymbol{\mathsf{M}}\boldsymbol {d}$ represents the weighted norm of a generic vector $\boldsymbol {d}$ with respect to a symmetric and positive definite matrix $\boldsymbol{\mathsf{M}}$, where $\boldsymbol {d}'$ denotes the transpose of the vector $\boldsymbol {d}$. The variable $\Delta \boldsymbol {b}_{j+k|\,j}$ denotes the input variability in time, that is, $\boldsymbol {b}_{j+k|\,j} - \boldsymbol {b}_{j+k-1|\,j}$. In the definition of the cost function, the errors in state predictions with respect to the reference trajectory, i.e. $\boldsymbol {\hat {c}}_{j+k|\,j} - \boldsymbol {c}_*$, are penalized, as well as the actuation cost and variability. In this paper the aforementioned weight matrices are assumed to be diagonal; thus,

\begin{equation} \left. \begin{aligned} \boldsymbol{\mathsf{Q}} & = \text{diag}( Q^1, \ldots, Q^{N_c}),\\ \boldsymbol{\mathsf{R}}_b & = \text{diag}( R_{b^1}, \ldots, R_{b^{N_b}}),\\ \boldsymbol{\mathsf{R}}_{\Delta b} & = \text{diag}( R_{\Delta b^1}, \ldots, R_{\Delta b^{N_b}}). \end{aligned} \right\} \tag{2.3} \end{equation}

Once the optimization problem in (2.2) is solved, only the first component of the optimal control sequence, $\boldsymbol {b}_{j+1} = \boldsymbol {b}_{1}^{opt}$, is applied. The optimization is then reinitialized and repeated at each subsequent time step of the control. Generally, the prediction window covers a wider range than the control window ($w_p \geq w_c$). The control vector is considered constant beyond the end of the control window, as discussed in Kaiser et al. (2018).
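The receding-horizon procedure can be illustrated with a small, self-contained sketch. It evaluates a cost of the form (2.2) for a candidate input sequence, holds the input constant beyond the control window, and applies only the first element of the best sequence before repeating. Several simplifications are assumptions made for this example only: the target features coincide with the full state, the plant is a hypothetical scalar model discretized with an explicit Euler step, the $k$th input is taken to drive the step from prediction index $k-1$ to $k$, and the minimization is an exhaustive search over a coarse input grid rather than the optimizer used in this work.

```python
import itertools
import numpy as np

def weighted_sq_norm(d, M):
    """Weighted squared norm d' M d."""
    d = np.atleast_1d(d)
    return float(d @ M @ d)

def mpc_cost(b_seq, a_j, b_prev, c_ref, model, Q, Rb, Rdb, w_p):
    """Cost in the form of (2.2), assuming c = a (features are the full state)."""
    w_c = len(b_seq)
    a = np.asarray(a_j, dtype=float)
    J = weighted_sq_norm(a - c_ref, Q)            # k = 0 term
    for k in range(1, w_p + 1):
        b = b_seq[min(k, w_c) - 1]                # input frozen beyond w_c
        a = model(a, b)
        J += weighted_sq_norm(a - c_ref, Q)
    last = np.asarray(b_prev, dtype=float)
    for b in b_seq:                               # actuation cost and variability
        J += weighted_sq_norm(b, Rb) + weighted_sq_norm(b - last, Rdb)
        last = b
    return J

def mpc_step(a_j, b_prev, c_ref, model, Q, Rb, Rdb, w_p, w_c, candidates):
    """Return the first input of the best sequence found on the candidate grid."""
    best_J, best_seq = np.inf, None
    for combo in itertools.product(candidates, repeat=w_c):
        seq = [np.atleast_1d(b) for b in combo]
        J = mpc_cost(seq, a_j, b_prev, c_ref, model, Q, Rb, Rdb, w_p)
        if J < best_J:
            best_J, best_seq = J, seq
    return best_seq[0]

Ts = 0.1

def toy_model(a, b):
    """Hypothetical unstable plant da/dt = a + b, one explicit Euler step."""
    return a + Ts * (a + b)

Q, Rb, Rdb = np.eye(1), 0.01 * np.eye(1), 0.01 * np.eye(1)
candidates = np.linspace(-2.0, 2.0, 21)           # grid also enforces |b| <= 2
a_state, b_applied, c_ref = np.array([1.0]), np.zeros(1), np.zeros(1)
for _ in range(30):                               # closed loop
    b_applied = mpc_step(a_state, b_applied, c_ref, toy_model,
                         Q, Rb, Rdb, w_p=5, w_c=2, candidates=candidates)
    a_state = toy_model(a_state, b_applied)
```

The grid search scales exponentially with $w_c$ and $N_b$, so it is only viable for toy problems; it is used here to keep the example free of external solvers.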

Hard constraints on the input vector can readily be incorporated into the optimization process. At each time step, the optimization problem must guarantee that $\boldsymbol {b} \in \mathcal {B}$, where $\mathcal {B}$ is generally determined by the control hardware. The set of allowable inputs is

\begin{equation} \left. \begin{aligned} b^k_j & \in [b^k_{min}, b^k_{max}],\\ \Delta b^k_j & \in [\Delta b^k_{min}, \Delta b^k_{max}], \quad j = 1,\ldots,t \text{ and } k = 1,\ldots, N_b, \end{aligned} \right\} \tag{2.4} \end{equation}

where $b^k_j$ and $\Delta b^k_j$ are the $k$th control input and input variability component at the $j$th time step. The subscripts min and max indicate the minimum and maximum admissible values for the control input and its variability between consecutive time steps, respectively.
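To make the admissible set concrete, the following sketch intersects the amplitude bounds with the rate bounds around the previously applied input and clips a proposed input to the resulting interval, component by component. In practice the bounds are passed to the optimizer as constraints; the standalone helper `clip_input` and the numerical values are hypothetical and serve only to visualize the feasible interval. The sketch assumes that the previous input is itself admissible and that $\Delta b_{min} \leq 0 \leq \Delta b_{max}$, so that the interval is non-empty.

```python
import numpy as np

def clip_input(b_new, b_prev, b_min, b_max, db_min, db_max):
    """Clip b_new to the inputs reachable from b_prev under box and rate limits."""
    b_prev = np.asarray(b_prev, dtype=float)
    # Feasible interval: amplitude bounds intersected with rate bounds.
    lo = np.maximum(b_min, b_prev + db_min)
    hi = np.minimum(b_max, b_prev + db_max)
    return np.clip(np.asarray(b_new, dtype=float), lo, hi)

# Two inputs limited to [-2, 2], changing by at most 0.5 per control step.
b_ok = clip_input([5.0, -5.0], [0.0, 0.0], -2.0, 2.0, -0.5, 0.5)
```

Here the first component is limited by the rate bound to 0.5 and the second to -0.5, even though the amplitude bounds alone would allow values up to 2 in magnitude.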

A critical issue of this control technique concerns the choice of parameters. Many of these can be selected based on the physics of the system to be controlled or appropriate control hardware limits, such as the actuation constraints or the control time step. The parameters involved in (2.3), as well as the lengths of the prediction and control windows, are traditionally tuned by trial and error. In § 2.1.4 we introduce our hyperparameter self-tuning procedure.

2.1.2. Nonlinear system identification

The optimization loop of MPC requires an accurate plant model for predicting the system's dynamics. The choice of the predictive model typically involves a trade-off between accuracy and computational complexity. In this work we adopt a data-driven sparsity-promoting technique. The framework was illustrated in Brunton, Proctor & Kutz (2016a) and later applied in MPC by Kaiser et al. (2018) for a variety of nonlinear dynamical systems. It must be remarked that the self-tuning framework proposed here can easily be adapted to accommodate a different plant model, either analytical or data driven, including deep-learning models.

SINDy is a method that identifies a system of ordinary differential equations describing the dynamical system. In particular, the version described in this work corresponds to the extension of the SINDy model with exogenous input (SINDYc, Brunton et al. 2016b). Considering a system of ordinary differential equations such as the one shown in (2.1), SINDYc derives an analytical expression of $f$ from data. This process requires a dataset comprising the time series of the state vector $\boldsymbol {a}$ and the exogenous input $\boldsymbol {b}$. This method is based on the idea that most physical systems can be characterized by only a few relevant terms, resulting in governing equations that are sparse in a high-dimensional nonlinear function space. The resulting sparse model identification aims to find a balance between model complexity and accuracy, preventing overfitting of the model to the data.

To derive an expression of the function $f$ from data, a discrete sampling is performed on a time vector, yielding $r$ snapshots at time instances $t_i, i = 1, \ldots,r$ for the state vector $\boldsymbol {a}_i = \boldsymbol {a}(t_i)$, its time derivative $\dot {\boldsymbol {a}}_i = \dot {\boldsymbol {a}}(t_i)$ and the input signal $\boldsymbol {b}_i = \boldsymbol {b}(t_i)$. The data obtained are arranged into three matrices $\boldsymbol{\mathsf{A}}\in \mathbb {R}^{r \times N_a}$, $\dot {\boldsymbol{\mathsf{A}}}\in \mathbb {R}^{r \times N_a}$ and $\boldsymbol{\mathsf{B}}\in \mathbb {R}^{r \times N_b}$:

\begin{equation} \left. \begin{aligned} \boldsymbol{\mathsf{A}} & = \left(\boldsymbol{a}_1,\ldots,\boldsymbol{a}_r\right)', \\ \dot{\boldsymbol{\mathsf{A}}} & = (\boldsymbol{\dot{a}}_1,\ldots,\boldsymbol{\dot{a}}_r)',\\ \boldsymbol{\mathsf{B}} & = \left(\boldsymbol{b}_1,\ldots,\boldsymbol{b}_r\right)'. \end{aligned} \right\} \tag{2.5} \end{equation}

A library of functions $\boldsymbol {\varTheta }$ is then set as

\begin{align} \boldsymbol{\varTheta}(\boldsymbol{\mathsf{A}},\boldsymbol{\mathsf{B}})& = (\boldsymbol{\mathsf{1}}_r, \boldsymbol{\mathsf{A}}, \boldsymbol{\mathsf{B}}, \left((\boldsymbol{\mathsf{A}}_{1 \bullet}\otimes\boldsymbol{\mathsf{A}}_{1 \bullet})', \ldots, (\boldsymbol{\mathsf{A}}_{r \bullet}\otimes\boldsymbol{\mathsf{A}}_{r \bullet})'\right)',\nonumber\\ &\quad \left((\boldsymbol{\mathsf{A}}_{1 \bullet}\otimes\boldsymbol{\mathsf{B}}_{1 \bullet})', \ldots, (\boldsymbol{\mathsf{A}}_{r \bullet}\otimes\boldsymbol{\mathsf{B}}_{r \bullet})'\right)',\nonumber\\ &\quad \left((\boldsymbol{\mathsf{B}}_{1 \bullet}\otimes\boldsymbol{\mathsf{B}}_{1 \bullet})', \ldots, (\boldsymbol{\mathsf{B}}_{r \bullet}\otimes\boldsymbol{\mathsf{B}}_{r \bullet})'\right)',\ldots), \tag{2.6} \end{align}

where ![]() $\boldsymbol{\mathsf{1}}_r$ is a column vector of

$\boldsymbol{\mathsf{1}}_r$ is a column vector of ![]() $r$ ones,

$r$ ones, ![]() $\boldsymbol{\mathsf{A}}_{i \bullet }$ denotes the

$\boldsymbol{\mathsf{A}}_{i \bullet }$ denotes the ![]() $i$th row of the matrix

$i$th row of the matrix ![]() $\boldsymbol{\mathsf{A}}$ and

$\boldsymbol{\mathsf{A}}$ and ![]() $\boldsymbol{\mathsf{H}}\otimes \boldsymbol{\mathsf{K}}$ denotes the Kronecker products of

$\boldsymbol{\mathsf{H}}\otimes \boldsymbol{\mathsf{K}}$ denotes the Kronecker products of ![]() $\boldsymbol{\mathsf{H}}$ and

$\boldsymbol{\mathsf{H}}$ and ![]() $\boldsymbol{\mathsf{K}}$. The choice of functions to be included in the library is typically made by the user. This procedure is often guided by experience and prior knowledge about the dynamics of the system to be controlled. This issue introduces some limitations that are later discussed in § 4.

$\boldsymbol{\mathsf{K}}$. The choice of functions to be included in the library is typically made by the user. This procedure is often guided by experience and prior knowledge about the dynamics of the system to be controlled. This issue introduces some limitations that are later discussed in § 4.

The system data can then be assumed to be generated from the following model:

Here $\boldsymbol {\varXi } = ( \boldsymbol {\xi }^1,\ldots, \boldsymbol {\xi }^{N_a} )$ represents the matrix whose rows are the vectors of coefficients $\boldsymbol {\xi }^k$ determining which terms of the right-hand side are active in the dynamics of the $k$th state vector component. This matrix is sparse for many of the dynamical systems considered. It can be obtained by solving the optimization problem

where $\lambda$ is called the sparsity-promoting coefficient, $\boldsymbol{\mathsf{A}}_{\bullet k}$ denotes the $k$th column of the matrix $\boldsymbol{\mathsf{A}}$, and $\lVert {\cdot } \rVert _1$ and $\lVert {\cdot } \rVert _2$ are the $L_1$ and $L_2$ norms, respectively. The coefficient $\lambda$ must be tuned to reach a compromise between parsimony and accuracy. Nonetheless, since this block of the MPC framework can easily be replaced by other strategies, $\lambda$ will not be included in the self-tuning optimization illustrated in § 2.1.4.

Finally, the system can be described by

where in this case $\boldsymbol {\varTheta }(\boldsymbol {a},\boldsymbol {b})$ is a vector that takes into account the same functions included in the library in (2.6). The SINDYc-based model is utilized to make predictions of the dynamics involved in the control process starting from a given initial condition of the state. The steps described in this section to obtain the plant model are also condensed in the nonlinear system identification step in algorithm 1.
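The identification step described in this section can be illustrated with a minimal sketch. Two simplifications are assumptions of the sketch and not part of the framework above: the quadratic library keeps only unique monomials (the row-wise Kronecker products in (2.6) also contain repeated terms such as $a^1a^2$ and $a^2a^1$), and the $L_1$-regularized problem is replaced by sequentially thresholded least squares, a common substitute for sparse regression. The function names `library` and `stlsq` and the toy dynamics are hypothetical.

```python
import numpy as np

def library(A, B):
    """Candidate functions: constant, linear and unique quadratic monomials of [A, B].

    A has shape (r, N_a) and B has shape (r, N_b); rows are snapshots.
    """
    Z = np.hstack([A, B])
    n = Z.shape[1]
    quad = [Z[:, i] * Z[:, j] for i in range(n) for j in range(i, n)]
    return np.column_stack([np.ones(len(Z)), Z] + quad)

def stlsq(Theta, dA, threshold, n_iter=10):
    """Sequentially thresholded least squares (swapped in for the L1 problem)."""
    Xi = np.linalg.lstsq(Theta, dA, rcond=None)[0]
    for _ in range(n_iter):
        small = np.abs(Xi) < threshold
        Xi[small] = 0.0
        for k in range(dA.shape[1]):
            active = ~small[:, k]
            if active.any():
                # Refit only the terms that survived the threshold.
                Xi[active, k] = np.linalg.lstsq(
                    Theta[:, active], dA[:, k], rcond=None
                )[0]
    return Xi

# Toy data (hypothetical): two states, one input, noise-free derivatives.
rng = np.random.default_rng(0)
A = rng.normal(size=(200, 2))
B = rng.normal(size=(200, 1))
Theta = library(A, B)  # columns: 1, a1, a2, b, a1^2, a1*a2, a1*b, a2^2, a2*b, b^2

Xi_true = np.zeros((Theta.shape[1], 2))
Xi_true[[1, 2], 0] = [-0.5, 2.0]                 # da1/dt = -0.5 a1 + 2 a2
Xi_true[[1, 2, 3, 5], 1] = [-2.0, -0.5, 1.0, -0.3]  # da2/dt = -2 a1 - 0.5 a2 + b - 0.3 a1 a2
dA = Theta @ Xi_true

Xi_est = stlsq(Theta, dA, threshold=0.1)
```

In this sketch each column of `Xi_est` holds the coefficients of one state component. With noisy derivatives the threshold plays a role analogous to $\lambda$: larger values give sparser but less accurate models.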

2.1.3. Local polynomial regression

Effectively estimating system dynamics is crucial for various control techniques, particularly in MPC, where sensor feedback is utilized to make informed decisions. The accuracy of system dynamics estimation becomes paramount in MPC as it relies on sensor information to predict the behaviour of the controlled system. However, the presence of noise can hinder accurate estimation, necessitating the implementation of noise mitigation techniques.

This challenge falls within the broader context of time series analysis, as sensor outputs represent discrete samples over time of a process variable. To address measurement noise, LPR emerges as a robust, accurate and cost-effective non-parametric smoothing technique. Applying LPR to sensor output data enhances the reliability and accuracy of estimating the current state of the system under varying noise conditions. In this section we provide a succinct overview of the formulation of LPR. The notation convention involves denoting random variables with capital letters, while deterministic values are represented in lowercase.

The objective is to predict or explain the response $S$ (sensor output) using the predictor $T$ (time) from a sample $\{(T_j, S_j)\}_{j=1}^n$ using the following regression model:

\begin{equation} S_j = m(T_j) + \sigma \epsilon_j, \quad j = 1,\ldots,n. \end{equation}

Here $m$ is the regression function, $\{\epsilon _j\}_{j=1}^{n}$ are zero mean and unit variance random variables and $\sigma ^2$ is the point variance. For simplicity, it is assumed that the model is homoscedastic, i.e. that the variance is constant. From a practical point of view, $\sigma$ also represents a measure of the noise intensity.

The reason why LPR is used in this context is that it allows for a fast joint estimation of the regression function and its derivatives without assuming any parametric form a priori. By making only a few regularity assumptions, it offers a flexibility that allows us to model complex relations beyond a parametric form. The working principle of LPR is to approximate the regression function locally by a polynomial of order $p$, performing a weighted least squares regression using data only around the point of interest.

It is assumed that the regression function admits derivatives up to the order $p+1$ and, thus, that it can be expanded in a Taylor series. For close time instants $t$ and $T_j$, the unknown regression function can be approximated by a polynomial of order $p$:

\begin{equation} m(T_j) \approx \sum_{i=0}^p \frac{m^{(i)}(t)}{i!}(T_j-t)^i \equiv \sum_{i=0}^p \beta_i(T_j-t)^i. \end{equation}

The vector of parameters $\boldsymbol {\beta } = (\beta _0,\ldots,\beta _p)'$ can be estimated by solving the minimization problem

\begin{equation} \boldsymbol{\hat{\beta}} = \underset{\boldsymbol{\beta}}{\text{arg min}} \sum_{j=1}^{n} \left\{S_j - \sum_{i=0}^p \beta_i(T_j-t)^i \right\}^2 K_h(T_j-t), \end{equation}

where $h$ is the bandwidth determining the size of the local neighbourhood (also called smoothing parameter) and $K_h(t) = ({1}/{h})K({t}/{h})$ with $K$ a kernel function assigning the weights to each observation. Once the vector $\hat {\boldsymbol {\beta }}$ has been obtained, an estimator of the $q$th derivative of the regression function is

\begin{equation} \hat{m}^{(q)}(t) = q!\,\hat{\beta}_q. \end{equation}

The solution of the optimization in (2.12) is simplified when the methodology is presented in matrix form. To that end, $\boldsymbol{\mathsf{T}}\in \mathbb {R}^{n\times (\,p+1)}$ is the design matrix

\begin{equation} \boldsymbol{\mathsf{T}} = \begin{pmatrix} 1 & (T_1-t) & \ldots & (T_1-t)^p \\ \vdots & \vdots & & \vdots \\ 1 & (T_{n}-t) & \ldots & (T_{n}-t)^p \end{pmatrix}, \end{equation}

while the vector of responses is $\boldsymbol {S} = (S_1,\ldots,S_{n})'$ and $\boldsymbol{\mathsf{W}} = \textrm {diag}( K_h(T_1-t), \ldots, K_h(T_{n}-t))$. According to this notation and assuming the invertibility of $\boldsymbol{\mathsf{T}}'\boldsymbol{\mathsf{W}}\boldsymbol{\mathsf{T}}$, the weighted least squares solution of the minimization problem expressed in (2.12) is

\begin{equation} \boldsymbol{\hat{\beta}} = (\boldsymbol{\mathsf{T}}'\boldsymbol{\mathsf{W}}\boldsymbol{\mathsf{T}})^{-1}\boldsymbol{\mathsf{T}}'\boldsymbol{\mathsf{W}}\boldsymbol{S}, \end{equation}

and, thus, the estimator of the local polynomial is

\begin{equation} \hat{m}(t) = \boldsymbol{e}_1'(\boldsymbol{\mathsf{T}}'\boldsymbol{\mathsf{W}}\boldsymbol{\mathsf{T}})^{-1}\boldsymbol{\mathsf{T}}'\boldsymbol{\mathsf{W}}\boldsymbol{S} = \sum_{j = 1}^{n} W_j^p(t)S_j, \end{equation}

where $\boldsymbol {e}_k\in \mathbb {R}^{p+1}$ is a vector having $1$ in the $k$th entry and zero elsewhere and $W_j^p(t) = \boldsymbol {e}_1'(\boldsymbol{\mathsf{T}}'\boldsymbol{\mathsf{W}}\boldsymbol{\mathsf{T}})^{-1}\boldsymbol{\mathsf{T}}'\boldsymbol{\mathsf{W}}\boldsymbol {e}_j$.
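As an illustration of the matrix form above, the following sketch evaluates the weighted least squares estimate at a single time $t$ and returns the estimates of $m(t)$ and of its first $p$ derivatives, using $\beta_i = m^{(i)}(t)/i!$ from the Taylor expansion. The Epanechnikov kernel and the function names `epanechnikov` and `lpr` are assumptions of the sketch.

```python
import math
import numpy as np

def epanechnikov(u):
    """Epanechnikov kernel K(u) = 0.75 (1 - u^2) on |u| <= 1, zero elsewhere."""
    return 0.75 * np.clip(1.0 - u**2, 0.0, None)

def lpr(t, T, S, h, p=1):
    """Local polynomial estimates of m(t), m'(t), ..., m^(p)(t)."""
    d = T - t
    X = np.vander(d, N=p + 1, increasing=True)  # design matrix, columns d^0 .. d^p
    w = epanechnikov(d / h) / h                 # diagonal of W, K_h(T_j - t)
    XtW = X.T * w                               # T' W without forming the n x n matrix
    beta = np.linalg.solve(XtW @ X, XtW @ S)    # weighted least squares solution
    return np.array([math.factorial(q) * beta[q] for q in range(p + 1)])

# Noise-free quadratic signal: a local quadratic fit reproduces it exactly.
T = np.linspace(0.0, 1.0, 101)
S = 1.0 + 2.0 * T + 3.0 * T**2
est = lpr(0.5, T, S, h=0.2, p=2)  # estimates of m, m' and m'' at t = 0.5
```

`np.linalg.solve` fails when too few samples fall inside the kernel support, which mirrors the invertibility assumption on $\boldsymbol{\mathsf{T}}'\boldsymbol{\mathsf{W}}\boldsymbol{\mathsf{T}}$ stated above.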

The critical element that determines the degree of smoothing in LPR is the size of the local neighbourhood. The selection of $h$ determines the accuracy of the estimation of the regression function: too large values lead to a large bias in the regression, while too small values increase the variance. Its choice therefore results from a trade-off between the variance and the estimation bias of the regression function. There are several methods to select the bandwidth to be used for LPR. The bandwidth can be either global, that is, common to the entire domain and optimal over the whole range of data, or local, that is, dependent on the covariate and therefore optimal at each point of the regression function estimation. The latter would allow for more flexibility in estimating inhomogeneous regression functions. However, in the proposed framework, a global bandwidth was chosen due to its simplicity and, more importantly, its reduced computational cost. In the presented approach, the global bandwidth is chosen using leave-one-out cross-validation. Specifically, this parameter corresponds to the one that minimizes the following expression:

\begin{equation} \sum_{j = 1}^{n} (S_j - \hat{m}_{h, -j}(T_j))^2. \end{equation}

Here $\hat {m}_{h,-j}(T_j)$ denotes the estimate of the regression function at $T_j$ obtained with bandwidth $h$ while excluding the $j$th observation. For further information regarding the optimal bandwidth selection, see Wand & Jones (Reference Wand and Jones1994) and Fan & Gijbels (Reference Fan and Gijbels1996). Additionally, there exist other methodologies that utilize machine-learning techniques to select the local bandwidth such as in Giordano & Parrella (Reference Giordano and Parrella2008) where neural networks are used.
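The leave-one-out criterion can be sketched as a search over a small grid of candidate bandwidths. The sketch assumes a local linear fit ($p = 1$) with an Epanechnikov kernel. The candidate grid, the synthetic noisy signal and the names `local_linear` and `loocv_score` are hypothetical.

```python
import numpy as np

def local_linear(t, T, S, h):
    """Local linear estimate of m(t) with Epanechnikov weights."""
    d = T - t
    w = 0.75 * np.clip(1.0 - (d / h) ** 2, 0.0, None) / h
    X = np.column_stack([np.ones_like(d), d])
    sw = np.sqrt(w)
    # Least squares on the sqrt-weighted system; lstsq tolerates windows
    # that contain too few samples for a unique solution.
    beta = np.linalg.lstsq(X * sw[:, None], S * sw, rcond=None)[0]
    return beta[0]

def loocv_score(T, S, h):
    """Sum of squared leave-one-out prediction errors for bandwidth h."""
    idx = np.arange(len(T))
    res = [S[j] - local_linear(T[j], T[idx != j], S[idx != j], h) for j in idx]
    return float(np.sum(np.square(res)))

# Synthetic sensor signal (hypothetical): sine wave plus Gaussian noise.
rng = np.random.default_rng(0)
T = np.linspace(0.0, 1.0, 80)
S = np.sin(2.0 * np.pi * T) + 0.2 * rng.standard_normal(T.size)

candidates = np.array([0.02, 0.05, 0.1, 0.2, 0.4])
scores = np.array([loocv_score(T, S, h) for h in candidates])
h_best = candidates[np.argmin(scores)]  # global bandwidth selected by LOOCV
```

The cost of this brute-force search grows with the number of samples times the number of candidates, which is one reason a single global bandwidth is cheaper than a pointwise selection.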

Once the smoothing parameter has been set, it remains to choose the weighting function and the degree of the local polynomial, although these two have a minor influence on the performance of the LPR estimation. A common choice for the first is the Epanechnikov kernel. As for the degree of the local polynomial, the variability of the estimate generally increases with the order; to estimate $m^{(q)}(t)$, the lowest order with $p - q$ odd is therefore recommended, i.e. $p = q + 1$ or occasionally $p = q + 3$ (Fan & Gijbels Reference Fan and Gijbels1996).

It is also worth noting that due to asymmetric estimation within the control algorithm, boundary effects arise, leading to a bias in the estimation at the edge. These effects, discussed in more detail in Fan & Gijbels (Reference Fan and Gijbels1996), disappear when using local linear regression ($p = 1$).

2.1.4. Hyperparameter automatic tuning with BO

Model predictive control relies on a specific set of hyperparameters to precisely define the cost function in (2.2) for the selection of the optimal action over the control window. A new functional, based on the global performance of the control, is defined. Bayesian optimization is employed to find the hyperparameter vector that maximizes the control performance. By adopting this approach, a more efficient and effective MPC-based control framework may be achieved. The hyperparameters are indeed adapted to different conditions of measurement noise and/or model uncertainty by the BO process. This proposed framework is practically independent of the user.

The parameters under consideration include (i) the components of the weight matrices, as presented in (2.3), which penalize errors of the state vector with respect to the control target trajectories, input cost and input time variability; and (ii) the length of the control/prediction windows, assumed equal for simplicity. All aforementioned control parameters are included in a single vector denoted as $\boldsymbol {\eta } \in \mathbb {R}^{N_{\boldsymbol {\eta }}}$, where $N_{\boldsymbol {\eta }} = 2 N_b + N_c + 1$. A further reduction in the number of control parameters can also be considered by imposing that the components of $\boldsymbol{\mathsf{R}}_b$ and $\boldsymbol{\mathsf{R}}_{\Delta b}$ related to the rear cylinders of the fluidic pinball are identical under a flow symmetry argument, as in Bieker et al. (Reference Bieker, Peitz, Brunton, Kutz and Dellnitz2020). In this case, ${R}_{b^2} = {R}_{b^3} = {R}_{b^{2,3}}$ and ${R}_{\Delta b^2} = {R}_{\Delta b^3} = {R}_{\Delta b^{2,3}}$, and the total number of parameters is reduced to $N_{\boldsymbol {\eta }} = 2(N_b-1) + N_c + 1$. Section 3 also provides several results that justify this choice.

Note that control results depend on the selection of the vector $\boldsymbol {\eta }$. Consequently, an offline optimization process can be implemented to select the optimal value of $\boldsymbol {\eta }$, which maximizes the control performance. Consider using a specific realization of the hyperparameter vector $\boldsymbol {\eta }$ to control the system. The state vector measure is indirectly dependent on the choice of hyperparameter vector and is denoted here by $\tilde {\boldsymbol {s}}(\boldsymbol {\eta })$. By running the control on a discrete-time vector of $n_{BO}$ time steps, the cost function can be defined as

\begin{equation} \mathcal{J}_{BO}(\boldsymbol{\eta}) = \frac{1}{n_{BO}} \sum_{k=1}^{N_c}\sum_{j=1}^{n_{BO}}\left(\tilde{s}^k_j(\boldsymbol{\eta}) - c^k_*\right)^2. \end{equation}
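For concreteness, the cost above can be evaluated on a recorded closed-loop run as in the following sketch. The array layout (time steps along rows, controlled variables along columns), the function name `j_bo` and the numbers are assumptions made for illustration only.

```python
import numpy as np

def j_bo(s_tilde, c_star):
    """Time-averaged squared tracking error summed over the controlled variables.

    s_tilde: array of shape (n_BO, N_c), measured or LPR-smoothed controlled variables
    c_star:  array of shape (N_c,), constant targets
    """
    s_tilde = np.asarray(s_tilde, dtype=float)
    err = s_tilde - np.asarray(c_star, dtype=float)
    return float(np.sum(err**2) / s_tilde.shape[0])

# Hypothetical run with n_BO = 4 time steps and N_c = 2 controlled variables.
s = np.array([[1.0, 0.0], [0.5, 0.1], [0.0, -0.1], [0.0, 0.0]])
cost = j_bo(s, c_star=[0.0, 0.0])  # (1 + 0.26 + 0.01 + 0) / 4 = 0.3175
```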

Note that the user selects the target to be optimized, specified by the cost function $\mathcal {J}_{BO}$. In addition, in the absence of measurement noise, $\tilde {\boldsymbol {s}}(\boldsymbol {\eta })$ is the ideal measure of the target feature vector. Otherwise, the LPR technique is applied to the state vector measure. In this study the parameters are optimized to minimize the quadratic error between the controlled variables and the user-defined target. Thus, the optimal hyperparameter vector can be obtained by solving the problem

\begin{equation} \min_{\boldsymbol{\eta} \in H} \mathcal{J}_{BO}(\boldsymbol{\eta}), \end{equation}

where $H \subset \mathbb {R}^{N_{\boldsymbol {\eta }}}$ is the search domain. Solving the optimization in (2.19) is computationally costly due to the expensive sampling of the black-box cost function $\mathcal {J}_{BO}$. Each sample of $\mathcal {J}_{BO}$ is obtained via application of the MPC over $n_{BO}$ time steps. In this framework, BO serves as an efficient method to address this problem, offering a global search strategy that limits the risk of stalling in local minima and typically requires few evaluations of the cost function. Indeed, BO has been shown to be an efficient strategy particularly when $N_{\boldsymbol {\eta }} \leq 20$ and the search domain $H$ is a hyper-rectangle, that is, $H = \{\boldsymbol {\eta }\in \mathbb {R}^{N_{\boldsymbol {\eta }}}| \eta ^i \in [\eta ^i_{min},\eta ^i_{max}]\subset \mathbb {R}, \eta ^i_{min}<\eta ^i_{max},i = 1, \ldots, N_{\boldsymbol {\eta }} \}$, where $\boldsymbol {\eta }_{min}$ and $\boldsymbol {\eta }_{max}$ are the lower and upper bound vectors of the hyper-rectangle, respectively.

In order to find the minimum of the unknown objective function, BO employs an iterative approach, as shown in the MPC-tuning step of algorithm 1. It utilizes a probabilistic model, typically a Gaussian process (GP), to estimate the behaviour of the objective function. At each iteration, the probabilistic model incorporates available data points to make predictions about the function behaviour at unexplored points in the search space. Simultaneously, an acquisition process guides the sampling by suggesting the most promising locations in which to look for the minimum point. A specific function (called the acquisition function) is set to balance exploration, by directing attention to less-explored areas of the search domain, and exploitation, by concentrating on regions near potential minimum points. Finally, the BO iterations continue until a stopping criterion is met, such as reaching a maximum number of iterations or achieving convergence in the search for the minimum. Denoting the selected acquisition function as $\alpha (\boldsymbol {\eta })$, the next point to sample in the iterative approach, $\boldsymbol {\eta }^+$, can be obtained by solving

\begin{equation} \boldsymbol{\eta}^+ = \underset{\boldsymbol{\eta} \in H}{\text{arg max}}\; \alpha(\boldsymbol{\eta}). \end{equation}

The expected improvement is used as an acquisition function (Snoek, Larochelle & Adams Reference Snoek, Larochelle and Adams2012), which evaluates the potential improvement over the current best solution.
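As an illustration, the expected improvement for a minimization problem has a closed form when the surrogate prediction at a candidate point is Gaussian with mean $\mu$ and standard deviation $\sigma$. The sketch below assumes this standard closed form. The function name `expected_improvement` and the argument `j_best` (the lowest cost observed so far) are hypothetical.

```python
import math

def expected_improvement(mu, sigma, j_best):
    """Expected improvement over j_best for a Gaussian prediction N(mu, sigma^2)."""
    if sigma <= 0.0:
        # No predictive uncertainty: the improvement is deterministic.
        return max(j_best - mu, 0.0)
    z = (j_best - mu) / sigma
    pdf = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)
    cdf = 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))
    return (j_best - mu) * cdf + sigma * pdf

# A candidate predicted to be better than the incumbent scores higher
# than one predicted to be worse, for the same uncertainty.
ei_promising = expected_improvement(mu=0.5, sigma=1.0, j_best=1.0)
ei_unpromising = expected_improvement(mu=1.5, sigma=1.0, j_best=1.0)
```

The first term rewards candidates whose predicted cost lies below the incumbent (exploitation), while the second grows with the predictive uncertainty (exploration).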

The subsequent discussion will centre on the probabilistic model utilized in BO. Specifically, a prior distribution, which corresponds to a multivariate Gaussian distribution, is employed. Consider the situation where problem (2.19) is tackled using BO. A GP regression is required for the functional $\mathcal {J}_{BO}$ based on the available observations of the function up to the given iteration.

Since $\mathcal {J}_{BO}$ is modelled as a GP, for any collection of $D$ points, included in $\boldsymbol {\varXi } = (\boldsymbol {\eta }_1, \ldots, \boldsymbol {\eta }_D)'$, the vector of function samplings at these points, denoted as $\boldsymbol {J} = (\mathcal {J}_{BO}(\boldsymbol {\eta }_1),\ldots,\mathcal {J}_{BO}(\boldsymbol {\eta }_D))'$, is multivariate Gaussian distributed:

\begin{equation} \boldsymbol{J} \sim \mathcal{N}(\boldsymbol{\mu}, \boldsymbol{\mathsf{K}}), \end{equation}

where $\boldsymbol {\mu } = (\mu (\boldsymbol {\eta }_1),\ldots, \mu (\boldsymbol {\eta }_D))'$ is the mean vector and $\boldsymbol{\mathsf{K}}$ the covariance matrix whose $ij$th component is $K_{ij} = k(\boldsymbol {\eta }_i,\boldsymbol {\eta }_j)$, with $\mu$ a mean function and $k$ a positive definite kernel function. For simplicity, the mean function is assumed to be null.

In order to make a prediction of the value of the unknown function at a new point of interest $\boldsymbol {\eta }_*$, denoted as $\mathcal {J}_{BO_*}$, conditioned on the values of the function already observed and included in the vector $\boldsymbol {J}$, the joint multivariate Gaussian distribution can be considered:

\begin{equation} \begin{pmatrix} \boldsymbol{J} \\ \mathcal{J}_{BO_*} \end{pmatrix} \sim \mathcal{N}\left(\boldsymbol{0}, \begin{pmatrix} \boldsymbol{\mathsf{K}} & \boldsymbol{k}_* \\ \boldsymbol{k}_*' & k_{**} \end{pmatrix}\right). \end{equation}

Here $k_{**} = k(\boldsymbol {\eta }_*,\boldsymbol {\eta }_*)$ and the vector $\boldsymbol {k}_* = (k(\boldsymbol {\eta }_1, \boldsymbol {\eta }_*), \ldots,k(\boldsymbol {\eta }_D, \boldsymbol {\eta }_*))'$. This equation describes how the samples $\boldsymbol {J}$ at the locations $\boldsymbol {\varXi }$ correlate with the sample of interest $\mathcal {J}_{BO_*}$, whose conditional distribution is

\begin{equation} \mathcal{J}_{BO_*} \mid \boldsymbol{J} \sim \mathcal{N}(\mu_*, \sigma_*^2), \end{equation}

with mean $\mu _* = \boldsymbol {k}'_*\boldsymbol{\mathsf{K}}^{-1}\boldsymbol {J}$ and variance $\sigma _*^2 = k_{**} - \boldsymbol {k}'_*\boldsymbol{\mathsf{K}}^{-1}\boldsymbol {k}_*$. Equation (2.23) provides the posterior distribution of the unknown function at the new point where the sample has to be performed. It thus furnishes the surrogate model used to describe the function $\mathcal {J}_{BO}$ in the domain for the search of the minimum point.
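The posterior mean and variance of a zero-mean GP can be computed directly from the kernel evaluations, as in the sketch below. The squared-exponential kernel with unit amplitude, the small diagonal jitter added for numerical stability and the names `sq_exp_kernel` and `gp_posterior` are assumptions of the sketch; the text above does not prescribe a specific kernel.

```python
import numpy as np

def sq_exp_kernel(X1, X2, length=1.0):
    """Squared-exponential kernel between the rows of X1 and X2 (assumed choice)."""
    d2 = np.sum((X1[:, None, :] - X2[None, :, :]) ** 2, axis=-1)
    return np.exp(-0.5 * d2 / length**2)

def gp_posterior(Eta, J, eta_star, length=1.0, jitter=1e-10):
    """Posterior mean and variance of a zero-mean GP at the point eta_star."""
    K = sq_exp_kernel(Eta, Eta, length) + jitter * np.eye(len(Eta))
    k_star = sq_exp_kernel(Eta, eta_star[None, :], length)[:, 0]
    k_ss = sq_exp_kernel(eta_star[None, :], eta_star[None, :], length)[0, 0]
    mu = k_star @ np.linalg.solve(K, J)               # k*' K^{-1} J
    var = k_ss - k_star @ np.linalg.solve(K, k_star)  # k** - k*' K^{-1} k*
    return float(mu), float(max(var, 0.0))

# Three hypothetical evaluations of the cost in a one-dimensional search domain.
Eta = np.array([[0.0], [1.0], [2.0]])
J = np.array([1.0, 0.5, 2.0])

mu_obs, var_obs = gp_posterior(Eta, J, np.array([1.0]))   # at a sampled point
mu_far, var_far = gp_posterior(Eta, J, np.array([50.0]))  # far from all samples
```

At a sampled point the surrogate reproduces the observation with vanishing variance, whereas far from the data it reverts to the zero prior mean with the prior variance. This contrast is what the acquisition function exploits.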

2.2. The fluidic pinball

The proposed framework is tested on the control of the two-dimensional viscous incompressible flow around a three-cylinder configuration, commonly referred to as a fluidic pinball (Pastur et al. Reference Pastur, Deng, Morzyński and Noack2019; Deng et al. Reference Deng, Noack, Morzyński and Pastur2020, Reference Deng, Noack, Morzyński and Pastur2022). The fluidic pinball was chosen because it represents a suitable multiple-input–multiple-output system benchmark for flow controllers.

A representation of the fluidic pinball can be seen in figure 3. The three cylinders have identical diameters $D = 2R$, and their geometric centres are placed at the vertices of an equilateral triangle of side $3R$. The centres are symmetrically positioned with respect to the direction of the main flow. The leftmost vertex of the triangle points upstream while the rightmost side is orthogonal to the flow direction. The free stream has a constant velocity equal to $U_\infty$. The cylinders of the fluidic pinball, denoted here with $1$ (front), $2$ (top) and $3$ (bottom), can rotate independently around their axes (orthogonal to the plane of the flow) with tangential velocity $b^1$, $b^2$ and $b^3$, respectively.

Figure 3. Domain of the incompressible two-dimensional DNS of the flow around the fluidic pinball. Front, top and bottom cylinders are labelled as $1$, $2$ and $3$, respectively. The rotational velocities of the cylinders are $b^1$, $b^2$ and $b^3$. The arrows indicate positive (counterclockwise) rotations. The background shows the $8633$-node grid used for the DNS. The contour colours indicate the out-of-plane vorticity.

The dynamics of the wake past the fluidic pinball is obtained through a two-dimensional direct numerical simulation (DNS) with the code developed by Noack & Morzyński (Reference Noack and Morzyński2017). The flow is described in a Cartesian reference system in which the $x$ and $y$ axes are in the streamwise and crosswise directions, respectively. The origin of the reference system coincides with the mid-point between the two rightmost (top and bottom) cylinders, and the computational domain $\varOmega$ is bounded by $(-5D, 20D) \times (-5D, 5D)$. The position in the reference system is then described by the vector $\boldsymbol {x} = (x, y) = x \boldsymbol {e_1} + y \boldsymbol {e_2}$, where $\boldsymbol {e_1}$ and $\boldsymbol {e_2}$ are the unit vectors in the directions of the $x$ and $y$ axes, respectively, and the velocity vector is $\boldsymbol {u} = (u, v)$. The constant density is denoted by $\rho$ and the kinematic viscosity of the fluid by $\nu$. All quantities used in the discussion are assumed non-dimensionalized with cylinder diameter, free-stream velocity and fluid density. The Reynolds number is defined as ${\textit {Re}}_D = {U_\infty D}/{\nu }$. A value of ${\textit {Re}}_D = 150$ is adopted, which is sufficiently large to ensure chaotic, although still laminar, behaviour. The two-dimensional solver has already been used in previous work at this same ${\textit {Re}}_D$ (Wang et al. Reference Wang, Deng, Cornejo Maceda and Noack2023). The boundary conditions comprise a far-field condition ($\boldsymbol {u} = U_\infty \boldsymbol {e_1}$) on the upper and lower edges, a stress-free condition on the outflow edge and a no-slip condition on the cylinder walls, which in the absence of forcing becomes $\boldsymbol {u} = 0$.
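The geometry and non-dimensionalization described above can be illustrated with a minimal sketch. It assumes the origin and axis conventions stated in the text; the function names `pinball_centres` and `kinematic_viscosity` are hypothetical helpers introduced here for illustration and are not part of the DNS code.

```python
import math

def pinball_centres(D=1.0):
    """Cylinder centres for an equilateral triangle of side 3R (R = D/2).

    Origin: mid-point between the top and bottom (rightmost) cylinders,
    with x streamwise and y crosswise, as stated in the text.
    """
    R = D / 2.0
    side = 3.0 * R
    height = side * math.sqrt(3.0) / 2.0  # front vertex to rear side
    return {
        1: (-height, 0.0),      # front cylinder (points upstream)
        2: (0.0, side / 2.0),   # top cylinder
        3: (0.0, -side / 2.0),  # bottom cylinder
    }

def kinematic_viscosity(U_inf=1.0, D=1.0, Re_D=150.0):
    """Viscosity implied by Re_D = U_inf * D / nu."""
    return U_inf * D / Re_D

centres = pinball_centres()   # non-dimensional units, D = 1
nu = kinematic_viscosity()    # equals 1/Re_D when U_inf = D = 1
```

With $D = 1$ the three centres are mutually $1.5$ apart and lie well inside the domain $(-5D, 20D) \times (-5D, 5D)$.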

The DNS allows forcing by independent rotation of the cylinders. For this purpose, a tangential velocity of magnitude $|b^i|$ is imposed at the cylinder surface. Positive values of $b^i$ are associated with counterclockwise rotations of the cylinders. In the remainder of the paper, a reference time scale is set as the convective unit ($c.u.$), i.e. the time scale based on the free-stream velocity and the cylinder diameter. The lift coefficient is defined as $C_l = {2F_l}/({\rho U_\infty ^2 D})$, where $F_l$ is the total lift force applied to the cylinders in the direction of the $y$ axis. The same scaling is applied to $F_d$, the total force applied to the cylinders in the direction of the $x$ axis, to obtain the total drag coefficient $C_d$. The DNS uses a grid of 8633 vertices and 4225 triangles as a compromise between accuracy and computational speed. A preliminary grid convergence study at ${\textit {Re}}_D=150$ identified this as sufficient resolution for errors of up to $3\,\%$ in the free case and ${\approx }4\,\%$ in the actuated case in terms of drag and lift.
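The scalings introduced in this paragraph can be written out as a short sketch. The helper names are hypothetical, the forces are taken per unit span (as appropriate for a two-dimensional simulation), and the numerical force values in the usage lines are illustrative placeholders, not results from the DNS.

```python
def force_coefficient(force, rho=1.0, U_inf=1.0, D=1.0):
    """C = 2 F / (rho * U_inf^2 * D), used for both C_l and C_d."""
    return 2.0 * force / (rho * U_inf**2 * D)

def convective_unit(U_inf=1.0, D=1.0):
    """Reference time scale D / U_inf (one c.u.)."""
    return D / U_inf

def angular_velocity(b, D=1.0):
    """Angular velocity giving surface tangential velocity b (omega = b / R).

    Positive b corresponds to counterclockwise rotation.
    """
    return b / (D / 2.0)

# Illustrative placeholder forces (not simulation output).
C_d = force_coefficient(1.5)    # total drag coefficient
C_l = force_coefficient(-0.1)   # total lift coefficient
```

In the non-dimensional setting used in the paper ($\rho = U_\infty = D = 1$) the coefficients reduce to twice the corresponding force and one convective unit equals one time unit.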

2.3. The control approach

The aim of the control is to achieve a reduction in the overall drag coefficient of the fluidic pinball, while also controlling the lift coefficient so that the latter has reduced oscillations with a zero average value. The vector of target features is therefore composed only of the total lift and drag coefficients, $\boldsymbol {c} = (C_d, C_l)'$. The target vector is thus composed of null components $\boldsymbol {c_*} = (0,0)'$. Since the cost function in (2.2) is quadratic, a null target vector will penalize both mean values of $C_l$ and $C_d$ and their oscillations.
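The last statement can be made explicit with a brief worked identity. This is a sketch that assumes the cost penalizes the time-averaged squared deviation from the target and ignores any weights appearing in (2.2); the overbar (time average) and tilde (fluctuation) notation is introduced here for illustration only. Writing each coefficient as $C = \overline {C} + \tilde {C}$ with $\overline {\tilde {C}} = 0$, the penalized quantity for a null target splits as

$$\overline {(C - 0)^2} = \overline {C}^{\,2} + 2\,\overline {C}\,\overline {\tilde {C}} + \overline {\tilde {C}^2} = \overline {C}^{\,2} + \overline {\tilde {C}^2},$$

so that lowering it requires reducing both the mean level and the variance of the oscillations, for $C_d$ and $C_l$ alike.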

To this purpose, the tangential velocities of the three cylinders are tuned respecting the implementation limits, here chosen equal to $b^i \in [-1, 1]$ and $\Delta b^i \in [-4, 4]$, for all $i = 1,2,3$. The model plant has a state vector composed of $C_d$ and $C_l$ and their time derivatives, $\dot {C_d}$ and $\dot {C_l}$, respectively (i.e. $\boldsymbol {a} = (C_d, C_l, \dot {C_d}, \dot {C_l})'$). A state vector based solely on global variables does not require adding intrusive probes for flow estimation. Similar state vector choices that incorporate aerodynamic force coefficients and temporal derivatives are made in Nair et al. (Reference Nair, Yeh, Kaiser, Noack, Brunton and Taira2019) and Loiseau, Noack & Brunton (Reference Loiseau, Noack and Brunton2018). While this approach is often effective in separated flows, it might be challenged at higher ${\textit {Re}}$ and may be infeasible in other flow configurations.

Feedback to the control involves measurement of drag and lift forces, so at each time instant $t_j$ we take $\boldsymbol {s}_j = \boldsymbol {c}_j$. This approach also facilitates a reduction in the complexity of the predictive model, thereby speeding up the control process. Indeed, a compact state vector is particularly desirable to reduce the computational cost of the iterative optimization problem over receding horizons of MPC.

In the present work, noise in lift and drag measurement is also considered. Under this assumption, the model in (2.10) is applied. The noise intensity $\sigma$ is assumed to be constant over time and given as a percentage of the full-scale measured drag and lift coefficients in conditions without actuation. Therefore, in the case of measurement noise in the sensors, the response variable to which the LPR is applied corresponds to the measurements of the drag and lift coefficients of the fluidic pinball. The LPR provides estimates not only of the regression function of the sensor outputs but also of its time derivatives, allowing us to use this information as control feedback.
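How a local polynomial fit yields both a smoothed value and a time derivative from noisy samples can be sketched as follows. This is a generic illustration rather than the implementation used in the paper: the Epanechnikov kernel, the polynomial degree, the bandwidth and the function name `local_poly_estimate` are all assumptions, and the synthetic sine signal merely stands in for a measured force coefficient.

```python
import numpy as np

def local_poly_estimate(t, y, t0, bandwidth, degree=2):
    """Kernel-weighted polynomial fit centred at t0.

    Returns (value, first derivative) of the fitted polynomial at t0.
    """
    t = np.asarray(t, dtype=float)
    y = np.asarray(y, dtype=float)
    u = (t - t0) / bandwidth
    # Epanechnikov kernel (assumed choice): zero weight outside |u| < 1.
    w = np.where(np.abs(u) < 1.0, 0.75 * (1.0 - u**2), 0.0)
    # Design matrix with columns 1, (t - t0), (t - t0)^2, ...
    X = np.vander(t - t0, N=degree + 1, increasing=True)
    sw = np.sqrt(w)
    # Weighted least squares via row scaling by sqrt(weights).
    beta, *_ = np.linalg.lstsq(X * sw[:, None], y * sw, rcond=None)
    return beta[0], beta[1]

# Synthetic noisy signal standing in for a measured coefficient.
rng = np.random.default_rng(0)
t = np.arange(0.0, 10.0, 0.05)
noisy = np.sin(t) + 0.05 * rng.standard_normal(t.size)

# Causal use: estimate at the latest sample from past data only.
value, slope = local_poly_estimate(t, noisy, t0=t[-1], bandwidth=2.0)
```

Evaluating the fit at the most recent sample uses only past data, which mirrors the situation of a controller receiving sensor readings in real time; the intercept and the linear coefficient of the local fit then play the roles of the coefficient and its time derivative in the state vector.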

The MPC-optimization problem is solved every control time step (i.e. every $T_s$) to update the exogenous control input. During the time between two consecutive samples, the control input to the system remains constant. Thus, the sampling time step should be chosen small enough to ensure a good closed-loop performance, but not so small as to incur an excessive computational cost. In this work, it is set at $T_s = 0.5\, c.u.$, i.e. sufficiently small compared with the shedding period of the fluidic pinball wake (denoted as $T_{sh}$) and not so large as to affect control performance. The reason why $T_s$ was not included in the control tuning concerns the difficulty of defining the search domain for realistic applications. Imposing bounds on this parameter requires an estimate of the computational cost of the MPC, and this procedure is postponed to future experimental applications. The method used to optimize the control action in the MPC framework is sequential quadratic programming with constraints. The optimization was carried out with a built-in function of MATLAB. The stopping criteria are set at a maximum number of iterations of $500$ and a step tolerance of