1. Introduction

We consider dynamical systems whose state ![]() $\pmb {x}$ evolves over a state space

$\pmb {x}$ evolves over a state space ![]() $\varOmega \subseteq \mathbb {R}^d$ in discrete time steps according to a function

$\varOmega \subseteq \mathbb {R}^d$ in discrete time steps according to a function ![]() $F:\varOmega \rightarrow \varOmega$. That is,

$F:\varOmega \rightarrow \varOmega$. That is,

for an initial state ![]() $\pmb {x}_0$. At the

$\pmb {x}_0$. At the ![]() $n$th time step the system is in state

$n$th time step the system is in state ![]() $\pmb {x}_{n}$, and such a dynamical system forms a trajectory of iterates

$\pmb {x}_{n}$, and such a dynamical system forms a trajectory of iterates ![]() $\pmb {x}_0, \pmb {x}_1, \pmb {x}_2,\ldots$ in

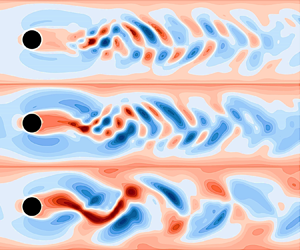

$\pmb {x}_0, \pmb {x}_1, \pmb {x}_2,\ldots$ in ![]() $\varOmega$. We consider the discrete-time setting since we are interested in analysing data collected from experiments and numerical simulations, where we cannot practically obtain a continuum of data. The dynamical system in (1.1) could, for example, be experimental fluid flow measured in a particle image velocimetry (PIV) window over a grid of discrete spatial points (see § 7 for an example). In that case, the function

$\varOmega$. We consider the discrete-time setting since we are interested in analysing data collected from experiments and numerical simulations, where we cannot practically obtain a continuum of data. The dynamical system in (1.1) could, for example, be experimental fluid flow measured in a particle image velocimetry (PIV) window over a grid of discrete spatial points (see § 7 for an example). In that case, the function ![]() $F$ corresponds to evolving the flow one time step forward and can, of course, be nonlinear.

$F$ corresponds to evolving the flow one time step forward and can, of course, be nonlinear.

Koopman operators (Koopman Reference Koopman1931; Koopman & von Neumann Reference Koopman and von Neumann1932) provide a ‘linearisation’ of nonlinear dynamical systems (1.1) (Mezić Reference Mezić2021). Sparked by Mezić (Reference Mezić2005) and Mezić & Banaszuk (Reference Mezić and Banaszuk2004), recent attention has shifted to using Koopman operators in data-driven methods for studying dynamical systems from trajectory data (Brunton et al. Reference Brunton, Budišić, Kaiser and Kutz2022). The ensuing explosion in popularity, dubbed ‘Koopmanism’ (Budišić, Mohr & Mezić Reference Budišić, Mohr and Mezić2012), includes thousands of articles over the last decade. The Koopman operator for the system (1.1), ![]() $\mathcal {K}$, is defined by its action on ‘observables’ or functions

$\mathcal {K}$, is defined by its action on ‘observables’ or functions ![]() $g:\varOmega \rightarrow \mathbb {C}$, via the composition formula

$g:\varOmega \rightarrow \mathbb {C}$, via the composition formula

where ![]() $\mathcal {D}(\mathcal {K})$ is a suitable domain of observables. Note that observables typically differ from the full state of the system in that they are only a given measure of the system. For example, an observable

$\mathcal {D}(\mathcal {K})$ is a suitable domain of observables. Note that observables typically differ from the full state of the system in that they are only a given measure of the system. For example, an observable ![]() $g(\pmb {x})$ could be the PIV-measured horizontal fluid velocity at a single point, whereas

$g(\pmb {x})$ could be the PIV-measured horizontal fluid velocity at a single point, whereas ![]() $\pmb {x}$ could correspond to the velocity values at all spatial grid points at a given time. When applied to a state of the system

$\pmb {x}$ could correspond to the velocity values at all spatial grid points at a given time. When applied to a state of the system

Thus, ![]() $\mathcal {K}$ acts on observables by advancing them one step forward in time. By considering

$\mathcal {K}$ acts on observables by advancing them one step forward in time. By considering ![]() $g_j(\pmb {x})=[\pmb {x}]_j$ for

$g_j(\pmb {x})=[\pmb {x}]_j$ for ![]() $j=1,\ldots , d$, we see that

$j=1,\ldots , d$, we see that ![]() $\mathcal {K}$ encodes the action of

$\mathcal {K}$ encodes the action of ![]() $F$ on

$F$ on ![]() $\pmb {x}_{n}$. Nevertheless, from (1.2), one can show that

$\pmb {x}_{n}$. Nevertheless, from (1.2), one can show that ![]() $\mathcal {K}$ is a linear operator, regardless of whether the dynamics are linear or nonlinear.

$\mathcal {K}$ is a linear operator, regardless of whether the dynamics are linear or nonlinear.

The Koopman operator transforms the nonlinear dynamics in the state variable ![]() $\pmb {x}$ into an equivalent linear dynamics in the observables

$\pmb {x}$ into an equivalent linear dynamics in the observables ![]() $g$. Hence, the spectral information of

$g$. Hence, the spectral information of ![]() $\mathcal {K}$ determines the behaviour of the dynamical system (1.1). For example, Koopman modes are projections of the physical field onto eigenfunctions of the Koopman operator. For fluid flows, Koopman modes provide collective motions of the fluid that are occurring at the same spatial frequency, growth or decay rate, according to an (approximate) eigenvalue of the Koopman operator. Since the vast majority of turbulent fluid interactions have nonlinear properties, this ability to transition to a linear operator is exceedingly useful. For example, obtaining linear representations for nonlinear systems has the potential to revolutionise our ability to predict and control such systems. However, there is price to pay for this linearisation –

$\mathcal {K}$ determines the behaviour of the dynamical system (1.1). For example, Koopman modes are projections of the physical field onto eigenfunctions of the Koopman operator. For fluid flows, Koopman modes provide collective motions of the fluid that are occurring at the same spatial frequency, growth or decay rate, according to an (approximate) eigenvalue of the Koopman operator. Since the vast majority of turbulent fluid interactions have nonlinear properties, this ability to transition to a linear operator is exceedingly useful. For example, obtaining linear representations for nonlinear systems has the potential to revolutionise our ability to predict and control such systems. However, there is price to pay for this linearisation – ![]() $\mathcal {K}$ acts on an infinite-dimensional space, and this is where computational difficulties arise.

$\mathcal {K}$ acts on an infinite-dimensional space, and this is where computational difficulties arise.

In the data-driven setting of this paper, we do not assume knowledge of the function ![]() $F$ in (1.1). Instead, we aim to recover the spectral properties of

$F$ in (1.1). Instead, we aim to recover the spectral properties of ![]() $\mathcal {K}$ from measured trajectory data. The leading numerical algorithm used to approximate

$\mathcal {K}$ from measured trajectory data. The leading numerical algorithm used to approximate ![]() $\mathcal {K}$ from trajectory data is dynamic mode decomposition (DMD) (Tu et al. Reference Tu, Rowley, Luchtenburg, Brunton and Kutz2014; Kutz et al. Reference Kutz, Brunton, Brunton and Proctor2016a). First introduced by Schmid (Reference Schmid2009, Reference Schmid2010) in the fluids community and connected to the Koopman operator in Rowley et al. (Reference Rowley, Mezić, Bagheri, Schlatter and Henningson2009), there are now numerous extensions and variants of DMD (Chen, Tu & Rowley Reference Chen, Tu and Rowley2012; Wynn et al. Reference Wynn, Pearson, Ganapathisubramani and Goulart2013; Jovanović, Schmid & Nichols Reference Jovanović, Schmid and Nichols2014; Kutz, Fu & Brunton Reference Kutz, Fu and Brunton2016b; Noack et al. Reference Noack, Stankiewicz, Morzyński and Schmid2016; Proctor, Brunton & Kutz Reference Proctor, Brunton and Kutz2016; Korda & Mezić Reference Korda and Mezić2018; Loiseau & Brunton Reference Loiseau and Brunton2018; Deem et al. Reference Deem, Cattafesta, Hemati, Zhang, Rowley and Mittal2020; Klus et al. Reference Klus, Nüske, Peitz, Niemann, Clementi and Schütte2020; Baddoo et al. Reference Baddoo, Herrmann, McKeon, Kutz and Brunton2021, Reference Baddoo, Herrmann, McKeon and Brunton2022; Herrmann et al. Reference Herrmann, Baddoo, Semaan, Brunton and McKeon2021; Wang & Shoele Reference Wang and Shoele2021; Colbrook Reference Colbrook2022). Of particular interest is extended DMD (EDMD) (Williams, Kevrekidis & Rowley Reference Williams, Kevrekidis and Rowley2015a), which generalised DMD to a broader class of nonlinear observables. The reader is encouraged to consult Schmid (Reference Schmid2022) for a recent overview of DMD-type methods, and Taira et al. (Reference Taira, Brunton, Dawson, Rowley, Colonius, McKeon, Schmidt, Gordeyev, Theofilis and Ukeiley2017, Reference Taira, Hemati, Brunton, Sun, Duraisamy, Bagheri, Dawson and Yeh2020) and Towne, Schmidt & Colonius (Reference Towne, Schmidt and Colonius2018) for an overview of modal-decomposition techniques in fluid flows. DMD breaks apart a high-dimensional spatio-temporal signal into a triplet of Koopman modes, scalar amplitudes and purely temporal signals. This decomposition allows the user to describe complex flow patterns by a hierarchy of simpler processes. When linearly superimposed, these simpler processes approximately recover the full flow.

$\mathcal {K}$ from trajectory data is dynamic mode decomposition (DMD) (Tu et al. Reference Tu, Rowley, Luchtenburg, Brunton and Kutz2014; Kutz et al. Reference Kutz, Brunton, Brunton and Proctor2016a). First introduced by Schmid (Reference Schmid2009, Reference Schmid2010) in the fluids community and connected to the Koopman operator in Rowley et al. (Reference Rowley, Mezić, Bagheri, Schlatter and Henningson2009), there are now numerous extensions and variants of DMD (Chen, Tu & Rowley Reference Chen, Tu and Rowley2012; Wynn et al. Reference Wynn, Pearson, Ganapathisubramani and Goulart2013; Jovanović, Schmid & Nichols Reference Jovanović, Schmid and Nichols2014; Kutz, Fu & Brunton Reference Kutz, Fu and Brunton2016b; Noack et al. Reference Noack, Stankiewicz, Morzyński and Schmid2016; Proctor, Brunton & Kutz Reference Proctor, Brunton and Kutz2016; Korda & Mezić Reference Korda and Mezić2018; Loiseau & Brunton Reference Loiseau and Brunton2018; Deem et al. Reference Deem, Cattafesta, Hemati, Zhang, Rowley and Mittal2020; Klus et al. Reference Klus, Nüske, Peitz, Niemann, Clementi and Schütte2020; Baddoo et al. Reference Baddoo, Herrmann, McKeon, Kutz and Brunton2021, Reference Baddoo, Herrmann, McKeon and Brunton2022; Herrmann et al. Reference Herrmann, Baddoo, Semaan, Brunton and McKeon2021; Wang & Shoele Reference Wang and Shoele2021; Colbrook Reference Colbrook2022). Of particular interest is extended DMD (EDMD) (Williams, Kevrekidis & Rowley Reference Williams, Kevrekidis and Rowley2015a), which generalised DMD to a broader class of nonlinear observables. The reader is encouraged to consult Schmid (Reference Schmid2022) for a recent overview of DMD-type methods, and Taira et al. (Reference Taira, Brunton, Dawson, Rowley, Colonius, McKeon, Schmidt, Gordeyev, Theofilis and Ukeiley2017, Reference Taira, Hemati, Brunton, Sun, Duraisamy, Bagheri, Dawson and Yeh2020) and Towne, Schmidt & Colonius (Reference Towne, Schmidt and Colonius2018) for an overview of modal-decomposition techniques in fluid flows. DMD breaks apart a high-dimensional spatio-temporal signal into a triplet of Koopman modes, scalar amplitudes and purely temporal signals. This decomposition allows the user to describe complex flow patterns by a hierarchy of simpler processes. When linearly superimposed, these simpler processes approximately recover the full flow.

DMD is undoubtedly a powerful data-driven algorithm that has led to rapid progress over the past decade (Grilli et al. Reference Grilli, Schmid, Hickel and Adams2012; Mezić Reference Mezić2013; Motheau, Nicoud & Poinsot Reference Motheau, Nicoud and Poinsot2014; Sarmast et al. Reference Sarmast, Dadfar, Mikkelsen, Schlatter, Ivanell, Sørensen and Henningson2014; Sayadi et al. Reference Sayadi, Schmid, Nichols and Moin2014; Subbareddy, Bartkowicz & Candler Reference Subbareddy, Bartkowicz and Candler2014; Chai, Iyer & Mahesh Reference Chai, Iyer and Mahesh2015; Brunton et al. Reference Brunton, Brunton, Proctor and Kutz2016; Hua et al. Reference Hua, Gunaratne, Talley, Gord and Roy2016; Priebe et al. Reference Priebe, Tu, Rowley and Martín2016; Pasquariello, Hickel & Adams Reference Pasquariello, Hickel and Adams2017; Page & Kerswell Reference Page and Kerswell2018, Reference Page and Kerswell2019, Reference Page and Kerswell2020; Brunton & Kutz Reference Brunton and Kutz2019; Korda, Putinar & Mezić Reference Korda, Putinar and Mezić2020). However, the infinite-dimensional nature of ![]() $\mathcal {K}$ leads to several fundamental challenges. The spectral information of infinite-dimensional operators can be far more complicated and challenging to compute than that of a finite matrix (Ben-Artzi et al. Reference Ben-Artzi, Colbrook, Hansen, Nevanlinna and Seidel2020; Colbrook Reference Colbrook2020). For example,

$\mathcal {K}$ leads to several fundamental challenges. The spectral information of infinite-dimensional operators can be far more complicated and challenging to compute than that of a finite matrix (Ben-Artzi et al. Reference Ben-Artzi, Colbrook, Hansen, Nevanlinna and Seidel2020; Colbrook Reference Colbrook2020). For example, ![]() $\mathcal {K}$ can have both discrete and continuous spectra (Mezić Reference Mezić2005). Recently, Colbrook & Townsend (Reference Colbrook and Townsend2021) introduced Residual DMD (ResDMD) aimed at tackling some of the challenges associated with infinite dimensions. The idea of ResDMD is summarised in figure 1 (see § 3). ResDMD is easily used in conjunction with existing DMD methods – it does not require additional data and is no more computationally expensive than EDMD. ResDMD addresses the challenges of:

$\mathcal {K}$ can have both discrete and continuous spectra (Mezić Reference Mezić2005). Recently, Colbrook & Townsend (Reference Colbrook and Townsend2021) introduced Residual DMD (ResDMD) aimed at tackling some of the challenges associated with infinite dimensions. The idea of ResDMD is summarised in figure 1 (see § 3). ResDMD is easily used in conjunction with existing DMD methods – it does not require additional data and is no more computationally expensive than EDMD. ResDMD addresses the challenges of:

• Spectral pollution (spurious modes). A well-known difficulty of computing spectra of infinite-dimensional operators is spectral pollution, where discretisations to a finite matrix cause the appearance of spurious eigenvalues that have nothing to do with the operator and typically have no physical meaning (Lewin & Séré Reference Lewin and Séré2010; Colbrook, Roman & Hansen Reference Colbrook, Roman and Hansen2019). DMD-type methods typically suffer from spectral pollution and can produce modes with no physical relevance to the system being investigated (Budišić et al. Reference Budišić, Mohr and Mezić2012; Williams et al. Reference Williams, Kevrekidis and Rowley2015a). It is highly desirable to have a principled way of detecting spectral pollution with as few assumptions as possible. By computing residuals associated with the spectrum with error control, ResDMD allows the computation of spectra of general Koopman operators with rigorous convergence guarantees and without spectral pollution. ResDMD verifies computations of spectral properties and provides a data-driven study of dynamical systems with error control (e.g. see Koopman modes in figure 16).

• Invariant subspaces. A finite-dimensional invariant subspace of

$\mathcal {K}$ is a space of observables

$\mathcal {K}$ is a space of observables  $\mathcal {G} = {\rm span} \{g_1,\ldots,g_k\}$ such that

$\mathcal {G} = {\rm span} \{g_1,\ldots,g_k\}$ such that  $\mathcal {K}g\in \mathcal {G}$ for all

$\mathcal {K}g\in \mathcal {G}$ for all  $g\in \mathcal {G}$. Non-trivial finite-dimensional invariant spaces need not exist (e.g. when the system is mixing), can be challenging to compute, or may not capture all of the dynamics of interest. Typically, one must settle for approximate invariant subspaces, and DMD-type methods can result in closure issues (Brunton et al. Reference Brunton, Brunton, Proctor and Kutz2016). By computing upper bounds on residuals, ResDMD provides a way of measuring how invariant a candidate subspace is. It is important to stress that ResDMD computes residuals associated with the underlying infinite-dimensional operator

$g\in \mathcal {G}$. Non-trivial finite-dimensional invariant spaces need not exist (e.g. when the system is mixing), can be challenging to compute, or may not capture all of the dynamics of interest. Typically, one must settle for approximate invariant subspaces, and DMD-type methods can result in closure issues (Brunton et al. Reference Brunton, Brunton, Proctor and Kutz2016). By computing upper bounds on residuals, ResDMD provides a way of measuring how invariant a candidate subspace is. It is important to stress that ResDMD computes residuals associated with the underlying infinite-dimensional operator  $\mathcal {K}$ (the additional matrix in figure 1 allows us to do this using finite matrices), in contrast to earlier work that computes residuals of observables with respect to a finite DMD discretisation matrix (Drmac, Mezic & Mohr Reference Drmac, Mezic and Mohr2018). In contrast to ResDMD, residuals with respect to a finite DMD discretisation of

$\mathcal {K}$ (the additional matrix in figure 1 allows us to do this using finite matrices), in contrast to earlier work that computes residuals of observables with respect to a finite DMD discretisation matrix (Drmac, Mezic & Mohr Reference Drmac, Mezic and Mohr2018). In contrast to ResDMD, residuals with respect to a finite DMD discretisation of  $\mathcal{K}$ can never be used as error bounds for spectral information of

$\mathcal{K}$ can never be used as error bounds for spectral information of  $\mathcal {K}$ and suffer from issues such as spectral pollution. Residuals computed by ResDMD also allow a natural ranking or ordering of approximated eigenvalues and Koopman modes, that can be exploited for efficient system compression (see § 8).

$\mathcal {K}$ and suffer from issues such as spectral pollution. Residuals computed by ResDMD also allow a natural ranking or ordering of approximated eigenvalues and Koopman modes, that can be exploited for efficient system compression (see § 8).• Continuous spectra. The operator

$\mathcal {K}$ can have a continuous spectrum, which is a generic feature of chaotic or turbulent flow (Basley et al. Reference Basley, Pastur, Lusseyran, Faure and Delprat2011; Arbabi & Mezić Reference Arbabi and Mezić2017b). A formidable challenge is dealing with the continuous spectrum (Mezić Reference Mezić2013; Colbrook Reference Colbrook2021; Colbrook, Horning & Townsend Reference Colbrook, Horning and Townsend2021), since discretising to a finite-dimensional operator destroys its presence. Most existing non-parametric approaches for computing continuous spectra of

$\mathcal {K}$ can have a continuous spectrum, which is a generic feature of chaotic or turbulent flow (Basley et al. Reference Basley, Pastur, Lusseyran, Faure and Delprat2011; Arbabi & Mezić Reference Arbabi and Mezić2017b). A formidable challenge is dealing with the continuous spectrum (Mezić Reference Mezić2013; Colbrook Reference Colbrook2021; Colbrook, Horning & Townsend Reference Colbrook, Horning and Townsend2021), since discretising to a finite-dimensional operator destroys its presence. Most existing non-parametric approaches for computing continuous spectra of  $\mathcal {K}$ are restricted to ergodic systems (Arbabi & Mezić Reference Arbabi and Mezić2017b; Giannakis Reference Giannakis2019; Korda et al. Reference Korda, Putinar and Mezić2020; Das, Giannakis & Slawinska Reference Das, Giannakis and Slawinska2021), as this allows relevant integrals to be computed using long-time averages. ResDMD (Colbrook & Townsend Reference Colbrook and Townsend2021) provides a general computational framework to deal with continuous spectra through smoothed approximations of spectral measures, leading to explicit and rigorous high-order convergence (see the examples in § 6). The methods deal jointly with discrete and continuous spectra, do not assume ergodicity, and can be applied to data collected from either short or long trajectories.

$\mathcal {K}$ are restricted to ergodic systems (Arbabi & Mezić Reference Arbabi and Mezić2017b; Giannakis Reference Giannakis2019; Korda et al. Reference Korda, Putinar and Mezić2020; Das, Giannakis & Slawinska Reference Das, Giannakis and Slawinska2021), as this allows relevant integrals to be computed using long-time averages. ResDMD (Colbrook & Townsend Reference Colbrook and Townsend2021) provides a general computational framework to deal with continuous spectra through smoothed approximations of spectral measures, leading to explicit and rigorous high-order convergence (see the examples in § 6). The methods deal jointly with discrete and continuous spectra, do not assume ergodicity, and can be applied to data collected from either short or long trajectories.• Nonlinearity and high-dimensional state space. For many fluid flows, e.g. turbulent phenomena, the corresponding dynamical system is strongly nonlinear and has a very large state-space dimension. ResDMD can be combined with learned dictionaries to tackle this issue. A key advantage of ResDMD compared with traditional learning methods is that one still has convergence theory and can perform a posteriori verification that the learned dictionary is suitable. In this paper, we demonstrate this for two choices of dictionary: kernelised extended dynamic mode decomposition (kEDMD) (Williams, Rowley & Kevrekidis Reference Williams, Rowley and Kevrekidis2015b) and (rank-reduced) DMD.

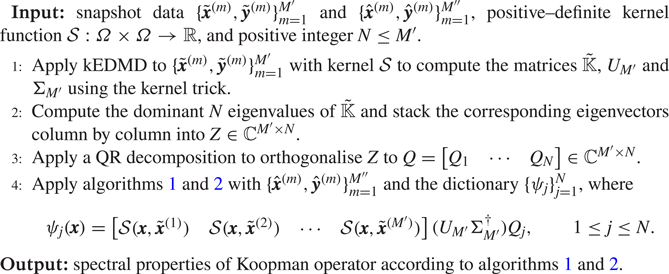

Figure 1. The basic idea of ResDMD – by introducing an additional matrix ![]() $\varPsi _Y^*W\varPsi _Y$ (compared with EDMD), we compute a residual in infinite dimensions. The matrices

$\varPsi _Y^*W\varPsi _Y$ (compared with EDMD), we compute a residual in infinite dimensions. The matrices ![]() $\varPsi _X$ and

$\varPsi _X$ and ![]() $\varPsi _Y$ are defined in (2.8a,b) and correspond to the dictionary evaluated at the snapshot data. The matrix

$\varPsi _Y$ are defined in (2.8a,b) and correspond to the dictionary evaluated at the snapshot data. The matrix ![]() $W=\mathrm {diag}(w_1,\ldots,w_M)$ is a diagonal weight matrix. The approximation of the residual becomes exact in the large data limit

$W=\mathrm {diag}(w_1,\ldots,w_M)$ is a diagonal weight matrix. The approximation of the residual becomes exact in the large data limit ![]() $M\rightarrow \infty$.

$M\rightarrow \infty$.

This paper demonstrates ResDMD in a wide range of fluid dynamic situations, at varying Reynolds numbers, from both numerical and experimental data. We discuss how the new ResDMD methods can be reliably used for turbulent flows such as aerodynamic boundary layers, and we include, for the first time, a link between the spectral measures of Koopman operators and turbulent power spectra. By windowing in the frequency domain, ResDMD avoids the problem of broadening and also allows convergence theory. We illustrate this link explicitly for experimental measurements of boundary layer turbulence. We also discuss how a new modal ordering based on the residual permits greater accuracy with a smaller DMD dictionary than traditional modal modulus ordering. This result paves the way for greater dynamic compression of large data sets without sacrificing accuracy.

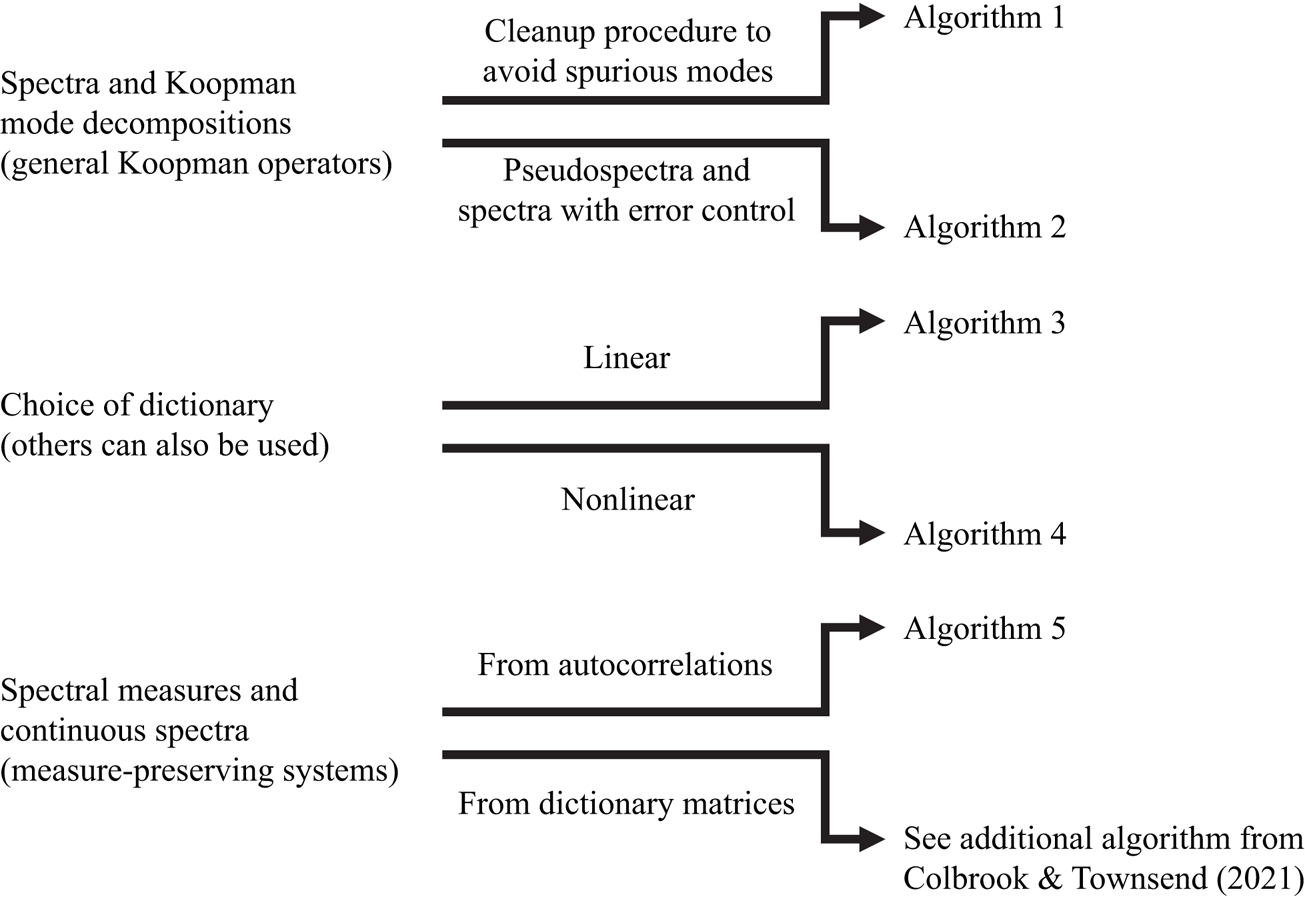

The paper is outlined as follows. In § 2, we recap EDMD, for which DMD is a special case, and interpret the algorithm as a Galerkin method. ResDMD is then introduced in § 3, where we recall and expand upon the results of Colbrook & Townsend (Reference Colbrook and Townsend2021). We stress that ResDMD does not make any assumptions about the Koopman operator or dynamical system. In § 4, we recall one of the algorithms of Colbrook & Townsend (Reference Colbrook and Townsend2021) for computing spectral measures (dealing with continuous spectra) under the assumption that the dynamical system is measure preserving, and make a new link between an algorithm for spectral measures of Koopman operators and the power spectra of signals. We then validate and apply our methods to four different flow cases. We treat flow past a cylinder (numerical data) in § 5, turbulent boundary layer flow (hot-wire experimental data) in § 6, wall-jet boundary layer flow (PIV experimental data) in § 7 and laser-induced plasma (experimental data collected with a microphone) in § 8. In each case, only the flow fields or pressure fields are used to extract relevant dynamical information. We end with a discussion and conclusions in § 9. Code for our methods can be found at https://github.com/MColbrook/Residual-Dynamic-Mode-Decomposition, and we provide a diagrammatic chart for implementation in figure 2.

Figure 2. A diagrammatic chart for the algorithms used in this paper. The computational problem is shown on the left, and the relevant algorithms on the right.

2. Recalling DMD

Here, we recall the definition of EDMD from Williams et al. (Reference Williams, Kevrekidis and Rowley2015a) and its interpretation as a Galerkin method. As a particular case of EDMD, DMD can be interpreted as producing a Galerkin approximation of the Koopman operator using linear basis functions.

2.1. Trajectory data

We assume that (1.1) is observed for ![]() $M_2$ time steps, starting at

$M_2$ time steps, starting at ![]() $M_1$ initial conditions. It is helpful to view the trajectory data as an

$M_1$ initial conditions. It is helpful to view the trajectory data as an ![]() $M_1\times M_2$ matrix

$M_1\times M_2$ matrix

\begin{equation} B_{{data}} = \begin{bmatrix} \pmb{x}_0^{(1)} & \cdots & \pmb{x}_{M_2-1}^{(1)}\\ \vdots & \ddots & \vdots \\ \pmb{x}_0^{(M_1)} & \cdots & \pmb{x}_{M_2-1}^{(M_1)}\\ \end{bmatrix}.\end{equation}

\begin{equation} B_{{data}} = \begin{bmatrix} \pmb{x}_0^{(1)} & \cdots & \pmb{x}_{M_2-1}^{(1)}\\ \vdots & \ddots & \vdots \\ \pmb{x}_0^{(M_1)} & \cdots & \pmb{x}_{M_2-1}^{(M_1)}\\ \end{bmatrix}.\end{equation}

Each row of ![]() $B_{{data}}$ corresponds to an observation of a single trajectory of the dynamical system that is witnessed for

$B_{{data}}$ corresponds to an observation of a single trajectory of the dynamical system that is witnessed for ![]() $M_2$ time steps. In particular,

$M_2$ time steps. In particular, ![]() $\pmb {x}_{i+1}^{(j)} = F(\pmb {x}_i^{(j)})$ for

$\pmb {x}_{i+1}^{(j)} = F(\pmb {x}_i^{(j)})$ for ![]() $0\leq i\leq M_2-2$ and

$0\leq i\leq M_2-2$ and ![]() $1\leq j\leq M_1$. For example, these snapshots could be measurements of unsteady velocity field across

$1\leq j\leq M_1$. For example, these snapshots could be measurements of unsteady velocity field across ![]() $M_1$ initial state configurations. One could therefore use just consider

$M_1$ initial state configurations. One could therefore use just consider ![]() $M_2=2$. One could also consider a single trajectory,

$M_2=2$. One could also consider a single trajectory, ![]() $M_1=1$, and large

$M_1=1$, and large ![]() $M_2$. The exact structure depends on the data acquisition method. In general, (2.1) could represent measurements of the velocity along

$M_2$. The exact structure depends on the data acquisition method. In general, (2.1) could represent measurements of the velocity along ![]() $M_1$ trajectories for

$M_1$ trajectories for ![]() $M_2$ time steps, a total of

$M_2$ time steps, a total of ![]() $M_1M_2$ measurements. One can also use our algorithms with data consisting of trajectories of different lengths (i.e. general snapshots).

$M_1M_2$ measurements. One can also use our algorithms with data consisting of trajectories of different lengths (i.e. general snapshots).

Letting ![]() $\{\pmb {x}^{(m)}\}_{m=1}^M$ and

$\{\pmb {x}^{(m)}\}_{m=1}^M$ and ![]() $\{\pmb {y}^{(m)}\}_{m=1}^M$ be enumerations of the first

$\{\pmb {y}^{(m)}\}_{m=1}^M$ be enumerations of the first ![]() $M_2-1$ columns and the second to final columns of

$M_2-1$ columns and the second to final columns of ![]() $B_{{data}}$, respectively, with

$B_{{data}}$, respectively, with ![]() $M=M_1(M_2-1)$, we can conveniently represent the data as a finite set of

$M=M_1(M_2-1)$, we can conveniently represent the data as a finite set of ![]() $M$ pairs of measurements of the state

$M$ pairs of measurements of the state

One could also consider measurements of certain observables ![]() $g, \{g(\pmb {x}^{(m)}),g(\pmb {y}^{(m)})\}_{m=1}^M$, and interpret the following algorithms as such. EDMD provides a way of using these measurements to approximate the operator

$g, \{g(\pmb {x}^{(m)}),g(\pmb {y}^{(m)})\}_{m=1}^M$, and interpret the following algorithms as such. EDMD provides a way of using these measurements to approximate the operator ![]() $\mathcal {K}$ via a matrix

$\mathcal {K}$ via a matrix ![]() $\mathbb {K}\in \mathbb {C}^{N\times N}$.

$\mathbb {K}\in \mathbb {C}^{N\times N}$.

2.2. Extended DMD

We work in the Hilbert space ![]() $L^2(\varOmega,\omega )$ of square-integrable observables with respect to a positive measure

$L^2(\varOmega,\omega )$ of square-integrable observables with respect to a positive measure ![]() $\omega$ on

$\omega$ on ![]() $\varOmega$. We do not assume that this measure is invariant. A common choice of

$\varOmega$. We do not assume that this measure is invariant. A common choice of ![]() $\omega$ is the standard Lebesgue measure. This choice is natural for Hamiltonian systems, for which the Koopman operator is unitary on

$\omega$ is the standard Lebesgue measure. This choice is natural for Hamiltonian systems, for which the Koopman operator is unitary on ![]() $L^2(\varOmega,\omega )$ (Arnold Reference Arnold1989). Another common choice is for

$L^2(\varOmega,\omega )$ (Arnold Reference Arnold1989). Another common choice is for ![]() $\omega$ to correspond to an ergodic measure on an attractor (Mezić Reference Mezić2005).

$\omega$ to correspond to an ergodic measure on an attractor (Mezić Reference Mezić2005).

Given a dictionary (a set of basis functions) ![]() $\{\psi _1,\ldots,\psi _{N}\}\subset \mathcal {D}(\mathcal {K})\subseteq L^2(\varOmega,\omega )$ of observables, EDMD constructs a matrix

$\{\psi _1,\ldots,\psi _{N}\}\subset \mathcal {D}(\mathcal {K})\subseteq L^2(\varOmega,\omega )$ of observables, EDMD constructs a matrix ![]() $\mathbb {K}\in \mathbb {C}^{N\times N}$ from the snapshot data (2.2) that approximates

$\mathbb {K}\in \mathbb {C}^{N\times N}$ from the snapshot data (2.2) that approximates ![]() $\mathcal {K}$ on the finite-dimensional subspace

$\mathcal {K}$ on the finite-dimensional subspace ![]() ${V}_{{N}}=\mathrm {span}\{\psi _1,\ldots,\psi _{N}\}$. The choice of the dictionary is up to the user, with some common hand-crafted choices given in Williams et al. (Reference Williams, Kevrekidis and Rowley2015a, table 1). When the state-space dimension

${V}_{{N}}=\mathrm {span}\{\psi _1,\ldots,\psi _{N}\}$. The choice of the dictionary is up to the user, with some common hand-crafted choices given in Williams et al. (Reference Williams, Kevrekidis and Rowley2015a, table 1). When the state-space dimension ![]() $d$ is large, as in this paper, it is beneficial to use a data-driven dictionary. We discuss this in § 3.3, where we present DMD (Kutz et al. Reference Kutz, Brunton, Brunton and Proctor2016a) and kEDMD (Williams et al. Reference Williams, Rowley and Kevrekidis2015b) in a unified framework.

$d$ is large, as in this paper, it is beneficial to use a data-driven dictionary. We discuss this in § 3.3, where we present DMD (Kutz et al. Reference Kutz, Brunton, Brunton and Proctor2016a) and kEDMD (Williams et al. Reference Williams, Rowley and Kevrekidis2015b) in a unified framework.

Given a dictionary of observables, we define the vector-valued observable or ‘quasimatrix’

Any new observable ![]() $g\in V_{N}$ can then be written as

$g\in V_{N}$ can then be written as ![]() $g(\pmb {x})=\sum _{j=1}^{N}\psi _j(\pmb {x})g_j=\varPsi (\pmb {x})\,\pmb {g}$ for some vector of constant coefficients

$g(\pmb {x})=\sum _{j=1}^{N}\psi _j(\pmb {x})g_j=\varPsi (\pmb {x})\,\pmb {g}$ for some vector of constant coefficients ![]() $\pmb {g}=[g_1\cdots g_N]^\top \in \mathbb {C}^{N}$. It follows from (1.2) that

$\pmb {g}=[g_1\cdots g_N]^\top \in \mathbb {C}^{N}$. It follows from (1.2) that

where

\begin{equation} R(\pmb{g},\pmb{x})=\sum_{j=1}^{N}\psi_j(F(\pmb{x}))g_j- \varPsi(\pmb{x})(\mathbb{K}\,\pmb{g}). \end{equation}

\begin{equation} R(\pmb{g},\pmb{x})=\sum_{j=1}^{N}\psi_j(F(\pmb{x}))g_j- \varPsi(\pmb{x})(\mathbb{K}\,\pmb{g}). \end{equation}

Typically, the subspace ![]() $V_{N}$ generated by the dictionary is not an invariant subspace of

$V_{N}$ generated by the dictionary is not an invariant subspace of ![]() $\mathcal {K}$, and hence there is no choice of

$\mathcal {K}$, and hence there is no choice of ![]() $\mathbb {K}$ that makes the error

$\mathbb {K}$ that makes the error ![]() $R(\pmb {g},\pmb {x})$ zero for all choices of

$R(\pmb {g},\pmb {x})$ zero for all choices of ![]() $g\in V_N$ and

$g\in V_N$ and ![]() $\pmb {x}\in \varOmega$. Instead, it is natural to select

$\pmb {x}\in \varOmega$. Instead, it is natural to select ![]() $\mathbb {K}$ as to minimise

$\mathbb {K}$ as to minimise

where ![]() $\|\pmb {g}\|$ denotes the standard Euclidean norm of a vector

$\|\pmb {g}\|$ denotes the standard Euclidean norm of a vector ![]() $\pmb {g}$. Given a finite amount of snapshot data, we cannot directly evaluate the integral in (2.6). Instead, we approximate it via a quadrature rule by treating the data points

$\pmb {g}$. Given a finite amount of snapshot data, we cannot directly evaluate the integral in (2.6). Instead, we approximate it via a quadrature rule by treating the data points ![]() $\{\pmb {x}^{(m)}\}_{m=1}^{M}$ as quadrature nodes with weights

$\{\pmb {x}^{(m)}\}_{m=1}^{M}$ as quadrature nodes with weights ![]() $\{w_m\}_{m=1}^{M}$. The discretised version of (2.6) is the following weighted least-squares problem:

$\{w_m\}_{m=1}^{M}$. The discretised version of (2.6) is the following weighted least-squares problem:

\begin{equation} \mathbb{K}\in \mathop{\mathrm{argmin}}\limits_{B\in\mathbb{C}^{N\times N}} \sum_{m=1}^{M} w_m\|\varPsi(\pmb{y}^{(m)})-\varPsi(\pmb{x}^{(m)})B\|^2. \end{equation}

\begin{equation} \mathbb{K}\in \mathop{\mathrm{argmin}}\limits_{B\in\mathbb{C}^{N\times N}} \sum_{m=1}^{M} w_m\|\varPsi(\pmb{y}^{(m)})-\varPsi(\pmb{x}^{(m)})B\|^2. \end{equation}We define the following two matrices:

\begin{equation} \varPsi_X=\begin{pmatrix} \varPsi(\pmb{x}^{(1)})\\ \vdots\\ \varPsi(\pmb{x}^{(M)}) \end{pmatrix}\in\mathbb{C}^{M\times N},\quad \varPsi_Y=\begin{pmatrix} \varPsi(\pmb{y}^{(1)})\\ \vdots \\ \varPsi(\pmb{y}^{(M)}) \end{pmatrix}\in\mathbb{C}^{M\times N},\end{equation}

\begin{equation} \varPsi_X=\begin{pmatrix} \varPsi(\pmb{x}^{(1)})\\ \vdots\\ \varPsi(\pmb{x}^{(M)}) \end{pmatrix}\in\mathbb{C}^{M\times N},\quad \varPsi_Y=\begin{pmatrix} \varPsi(\pmb{y}^{(1)})\\ \vdots \\ \varPsi(\pmb{y}^{(M)}) \end{pmatrix}\in\mathbb{C}^{M\times N},\end{equation}

and let ![]() $W=\mathrm {diag}(w_1,\ldots,w_M)$ be the diagonal weight matrix of the quadrature rule. A solution to (2.7) is

$W=\mathrm {diag}(w_1,\ldots,w_M)$ be the diagonal weight matrix of the quadrature rule. A solution to (2.7) is

where ‘![]() ${\dagger}$’ denotes the pseudoinverse. In some applications, the matrix

${\dagger}$’ denotes the pseudoinverse. In some applications, the matrix ![]() $\varPsi _X^*W\varPsi _X$ may be il -conditioned, so it is common to consider a regularisation such as a truncated singular value decomposition (SVD).

$\varPsi _X^*W\varPsi _X$ may be il -conditioned, so it is common to consider a regularisation such as a truncated singular value decomposition (SVD).

2.3. Quadrature and Galerkin methods

We can view EDMD as a Galerkin method. Note that

\begin{equation} \varPsi_X^*W\varPsi_X= \sum_{m=1}^{M} w_m \varPsi(\pmb{x}^{(m)})^* \varPsi(\pmb{x}^{(m)}),\quad \varPsi_X^*W\varPsi_Y= \sum_{m=1}^{M} w_m \varPsi(\pmb{x}^{(m)})^*\varPsi(\pmb{y}^{(m)}). \end{equation}

\begin{equation} \varPsi_X^*W\varPsi_X= \sum_{m=1}^{M} w_m \varPsi(\pmb{x}^{(m)})^* \varPsi(\pmb{x}^{(m)}),\quad \varPsi_X^*W\varPsi_Y= \sum_{m=1}^{M} w_m \varPsi(\pmb{x}^{(m)})^*\varPsi(\pmb{y}^{(m)}). \end{equation}If the quadrature approximation converges, it follows that

where ![]() $\langle {\cdot },{\cdot } \rangle$ is the inner product associated with the Hilbert space

$\langle {\cdot },{\cdot } \rangle$ is the inner product associated with the Hilbert space ![]() $L^2(\varOmega,\omega )$. Let

$L^2(\varOmega,\omega )$. Let ![]() $\mathcal {P}_{V_{N}}$ denote the orthogonal projection onto

$\mathcal {P}_{V_{N}}$ denote the orthogonal projection onto ![]() $V_{N}$. As

$V_{N}$. As ![]() $M\rightarrow \infty$, the above convergence means that

$M\rightarrow \infty$, the above convergence means that ![]() $\mathbb {K}$ approaches a matrix representation of

$\mathbb {K}$ approaches a matrix representation of ![]() $\mathcal {P}_{V_{N}}\mathcal {K}\mathcal {P}_{V_{N}}^*$. Thus, EDMD is a Galerkin method in the large data limit

$\mathcal {P}_{V_{N}}\mathcal {K}\mathcal {P}_{V_{N}}^*$. Thus, EDMD is a Galerkin method in the large data limit ![]() $M\rightarrow \infty$. There are typically three scenarios where (2.11a,b) holds:

$M\rightarrow \infty$. There are typically three scenarios where (2.11a,b) holds:

(i) Random sampling: in the initial definition of EDMD,

$\omega$ is a probability measure and

$\omega$ is a probability measure and  $\{\pmb {x}^{(m)}\}_{m=1}^M$ are drawn independently according to

$\{\pmb {x}^{(m)}\}_{m=1}^M$ are drawn independently according to  $\omega$ with the quadrature weights

$\omega$ with the quadrature weights  $w_m=1/M$. The strong law of large numbers shows that (2.11a,b) holds with probability one (Klus, Koltai & Schütte Reference Klus, Koltai and Schütte2016, § 3.4), provided that

$w_m=1/M$. The strong law of large numbers shows that (2.11a,b) holds with probability one (Klus, Koltai & Schütte Reference Klus, Koltai and Schütte2016, § 3.4), provided that  $\omega$ is not supported on a zero level set that is a linear combination of the dictionary (Korda & Mezić Reference Korda and Mezić2018, § 4). Convergence is typically at a Monte Carlo rate of

$\omega$ is not supported on a zero level set that is a linear combination of the dictionary (Korda & Mezić Reference Korda and Mezić2018, § 4). Convergence is typically at a Monte Carlo rate of  ${O}(M^{-1/2})$ (Caflisch Reference Caflisch1998). From an experimental point of view, an example of random sampling could be

${O}(M^{-1/2})$ (Caflisch Reference Caflisch1998). From an experimental point of view, an example of random sampling could be  $\{\pmb {x}^{(m)}\}$ observed with a sampling rate that is lower than the characteristic time period of the system of interest.

$\{\pmb {x}^{(m)}\}$ observed with a sampling rate that is lower than the characteristic time period of the system of interest.(ii) Highorder quadrature: if the dictionary and

$F$ are sufficiently regular and we are free to choose the

$F$ are sufficiently regular and we are free to choose the  $\{\pmb {x}^{(m)}\}_{m=1}^{M}$, then it is beneficial to select

$\{\pmb {x}^{(m)}\}_{m=1}^{M}$, then it is beneficial to select  $\{\pmb {x}^{(m)}\}_{m=1}^{M}$ as an

$\{\pmb {x}^{(m)}\}_{m=1}^{M}$ as an  $M$-point quadrature rule with weights

$M$-point quadrature rule with weights  $\{w_m\}_{m=1}^{M}$. This can lead to much faster convergence rates in (2.11a,b) (Colbrook & Townsend Reference Colbrook and Townsend2021), but can be difficult if

$\{w_m\}_{m=1}^{M}$. This can lead to much faster convergence rates in (2.11a,b) (Colbrook & Townsend Reference Colbrook and Townsend2021), but can be difficult if  $d$ is large.

$d$ is large.(iii) Ergodic sampling: for a single fixed initial condition

$\pmb {x}_0$ and

$\pmb {x}_0$ and  $\pmb {x}^{(m)}=F^{m-1}(\pmb {x}_0)$ (i.e. data collected along one trajectory with

$\pmb {x}^{(m)}=F^{m-1}(\pmb {x}_0)$ (i.e. data collected along one trajectory with  $M_1=1$ in (2.1)), if the dynamical system is ergodic, then one can use Birkhoff's ergodic theorem to show (2.11a,b) (Korda & Mezić Reference Korda and Mezić2018). One chooses

$M_1=1$ in (2.1)), if the dynamical system is ergodic, then one can use Birkhoff's ergodic theorem to show (2.11a,b) (Korda & Mezić Reference Korda and Mezić2018). One chooses  $w_m=1/M$ but the convergence rate is problem dependent (Kachurovskii Reference Kachurovskii1996). An example of ergodic sampling could be a time-resolved PIV dataset of a post-transient flow field over a long time period.

$w_m=1/M$ but the convergence rate is problem dependent (Kachurovskii Reference Kachurovskii1996). An example of ergodic sampling could be a time-resolved PIV dataset of a post-transient flow field over a long time period.

In this paper, we use (i) random sampling, and (iii) ergodic sampling, which are typical for experimental data collection since they arise from long-time trajectory measurements.

The convergence in (2.11a,b) implies that the EDMD eigenvalues approach the spectrum of ![]() $\mathcal {P}_{V_{N}}\mathcal {K}\mathcal {P}_{V_{N}}^*$ as

$\mathcal {P}_{V_{N}}\mathcal {K}\mathcal {P}_{V_{N}}^*$ as ![]() $M\rightarrow \infty$. Thus, approximating the spectrum of

$M\rightarrow \infty$. Thus, approximating the spectrum of ![]() $\mathcal {K}, \sigma (\mathcal {K})$, by the eigenvalues of

$\mathcal {K}, \sigma (\mathcal {K})$, by the eigenvalues of ![]() $\mathbb {K}$ is closely related to the so-called finite section method (Böttcher & Silbermann Reference Böttcher and Silbermann1983). Since the finite section method can suffer from spectral pollution (spurious modes), spectral pollution is also a concern for EDMD (Williams et al. Reference Williams, Kevrekidis and Rowley2015a). It is important to have an independent way to measure the accuracy of the candidate eigenvalue–eigenvector pairs, which is what we propose in our ResDMD method presented in § 3.

$\mathbb {K}$ is closely related to the so-called finite section method (Böttcher & Silbermann Reference Böttcher and Silbermann1983). Since the finite section method can suffer from spectral pollution (spurious modes), spectral pollution is also a concern for EDMD (Williams et al. Reference Williams, Kevrekidis and Rowley2015a). It is important to have an independent way to measure the accuracy of the candidate eigenvalue–eigenvector pairs, which is what we propose in our ResDMD method presented in § 3.

2.3.1. Relationship with DMD

Williams et al. (Reference Williams, Kevrekidis and Rowley2015a) observed that if we choose a dictionary of observables of the form ![]() $\psi _j(\pmb {x})=e_j^*\pmb {x}$ for

$\psi _j(\pmb {x})=e_j^*\pmb {x}$ for ![]() $j=1,\ldots,d$, where

$j=1,\ldots,d$, where ![]() $\{e_j\}$ denote the canonical basis, then the matrix

$\{e_j\}$ denote the canonical basis, then the matrix ![]() $\mathbb {K}=(\sqrt {W}\varPsi _X)^{\dagger} \sqrt {W}\varPsi _Y$ with

$\mathbb {K}=(\sqrt {W}\varPsi _X)^{\dagger} \sqrt {W}\varPsi _Y$ with ![]() $w_m=1/M$ is the transpose of the usual DMD matrix

$w_m=1/M$ is the transpose of the usual DMD matrix

Thus, DMD can be interpreted as producing a Galerkin approximation of the Koopman operator using the set of linear monomials as basis functions. EDMD can be considered an extension allowing nonlinear functions in the dictionary.

2.4. Koopman mode decomposition

Given an observable ![]() $h:\varOmega \rightarrow \mathbb {C}$, we approximate it by an element of

$h:\varOmega \rightarrow \mathbb {C}$, we approximate it by an element of ![]() $V_N$ via

$V_N$ via

Assuming that the quadrature rule converges, the right-hand side converges to the projection ![]() $\mathcal {P}_{V_N}h$ in

$\mathcal {P}_{V_N}h$ in ![]() $L^2(\varOmega,\omega )$ as

$L^2(\varOmega,\omega )$ as ![]() $M\rightarrow \infty$. Assuming that

$M\rightarrow \infty$. Assuming that ![]() $\mathbb {K}V=V\varLambda$ for a diagonal matrix

$\mathbb {K}V=V\varLambda$ for a diagonal matrix ![]() $\varLambda$ of eigenvalues and a matrix

$\varLambda$ of eigenvalues and a matrix ![]() $V$ of eigenvectors, we obtain

$V$ of eigenvectors, we obtain

As a special case, and vectorising, we have the Koopman mode decomposition

\begin{equation} \pmb{x}\approx \underbrace{\varPsi(\pmb{x})V}_{\text{Koopman e-functions}} \underbrace{[V^{{-}1}(\sqrt{W}\varPsi_X)^{\dagger} \sqrt{W}(\begin{matrix} \pmb{x}^{(1)} & \cdots & \pmb{x}^{(M)} \end{matrix})^\top]}_{N\times d\text{ matrix of Koopman modes}}. \end{equation}

\begin{equation} \pmb{x}\approx \underbrace{\varPsi(\pmb{x})V}_{\text{Koopman e-functions}} \underbrace{[V^{{-}1}(\sqrt{W}\varPsi_X)^{\dagger} \sqrt{W}(\begin{matrix} \pmb{x}^{(1)} & \cdots & \pmb{x}^{(M)} \end{matrix})^\top]}_{N\times d\text{ matrix of Koopman modes}}. \end{equation}

The ![]() $j$th Koopman mode corresponds to the

$j$th Koopman mode corresponds to the ![]() $j$th row of the matrix in square brackets, and

$j$th row of the matrix in square brackets, and ![]() $\varPsi V$ is a quasimatrix of approximate Koopman eigenfunctions.

$\varPsi V$ is a quasimatrix of approximate Koopman eigenfunctions.

3. Residual DMD

We now present ResDMD for computing spectral properties of Koopman operators, that in turn allows us to analyse fluid flow structures such as turbulence. ResDMD, first introduced in Colbrook & Townsend (Reference Colbrook and Townsend2021), uses an additional matrix constructed from the measured data, ![]() $\{\pmb {x}^{(m)},\pmb {y}^{(m)}\}_{m=1}^M$. The key difference between ResDMD and other DMD-type algorithms is that we construct Galerkin approximations for not only

$\{\pmb {x}^{(m)},\pmb {y}^{(m)}\}_{m=1}^M$. The key difference between ResDMD and other DMD-type algorithms is that we construct Galerkin approximations for not only ![]() $\mathcal {K}$, but also

$\mathcal {K}$, but also ![]() $\mathcal {K}^*\mathcal {K}$. This difference allows us to have rigorous convergence guarantees for ResDMD and obtain error guarantees on the approximation. In other words, we can tell a posteriori which parts of the computed spectra and Koopman modes are reliable, thus rectifying issues such as spectral pollution that arise in previous DMD-type methods.

$\mathcal {K}^*\mathcal {K}$. This difference allows us to have rigorous convergence guarantees for ResDMD and obtain error guarantees on the approximation. In other words, we can tell a posteriori which parts of the computed spectra and Koopman modes are reliable, thus rectifying issues such as spectral pollution that arise in previous DMD-type methods.

3.1. ResDMD and a new data matrix

Whilst EDMD obtains eigenvalue–eigenvector pairs for an approximation of the Koopman operator, it cannot verify the accuracy of the computed pairs. However, we show below how one can confidently identify true physical turbulent flow structures by rigorously rejecting inaccurate predictions (spurious modes). This rigorous measure of accuracy is the linchpin of our new ResDMD method and is shown pictorially in figure 1.

We approximate residuals to measure the accuracy of a candidate eigenvalue–eigenvector pair ![]() $(\lambda,g)$ (which could, for example, be computed from

$(\lambda,g)$ (which could, for example, be computed from ![]() $\mathbb {K}$ or some other method). For

$\mathbb {K}$ or some other method). For ![]() $\lambda \in \mathbb {C}$ and

$\lambda \in \mathbb {C}$ and ![]() $g=\varPsi \,\pmb {g}\in V_{N}$, the squared relative residual is

$g=\varPsi \,\pmb {g}\in V_{N}$, the squared relative residual is

\begin{align} &\frac{\displaystyle \int_{\varOmega}|[\mathcal{K}g](\pmb{x})- \lambda g(\pmb{x})|^2\, {\rm d} \omega(\pmb{x})}{\displaystyle \int_{\varOmega} |g(\pmb{x})|^2\, {\rm d} \omega(\pmb{x})}\nonumber\\ &\quad=\frac{\displaystyle \sum_{j,k=1}^{N}\overline{{g}_j}{g}_k [\langle \mathcal{K}\psi_k,\mathcal{K}\psi_j\rangle - \lambda\langle \psi_k,\mathcal{K}\psi_j\rangle -\bar{\lambda}\langle \mathcal{K}\psi_k,\psi_j\rangle+|\lambda|^2\langle \psi_k,\psi_j\rangle]}{\displaystyle \sum_{j,k=1}^{N}\overline{{g}_j}{g}_k\langle \psi_k,\psi_j\rangle}. \end{align}

\begin{align} &\frac{\displaystyle \int_{\varOmega}|[\mathcal{K}g](\pmb{x})- \lambda g(\pmb{x})|^2\, {\rm d} \omega(\pmb{x})}{\displaystyle \int_{\varOmega} |g(\pmb{x})|^2\, {\rm d} \omega(\pmb{x})}\nonumber\\ &\quad=\frac{\displaystyle \sum_{j,k=1}^{N}\overline{{g}_j}{g}_k [\langle \mathcal{K}\psi_k,\mathcal{K}\psi_j\rangle - \lambda\langle \psi_k,\mathcal{K}\psi_j\rangle -\bar{\lambda}\langle \mathcal{K}\psi_k,\psi_j\rangle+|\lambda|^2\langle \psi_k,\psi_j\rangle]}{\displaystyle \sum_{j,k=1}^{N}\overline{{g}_j}{g}_k\langle \psi_k,\psi_j\rangle}. \end{align}We approximate this residual using the same quadrature rule in § 2.2

As well as the matrices ![]() $\varPsi _X^*W\varPsi _X$ and

$\varPsi _X^*W\varPsi _X$ and ![]() $\varPsi _X^*W\varPsi _Y$ found in EDMD, (3.2) has the additional matrix

$\varPsi _X^*W\varPsi _Y$ found in EDMD, (3.2) has the additional matrix ![]() $\varPsi _Y^*W\varPsi _Y$. Note that this additional matrix is no more expensive to compute than either of the EDMD matrices, and uses the same data for its construction as EDMD. Since

$\varPsi _Y^*W\varPsi _Y$. Note that this additional matrix is no more expensive to compute than either of the EDMD matrices, and uses the same data for its construction as EDMD. Since ![]() $\varPsi _Y^*W\varPsi _Y= \sum _{m=1}^{M} w_m \varPsi (\pmb {y}^{(m)})^*\varPsi (\pmb {y}^{(m)})$, if the quadrature rule converges then

$\varPsi _Y^*W\varPsi _Y= \sum _{m=1}^{M} w_m \varPsi (\pmb {y}^{(m)})^*\varPsi (\pmb {y}^{(m)})$, if the quadrature rule converges then

Hence, ![]() $\varPsi _Y^*W\varPsi _Y$ formally corresponds to an approximation of

$\varPsi _Y^*W\varPsi _Y$ formally corresponds to an approximation of ![]() $\mathcal {K}^*\mathcal {K}$. We also have

$\mathcal {K}^*\mathcal {K}$. We also have

\begin{equation} \lim_{M\rightarrow\infty} {res}(\lambda,g)^2=\frac{\displaystyle \int_{\varOmega}|[\mathcal{K}g](\pmb{x})-\lambda g(\pmb{x})|^2\, {\rm d} \omega(\pmb{x})}{\displaystyle \int_{\varOmega} |g(\pmb{x})|^2\, {\rm d} \omega(\pmb{x})}. \end{equation}

\begin{equation} \lim_{M\rightarrow\infty} {res}(\lambda,g)^2=\frac{\displaystyle \int_{\varOmega}|[\mathcal{K}g](\pmb{x})-\lambda g(\pmb{x})|^2\, {\rm d} \omega(\pmb{x})}{\displaystyle \int_{\varOmega} |g(\pmb{x})|^2\, {\rm d} \omega(\pmb{x})}. \end{equation}

In Colbrook & Townsend (Reference Colbrook and Townsend2021), it was shown that the quantity ![]() ${res}(\lambda,g)$ can be used to rigorously compute spectra and pseudospectra of

${res}(\lambda,g)$ can be used to rigorously compute spectra and pseudospectra of ![]() $\mathcal {K}$. Next, we summarise some of the algorithms and results of Colbrook & Townsend (Reference Colbrook and Townsend2021).

$\mathcal {K}$. Next, we summarise some of the algorithms and results of Colbrook & Townsend (Reference Colbrook and Townsend2021).

3.2. ResDMD for computing spectra and pseudospectra

3.2.1. Avoiding spurious eigenvalues

Algorithm 1 uses the residual defined in (3.2) to avoid spectral pollution (spurious modes). As is usually done, we assume that ![]() $\mathbb {K}$ is diagonalisable. We first find the eigenvalues and eigenvectors of

$\mathbb {K}$ is diagonalisable. We first find the eigenvalues and eigenvectors of ![]() $\mathbb {K}$, i.e. we solve

$\mathbb {K}$, i.e. we solve ![]() $(\varPsi _X^*W\varPsi _X)^{\dagger} (\varPsi _X^*W\varPsi _Y){\pmb g} = \lambda {\pmb g}$. One can solve this eigenproblem directly, but it is often numerically more stable to solve the generalised eigenproblem

$(\varPsi _X^*W\varPsi _X)^{\dagger} (\varPsi _X^*W\varPsi _Y){\pmb g} = \lambda {\pmb g}$. One can solve this eigenproblem directly, but it is often numerically more stable to solve the generalised eigenproblem ![]() $(\varPsi _X^*W\varPsi _Y){\pmb g} = \lambda (\varPsi _X^*W\varPsi _X){\pmb g}$. Afterwards, to avoid spectral pollution, we discard eigenpairs with a relative residual larger than a specified accuracy goal

$(\varPsi _X^*W\varPsi _Y){\pmb g} = \lambda (\varPsi _X^*W\varPsi _X){\pmb g}$. Afterwards, to avoid spectral pollution, we discard eigenpairs with a relative residual larger than a specified accuracy goal ![]() $\epsilon >0$.

$\epsilon >0$.

The procedure is a simple modification of EDMD, as the only difference is a clean-up step where spurious eigenpairs are discarded based on their residual. This clean-up step is typically faster to execute than the eigendecomposition in step 2 of algorithm 1. The total computational cost of algorithm 1 is ![]() ${O}(N^2M+N^3)$, which is the same as EDMD. The clean up avoids spectral pollution and also removes eigenpairs that are inaccurate due to numerical errors associated with non-normal operators, up to the relative tolerance

${O}(N^2M+N^3)$, which is the same as EDMD. The clean up avoids spectral pollution and also removes eigenpairs that are inaccurate due to numerical errors associated with non-normal operators, up to the relative tolerance ![]() $\epsilon$. The following result (Colbrook & Townsend Reference Colbrook and Townsend2021, theorem 4.1) makes this precise.

$\epsilon$. The following result (Colbrook & Townsend Reference Colbrook and Townsend2021, theorem 4.1) makes this precise.

Algorithm 1 : ResDMD for computing eigenpairs without spectral pollution. The corresponding Koopman mode decomposition is given in (3.6).

Theorem 3.1 Let ![]() $\mathcal {K}$ be the associated Koopman operator of the dynamical system (1.1) from which snapshot data are collected. Let

$\mathcal {K}$ be the associated Koopman operator of the dynamical system (1.1) from which snapshot data are collected. Let ![]() $\varLambda _{M}$ denote the eigenvalues in the output of algorithm 1. Then, assuming convergence of the quadrature rule in § 2.2

$\varLambda _{M}$ denote the eigenvalues in the output of algorithm 1. Then, assuming convergence of the quadrature rule in § 2.2

We can also use algorithm 1 to clean up the Koopman mode decomposition in (2.15). To do this, we simply let ![]() $V^{(\epsilon )}$ denote the matrix whose columns are the eigenvectors

$V^{(\epsilon )}$ denote the matrix whose columns are the eigenvectors ![]() $\pmb {g}_j$ with

$\pmb {g}_j$ with ![]() ${res}(\lambda _j,g_{(j)})\leq \epsilon$ and compute the Koopman mode decomposition with respect to

${res}(\lambda _j,g_{(j)})\leq \epsilon$ and compute the Koopman mode decomposition with respect to ![]() $\varPsi _X^{(\epsilon )}=\varPsi _X V^{(\epsilon )}$ and

$\varPsi _X^{(\epsilon )}=\varPsi _X V^{(\epsilon )}$ and ![]() $\varPsi _Y^{(\epsilon )}=\varPsi _Y V^{(\epsilon )}$. Since

$\varPsi _Y^{(\epsilon )}=\varPsi _Y V^{(\epsilon )}$. Since ![]() $(\sqrt {W}\varPsi _X V^{(\epsilon )})^{\dagger} \sqrt {W}\varPsi _Y V^{(\epsilon )}$ is diagonal, the Koopman mode decomposition now becomes

$(\sqrt {W}\varPsi _X V^{(\epsilon )})^{\dagger} \sqrt {W}\varPsi _Y V^{(\epsilon )}$ is diagonal, the Koopman mode decomposition now becomes

\begin{equation} \pmb{x}\approx \underbrace{\varPsi(\pmb{x})V^{(\epsilon)}}_{\text{Koopman e-functions}} \underbrace{[(\sqrt{W}\varPsi_XV^{(\epsilon)})^{\dagger} \sqrt{W}(\begin{matrix} \pmb{x}^{(1)} & \cdots & \pmb{x}^{(M)} \end{matrix})^\top]}_{N\times d\text{ matrix of Koopman modes}}. \end{equation}

\begin{equation} \pmb{x}\approx \underbrace{\varPsi(\pmb{x})V^{(\epsilon)}}_{\text{Koopman e-functions}} \underbrace{[(\sqrt{W}\varPsi_XV^{(\epsilon)})^{\dagger} \sqrt{W}(\begin{matrix} \pmb{x}^{(1)} & \cdots & \pmb{x}^{(M)} \end{matrix})^\top]}_{N\times d\text{ matrix of Koopman modes}}. \end{equation}3.2.2. Computing the full spectrum and pseudospectra

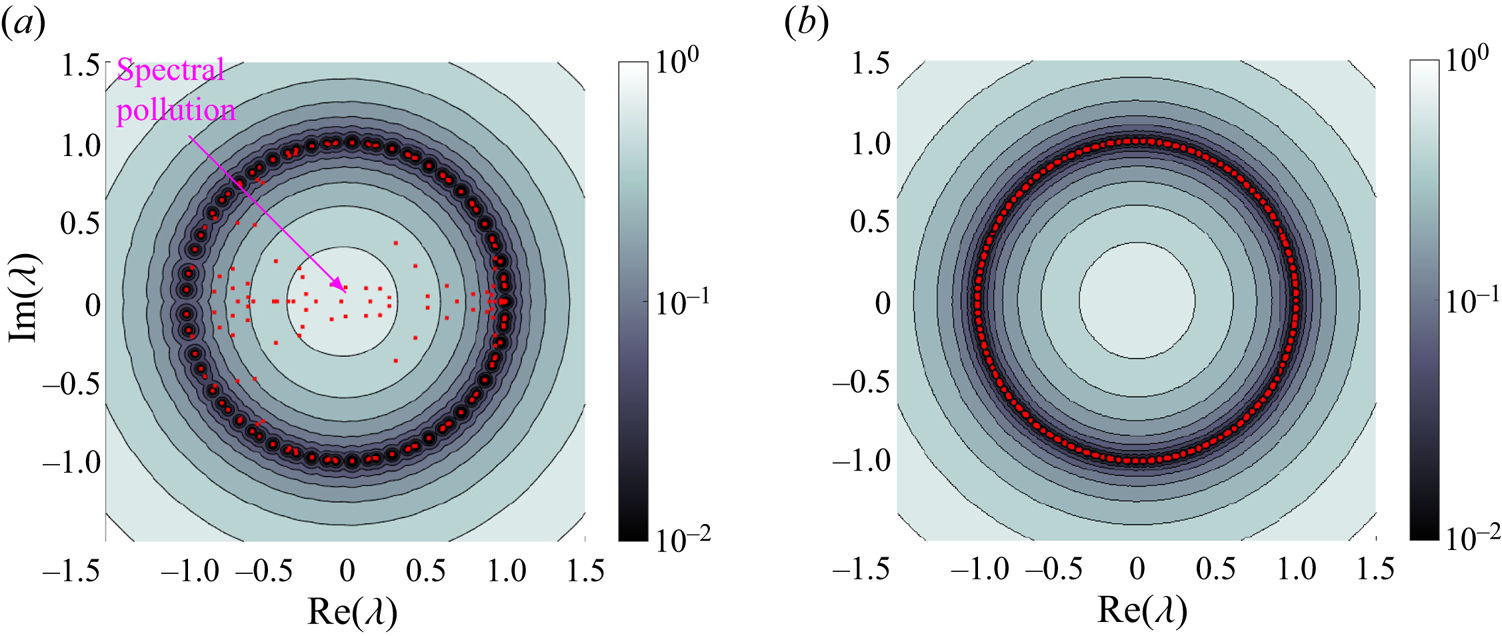

Theorem 3.1 tells us that, in the large data limit, algorithm 1 computes eigenvalues inside the ![]() $\epsilon$-pseudospectrum of

$\epsilon$-pseudospectrum of ![]() $\mathcal {K}$. Hence, algorithm 1 avoids spectral pollution and returns reasonable eigenvalues. The

$\mathcal {K}$. Hence, algorithm 1 avoids spectral pollution and returns reasonable eigenvalues. The ![]() $\epsilon$-pseudospectrum of

$\epsilon$-pseudospectrum of ![]() $\mathcal {K}$ is (Trefethen & Embree Reference Trefethen and Embree2005)

$\mathcal {K}$ is (Trefethen & Embree Reference Trefethen and Embree2005)

where ![]() ${\rm cl}$ denotes the closure of a set, and

${\rm cl}$ denotes the closure of a set, and ![]() $\lim _{\epsilon \downarrow 0}{\sigma }_{\epsilon }(\mathcal {K})= {\sigma }(\mathcal {K})$. Despite theorem 3.1, algorithm 1 may not approximate the whole of

$\lim _{\epsilon \downarrow 0}{\sigma }_{\epsilon }(\mathcal {K})= {\sigma }(\mathcal {K})$. Despite theorem 3.1, algorithm 1 may not approximate the whole of ![]() ${\sigma }_{\epsilon }(\mathcal {K})$, even as

${\sigma }_{\epsilon }(\mathcal {K})$, even as ![]() $M\rightarrow \infty$ and

$M\rightarrow \infty$ and ![]() $N\rightarrow \infty$. This is because the eigenvalues of

$N\rightarrow \infty$. This is because the eigenvalues of ![]() $\mathcal {P}_{V_{N}}\mathcal {K}\mathcal {P}_{V_{N}}^*$ may not approximate the whole of

$\mathcal {P}_{V_{N}}\mathcal {K}\mathcal {P}_{V_{N}}^*$ may not approximate the whole of ![]() ${\sigma }(\mathcal {K})$ as

${\sigma }(\mathcal {K})$ as ![]() $N\rightarrow \infty$ (Colbrook & Townsend Reference Colbrook and Townsend2021; Mezic Reference Mezic2022), even if

$N\rightarrow \infty$ (Colbrook & Townsend Reference Colbrook and Townsend2021; Mezic Reference Mezic2022), even if ![]() $\cup _{N}V_N$ is dense in

$\cup _{N}V_N$ is dense in ![]() $L^2(\varOmega,\omega )$.

$L^2(\varOmega,\omega )$.

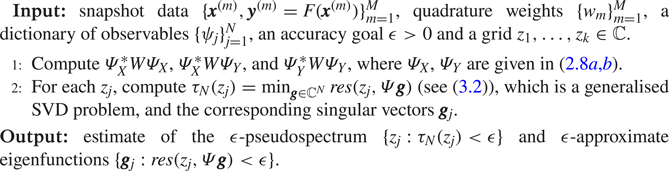

To overcome this issue, algorithm 2 computes practical approximations of ![]() $\epsilon$-pseudospectra with rigorous convergence guarantees. Assuming convergence of the quadrature rule in § 2.2, in the limit

$\epsilon$-pseudospectra with rigorous convergence guarantees. Assuming convergence of the quadrature rule in § 2.2, in the limit ![]() $M\rightarrow \infty$, the key quantity

$M\rightarrow \infty$, the key quantity

is an upper bound for ![]() $\|(\mathcal {K}-\lambda )^{-1}\|^{-1}$. The output of algorithm 2 is guaranteed to be inside the

$\|(\mathcal {K}-\lambda )^{-1}\|^{-1}$. The output of algorithm 2 is guaranteed to be inside the ![]() $\epsilon$-pseudospectrum of

$\epsilon$-pseudospectrum of ![]() $\mathcal {K}$. As

$\mathcal {K}$. As ![]() $N\rightarrow \infty$ and the grid of points is refined, algorithm 2 converges to the pseudospectrum uniformly on compact subsets of

$N\rightarrow \infty$ and the grid of points is refined, algorithm 2 converges to the pseudospectrum uniformly on compact subsets of ![]() $\mathbb {C}$ (Colbrook & Townsend Reference Colbrook and Townsend2021, theorems B.1 and B.2). In practice the grid

$\mathbb {C}$ (Colbrook & Townsend Reference Colbrook and Townsend2021, theorems B.1 and B.2). In practice the grid ![]() $\{z_j\}_{j=1}^k$ is chosen for plotting purposes (e.g. in figure 3). Strictly speaking, we converge to the approximate point pseudospectrum, a more complicated algorithm leads to computation of the full pseudospectrum – see Colbrook & Townsend (Reference Colbrook and Townsend2021, appendix B). For brevity, we have not included a statement of the results. We can then compute the spectrum by taking

$\{z_j\}_{j=1}^k$ is chosen for plotting purposes (e.g. in figure 3). Strictly speaking, we converge to the approximate point pseudospectrum, a more complicated algorithm leads to computation of the full pseudospectrum – see Colbrook & Townsend (Reference Colbrook and Townsend2021, appendix B). For brevity, we have not included a statement of the results. We can then compute the spectrum by taking ![]() $\epsilon \downarrow 0$. Algorithm 2 also computes observables

$\epsilon \downarrow 0$. Algorithm 2 also computes observables ![]() $g$ with

$g$ with ![]() ${res}(\lambda,g)< \epsilon$, known as

${res}(\lambda,g)< \epsilon$, known as ![]() $\epsilon$-approximate eigenfunctions. The computational cost of algorithm 2 is

$\epsilon$-approximate eigenfunctions. The computational cost of algorithm 2 is ![]() ${O}(N^2M+kN^3)$. However, the computation of

${O}(N^2M+kN^3)$. However, the computation of ![]() $\tau _N$ can be done in parallel over the

$\tau _N$ can be done in parallel over the ![]() $k$ grid points, and we can refine the grid adaptively near regions of interest.

$k$ grid points, and we can refine the grid adaptively near regions of interest.

Algorithm 2 : ResDMD for estimating ε-pseudospectra.

3.3. Choice of dictionary

When ![]() $d$ is large, it can be impractical to store or form the matrix

$d$ is large, it can be impractical to store or form the matrix ![]() $\mathbb {K}$ since the initial value of

$\mathbb {K}$ since the initial value of ![]() $N$ may be huge. In other words, we run into the curse of dimensionality. We consider two common methods to overcome this issue:

$N$ may be huge. In other words, we run into the curse of dimensionality. We consider two common methods to overcome this issue:

(i) DMD: in this case, the dictionary consists of linear functions (see § 2.3.1). It is standard to form a low-rank approximation of

$\sqrt {W}\varPsi _X$ via a truncated SVD as

(3.9)Here,

$\sqrt {W}\varPsi _X$ via a truncated SVD as

(3.9)Here, \begin{equation} \sqrt{W}\varPsi_X\approx U_r\varSigma_rV_r^*. \end{equation}

\begin{equation} \sqrt{W}\varPsi_X\approx U_r\varSigma_rV_r^*. \end{equation} $\varSigma _r\in \mathbb {C}^{r\times r}$ is diagonal with strictly positive diagonal entries, and

$\varSigma _r\in \mathbb {C}^{r\times r}$ is diagonal with strictly positive diagonal entries, and  $V_r\in \mathbb {C}^{N\times r}$ and

$V_r\in \mathbb {C}^{N\times r}$ and  $U_r\in \mathbb {C}^{M\times r}$ have

$U_r\in \mathbb {C}^{M\times r}$ have  $V_r^*V_r=U_r^*U_r=I_r$. We then form the matrix

(3.10)Note that to fit into our Galerkin framework, this matrix is the transpose of the DMD matrix that is commonly computed in the literature.

$V_r^*V_r=U_r^*U_r=I_r$. We then form the matrix

(3.10)Note that to fit into our Galerkin framework, this matrix is the transpose of the DMD matrix that is commonly computed in the literature. \begin{equation} \tilde{\mathbb{K}}=(\sqrt{W}\varPsi_XV_r)^{\dagger}\sqrt{W} \varPsi_YV_r=\varSigma_r^{{-}1}U_r^*\sqrt{W}\varPsi_YV_r=V_r^* \mathbb{K}V_r\in\mathbb{C}^{r\times r}. \end{equation}

\begin{equation} \tilde{\mathbb{K}}=(\sqrt{W}\varPsi_XV_r)^{\dagger}\sqrt{W} \varPsi_YV_r=\varSigma_r^{{-}1}U_r^*\sqrt{W}\varPsi_YV_r=V_r^* \mathbb{K}V_r\in\mathbb{C}^{r\times r}. \end{equation}(ii) Kernelised EDMD (kEDMD): kEDMD (Williams et al. Reference Williams, Rowley and Kevrekidis2015b) aims to make EDMD practical for large

$d$. Supposing that

$d$. Supposing that  $\varPsi _X$ is of full rank, kEDMD constructs a matrix with an identical formula to (3.10) when

$\varPsi _X$ is of full rank, kEDMD constructs a matrix with an identical formula to (3.10) when  $r=M$, for which we have the equivalent form

(3.11)Suitable matrices

$r=M$, for which we have the equivalent form

(3.11)Suitable matrices \begin{equation} \tilde{\mathbb{K}}=(\varSigma_M^{\dagger} U_M^*)(\sqrt{W}\varPsi_Y \varPsi_X^*\sqrt{W})(U_M\varSigma_M^{\dagger}). \end{equation}

\begin{equation} \tilde{\mathbb{K}}=(\varSigma_M^{\dagger} U_M^*)(\sqrt{W}\varPsi_Y \varPsi_X^*\sqrt{W})(U_M\varSigma_M^{\dagger}). \end{equation} $U_M$ and

$U_M$ and  $\varSigma _M$ can be recovered from the eigenvalue decomposition

$\varSigma _M$ can be recovered from the eigenvalue decomposition  $\sqrt {W}\varPsi _X\varPsi _X^*\sqrt {W}=U_M\varSigma _M^2U_M^*$. Moreover, both matrices

$\sqrt {W}\varPsi _X\varPsi _X^*\sqrt {W}=U_M\varSigma _M^2U_M^*$. Moreover, both matrices  $\sqrt {W}\varPsi _X\varPsi _X^*\sqrt {W}$ and

$\sqrt {W}\varPsi _X\varPsi _X^*\sqrt {W}$ and  $\sqrt {W}\varPsi _Y\varPsi _X^*\sqrt {W}$ can be computed using inner products. The kEDMD applies the kernel trick to compute the inner products in an implicitly defined reproducing Hilbert space

$\sqrt {W}\varPsi _Y\varPsi _X^*\sqrt {W}$ can be computed using inner products. The kEDMD applies the kernel trick to compute the inner products in an implicitly defined reproducing Hilbert space  $\mathcal {H}$ with inner product

$\mathcal {H}$ with inner product  $\langle {\cdot },{\cdot }\rangle _{\mathcal {H}}$ (Scholkopf Reference Scholkopf2001). A positive–definite kernel function

$\langle {\cdot },{\cdot }\rangle _{\mathcal {H}}$ (Scholkopf Reference Scholkopf2001). A positive–definite kernel function  $\mathcal {S}:\varOmega \times \varOmega \rightarrow \mathbb {R}$ induces a feature map

$\mathcal {S}:\varOmega \times \varOmega \rightarrow \mathbb {R}$ induces a feature map  $\varphi :\mathbb {R}^d\rightarrow \mathcal {H}$ so that

$\varphi :\mathbb {R}^d\rightarrow \mathcal {H}$ so that  $\langle \varphi (\pmb {x}), \varphi (\pmb {y})\rangle _{\mathcal {H}}=\mathcal {S}(\pmb {x},\pmb {y})$. This leads to an implicit choice of (typically nonlinear) dictionary

$\langle \varphi (\pmb {x}), \varphi (\pmb {y})\rangle _{\mathcal {H}}=\mathcal {S}(\pmb {x},\pmb {y})$. This leads to an implicit choice of (typically nonlinear) dictionary  $\varPsi (\pmb {x})$, that is dependent on the kernel function, so that

$\varPsi (\pmb {x})$, that is dependent on the kernel function, so that  $\varPsi (\pmb {x})\varPsi (\pmb {y})^*=\langle \varphi (\pmb {x}),\varphi (\pmb {y})\rangle _{\mathcal {H}}=\mathcal {S}(\pmb {x},\pmb {y})$. Often

$\varPsi (\pmb {x})\varPsi (\pmb {y})^*=\langle \varphi (\pmb {x}),\varphi (\pmb {y})\rangle _{\mathcal {H}}=\mathcal {S}(\pmb {x},\pmb {y})$. Often  $\mathcal {S}$ can be evaluated in

$\mathcal {S}$ can be evaluated in  ${O}(d)$ operations, meaning that

${O}(d)$ operations, meaning that  $\tilde {\mathbb {K}}$ is constructed in

$\tilde {\mathbb {K}}$ is constructed in  ${O}(dM^2)$ operations.

${O}(dM^2)$ operations.

In either of these two cases, the approximation of ![]() $\mathcal {K}$ is equivalent to using a new dictionary with feature map

$\mathcal {K}$ is equivalent to using a new dictionary with feature map ![]() $\varPsi (\pmb {x})V_r\in \mathbb {C}^{1\times r}$. In the case of DMD, we found it beneficial to use the mathematically equivalent choice

$\varPsi (\pmb {x})V_r\in \mathbb {C}^{1\times r}$. In the case of DMD, we found it beneficial to use the mathematically equivalent choice ![]() $\varPsi (\pmb {x})V_r\varSigma _r^{-1}$, which is numerically better conditioned. To see why, note that

$\varPsi (\pmb {x})V_r\varSigma _r^{-1}$, which is numerically better conditioned. To see why, note that ![]() $\sqrt {W}\varPsi _XV_r\varSigma _r^{-1}\approx U_r$ and

$\sqrt {W}\varPsi _XV_r\varSigma _r^{-1}\approx U_r$ and ![]() $U_r$ has orthonormal columns.

$U_r$ has orthonormal columns.

3.3.1. The problem of vanishing residual estimates

Suppose that ![]() $\sqrt {W}\varPsi _XV_r$ has full row rank, so that

$\sqrt {W}\varPsi _XV_r$ has full row rank, so that ![]() $r=M$, and that

$r=M$, and that ![]() $\pmb {v}\in \mathbb {C}^{M}$ is an eigenvector of

$\pmb {v}\in \mathbb {C}^{M}$ is an eigenvector of ![]() $\tilde {\mathbb {K}}$ with eigenvalue

$\tilde {\mathbb {K}}$ with eigenvalue ![]() $\lambda$. This means that

$\lambda$. This means that ![]() $(\sqrt {W}\varPsi _XV_M)^{\dagger} \sqrt {W}\varPsi _YV_M \pmb {v}=\lambda \pmb {v}$. Since

$(\sqrt {W}\varPsi _XV_M)^{\dagger} \sqrt {W}\varPsi _YV_M \pmb {v}=\lambda \pmb {v}$. Since ![]() $\sqrt {W}\varPsi _XV_M$ has independent rows,

$\sqrt {W}\varPsi _XV_M$ has independent rows, ![]() $\sqrt {W}\varPsi _XV_M(\sqrt {W}\varPsi _XV_M)^{\dagger} =I_M$ and hence

$\sqrt {W}\varPsi _XV_M(\sqrt {W}\varPsi _XV_M)^{\dagger} =I_M$ and hence ![]() $\sqrt {W}\varPsi _YV_M\pmb {v}=\lambda \sqrt {W}\varPsi _XV_M\pmb {v}$. The corresponding observable is

$\sqrt {W}\varPsi _YV_M\pmb {v}=\lambda \sqrt {W}\varPsi _XV_M\pmb {v}$. The corresponding observable is ![]() $g(\pmb {x})=\varPsi (\pmb {x})V_M\pmb {v}$ and the numerator of

$g(\pmb {x})=\varPsi (\pmb {x})V_M\pmb {v}$ and the numerator of ![]() ${res}(\lambda,g)^2$ in (3.2) is equal to

${res}(\lambda,g)^2$ in (3.2) is equal to ![]() $\|\sqrt {W}\varPsi _YV_M\pmb {v}-\lambda \sqrt {W}\varPsi _XV_M\pmb {v}\|^2.$ It follows that

$\|\sqrt {W}\varPsi _YV_M\pmb {v}-\lambda \sqrt {W}\varPsi _XV_M\pmb {v}\|^2.$ It follows that ![]() ${res}(\lambda,g)=0$. Similarly, if

${res}(\lambda,g)=0$. Similarly, if ![]() $r$ is too large,

$r$ is too large, ![]() ${res}(\lambda,g)$ will be a bad approximation of the true residual.

${res}(\lambda,g)$ will be a bad approximation of the true residual.

In other words, the regime ![]() $r\sim M$ prevents the large data convergence

$r\sim M$ prevents the large data convergence ![]() $(M\rightarrow \infty )$ of the quadrature rule and (3.4), which holds for a fixed basis and hence a fixed basis size. In turn, this prevents us from being able to apply the results of § 3.2. We next discuss overcoming this issue by using two sets of snapshot data; these could arise from two independent tests of the same system, or by partitioning the measured data into two groups.

$(M\rightarrow \infty )$ of the quadrature rule and (3.4), which holds for a fixed basis and hence a fixed basis size. In turn, this prevents us from being able to apply the results of § 3.2. We next discuss overcoming this issue by using two sets of snapshot data; these could arise from two independent tests of the same system, or by partitioning the measured data into two groups.

3.3.2. Using two subsets of the snapshot data

A simple remedy to avoid the problem in § 3.3.1 is to consider two sets of snapshot data. We consider an initial set ![]() $\{\tilde {\pmb {x}}^{(m)},\tilde {\pmb {y}}^{(m)}\}_{m=1}^{M'}$, that we use to form our dictionary. We then apply ResDMD to the computed dictionary with a second set of snapshot data

$\{\tilde {\pmb {x}}^{(m)},\tilde {\pmb {y}}^{(m)}\}_{m=1}^{M'}$, that we use to form our dictionary. We then apply ResDMD to the computed dictionary with a second set of snapshot data ![]() $\{\hat {\pmb {x}}^{(m)},\hat {\pmb {y}}^{(m)}\}_{m=1}^{M''}$, allowing us to prove convergence as

$\{\hat {\pmb {x}}^{(m)},\hat {\pmb {y}}^{(m)}\}_{m=1}^{M''}$, allowing us to prove convergence as ![]() $M''\rightarrow \infty$.

$M''\rightarrow \infty$.

How to acquire a second set of snapshot data depends on the problem and method of data collection. Given snapshot data with random and independent ![]() $\{\pmb {x}^{(m)}\}$, one can split up the snapshot data into two parts. For initial conditions that are distributed according to a high-order quadrature rule, if one already has access to

$\{\pmb {x}^{(m)}\}$, one can split up the snapshot data into two parts. For initial conditions that are distributed according to a high-order quadrature rule, if one already has access to ![]() $M'$ snapshots, then one must typically go back to the original dynamical system and request

$M'$ snapshots, then one must typically go back to the original dynamical system and request ![]() $M''$ further snapshots. For ergodic sampling along a trajectory, we can let

$M''$ further snapshots. For ergodic sampling along a trajectory, we can let ![]() $\{\tilde {\pmb {x}}^{(m)},\tilde {\pmb {y}}^{(m)}\}_{m=1}^{M'}$ correspond to the initial

$\{\tilde {\pmb {x}}^{(m)},\tilde {\pmb {y}}^{(m)}\}_{m=1}^{M'}$ correspond to the initial ![]() $M'+1$ points of the trajectory (

$M'+1$ points of the trajectory (![]() $\tilde {\pmb {x}}^{(m)}=F^{m-1}(\pmb {x}_0)$ for

$\tilde {\pmb {x}}^{(m)}=F^{m-1}(\pmb {x}_0)$ for ![]() $m=1,\ldots,M'$) and let

$m=1,\ldots,M'$) and let ![]() $\{\hat {\pmb {x}}^{(m)},\hat {\pmb {y}}^{(m)}\}_{m=1}^{M''}$ correspond to the initial

$\{\hat {\pmb {x}}^{(m)},\hat {\pmb {y}}^{(m)}\}_{m=1}^{M''}$ correspond to the initial ![]() $M''+1$ points of the trajectory (

$M''+1$ points of the trajectory (![]() $\hat {\pmb {x}}^{(m)}=F^{m-1}(\pmb {x}_0)$ for

$\hat {\pmb {x}}^{(m)}=F^{m-1}(\pmb {x}_0)$ for ![]() $m=1,\ldots,M''$).

$m=1,\ldots,M''$).

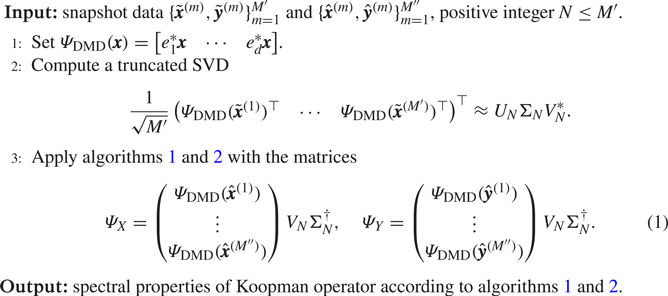

Algorithm 3 : ResDMD with DMD selected observables.

Algorithm 4 : ResDMD with kEDMD selected observables.

In the case of DMD, algorithm 3 summarises the two-stage process. Often a suitable choice of ![]() $N$ can be obtained by studying the decay of the singular values of the data matrix or the condition number of the matrix

$N$ can be obtained by studying the decay of the singular values of the data matrix or the condition number of the matrix ![]() $\varPsi _X^*W\varPsi _X$. When

$\varPsi _X^*W\varPsi _X$. When ![]() $M'\leq d$, the computational cost of steps 2 and 3 of algorithm 3 are

$M'\leq d$, the computational cost of steps 2 and 3 of algorithm 3 are ![]() ${O}(d{M'}^2)$ and

${O}(d{M'}^2)$ and ![]() ${O}(Nd{M''})$, respectively.

${O}(Nd{M''})$, respectively.

In the case of kEDMD, we follow Colbrook & Townsend (Reference Colbrook and Townsend2021), and algorithm 4 summarises the two-stage process. The choice of kernel ![]() $\mathcal {S}$ determines the dictionary and the best choice depends on the application. In the following experiments, we use the Laplacian kernel

$\mathcal {S}$ determines the dictionary and the best choice depends on the application. In the following experiments, we use the Laplacian kernel ![]() $\mathcal {S}(\pmb {x},\pmb {y})=\exp (-\gamma {\|\pmb {x}-\pmb {y}\|})$, where

$\mathcal {S}(\pmb {x},\pmb {y})=\exp (-\gamma {\|\pmb {x}-\pmb {y}\|})$, where ![]() $\gamma$ is the reciprocal of the average

$\gamma$ is the reciprocal of the average ![]() $\ell ^2$-norm of the snapshot data after it is shifted to have mean zero.

$\ell ^2$-norm of the snapshot data after it is shifted to have mean zero.

We can now apply the theory of § 3.2 in the limit ![]() $M''\rightarrow \infty$. It is well known that the eigenvalues computed by DMD and kEDMD may suffer from spectral pollution. However, crucially in our setting, we do not directly use these methods to compute spectral properties of

$M''\rightarrow \infty$. It is well known that the eigenvalues computed by DMD and kEDMD may suffer from spectral pollution. However, crucially in our setting, we do not directly use these methods to compute spectral properties of ![]() $\mathcal {K}$. Instead, we only use them to select a good dictionary of size

$\mathcal {K}$. Instead, we only use them to select a good dictionary of size ![]() $N$, after which our rigorous ResDMD algorithms can be used. Moreover, we use

$N$, after which our rigorous ResDMD algorithms can be used. Moreover, we use ![]() $\{\hat {\pmb {x}}^{(m)},\hat {\pmb {y}}^{(m)}\}_{m=1}^{M''}$ to check the quality of the constructed dictionary. By studying the residuals and using the error control in ResDMD, we can tell a posteriori whether the dictionary is satisfactory and whether

$\{\hat {\pmb {x}}^{(m)},\hat {\pmb {y}}^{(m)}\}_{m=1}^{M''}$ to check the quality of the constructed dictionary. By studying the residuals and using the error control in ResDMD, we can tell a posteriori whether the dictionary is satisfactory and whether ![]() $N$ is sufficiently large.

$N$ is sufficiently large.

Finally, it is worth pointing out that the above choices of dictionaries are certainly not the only choices. ResDMD can be applied to any suitable choice. For example, one could use other data-driven methods such as diffusion kernels (Giannakis et al. Reference Giannakis, Kolchinskaya, Krasnov and Schumacher2018) or trained neural networks (Li et al. Reference Li, Dietrich, Bollt and Kevrekidis2017; Murata, Fukami & Fukagata Reference Murata, Fukami and Fukagata2020).

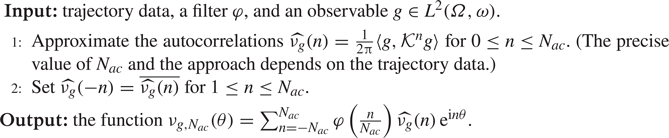

4. Spectral measures

Many physical systems described by (1.1) are measure preserving (preserve volume). Examples include Hamiltonian flows (Arnold Reference Arnold1989), geodesic flows on Riemannian manifolds (Dubrovin, Fomenko & Novikov Reference Dubrovin, Fomenko and Novikov1991, Chapter 5), Bernoulli schemes in probability theory (Shields Reference Shields1973) and ergodic systems (Walters Reference Walters2000). The Koopman operator ![]() $\mathcal {K}$ associated with a measure-preserving dynamical system is an isometry, i.e.

$\mathcal {K}$ associated with a measure-preserving dynamical system is an isometry, i.e. ![]() $\|\mathcal {K}g\|=\|g\|$ for all observables

$\|\mathcal {K}g\|=\|g\|$ for all observables ![]() $g\in \mathcal {D}(\mathcal {K})=L^2(\varOmega,\omega )$. In this case, spectral measures provide a way of including continuous spectra in the Koopman mode decomposition. This inclusion is beneficial in the case of turbulent flows where a priori knowledge of the spectra (e.g. whether it contains a continuous part) may be unknown. The methods described in this section allow us to compute continuous spectra.

$g\in \mathcal {D}(\mathcal {K})=L^2(\varOmega,\omega )$. In this case, spectral measures provide a way of including continuous spectra in the Koopman mode decomposition. This inclusion is beneficial in the case of turbulent flows where a priori knowledge of the spectra (e.g. whether it contains a continuous part) may be unknown. The methods described in this section allow us to compute continuous spectra.

4.1. Spectral measures and Koopman mode decompositions

Given an observable ![]() $g\in L^2(\varOmega,\omega )$ of interest, the spectral measure of